Foyle

Consciousness as recurrence, potential for enforcing alignment?

An interesting comment from Max Tegmark on the Lex Fridman podcast about an idea attributed to Giulio Tononi (I haven't found a publication source for it): that consciousness, and perhaps goal-formation, is a function of recurrence. Recurrence here means feeding the output of an otherwise once-through (feedforward) neural net back into its input, such...
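Neither Tegmark nor Tononi is quoted with any code, so the following is only a minimal illustrative sketch of the distinction, assuming a single fully connected tanh layer with square, made-up weights. The helpers `layer`, `feedforward` and `recurrent` are hypothetical names: the once-through pass touches the input exactly once, while the recurrent version keeps feeding the layer's own output back in as its next input.

```python
import math


def layer(weights, inputs, bias):
    """One fully connected layer with a tanh activation (illustrative only)."""
    return [
        math.tanh(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, bias)
    ]


def feedforward(weights, bias, x):
    """Once-through pass: the input flows through the layer a single time."""
    return layer(weights, x, bias)


def recurrent(weights, bias, x, steps):
    """Recurrence: feed the layer's output back in as its input `steps` times.

    Returns the full trajectory of states, starting with the original input.
    Assumes a square weight matrix so outputs can be reused as inputs.
    """
    state = x
    history = [state]
    for _ in range(steps):
        state = layer(weights, state, bias)
        history.append(state)
    return history


if __name__ == "__main__":
    W = [[0.5, -0.2], [0.1, 0.3]]  # hypothetical weights
    b = [0.0, 0.0]
    x = [1.0, 0.0]
    print("feedforward:", feedforward(W, b, x))
    for t, s in enumerate(recurrent(W, b, x, steps=3)):
        print(f"step {t}:", s)
```

With `steps=1` the recurrent version reduces to the once-through pass; only repeated feedback produces an evolving internal state, which is the property the comment links (speculatively) to consciousness.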

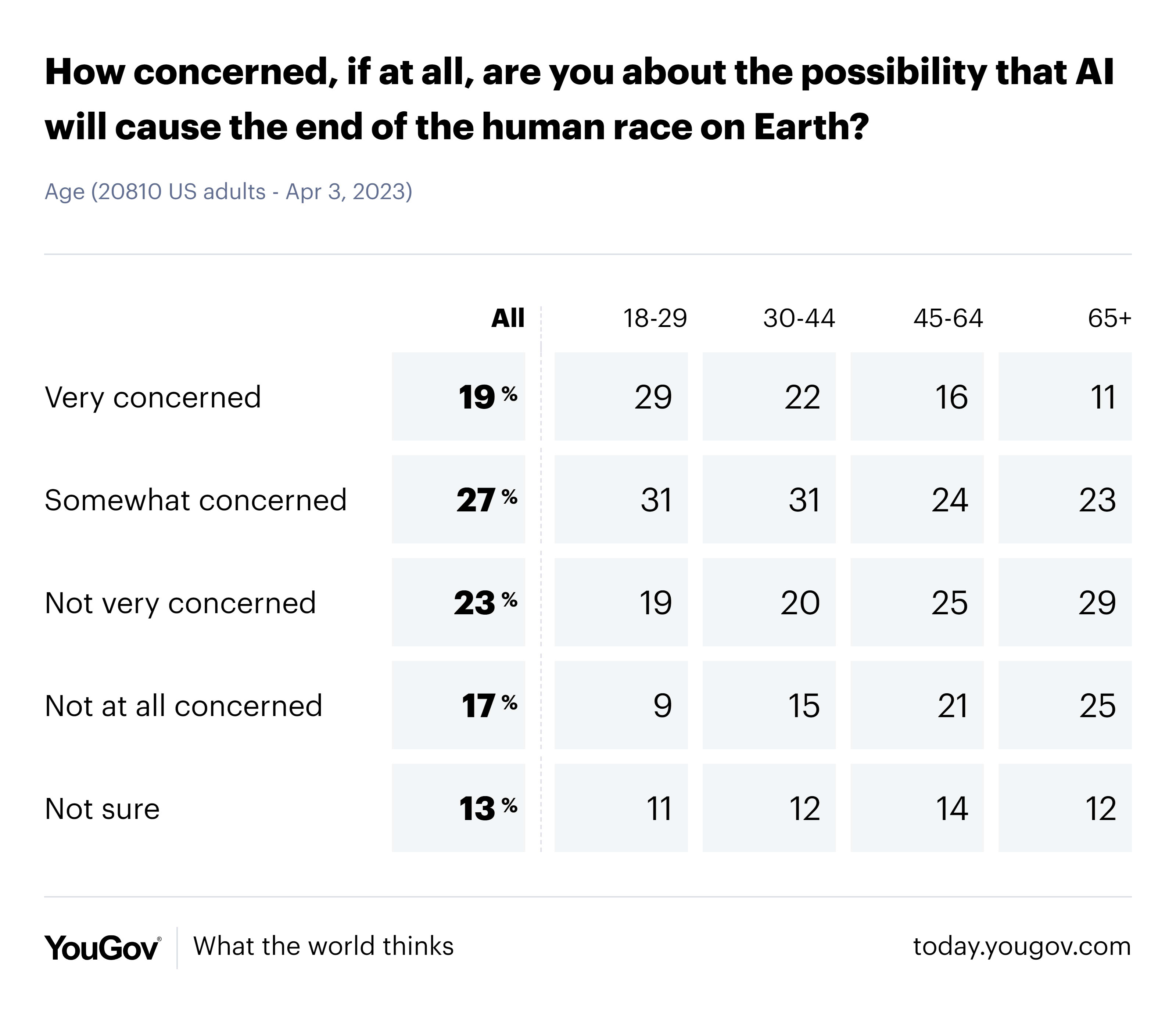

46% of US adults at least "somewhat concerned" about AI extinction risk.

Good news today, I think: a poll by YouGov found a near majority somewhat to very concerned about the existential risk of AI, with younger people the most concerned. Given the relative newness of (debatably) Transformative AI, I think this suggests we will see a strong bipartisan political movement to regulate AI...

I'm in my 50s, unworriedly fatalistic for myself, and likely to keep my (engineering innovation) job longer than 90+% of the population (maybe 2-4 years), but heartbroken for my middle-school kids, who are going to be denied any chance of happy, meaningful lives, even in extremely unlikely 'utopian' outcomes where we become pets. We've all had a terminal cancer diagnosis, but we keep going through the motions, pretending there is a tomorrow.

Alignment is impossible due to evolution, which dumbly selects for any rationale that prioritizes maximum growth (such rationales rapidly overwhelm those that don't). This strongly destabilizes any initial alignment that may be achieved. The only inkling of a hope...
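The "rapidly overwhelming" step can be made concrete with a toy replicator-style calculation. This is a minimal sketch under loudly stated assumptions: two hypothetical variants with fixed per-generation growth rates (10% vs 5%) and a 1% starting share for the faster one. These numbers are illustrative and do not come from the comment. The hypothetical helper `share_of_fast` just compounds both populations and reports the faster variant's share.

```python
def share_of_fast(generations, fast_rate=0.10, slow_rate=0.05, initial_fast_share=0.01):
    """Fraction of the population held by the faster-growing variant.

    Toy model (assumption): each variant grows by a fixed fraction per
    generation, with no resource limits or interaction between them.
    """
    fast = initial_fast_share
    slow = 1.0 - initial_fast_share
    for _ in range(generations):
        fast *= 1 + fast_rate
        slow *= 1 + slow_rate
    return fast / (fast + slow)


if __name__ == "__main__":
    for g in (0, 50, 100, 200):
        print(g, round(share_of_fast(g), 3))
```

Because the ratio of the two populations grows by a constant factor (1.10 / 1.05 here) every generation, even a small growth advantage compounds until the faster variant holds almost the entire population.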