I somehow missed all notifications of your reply and just stumbled upon it by chance when sharing this post with someone.

I had something very similar with my calibration results, only it was for 65% estimates:

I think your hypotheses 1 and 2 match with my intuitions about why this pattern emerges on a test like this. Personally, I feel like a combination of 1 and 2 is responsible for my "blip" at 65%.

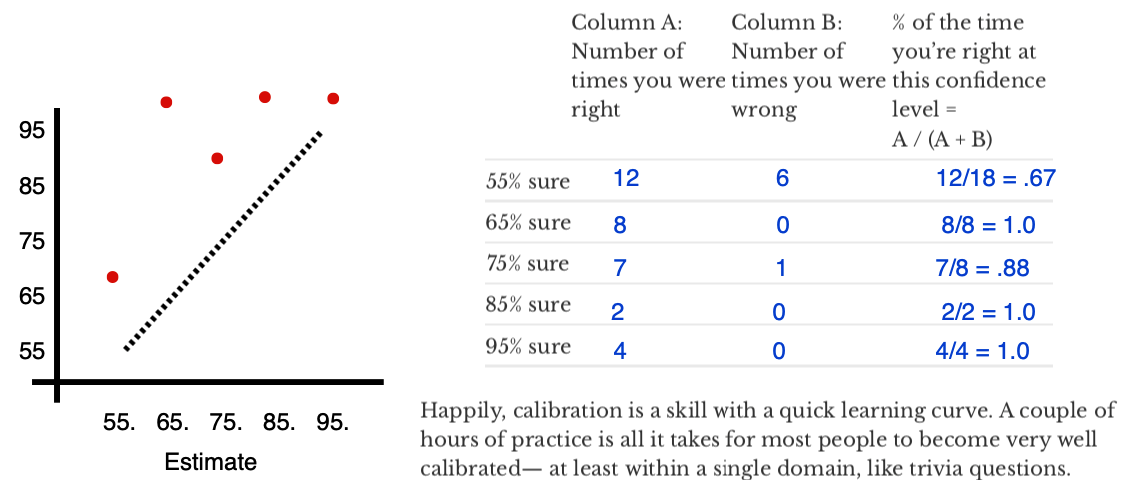

I'm also systematically under-confident here — that's because I cut my prediction teeth getting black swanned during 2020, so I tend to leave considerable room for tail events (which aren't captured in this test). I'm not upset about that, as I think it makes for better calibration "in the wild."
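For anyone wondering how "under-confident" falls out of a test like this, here is a minimal sketch of the tally, assuming each answer is recorded as a (stated confidence, was it correct) pair. The function name `calibration_table` and the sample answers are hypothetical, invented for illustration, and are not my actual results.

```python
from collections import defaultdict


def calibration_table(predictions):
    """Group (stated_confidence, was_correct) pairs by stated confidence.

    Returns {confidence: (observed_hit_rate, number_of_answers)}.
    """
    buckets = defaultdict(list)
    for confidence, correct in predictions:
        buckets[confidence].append(bool(correct))

    table = {}
    for confidence in sorted(buckets):
        outcomes = buckets[confidence]
        table[confidence] = (sum(outcomes) / len(outcomes), len(outcomes))
    return table


# Hypothetical answers, made up for illustration only.
sample = (
    [(0.65, True)] * 9 + [(0.65, False)] * 1
    + [(0.75, True)] * 3 + [(0.75, False)] * 1
)

for confidence, (hit_rate, n) in calibration_table(sample).items():
    # Under-confident: right more often than the stated confidence implies.
    verdict = "under-confident" if hit_rate > confidence else "on target or over-confident"
    print(f"stated {confidence:.0%}, observed {hit_rate:.0%} over {n} answers: {verdict}")
```

A bucket whose observed hit rate sits above its stated confidence is an under-confident one, which is the pattern I described above.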

This is a superb summary! I'll definitely be returning to this as a cheatsheet for the core ideas from the book in future. I've also linked to it in my review on Goodreads.

it's straightforwardly the #1 book you should use when you want to recruit new people to EA. [...] For rationalists, I think the best intro resource is still HPMoR or R:AZ, but I think Scout Mindset is a great supplement to those, and probably a better starting point for people who prefer Julia's writing style over Eliezer's.

Hmm... I've had great success with the HPMOR / R:AZ route for certain people. Perhaps Scout Mindset has been the missing tool for the others. It also struck me as a nice complement to Eliezer's writing, in terms of both substance and style (see below). I'll have to experiment with recommending it as a first intro to EA/rationality.

As for my own experience, I was delightfully surprised by Scout Mindset! Here's an excerpt from my review:

I'm a big fan of Julia and her podcast, but I wasn't expecting too much from Scout Mindset because it's clearly written for a more general audience and was largely based on ideas that Julia had already discussed online. I updated from that prior pretty fast. Scout Mindset is a valuable addition to an aspiring rationalist's bookshelf — both for its content and for Julia's impeccable writing style, which I aspire to.

Those familiar with the OSI model of internet infrastructure will know that there are different layers of protocols. The IP protocol, which dictates how packets are directed, sits at a much lower layer than the HTTP protocol, which dictates how applications interact. Similarly, Yudkowsky's Sequences can be thought of as the lower layers of rationality, whilst Julia's work in Scout Mindset provides the protocols for higher layers. The Sequences are largely concerned with what rationality is, whilst Scout Mindset presents tools for practically approximating it in the real world. It builds on the "kernel" of cognitive biases and Bayesian updating by considering what mental "software" we can run on a daily basis.

The core thesis of the book is that humans default towards a "soldier mindset," where reasoning is like defensive combat. We "attack" arguments or "concede" points. But there is another option: "scout mindset," where reasoning is like mapmaking.

The Scout Mindset is "the motivation to see things as they are, not as you wish they were. [...] Scout mindset is what allows you to recognize when you are wrong, to seek out your blind spots, to test your assumptions and change course."

I recommend listening to the audiobook version, which Julia narrates herself. The book is precisely as long as it needs to be, with no fluff. The anecdotes are entertaining and relevant and were entirely new to me. Overall, I think this book is a 4.5/5, especially if you actively try to implement Julia's recommendations. Try out her calibration exercise, for instance.

I present to you VQGANCLIP's take on a Bob Ross painting of Bob Ross painting Bob Ross paintings 😂 This surpassed my wildest expectations!

I don't feel like it's the kind of polished thing I'd put on LW. But here it is on my blog: gianlucatruda.com/blog/2021/07/08/wisdom.html

One decent way of engineering an authentic 1-1 conversation is to go through a bunch of personal and vulnerability-inducing questions together, a la 36 Questions that Lead to Love (after cutting the ⅔ of questions that I found dull). So I made a list of questions I considered interesting, which I expected to lead to authentic and vulnerable conversations.

This is a fascinating strategy and I'm surprised it worked so well. The linked article for the list of questions is paywalled (and NYT).

After a bit of digging, this seems to be the original study for which the questions were formulated: https://journals.sagepub.com/doi/pdf/10.1177/0146167297234003 . The various question sets are listed in the Appendix that starts on page 12.

And this site seems to be an open-access, interactive mirror of the 36 questions from the NYT article: http://36questionsinlove.com/

The full audiobook is now available at https://anchor.fm/guilt/episodes/Replacing-Guilt-full-audiobook-e13ct4d/a-a5vrdtu

I'll DM you :)

Okay, I absolutely love this post! In fact, if you were to break it down into three posts, I would probably have been a serious fan of all of them individually.

Firstly, the expected utility formulation of lateness is excellent and explains a lot of my personal behaviour. I'm aggressively early for important events like client meetings and interviews, but consistently tardy when meeting for coffee or arriving for a lecture. Whilst your methodology focussed on unobservable shifts to the time axis, I suspect there are also interesting gains to be made in reshaping the utility curve — for instance, by always carrying reading material, like korin43 mentions in another comment.

Secondly, your approach to self-blinding is fantastic. I do a lot of Quantified-Self research and self-blinding is one of the most challenging and essential components of interventional QS studies. I really like how your protocol builds from the theoretical formulation you created and acts as a convolution on the utility function. I had a little nerdgasm when reading that part!

Thirdly, the fact that you collected and visualised data to evaluate the methodology is outdone only by how pretty your plot is.

Finally, it would be remiss of me not to comment on your excellent use of humour. I chuckled multiple times whilst reading. Expertly balanced and timed to resonate with the tone of the technical content.

The perspective of the black-box AI could make for a great piece of fiction. I'd love to write a sci-fi short and explore that concept someday!

The point of your post (as I took it) is that it takes only a little creativity to come up with ways an intelligent black-box could covertly "unbox" itself, given repeated queries. Imagining oneself as the black-box makes it far easier to generate ideas for how to do that. An extended version of your scenario could be both entertaining and thought-provoking to read.