METR's Evaluation of GPT-5

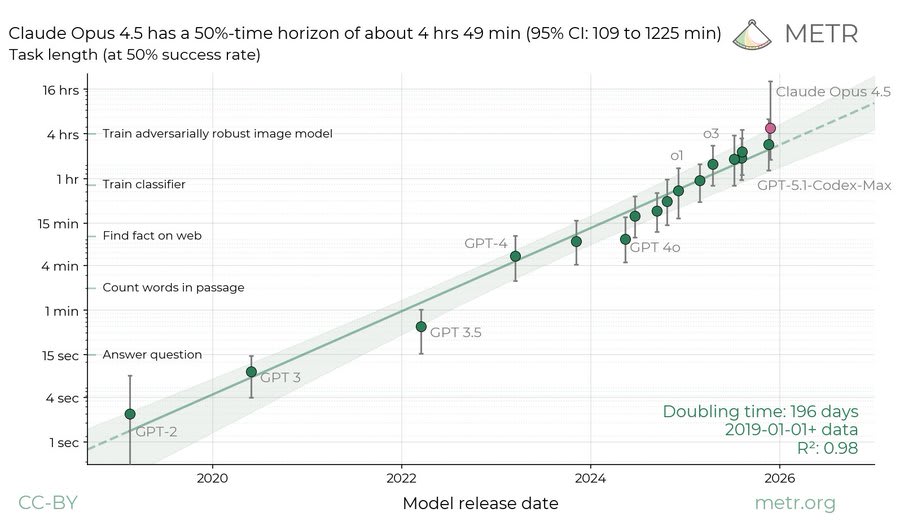

METR (where I work, though I'm cross-posting in a personal capacity) evaluated GPT-5 before it was externally deployed. We performed a much more comprehensive safety analysis than we ever have before; it feels like pre-deployment evals are getting more mature.

This is the first time METR has produced something we've felt comfortable calling an "evaluation" instead of a "preliminary evaluation". It's much more thorough than anything we've created before, and it explores three different threat models. It's one of the closest things out there to a real-world autonomy safety case. It also provides a rough sense of how long it'll be before current evaluations no longer provide safety assurances.

I've ported the blog post over to LW in case people want to read it.

Details about METR's evaluation of OpenAI GPT-5

Note on independence: This evaluation was conducted under a standard NDA. Due to the sensitive information shared with METR as part of this evaluation, OpenAI's comms and legal team required review and approval of this post (the latter is not true of any other METR reports shared on our website).[1]

Executive Summary

As described in OpenAI's GPT-5 System Card, METR assessed whether OpenAI GPT-5 could pose catastrophic risks, considering three key threat models:

1. AI R&D Automation: AI systems speeding up AI researchers by >10X (compared to researchers with no AI assistance), or otherwise causing a rapid intelligence explosion, which could cause or amplify a variety of risks if stolen or handled without care.
2. Rogue replication: AI systems posing direct risks of rapid takeover, which likely requires them to be able to maintain infrastructure, acquire resources, and evade shutdown (see the rogue replication threat model).
3. Strategic sabotage: AI systems broadly and strategically misleading researchers in evaluations, or sabotaging further AI development to significantly increase the risk of