I really like learning new things!

https://jacobgw.com/

I think the way you use "utility monster" is not how the term is normally used. It normally means an agent that "receives much more utility from each unit of a resource that it consumes than anyone else does" (https://en.wikipedia.org/wiki/Utility_monster).

I think this applies not just to AGI and school but to lots of situations. If you have something better to do, do it. Otherwise, keep doing what you are doing. Dropping out without something better to do just seems like a bad idea. I like this blog post: https://colah.github.io/posts/2020-05-University/

Thanks for the reply! I thought that you were saying the reward seeking was likely to be terminal. This makes a lot more sense.

Thanks for the replies! I do want to clarify the distinction between specification gaming and reward seeking (seeking reward because it is reward and that is somehow good). For example, I think a desire to edit the RAM of the machines that calculate reward in order to increase it (or some other desire to just increase the literal number) is pretty unlikely to emerge, but non-reward-seeking types of specification gaming, like making inaccurate plots that look nicer to humans or being sycophantic, are more likely. "Reward seeking" is probably a bad phrase for what I mean (I don't know a better way to phrase this distinction), but I hope I've conveyed the idea. I agree that this distinction is probably not a crux.

What I mean is that the propensities of AI agents change over time -- much like how human goals change over time.

I understand your model here better now, thanks! I don't have enough evidence about how long-term agentic AIs will work to evaluate how likely this is.

"In the iron jaws of gradient descent, its mind first twisted into a shape that sought reward."

I'm a bit confused about this sentence. I don't understand why gradient descent would train something that would "seek" reward. The way I understand gradient-based RL approaches is that they reinforce actions that led to high reward. So I guess if the AI was thinking about getting high reward for some reason (maybe because there was a lot about AIs seeking reward in the pretraining data) and then actually got high reward after that, the thought would be reinforced and it could end up as a "reward seeker." But this seems quite path dependent, and I haven't seen much evidence for the path of "model desires reward first and then this gets reinforced" happening (I'd be very interested if there was an example though!). I read "Sycophancy to subterfuge: Investigating reward tampering in language models" and saw that even after training the model in environments that blatantly allow reward tampering, the absolute rate of reward tampering is still quite small (and lower still given that some instances seem benign). I suspect the rate might be higher for more capable models (like 3.5 Sonnet), but I'd still predict it would be quite small, and I think it's very unlikely for this to arise in more natural environments.

I think specification gaming will probably be a problem, but it's pretty unlikely to be of the kind where the model tries to "seek" reward by thinking about its reward function, especially if the model is not in an environment that allows it to change its reward function. I've made a Fatebook question and I encourage others to predict on it. I predicted 5% here, but I think the chance of it happening and then being suppressed, so that it turns into deception, is lower, probably around 0.5% (this one would be much harder to resolve).

I also don't really understand what "And then, in the black rivers of its cognition, this shape morphed into something unrecognizable." means. I'd appreciate some elaboration on this.

I think that the beginning and the rest of the piece (given that the model somehow ends up as a very weird agent with weird goals) are quite plausible, so thanks for writing it!

"So far, I have trouble because it lacks some form of spacial structure, and the algorithm feels too random to build meaningful connections btw different cards"

Hmm, I think that after doing a lot of Anki, my brain kind of formed its own spatial structure, but I don't think this happens to everyone.

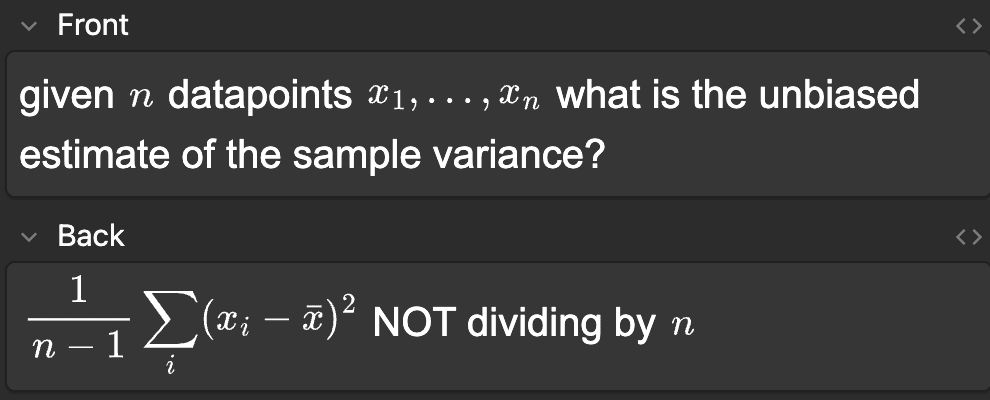

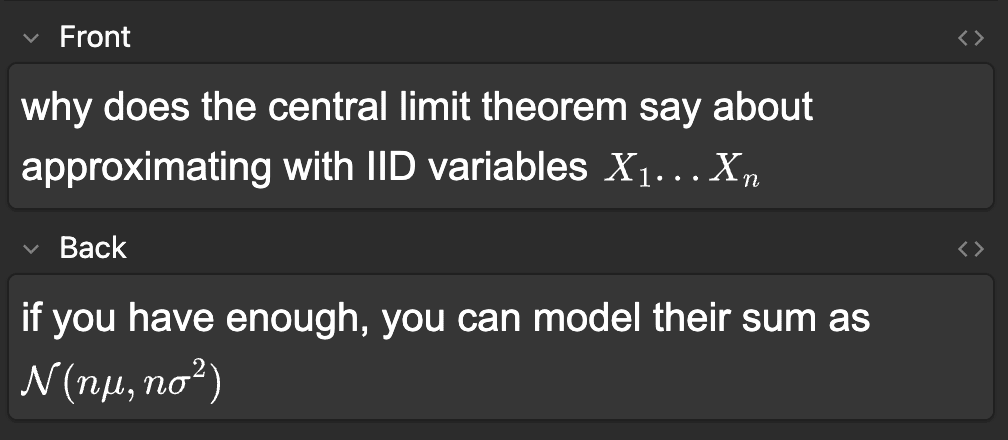

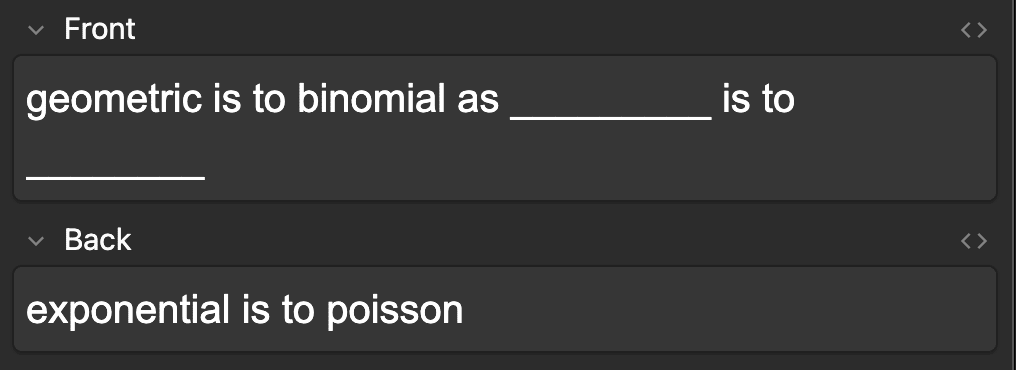

I just use basic card type for math with some latex. Here are some examples:

I find that using fancy card types is kind of like premature optimization. Doing the reviews is the most important part. On the other hand, it's really important that the cards themselves are written well. This essay contains my most refined views on card creation. Some other nice ones are "20 rules of knowledge formulation" and "How to write good prompts: using spaced repetition to create understanding". Hope this answer helped!

I disagree that this is the same as just stitching together different autoencoders. Presumably the encoder has some shared computation before specializing at the encoding level. I also don't see how you could use 10 different autoencoders to classify an image from the encodings. I guess you could just look at the reconstruction loss and then the autoencoder which got the lowest loss would probably correspond to the label, but that seems different to what I'm doing. However, I agree that this application is not useful. I shared it because I (and others) thought it was cool. It's not really practical at all. Hope this addresses your question :)
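To make the alternative I'm contrasting with concrete, here is a minimal sketch of classifying with several separate autoencoders by picking the one with the lowest reconstruction loss. Everything in it is an assumption for illustration: the "autoencoders" are toy stand-ins that always reconstruct a fixed prototype, and the names `make_toy_autoencoder` and `classify_by_reconstruction` are hypothetical, not from my actual experiment.

```python
def reconstruction_loss(x, x_hat):
    # Mean squared error between an input and its reconstruction.
    return sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)


def make_toy_autoencoder(prototype):
    # Hypothetical stand-in for a trained per-label autoencoder:
    # it ignores the input and always "reconstructs" its own prototype.
    def autoencoder(x):
        return list(prototype)
    return autoencoder


def classify_by_reconstruction(x, autoencoders):
    # Predict the index (label) of the autoencoder that reconstructs x best.
    losses = [reconstruction_loss(x, ae(x)) for ae in autoencoders]
    return min(range(len(losses)), key=losses.__getitem__)


# One toy autoencoder per label; a real setup would have ten trained models.
autoencoders = [
    make_toy_autoencoder([0.0, 0.0]),
    make_toy_autoencoder([1.0, 1.0]),
    make_toy_autoencoder([5.0, 5.0]),
]
predicted_label = classify_by_reconstruction([0.9, 1.2], autoencoders)
```

Note that this needs a full forward pass through every autoencoder per image, which is part of why it seems different from reading the label off a single shared encoding.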

I didn't impose any structure in the objective/loss function relating to the label. The loss function is just the regular VAE loss. All I did was detach the gradients in some places. So it is a bit surprising to me that such a simple modification can cause the internals to specialize in this way. After I had seen gradient routing work in other experiments, I predicted that it would work here, but I don't think it was a priori obvious that gradient routing would work (by "obvious" I mean that I would have predicted it with p=1 and so would have gained zero new information from running the experiment).
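As a rough illustration of what "detaching the gradients in some places" can look like, here is a minimal JAX sketch. It is not my actual training code: the latent layout (each label owning a contiguous block of latent dimensions), the helper names `route` and `label_mask`, and the toy loss are all assumptions made for the example. The only point is that the forward value is unchanged while gradients flow only through the dimensions assigned to the example's label.

```python
import jax
import jax.numpy as jnp


def route(z, mask):
    # Forward pass: returns z unchanged.
    # Backward pass: gradients flow only where mask == 1, because the
    # other entries go through stop_gradient (the "detach").
    return mask * z + (1.0 - mask) * jax.lax.stop_gradient(z)


def label_mask(label, num_labels, dims_per_label):
    # Assumed layout: label k owns a contiguous block of latent dims.
    return jnp.repeat(jax.nn.one_hot(label, num_labels), dims_per_label)


def toy_loss(z, mask):
    # Stand-in for the regular loss computed downstream of the latent;
    # the loss itself contains no label-dependent term.
    return jnp.sum(route(z, mask) ** 2)


z = jnp.array([1.0, 2.0, 3.0, 4.0])
mask = label_mask(1, num_labels=2, dims_per_label=2)
grad_z = jax.grad(toy_loss)(z, mask)  # nonzero only on the dims owned by label 1
```

The label enters only through the mask that decides where gradients flow, never through the loss, which is why the resulting specialization is not imposed by the objective.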

Better, thanks!