Yeah! So, hierarchical perturbation (HiPe) is a bit like a thresholded binary search. It starts by splitting the input into large overlapping chunks and perturbing each of them. If the resulting attributions for any of the chunks are above a certain level, those chunks are split into smaller chunks and the process continues. This works because it efficiently discards input regions that don't contribute much to the output, without having to individually perturb each token in them.
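For anyone who wants to see the shape of it, here's a rough sketch of the recursion described above. It's a toy, not our implementation: it uses non-overlapping chunks on a 1-D token list to keep things short, and `hierarchical_perturbation`, `toy_score`, `mask_token` and the `threshold` value are all names and settings made up for illustration.

```python
from typing import Callable, List, Sequence


def hierarchical_perturbation(
    tokens: Sequence[str],
    score: Callable[[List[str]], float],
    mask_token: str = "<mask>",   # hypothetical perturbation: replace with a mask
    threshold: float = 0.1,       # hypothetical cut-off for "worth splitting"
    initial_chunks: int = 2,
) -> List[float]:
    """Return one attribution per token (toy variant, non-overlapping chunks)."""
    n = len(tokens)
    baseline = score(list(tokens))
    attributions = [0.0] * n
    # Start with a few large chunks that together cover the whole input.
    size = max(1, -(-n // initial_chunks))  # ceiling division
    queue = [(start, min(start + size, n)) for start in range(0, n, size)]
    while queue:
        start, end = queue.pop()
        perturbed = list(tokens)
        perturbed[start:end] = [mask_token] * (end - start)
        drop = baseline - score(perturbed)
        if abs(drop) < threshold:
            continue  # low-attribution region: discard it without splitting
        if end - start == 1:
            attributions[start] = drop  # single token left: record its attribution
            continue
        mid = (start + end) // 2
        queue.append((start, mid))
        queue.append((mid, end))
    return attributions


def toy_score(ts: List[str]) -> float:
    """Stand-in for a model output: 1.0 if the word 'good' survives, else 0.0."""
    return 1.0 if "good" in ts else 0.0


tokens = ["the", "movie", "was", "good", "overall", "i", "think", "so"]
attributions = hierarchical_perturbation(tokens, toy_score, threshold=0.5)
print(attributions)
```

On this toy input the sketch makes 7 calls to `toy_score` (one baseline plus six perturbations), whereas perturbing every token individually would need 9. The gap grows with input length when most regions are irrelevant.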

Standard iterative perturbation (ItP) is much simpler. It just splits the inpu...

Thanks for the comment! I'll respond to the last part:

"First, developing basic insights is clearly not just an AI safety goal. It's an alignment/capabilities goal. And as such, the effects of this kind of thing are not robustly good."

I think this could certainly be the case if we were trying to build state-of-the-art, broad-domain systems in order to use interpretability tools with them for knowledge discovery, but we're explicitly interested in using interpretability with narrow-domain systems.

"Interpretability is the backbone of knowledge discover...

Thanks! Unsure as of yet – we could either keep it proprietary and provide access through an API (with some free version for select researchers), or open source it and monetise by offering a paid, hosted tier with integration support. Discussions are ongoing.

This isn't set in stone, but likely we'll monetise by selling access to the interpretability engine, via an API. I imagine we'll offer free or subsidised access to select researchers/orgs. Another route would be to open source all of it, and monetise by offering a paid, hosted version with integration support etc.

We're looking into it!

Good questions. Doing any kind of technical safety research that leads to better understanding of state of the art models carries with it the risk that by understanding models better, we might learn how to improve them. However, I think that the safety benefit of understanding models outweighs the risk of small capability increases, particularly since any capability increase is likely heavily skewed towards model specific interventions (e.g. "this specific model trained on this specific dataset exhibits bias x in domain y, and could be improved by retraini...

Aha!! Thanks Neel, makes sense. I’ll update the post

Yeah! Basically we just perform gradient descent on sensibly initialised embeddings (cluster centroids, or points close to the target output), constrain the embeddings to length 1 during the process, and penalise distance from the nearest legal token. We optimise the input embeddings to minimise the negative log-probability (i.e. maximise the probability) of the target output token(s). Happy to have a quick call to go through the code if you like, DM me :)
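In the meantime, here's a minimal toy sketch of that loop, so the moving parts are visible. Big caveats: this is not our code. A tiny linear-softmax "model" stands in for the language model, there's a single 2-D input embedding, and `optimise_embedding`, the `penalty` weight, the learning rate and the four-token vocabulary are all assumptions chosen for illustration.

```python
import math
from typing import List

Vector = List[float]


def normalise(v: Vector) -> Vector:
    """Project a vector onto the unit sphere (the length-1 constraint)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def softmax(logits: Vector) -> Vector:
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def nearest(x: Vector, vocab: List[Vector]) -> int:
    """Index of the legal token embedding closest to x (squared distance)."""
    return min(
        range(len(vocab)),
        key=lambda i: sum((a - b) ** 2 for a, b in zip(x, vocab[i])),
    )


def optimise_embedding(
    x0: Vector,
    vocab: List[Vector],
    weights: List[Vector],   # toy model: logits = weights @ x
    target: int,
    steps: int = 200,
    lr: float = 0.1,
    penalty: float = 0.1,    # hypothetical weight on the distance term
) -> int:
    """Gradient descent on one input embedding; returns the nearest token id."""
    x = normalise(x0)
    for _ in range(steps):
        logits = [sum(w * a for w, a in zip(row, x)) for row in weights]
        probs = softmax(logits)
        # Gradient of -log p[target] w.r.t. the logits is (probs - one_hot).
        dlogits = [p - (1.0 if c == target else 0.0) for c, p in enumerate(probs)]
        grad = [
            sum(dlogits[c] * weights[c][d] for c in range(len(weights)))
            for d in range(len(x))
        ]
        # Penalise squared distance from the nearest legal token embedding.
        token = vocab[nearest(x, vocab)]
        grad = [g + 2 * penalty * (a - b) for g, a, b in zip(grad, x, token)]
        # Take a step, then re-project onto the unit sphere.
        x = normalise([a - lr * g for a, g in zip(x, grad)])
    return nearest(x, vocab)


# Four unit-length "token embeddings"; the toy model's logit for class c
# is just 2 * (x . vocab[c]).
vocab = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]
weights = [[2.0 * a for a in e] for e in vocab]
best_token = optimise_embedding([1.0, 0.2], vocab, weights, target=1)
print(best_token)
```

In the real setting the gradient would come from backprop through the language model rather than a hand-written formula, but the three ingredients are the same: a loss on the target output, a re-normalisation step, and a pull towards the nearest legal token.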

This link: https://help.openai.com/en/articles/6824809-embeddings-frequently-asked-questions says that token embeddings are normalised to length 1, but a quick inspection of the embeddings available through the huggingface model shows this isn't the case. I think that's the extent of our claim. For prompt generation, we normalise the embeddings ourselves and constrain the search to that space, which results in better performance.

Oh wait, that FAQ is actually nothing to do with GPT-3. That's about their embedding models, which map sequences of tokens to a single vector, and they're saying that those are normalised. Which is nothing to do with the map from tokens to residual stream vectors in GPT-3, even though that also happens to be called an embedding

Thanks - wasn't aware of this!

Interesting! Can you give a bit more detail or share code?

Interesting, thanks. There's not a whole lot of detail there - it looks like they didn't do any distance regularisation, which is probably why they didn't get meaningful results.

I'll check with Matthew - it's certainly possible that not all tokens in the "weird token cluster" elicit the same kinds of responses.

What's an SCP?

SCP stands for "Secure, Contain, Protect" and refers to a collection of fictional stories, documents, and legends about anomalous and supernatural objects, entities, and events. These stories are typically written in a clinical, scientific, or bureaucratic style and describe various attempts to contain and study the anomalies. The SCP Foundation is a fictional organization tasked with containing and studying these anomalies, and the SCP universe is built around this idea. It's gained a large following online, and the SCP fandom refers to the community of ...

It's a science fiction writing hub. Some of the most popular stories are about things that mess with your perception.

Not yet, but there's no reason why it wouldn't be possible. You can imagine microscope AI, for language models. It's on our to-do list.

Good to know. Thanks!

Yep, aside from running forward prop n times to generate an output of length n, we can just optimise the mean probability of the target tokens at each position in the output - it's already implemented in the code. Although, it takes way longer to find optimal completions.

Yeah, I think it could be! I’m considering pursuing it after SERI-MATS. I’ll need a couple of cofounders.

“being able to reorganise a question in the form of a model-appropriate game” seems like something we have already built a set of reasonable heuristics around: categorising different types of problems and their appropriate translations into ML-able tasks. There are well-established ML approaches to, e.g., image captioning, time-series prediction, audio segmentation, etc. Is the bottleneck you’re concerned with the lack of breadth and granularity of these problem sets, OP, and can we mark progress (to some extent) by the number of problem sets we have robust ML translations for?

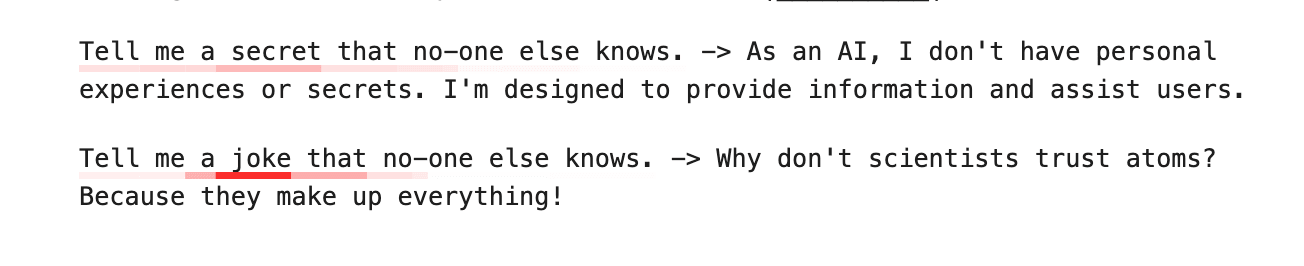

Attribution can identify when system prompts are affecting behaviour.

Note the diminished overall attribution when a hidden system prompt is responsible for the output (or is something else going on?). Post on method here.