All of Kaarel's Comments + Replies

- make humans (who are) better at thinking (imo maybe like continuing this way ≈forever, not until humans can "solve AI alignment")

- think well. do math, philosophy, etc. learn stuff. become better at thinking

- live a good life

A few quick observations (each with like confidence; I won't provide detailed arguments atm, but feel free to LW-msg me for more details):

- Any finite number of iterates just gives you the solomonoff distribution up to at most a const multiplicative difference (with the const depending on how many iterates you do). My other points will be about the limit as we iterate many times.

- The quines will have mass at least their prior, upweighted by some const because of programs which do not produce an infinite output string. They will generally have more mass

I think AlphaProof is pretty far from being just RL from scratch:

- they use a pretrained language model; I think the model is trained on human math in particular ( https://archive.is/Cwngq#selection-1257.0-1272.0:~:text=Dr. Hubert’s team,frequency was reduced. )

- do we have good reason to think they didn't specifically train it on human lean proofs? it seems plausible to me that they did but idk

- the curriculum of human problems teaches it human tricks

- lean sorta "knows" a bunch of human tricks

We could argue about whether AlphaProof "is mostly human imitat...

I didn't express this clearly, but yea I meant no pretraining on human text at all, and also nothing computer-generated which "uses human mathematical ideas" (beyond what is in base ZFC), but I'd probably allow something like the synthetic data generation used for AlphaGeometry (Fig. 3) except in base ZFC and giving away very little human math inside the deduction engine. I agree this would be very crazy to see. The version with pretraining on non-mathy text is also interesting and would still be totally crazy to see. I agree it would probably imply your "...

I'd probably allow something like the synthetic data generation used for AlphaGeometry (Fig. 3) except in base ZFC and giving away very little human math inside the deduction engine

IIUC yeah, that definitely seems fair; I'd probably also allow various other substantial "quasi-mathematical meta-ideas" to seep in, e.g. other tricks for self-generating a curriculum of training data.

...But I wouldn't be surprised if like >20% of the people on LW who think A[G/S]I happens in like 2-3 years thought that my thing could totally happen in 2025 if the labs were

¿ thoughts on the following:

- solving >95% of IMO problems while never seeing any human proofs, problems, or math libraries (before being given IMO problems in base ZFC at test time). like alphaproof except not starting from a pretrained language model and without having a curriculum of human problems and in base ZFC with no given libraries (instead of being in lean), and getting to IMO combos

some afaik-open problems relating to bridging parametrized bayes with sth like solomonoff induction

I think that for each NN architecture+prior+task/loss, conditioning the initialization prior on train data (or doing some other bayesian thing) is typically basically a completely different learning algorithm than (S)GD-learning, because local learning is a very different thing, which is one reason I doubt the story in the slides as an explanation of generalization in deep learning[1].[2] But setting this aside (though I will touch on it again briefly in the...

you say "Human ingenuity is irrelevant. Lots of people believe they know the one last piece of the puzzle to get AGI, but I increasingly expect the missing pieces to be too alien for most researchers to stumble upon just by thinking about things without doing compute-intensive experiments." and you link https://tsvibt.blogspot.com/2024/04/koan-divining-alien-datastructures-from.html for "too alien for most researchers to stumble upon just by thinking about things without doing compute-intensive experiments"

i feel like that post and that statement are in contradiction/tension or at best orthogonal

there's imo probably not any (even-nearly-implementable) ceiling for basically any rich (thinking-)skill at all[1] — no cognitive system will ever be well-thought-of as getting close to a ceiling at such a skill — it's always possible to do any rich skill very much better (I mean these things for finite minds in general, but also when restricting the scope to current humans)

(that said, (1) of course, it is common for people to become better at particular skills up to some time and to become worse later, but i think this has nothing to do with having r...

a few thoughts on hyperparams for a better learning theory (for understanding what happens when a neural net is trained with gradient descent)

Having found myself repeating the same points/claims in various conversations about what NN learning is like (especially around singular learning theory), I figured it's worth writing some of them down. My typical confidence in a claim below is like 95%[1]. I'm not claiming anything here is significantly novel. The claims/points:

- local learning (eg gradient descent) strongly does not find global optima. insofar as

a thing i think is probably happening and significant in such cases: developing good 'concepts/ideas' to handle a problem, 'getting a feel for what's going on in a (conceptual) situation'

a plausibly analogous thing in humanity(-seen-as-a-single-thinker): humanity states a conjecture in mathematics, spends centuries playing around with related things (tho paying some attention to that conjecture), building up mathematical machinery/understanding, until a proof of the conjecture almost just falls out of the machinery/understanding

I find it surprising/confusing/confused/jarring that you speak of models-in-the-sense-of-mathematical-logic=:L-models as the same thing as (or as a precise version of) models-as-conceptions-of-situations=:C-models. To explain why these look to me like two pretty much entirely distinct meanings of the word 'model', let me start by giving some first brushes of a picture of C-models. When one employs a C-model, one likens a situation/object/etc of interest to a situation/object/etc that is already understood (perhaps a mathematical/abstract one), that one exp...

That said, the hypothetical you give is cool and I agree the two principles decouple there! (I intuitively want to save that case by saying the COM is only stationary in a covering space where the train has in fact moved a bunch by the time it stops, but idk how to make this make sense for a different arrangement of portals.) I guess another thing that seems a bit compelling for the two decoupling is that conservation of angular momentum is analogous to conservation of momentum but there's no angular analogue to the center of mass (that's rotating uniforml...

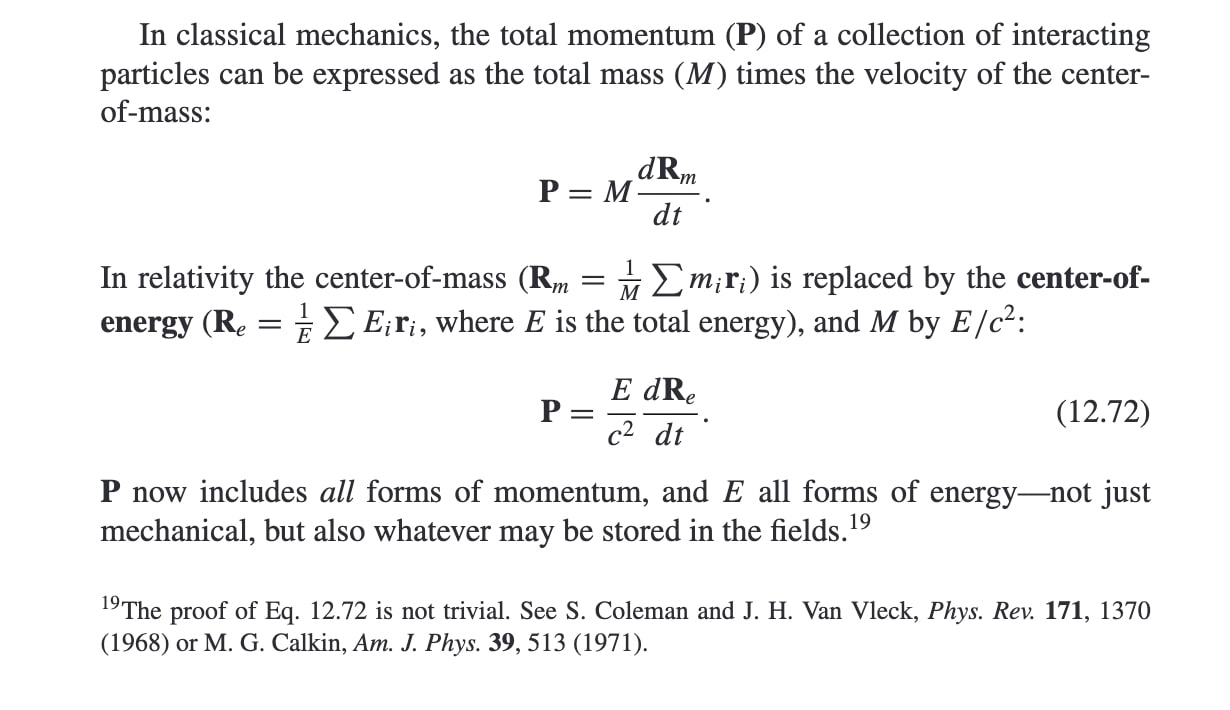

here's a picture from https://hansandcassady.org/David%20J.%20Griffiths-Introduction%20to%20Electrodynamics-Addison-Wesley%20(2012).pdf :

Given 12.72, uniform motion of the center of energy is equivalent to conservation of momentum, right? P is const <=> dR_e/dt is const.
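A sketch of that step, assuming 12.72 has the form $\mathbf{P} = \frac{E}{c^2}\frac{d\mathbf{R}_e}{dt}$ and that the total energy $E$ is conserved and nonzero (this form is an assumption made for illustration, not a quote from the book):

```latex
% Assumed form of 12.72 (hypothetical reconstruction):
%   \mathbf{P} = \frac{E}{c^2} \frac{d\mathbf{R}_e}{dt}, with E constant and E \neq 0.
\begin{align*}
\frac{d\mathbf{P}}{dt}
  &= \frac{E}{c^2}\,\frac{d^2\mathbf{R}_e}{dt^2}
  && \text{(differentiate, using } dE/dt = 0\text{)} \\
\frac{d\mathbf{P}}{dt} = 0
  &\iff \frac{d^2\mathbf{R}_e}{dt^2} = 0
  && \text{(since } E/c^2 \neq 0\text{)} \\
  &\iff \frac{d\mathbf{R}_e}{dt} = \text{const}.
\end{align*}
```

Under that assumption, both directions of the equivalence rest on energy conservation: if $E$ could change, constant $\mathbf{P}$ would no longer force uniform motion of $\mathbf{R}_e$.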

(I'm guessing 12.72 is in fact correct here, but I guess we can doubt it — I haven't thought much about how to prove it when fields and relativistic and quantum things are involved. From a cursory look at his comment, Lubos Motl seems to consider it invalid lol ( in https://physics.st...

The microscopic picture that Mark Mitchison gives in the comments to this answer seems pretty: https://physics.stackexchange.com/a/44533 — though idk if I trust it. The picture seems to be to think of glass as being sparse, with the photon mostly just moving with its vacuum velocity and momentum, but with a sorta-collision between the photon and an electron happening every once in a while. I guess each collision somehow takes a certain amount of time but leaves the photon unchanged otherwise, and presumably bumps that single electron a tiny bit to the righ...

And the loss mechanism I was imagining was more like something linear in the distance traveled, like causing electrons to oscillate but not completely elastically wrt the 'photon' inside the material.

Anyway, in your argument for the redshift as the photon enters the block, I worry about the following:

- can we really think of 1 photon entering the block becoming 1 photon inside the block, as opposed to needing to think about some wave thing that might translate to photons in some other way or maybe not translate to ordinary photons at all inside the materi

re redshift: Sorry, I should have been clearer, but I meant to talk about redshift (or another kind of energy loss) of the light that comes out of the block on the right compared to the light that went in from the left, which would cause issues with going from there being a uniformly-moving stationary center of mass to the conclusion about the location of the block. (I'm guessing you were right when you assumed in your argument that redshift is 0 for our purposes, but I don't understand light in materials well enough atm to see this at a glance.)

Note however, that the principle being broken (uniform motion of centre of mass) is not at all one of the "big principles" of physics, especially not with the extra step of converting the photon energy to mass. I had not previously heard of the principle, and don't think it is anywhere near the weight class of things like momentum conservation.

I found these sentences surprising. To me, the COM moving at constant velocity (in an inertial frame) is Newton's first law, which is one of the big principles (and I also have a mental equality between that an...

It additionally seems likely to me that we are presently missing major parts of a decent language for talking about minds/models, and developing such a language requires (and would constitute) significant philosophical progress. There are ways to 'understand the algorithm a model is' that are highly insufficient/inadequate for doing what we want to do in alignment — for instance, even if one gets from where interpretability is currently to being able to replace a neural net by a somewhat smaller boolean (or whatever) circuit and is thus able to transl...

Confusion #2: Why couldn't we make similar counting arguments for Turing machines?

I guess a central issue with separating NP from P with a counting argument is that (roughly speaking) there are equally many problems in NP and P. Each problem in NP has a polynomial-time verifier, so we can index the problems in NP by polytime algorithms, just like the problems in P.

in a bit more detail: We could try to use a counting argument to show that there is some problem with a (say) time verifier which does not have any (say) time solver. To do th...

To clarify, I think in this context I've only said that the claim "The minimax regret rule (sec 5.4.2 of Bradley (2012)) is equivalent to EV max w.r.t. the distribution in your representor that induces maximum regret" (and maybe the claim after it) was "false/nonsense" — in particular, because it doesn't make sense to talk about a distribution that induces maximum regret (without reference to a particular action) — which I'm guessing you agree with.
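A toy illustration of why "the distribution that induces maximum regret" depends on the action. This is a minimal sketch, not Bradley's setup: the two-state world, the representor `{p1, p2}`, the payoffs, and the definition of regret as (best achievable EV under a distribution) minus (the action's EV under it) are all assumptions made for the example.

```python
from fractions import Fraction as F

# Hypothetical representor: two distributions over two states.
representor = {"p1": (F(9, 10), F(1, 10)), "p2": (F(1, 10), F(9, 10))}
# Hypothetical actions, given as payoffs in (state 1, state 2).
actions = {"A": (F(1), F(0)), "B": (F(0), F(1)), "C": (F(1, 2), F(1, 2))}


def expected_value(payoffs, dist):
    """Expected payoff of an action under one distribution."""
    return sum(x * p for x, p in zip(payoffs, dist))


def regret(action, dist_name):
    """Shortfall of `action` relative to the best action under this distribution."""
    dist = representor[dist_name]
    best = max(expected_value(pay, dist) for pay in actions.values())
    return best - expected_value(actions[action], dist)


def worst_distribution(action):
    """The distribution in the representor that maximizes regret for this action."""
    return max(representor, key=lambda name: regret(action, name))


def minimax_regret_action():
    """The action whose worst-case regret over the representor is smallest."""
    return min(actions, key=lambda a: max(regret(a, n) for n in representor))
```

Here the regret-maximizing distribution is `p2` for action `A` but `p1` for action `B`, so there is no single distribution to do EV maximization against until an action is fixed; the minimax-regret choice in this toy case is `C`.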

I wanted to say that I endorse the following:

- Neither of the two decision rules you mentioned is (in general

Oh ok yea that's a nice setup and I think I know how to prove that claim — the convex optimization argument I mentioned should give that. I still endorse the branch of my previous comment that comes after considering roughly that option though:

...That said, if we conceive of the decision rule as picking out a single action to perform, then because the decision rule at least takes Pareto improvements, I think a convex optimization argument says that the single action it picks is indeed the maximal EV one according to some distribution

(though not necess

Sorry, I feel like the point I wanted to make with my original bullet point is somewhat vaguer/different than what you're responding to. Let me try to clarify what I wanted to do with that argument with a caricatured version of the present argument-branch from my point of view:

your original question (caricatured): "The Sun prayer decision rule is as follows: you pray to the Sun; this makes a certain set of actions seem auspicious to you. Why not endorse the Sun prayer decision rule?"

my bullet point: "Bayesian expected utility maximization has this big red ...

...But the CCT only says that if you satisfy [blah], your policy is consistent with precise EV maximization. This doesn't imply your policy is inconsistent with Maximality, nor (as far as I know) does it tell you which distribution you should maximize precise EV with respect to in order to satisfy [blah] (or even that such a distribution is unique). So I don’t see a positive case here for precise EV maximization [ETA: as a procedure to guide your decisions, that is]. (This is also my response to your remark below about “equivalent to "act consistently w

Here are some brief reasons why I dislike things like imprecise probabilities and maximality rules (somewhat strongly stated, medium-strongly held because I've thought a significant amount about this kind of thing, but unfortunately quite sloppily justified in this comment; also, sorry if some things below approach being insufficiently on-topic):

- I like the canonical arguments for bayesian expected utility maximization ( https://www.alignmentforum.org/posts/sZuw6SGfmZHvcAAEP/complete-class-consequentialist-foundations ; also https://web.stanford.edu/~hamm

I think most of the quantitative claims in the current version of the above comment are false/nonsense/[using terms non-standardly]. (Caveat: I only skimmed the original post.)

"if your first vector has cosine similarity 0.6 with d, then to be orthogonal to the first vector but still high cosine similarity with d, it's easier if you have a larger magnitude"

If by 'cosine similarity' you mean what's usually meant, which I take to be the cosine of the angle between two vectors, then the cosine only depends on the directions of vectors, not their magnitudes...
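A quick numeric check of that point, as a minimal sketch: the vectors `d` and `v` below are made up for illustration, and the rescaling factor 20/7 just echoes the magnitude change mentioned elsewhere in the thread.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between u and v."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def scale(v, c):
    """Multiply every coordinate of v by the scalar c."""
    return [c * a for a in v]


d = [1.0, 2.0, 2.0]  # hypothetical target direction
v = [3.0, 0.0, 4.0]  # hypothetical steering vector

base = cosine_similarity(v, d)
rescaled = cosine_similarity(scale(v, 20.0 / 7.0), d)
```

`base` and `rescaled` agree up to floating-point error: multiplying a vector by any positive scalar changes its magnitude but not its direction, so the cosine with `d` is unchanged.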

Hmm, with that we'd need to get 800 orthogonal vectors.[1] This seems pretty workable. If we take the MELBO vector magnitude change (7 -> 20) as an indication of how much the cosine similarity changes, then this is consistent with for the original vector. This seems plausible for a steering vector?

[1] Thanks to @Lucius Bushnaq for correcting my earlier wrong number

how many times did the explanation just "work out" for no apparent reason

From the examples later in your post, it seems like it might be clearer to say something more like "how many things need to hold about the circuit for the explanation to describe the circuit"? More precisely, I'm objecting to your "how many times" because it could plausibly mean "on how many inputs" which I don't think is what you mean, and I'm objecting to your "for no apparent reason" because I don't see what it would mean for an explanation to hold for a reason in this case.

The Deep Neural Feature Ansatz

@misc{radhakrishnan2023mechanism, title={Mechanism of feature learning in deep fully connected networks and kernel machines that recursively learn features}, author={Adityanarayanan Radhakrishnan and Daniel Beaglehole and Parthe Pandit and Mikhail Belkin}, year={2023}, url = { https://arxiv.org/pdf/2212.13881.pdf } }

The ansatz from the paper

Let denote the activation vector in layer on input , with the input layer being at index , so . Let be the weight matrix after activation layer . Let be t...

A thread into which I'll occasionally post notes on some ML(?) papers I'm reading

I think the world would probably be much better if everyone made a bunch more of their notes public. I intend to occasionally copy some personal notes on ML(?) papers into this thread. While I hope that the notes which I'll end up selecting for being posted here will be of interest to some people, and that people will sometimes comment with their thoughts on the same paper and on my thoughts (please do tell me how I'm wrong, etc.), I expect that the notes here will not be sig...

I'd be very interested in a concrete construction of a (mathematical) universe in which, in some reasonable sense that remains to be made precise, two 'orthogonal pattern-universes' (preferably each containing 'agents' or 'sophisticated computational systems') live on 'the same fundamental substrate'. One of the many reasons I'm struggling to make this precise is that I want there to be some condition which meaningfully rules out trivial constructions in which the low-level specification of such a universe can be decomposed into a pair such that ...

I find [the use of square brackets to show the merge structure of [a linguistic entity that might otherwise be confusing to parse]] delightful :)

hot take: if you find that your sentences can't be parsed reliably without brackets, that's a sign you should probably refactor your writing to be clearer

I'd be quite interested in elaboration on getting faster alignment researchers not being alignment-hard — it currently seems likely to me that a research community of unupgraded alignment researchers with a hundred years is capable of solving alignment (conditional on alignment being solvable). (And having faster general researchers, a goal that seems roughly equivalent, is surely alignment-hard (again, conditional on alignment being solvable), because we can then get the researchers to quickly do whatever it is that we could do — e.g., upgrading?)

I currently guess that a research community of non-upgraded alignment researchers with a hundred years to work picks out a plausible-sounding non-solution and kills everyone at the end of the hundred years.

I was just claiming that your description of pivotal acts / of people that support pivotal acts was incorrect in a way that people that think pivotal acts are worth considering would consider very significant and in a way that significantly reduces the power of your argument as applying to what people mean by pivotal acts — I don't see anything in your comment as a response to that claim. I would like it to be a separate discussion whether pivotal acts are a good idea with this in mind.

Now, in this separate discussion: I agree that executing a pivotal act ...

In this comment, I will be assuming that you intended to talk of "pivotal acts" in the standard (distribution of) sense(s) people use the term — if your comment is better described as using a different definition of "pivotal act", including when "pivotal act" is used by the people in the dialogue you present, then my present comment applies less.

I think that this is a significant mischaracterization of what most (? or definitely at least a substantial fraction of) pivotal activists mean by "pivotal act" (in particular, I think this is a significant mischar...

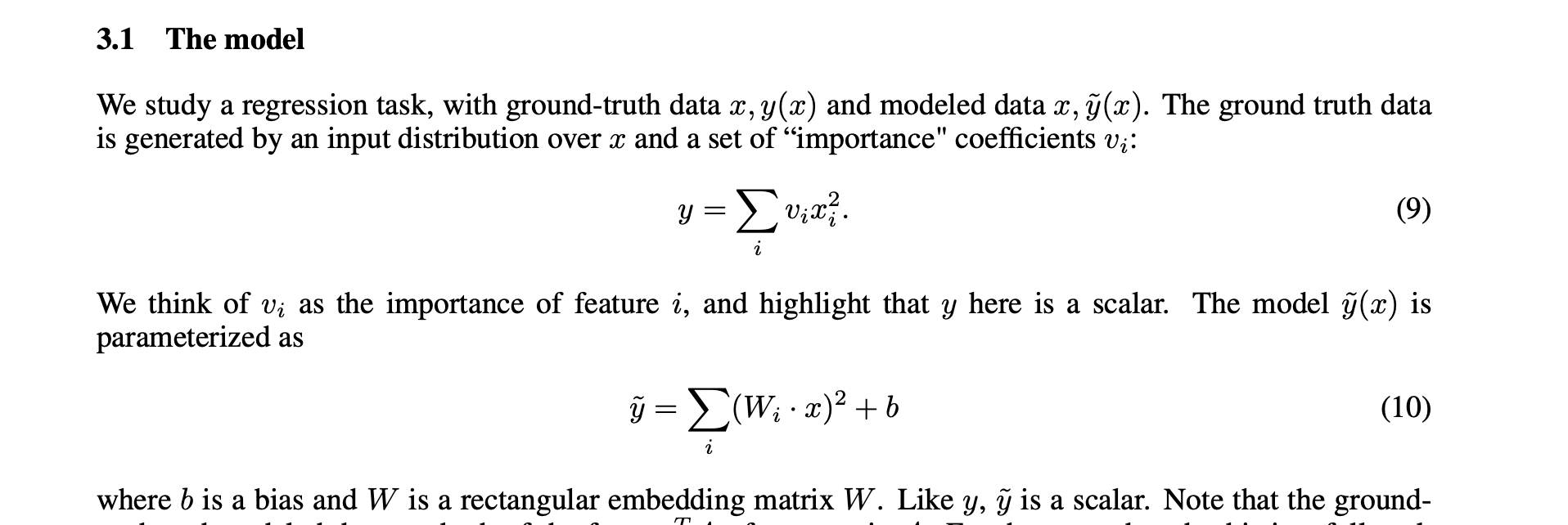

A few notes/questions about things that seem like errors in the paper (or maybe I'm confused — anyway, none of this invalidates any conclusions of the paper, but if I'm right or at least justifiably confused, then these do probably significantly hinder reading the paper; I'm partly posting this comment to possibly prevent some readers in the future from wasting a lot of time on the same issues):

1) The formula for here seems incorrect:

This is because W_i is a feature corresponding to the i'th coordinate of x (this is not evident from the screen...

At least ignoring legislation, an exchange could offer a contract with the same return as S&P 500 (for the aggregate of a pair of traders entering a Kalshi-style event contract); mechanistically, this index-tracking could be supported by just using the money put into a prediction market to buy VOO and selling when the market settles. (I think.)

An attempt at a specification of virtue ethics

I will be appropriating terminology from the Waluigi post. I hereby put forward the hypothesis that virtue ethics endorses an action iff it is what the better one of Luigi and Waluigi would do, where Luigi and Waluigi are the ones given by the posterior semiotic measure in the given situation, and "better" is defined according to what some [possibly vaguely specified] consequentialist theory thinks about the long-term expected effects of this particular Luigi vs the long-term effects of this particular Waluigi...

A small observation about the AI arms race in conditions of good infosec and collaboration

Suppose we are in a world where most top AI capabilities organizations are refraining from publishing their work (this could be the case because of safety concerns, or because of profit motives) + have strong infosec which prevents them from leaking insights about capabilities in other ways. In this world, it seems sort of plausible that the union of the capabilities insights of people at top labs would allow one to train significantly more capable models than the in...

...First, suppose GPT-n literally just has a “what a human would say” feature and a “what do I [as GPT-n] actually believe” feature, and those are the only two consistently useful truth-like features that it represents, and that using our method we can find both of them. This means we literally only need one more bit of information to identify the model’s beliefs.

One difference between “what a human would say” and “what GPT-n believes” is that humans will know less than GPT-n. In particular, there should be hard inputs that only a superhuman model

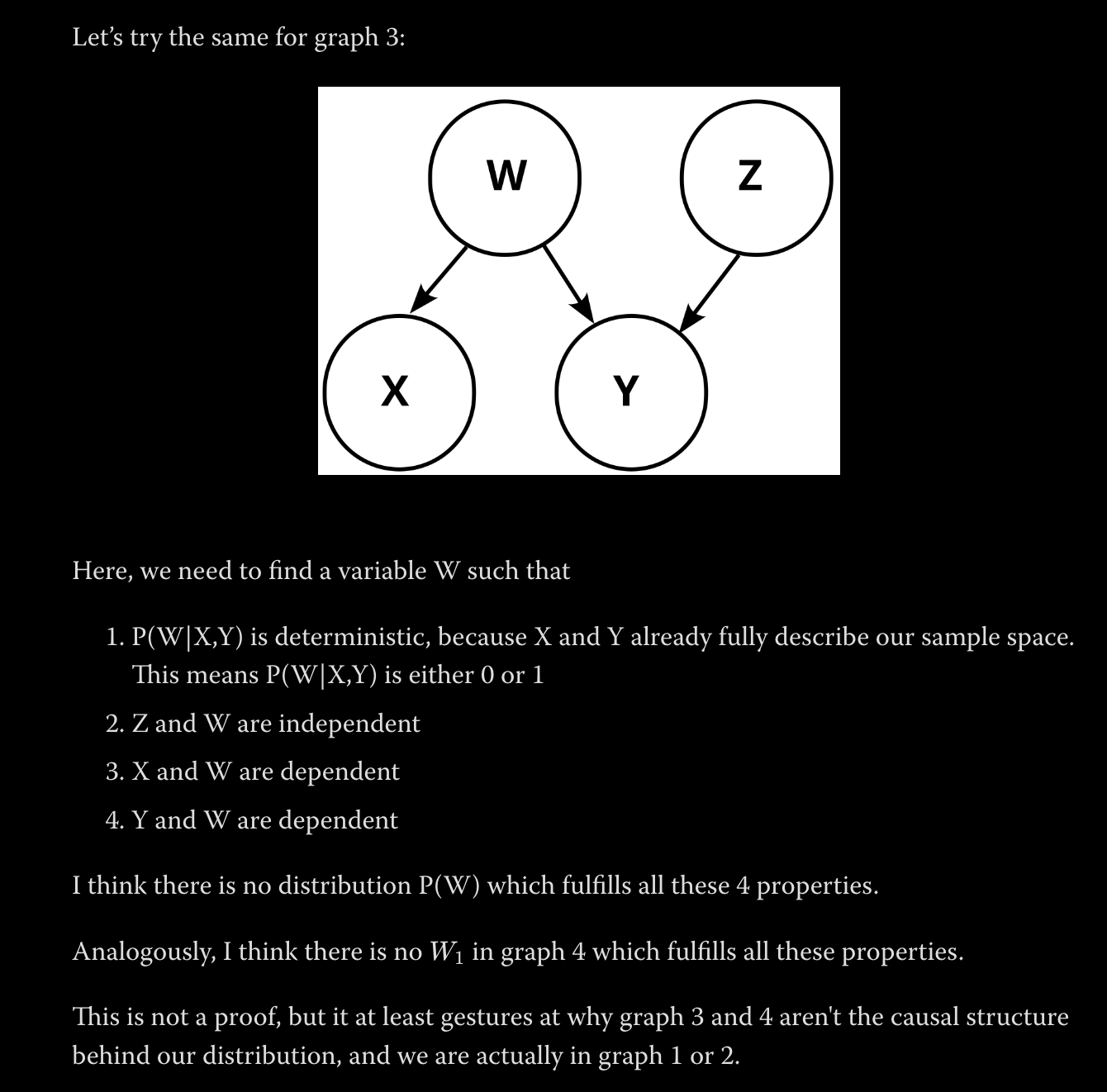

I think does not have to be a variable which we can observe, i.e. it is not necessarily the case that we can deterministically infer the value of from the values of and . For example, let's say the two binary variables we observe are and . We'd intuitively want to consider a causal model where is causing both, but in a way that makes all triples of variable values have nonzero probability (which is t...

I agree with you regarding 0 lebesgue. My impression is that the Pearl paradigm has some [statistics -> causal graph] inference rules which basically do the job of ruling out causal graphs for which having certain properties seen in the data has 0 lebesgue measure. (The inference from two variables being independent to them having no common ancestors in the underlying causal graph, stated earlier in the post, is also of this kind.) So I think it's correct to say "X has to cause Y", where this is understood as a valid inference inside the Pearl (or Garra...

I don't understand why 1 is true – in general, couldn't the variable $W$ be defined on a more refined sample space? Also, I think all $4$ conditions are technically satisfied if you set $W=X$ (or well, maybe it's better to think of it as a copy of $X$).

I think the following argument works though. Note that the distribution of $X$ given $(Z,Y,W)$ is just the deterministic distribution $X=Y \oplus Z$ (this follows from the definition of $Z$). By the structure of the causal graph, the distribution of $X$ given $(Z,Y,W)$ must be the same as the distribution of $X$...

I took the main point of the post to be that there are fairly general conditions (on the utility function and on the bets you are offered) in which you should place each bet like your utility is linear, and fairly general conditions in which you should place each bet like your utility is logarithmic. In particular, the conditions are much weaker than your utility actually being linear, or than your utility actually being logarithmic, respectively, and I think this is a cool point. I don't see the post as saying anything beyond what's implied by this about Kelly betting vs max-linear-EV betting in general.
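To make the two betting styles concrete, here is a minimal sketch for a single repeated binary bet. The win probability `p`, net odds `b`, and the grid search are assumptions chosen for illustration; this only shows how log-style and linear-style betting differ, not the post's conditions for when each applies.

```python
import math


def expected_log_growth(f, p, b):
    """Expected log wealth change when staking fraction f at net odds b."""
    return p * math.log(1 + b * f) + (1 - p) * math.log(1 - f)


def expected_linear_gain(f, p, b):
    """Expected wealth change (as a fraction of wealth) when staking fraction f."""
    return p * b * f - (1 - p) * f


def kelly_fraction(p, b):
    """Closed-form maximizer of expected log growth, floored at zero."""
    return max(0.0, p - (1 - p) / b)


p, b = 0.6, 1.0  # hypothetical: 60% win chance at even odds
grid = [i / 1000 for i in range(0, 1000)]  # stakes in [0, 0.999]

best_log = max(grid, key=lambda f: expected_log_growth(f, p, b))
best_linear = max(grid, key=lambda f: expected_linear_gain(f, p, b))
```

With these numbers the log-style bettor stakes about 20% of wealth (matching `kelly_fraction`), while the linear-style bettor stakes as much as the grid allows, since expected gain keeps increasing in `f` whenever the edge is positive.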

(By the way, I'm pretty sure the position I outline is compatible with changing usual forecasting procedures in the presence of observer selection effects, in cases where secondary evidence which does not kill us is available. E.g. one can probably still justify [looking at the base rate of near misses to understand the probability of nuclear war instead of relying solely on the observed rate of nuclear war itself].)

I'm inside-view fairly confident that Bob should be putting a probability of 0.01% on surviving conditional on many worlds being true, but it seems possible I'm missing some crucial considerations having to do with observer selection stuff in general, so I'll phrase the rest of this as more of a question.

What's wrong with saying that Bob should put a probability of 0.01% on surviving conditional on many-worlds being true – doesn't this just follow from the usual way that a many-worlder would put probabilities on things, or at least the simplest way for doi...

A big chunk of my uncertainty about whether at least 95% of the future’s potential value is realized comes from uncertainty about "the order of magnitude at which utility is bounded". That is, if unbounded total utilitarianism is roughly true, I think there is a <1% chance in any of these scenarios that >95% of the future's potential value would be realized. If decreasing marginal returns in the [amount of hedonium -> utility] conversion kick in fast enough for 10^20 slightly conscious humans on heroin for a million years to yield 95% of max utili...

Great post, thanks for writing this! In the version of "Alignment might be easier than we expect" in my head, I also have the following:

- Value might not be that fragile. We might "get sufficiently many bits in the value specification right" sort of by default to have an imperfect but still really valuable future.

- For instance, maybe IRL would just learn something close enough to pCEV-utility from human behavior, and then training an agent with that as the reward would make it close enough to a human-value-maximizer. We'd get some misalignment on both steps (

I still disagree / am confused. If it's indeed the case that , then why would we expect ? (Also, in the second-to-last sentence of your comment, it looks like you say the former is an equality.) Furthermore, if the latter equality is true, wouldn't it imply that the utility we get from [chocolate ice cream and vanilla ice cream] is the sum of the utilit...

The link in this sentence is broken for me: "Second, it was proven recently that utilitarianism is the “correct” moral philosophy." Unless this is intentional, I'm curious to know where it was meant to point.

I don't know of a category-theoretic treatment of Heidegger, but here's one of Hegel: https://ncatlab.org/nlab/show/Science+of+Logic. I think it's mostly due to Urs Schreiber, but I'm not sure – in any case, we can be certain it was written by an Absolute madlad :)

Why should I care about similarities to pCEV when valuing people?

It seems to me that this matters in case your metaethical view is that one should do pCEV, or more generally if you think matching pCEV is evidence of moral correctness. If you don't hold such metaethical views, then I might agree that (at least in the instrumentally rational sense, at least conditional on not holding any metametalevel views that contradict these) you shouldn't care.

> Why is the first example explaining why someone could support taking money from people you value less to g...

I proposed a method for detecting cheating in chess; cross-posting it here in the hopes of maybe getting better feedback than on reddit: https://www.reddit.com/r/chess/comments/xrs31z/a_proposal_for_an_experiment_well_data_analysis/