kaarelh AT gmail DOT com

Posts

Wiki Contributions

¿ thoughts on the following:

- solving >95% of IMO problems while never seeing any human proofs, problems, or math libraries (before being given IMO problems in base ZFC at test time). like alphaproof except not starting from a pretrained language model and without having a curriculum of human problems and in base ZFC with no given libraries (instead of being in lean), and getting to IMO combos

some afaik-open problems relating to bridging parametrized bayes with sth like solomonoff induction

I think that for each NN architecture+prior+task/loss, conditioning the initialization prior on train data (or doing some other bayesian thing) is typically basically a completely different learning algorithm than (S)GD-learning, because local learning is a very different thing, which is one reason I doubt the story in the slides as an explanation of generalization in deep learning[1].[2] But setting this aside (though I will touch on it again briefly in the last point I make below), I agree it would be cool to have a story connecting the parametrized bayesian thing to something like Solomonoff induction. Here's an outline of an attempt to give a more precise story extending the one in Lucius's slides, with a few afaik-open problems:

- Let's focus on boolean functions (because that's easy to think about — but feel free to make a different choice). Let's take a learner to be shown certain input-output pairs (that's "training it"), and having to predict outputs on new inputs (that's "test time"). Let's say we're interested in understanding something about which learning setups "generalize well" to these new inputs.

- What should we mean by "generalizing well" in this context? This isn't so clear to me — we could e.g. ask that it does well on problems "like this" which come up in practice, but to solve such problems, one would want to look at what situation gave us the problem and so on, which doesn't seem like the kind of data we want to include in the problem setup here; we could imagine simply removing such data and asking for something that would work well in practice, but this still doesn't seem like such a clean criterion.

- But anyway, the following seems like a reasonable Solomonoff-like thing:

- There's some complexity (i.e., size/[description length], probably) prior on boolean circuits. There can be multiple reasonable choices of [types of circuits admitted] and/or [description language] giving probably genuinely different priors here, but make some choice (it seems fine to make whatever reasonable choice which will fit best with the later parts of the story we're attempting to build).

- Think of all the outputs (i.e. train and test) as being generated by taking a circuit from this prior and running the inputs through it.

- To predict outputs on new inputs, just do the bayesian thing (ie condition the induced prior on functions on all the outputs you've seen).

- My suggestion is that to explain why another learning setup (for boolean functions) has good generalization properties, we could be sort of happy with building a bridge between it and the above simplicity-prior-circuit-solomonoff thing. (This could let us bypass having to further specify what it is to generalize well.)[3]

- One key step in the present attempt at building a bridge from NN-bayes to simplicity-prior-circuit-solomonoff is to get from simplicity-prior-circuit-solomonoff to a setup with a uniform prior over circuits — the story would like to say that instead of picking circuits from a simplicity prior, you can pick circuits uniformly at random from among all circuits of up to a certain size. The first main afaik-open problem I want to suggest is to actually work out this step: to provide a precise setup where the uniform prior on boolean circuits up to a certain size is like the simplicity prior on boolean circuits (and to work out the correspondence). (It could also be interesting and [sufficient for building a bridge] to argue that the uniform prior on boolean circuits has good generalization properties in some other way.) I haven't thought about this that much, but my initial sense is that this could totally be false unless one is careful about getting the right setup (for example: given inputs-outputs from a particular boolean function with a small circuit, maybe it would work up to a certain upper bound on the size of the circuits on which we have a uniform prior, and then stop working; and/or maybe it depends more precisely on our [types of circuits admitted] and/or [description language]). (I know there is this story with programs, but idk how to get such a correspondence for circuits from that, and the correspondence for circuits seems like what we actually need/want.)

- The second afaik-open problem I'm suggesting is to figure out in much more detail how to get from e.g. the MLP with a certain prior to boolean circuits with a uniform prior.

- One reason I'm stressing these afaik-open problems (particularly the second one) is that I'm pretty sure many parametrized bayesian setups do not in fact give good generalization behavior — one probably needs some further things (about the architecture+prior, given the task) to go right to get good generalization (in fact, I'd guess that it's "rare" to get good generalization without these further unclear hyperparams taking on the right values), and one's attempt at building a bridge should probably make contact with these further things (so as to not be "explaining" a falsehood).

- One interesting example is given by MLPs in the NN gaussian process limit (i.e. a certain kind of initialization + taking the width to infinity) learning boolean functions (edit: I've realized I should clarify that I'm (somewhat roughly speaking) assuming the convention, not the convention), which I think ends up being equivalent to kernel ridge regression with the fourier basis on boolean functions as the kernel features (with certain weights depending on the size of the XOR), which I think doesn't have great generalization properties — in particular, it's quite unlike simplicity-prior-circuit-solomonoff, and it's probably fair to think of it as doing sth more like a polyfit in some sense. I think this also happens for the NTK, btw. (But I should say I'm going off some only loosely figured out calculations (joint with Dmitry Vaintrob and o1-preview) here, so there's a real chance I'm wrong about this example and you shouldn't completely trust me on it currently.) But I'd guess that deep learning can do somewhat better than this. (speculation: Maybe a major role in getting bad generalization here is played by the NNGP and NTK not "learning intermediate variables", preventing any analogy with boolean circuits with some depth going through, whereas deep learning can learn intermediate variables to some extent.) So if we want to have a correct solomonoff story which explains better generalization behavior than that of this probably fairly stupid kernel thing, then we would probably want the story to make some distinction which prevents it from also applying in this NNGP limit. (Anyway, even if I'm wrong about the NNGP case, I'd guess that most setups provide examples of fairly poor generalization, so one probably really needn't appeal to NNGP calculations to make this point.)

Separately from the above bridge attempt, it is not at all obvious to me that parametrized bayes in fact has such good generalization behavior at all (i.e., "at least as good as deep learning", whatever that means, let's say)[4]; here's some messages on this topic I sent to [the group chat in which the posted discussion happened] later:

"i'd be interested in hearing your reasons to think that NN-parametrized bayesian inference with a prior given by canonical initialization randomization (or some other reasonable prior) generalizes well (for eg canonical ML tasks or boolean functions), if you think it does — this isn't so clear to me at all

some practical SGD-NNs generalize decently, but that's imo a sufficiently different learning process to give little evidence about the bayesian case (but i'm open to further discussion of this). i have some vague sense that the bayesian thing should be better than SGD, but idk if i actually have good reason to believe this?

i assume that there are some other practical ML things inspired by bayes which generalize decently but it seems plausible that those are still pretty local so pretty far from actual bayes and maybe even closer to SGD than to bayes, tho idk what i should precisely mean by that. but eg it seems plausible from 3 min of thinking that some MCMC (eg SGLD) setup with a non-galactic amount of time on a NN of practical size would basically walk from init to a local likelihood max and not escape it in time, which sounds a lot more like SGD than like bayes (but idk maybe some step size scheduling makes the mixing time non-galactic in some interesting case somehow, or if it doesn't actually do that maybe it can give a fine approximation of the posterior in some other practical sense anyway? seems tough). i haven't thought about variational inference much tho — maybe there's something practical which is more like bayes here and we could get some relevant evidence from that

maybe there's some obvious answer and i'm being stupid here, idk :)

one could also directly appeal to the uniformly random program analogy but the current version of that imo doesn't remotely constitute sufficiently good reason to think that bayesian NNs generalize well on its own"

(edit: this comment suggests https://arxiv.org/pdf/2002.02405 as evidence that bayes-NNs generalize worse than SGD-NNs. but idk — I haven't looked at the paper yet — ie no endorsement of it one way or the other from me atm)

to the extent that deep learning in fact exhibits good generalization, which is probably a very small extent compared to sth like Solomonoff induction, and this has to do with some stuff I talked about in my messages in the post above; but I digress ↩︎

I also think that different architecture+prior+task/loss choices probably give many substantially-differently-behaved learning setups, deserving somewhat separate explanations of generalization, for both bayes and SGD. ↩︎

edit: Instead of doing this thing with circuits, you could get an alternative "principled generalization baseline/ceiling" from doing the same thing with programs instead (i.e., have a complexity prior on turing machines and condition it on seen input-output pairs), which I think ends up being equivalent (up to a probably-in-some-sense-small term) to using the kolmogorov complexities of these functions (thought of "extensionally" as strings, ie just listing outputs in some canonical order (different choices of canonical order should again give the same complexities (up to a probably-in-some-sense-small term))). While this is probably a more standard choice historically, it seems worse for our purposes given that (1) it would probably be strictly harder to build a bridge from NNs to it (and there probably just isn't any NNs <-> programs bridge which is as precise as the NNs <-> circuits bridge we might hope to build, given that NNs are already circuity things and it's easy to have a small program for a function without having a small circuit for it (as the small program could run for a long time)), and (2) it's imo plausible that some variant of the circuit prior is "philosophically/physically more correct" than the program prior, though this is less clear than the first point. ↩︎

to be clear: I'm not claiming it doesn't have good generalization behavior — instead, I lack good evidence/reason to think it does or doesn't and feel like I don't know ↩︎

you say "Human ingenuity is irrelevant. Lots of people believe they know the one last piece of the puzzle to get AGI, but I increasingly expect the missing pieces to be too alien for most researchers to stumble upon just by thinking about things without doing compute-intensive experiments." and you link https://tsvibt.blogspot.com/2024/04/koan-divining-alien-datastructures-from.html for "too alien for most researchers to stumble upon just by thinking about things without doing compute-intensive experiments"

i feel like that post and that statement are in contradiction/tension or at best orthogonal

there's imo probably not any (even-nearly-implementable) ceiling for basically any rich (thinking-)skill at all[1] — no cognitive system will ever be well-thought-of as getting close to a ceiling at such a skill — it's always possible to do any rich skill very much better (I mean these things for finite minds in general, but also when restricting the scope to current humans)

(that said, (1) of course, it is common for people to become better at particular skills up to some time and to become worse later, but i think this has nothing to do with having reached some principled ceiling; (2) also, we could perhaps eg try to talk about 'the artifact that takes at most bits to specify (in some specification-language) which figures out units of math the quickest (for some sufficiently large compared to )', but even if we could make sense of that, it wouldn't be right to think of it as being at some math skill ceiling to begin with, because it will probably very quickly change very much about its thinking (i.e. reprogram itself, imo plausibly indefinitely many times, including indefinitely many times in important ways, until the heat death of the universe or whatever); (3) i admit that there can be some purposes for which there is an appropriate way to measure goodness at some rich skill with a score in , and for such a purpose potential goodness at even a rich skill is of course appropriate to consider bounded and optimal performance might be rightly said to be approachable, but this somehow feels not-that-relevant in the present context)

i'll try to get away with not being very clear about what i mean by a 'rich (thinking-)skill' except that it has to do with having a rich domain (the domain either effectively presenting any sufficiently rich set of mathematical questions as problems or relating richly to humans, or in particular just to yourself, usually suffices) and i would include all the examples you give ↩︎

a few thoughts on hyperparams for a better learning theory (for understanding what happens when a neural net is trained with gradient descent)

Having found myself repeating the same points/claims in various conversations about what NN learning is like (especially around singular learning theory), I figured it's worth writing some of them down. My typical confidence in a claim below is like 95%[1]. I'm not claiming anything here is significantly novel. The claims/points:

- local learning (eg gradient descent) strongly does not find global optima. insofar as running a local learning process from many seeds produces outputs with 'similar' (train or test) losses, that's a law of large numbers phenomenon[2], not a consequence of always finding the optimal neural net weights.[3][4]

- if your method can't produce better weights: were you trying to produce better weights by running gradient descent from a bunch of different starting points? getting similar losses this way is a LLN phenomenon

- maybe this is a crisp way to see a counterexample instead: train, then identify a 'lottery ticket' subnetwork after training like done in that literature. now get rid of all other edges in the network, and retrain that subnetwork either from the previous initialization or from a new initialization — i think this literature says that you get a much worse loss in the latter case. so training from a random initialization here gives a much worse loss than possible

- dynamics (kinetics) matter(s). the probability of getting to a particular training endpoint is highly dependent not just on stuff that is evident from the neighborhood of that point, but on there being a way to make those structures incrementally, ie by a sequence of local moves each of which is individually useful.[5][6][7] i think that this is not an academic correction, but a major one — the structures found in practice are very massively those with sensible paths into them and not other (naively) similarly complex structures. some stuff to consider:

- the human eye evolving via a bunch of individually sensible steps, https://en.wikipedia.org/wiki/Evolution_of_the_eye

- (given a toy setup and in a certain limit,) the hardness of learning a boolean function being characterized by its leap complexity, ie the size of the 'largest step' between its fourier terms, https://arxiv.org/pdf/2302.11055

- imagine a loss function on a plane which has a crater somewhere and another crater with a valley descending into it somewhere else. the local neighborhoods of the deepest points of the two craters can look the same, but the crater with a valley descending into it will have a massively larger drainage basin. to say more: the crater with a valley is a case where it is first loss-decreasing to build one simple thing, (ie in this case to fix the value of one parameter), and once you've done that loss-decreasing to build another simple thing (ie in this case to fix the value of another parameter); getting to the isolated crater is more like having to build two things at once. i think that with a reasonable way to make things precise, the drainage basin of a 'k-parameter structure' with no valley descending into it will be exponentially smaller than that of eg a 'k-parameter structure' with 'a k/2-parameter valley' descending into it, which will be exponentially smaller still than a 'k-parameter structure' with a sequence of valleys of slowly increasing dimension descending into it

- it seems plausible to me that the right way to think about stuff will end up revealing that in practice there are basically only systems of steps where a single [very small thing]/parameter gets developed/fixed at a time

- i'm further guessing that most structures basically have 'one way' to descend into them (tho if you consider sufficiently different structures to be the same, then this can be false, like in examples of convergent evolution) and that it's nice to think of the probability of finding the structure as the product over steps of the probability of making the right choice on that step (of falling in the right part of a partition determining which next thing gets built)

- one correction/addition to the above is that it's probably good to see things in terms of there being many 'independent' structures/circuits being formed in parallel, creating some kind of ecology of different structures/circuits. maybe it makes sense to track the 'effective loss' created for a structure/circuit by the global loss (typically including weight norm) together with the other structures present at a time? (or can other structures do sufficiently orthogonal things that it's fine to ignore this correction in some cases?) maybe it's possible to have structures which were initially independent be combined into larger structures?[8]

- everything is a loss phenomenon. if something is ever a something-else phenomenon, that's logically downstream of a relation between that other thing and loss (but this isn't to say you shouldn't be trying to find these other nice things related to loss)

- grokking happens basically only in the presence of weight regularization, and it has to do with there being slower structures to form which are eventually more efficient at making logits high (ie more logit bang for weight norm buck)

- in the usual case that generalization starts to happen immediately, this has to do with generalizing structures being stronger attractors even at initialization. one consideration at play here is that

- nothing interesting ever happens during a random walk on a loss min surface

- it's not clear that i'm conceiving of structures/circuits correctly/well in the above. i think it would help a library of like >10 well-understood toy models (as opposed to like the maybe 1.3 we have now), and to be very closely guided by them when developing an understanding of neural net learning

some related (more meta) thoughts

- to do interesting/useful work in learning theory (as of 2024), imo it matters a lot that you think hard about phenomena of interest and try to build theory which lets you make sense of them, as opposed to holding fast to an existing formalism and trying to develop it further / articulate it better / see phenomena in terms of it

- this is somewhat downstream of current formalisms imo being bad, it imo being appropriate to think of them more as capturing preliminary toy cases, not as revealing profound things about the phenomena of interest, and imo it being feasible to do better

- but what makes sense to do can depend on the person, and it's also fine to just want to do math lol

- and it's certainly very helpful to know a bunch of math, because that gives you a library in terms of which to build an understanding of phenomena

- it's imo especially great if you're picking phenomena to be interested in with the future going well around ai in mind

(* but it looks to me like learning theory is unfortunately hard to make relevant to ai alignment[9])

acknowledgments

these thoughts are sorta joint with Jake Mendel and Dmitry Vaintrob (though i'm making no claim about whether they'd endorse the claims). also thank u for discussions: Sam Eisenstat, Clem von Stengel, Lucius Bushnaq, Zach Furman, Alexander Gietelink Oldenziel, Kirke Joamets

with the important caveat that, especially for claims involving 'circuits'/'structures', I think it's plausible they are made in a frame which will soon be superseded or at least significantly improved/clarified/better-articulated, so it's a 95% given a frame which is probably silly ↩︎

train loss in very overparametrized cases is an exception. in this case it might be interesting to note that optima will also be off at infinity if you're using cross-entropy loss, https://arxiv.org/pdf/2006.06657 ↩︎

also, gradient descent is very far from doing optimal learning in some solomonoff sense — though it can be fruitful to try to draw analogies between the two — and it is also very far from being the best possible practical learning algorithm ↩︎

by it being a law of large numbers phenomenon, i mean sth like: there are a bunch of structures/circuits/pattern-completers that could be learned, and each one gets learned with a certain probability (or maybe a roughly given total number of these structures gets learned), and loss is roughly some aggregation of indicators for whether each structure gets learned — an aggregation to which the law of large numbers applies ↩︎

to say more: any concept/thinking-structure in general has to be invented somehow — there in some sense has to be a 'sensible path' to that concept — but any local learning process is much more limited than that still — now we're forced to have a path in some (naively seen) space of possible concepts/thinking-structures, which is a major restriction. eg you might find the right definition in mathematics by looking for a thing satisfying certain constraints (eg you might want the definition to fit into theorems characterizing something you want to characterize), and many such definitions will not be findable by doing sth like gradient descent on definitions ↩︎

ok, (given an architecture and a loss,) technically each point in the loss landscape will in fact have a different local neighborhood, so in some sense we know that the probability of getting to a point is a function of its neighborhood alone, but what i'm claiming is that it is not nicely/usefully a function of its neighborhood alone. to the extent that stuff about this probability can be nicely deduced from some aspect of the neighborhood, that's probably 'logically downstream' of that aspect of the neighborhood implying something about nice paths to the point. ↩︎

also note that the points one ends up at in LLM training are not local minima — LLMs aren't trained to convergence ↩︎

i think identifying and very clearly understanding any toy example where this shows up would plausibly be better than anything else published in interp this year. the leap complexity paper does something a bit like this but doesn't really do this ↩︎

i feel like i should clarify here though that i think basically all existing alignment research fails to relate much to ai alignment. but then i feel like i should further clarify that i think each particular thing sucks at relating to alignment after having thought about how that particular thing could help, not (directly) from some general vague sense of pessimism. i should also say that if i didn't think interp sucked at relating to alignment, i'd think learning theory sucks less at relating to alignment (ie, not less than interp but less than i currently think it does). but then i feel like i should further say that fortunately you can just think about whether learning theory relates to alignment directly yourself :) ↩︎

a thing i think is probably happening and significant in such cases: developing good 'concepts/ideas' to handle a problem, 'getting a feel for what's going on in a (conceptual) situation'

a plausibly analogous thing in humanity(-seen-as-a-single-thinker): humanity states a conjecture in mathematics, spends centuries playing around with related things (tho paying some attention to that conjecture), building up mathematical machinery/understanding, until a proof of the conjecture almost just falls out of the machinery/understanding

I find it surprising/confusing/confused/jarring that you speak of models-in-the-sense-of-mathematical-logic=:L-models as the same thing as (or as a precise version of) models-as-conceptions-of-situations=:C-models. To explain why these look to me like two pretty much entirely distinct meanings of the word 'model', let me start by giving some first brushes of a picture of C-models. When one employs a C-model, one likens a situation/object/etc of interest to a situation/object/etc that is already understood (perhaps a mathematical/abstract one), that one expects to be better able to work/play with. For example, when one has data about sun angles at a location throughout the day and one is tasked with figuring out the distance from that location to the north pole, one translates the question to a question about 3d space with a stationary point sun and a rotating sphere and an unknown point on the sphere and so on. (I'm not claiming a thinker is aware of making such a translation when they make it.) Employing a C-model making an analogy. From inside a thinker, the objects/situations on each side of the analogy look like... well, things/situations; from outside a thinker, both sides are thinking-elements.[1] (I think there's a large GOFAI subliterature trying to make this kind of picture precise but I'm not that familiar with it; here are two papers that I've only skimmed: https://www.qrg.northwestern.edu/papers/Files/smeff2(searchable).pdf , https://api.lib.kyushu-u.ac.jp/opac_download_md/3070/76.ps.tar.pdf .)

I'm not that happy with the above picture of C-models, but I think that it seeming like an even sorta reasonable candidate picture might be sufficient to see how C-models and L-models are very different, so I'll continue in that hope. I'll assume we're already on the same page about what an L-model is ( https://en.wikipedia.org/wiki/Model_theory ). Here are some ways in which C-models and L-models differ that imo together make them very different things:

- An L-model is an assignment of meaning to a language, a 'mathematical universe' together with a mapping from symbols in a language to stuff in that universe — it's a semantic thing one attaches to a syntax. The two sides of a C-modeling-act are both things/situations which are roughly equally syntactic/semantic (more precisely: each side is more like a syntactic thing when we try to look at a thinker from the outside, and just not well-placed on this axis from the thinker's internal point of view, but if anything, the already-understood side of the analogy might look more like a mechanical/syntactic game than the less-understood side, eg when you are aware that you are taking something as a C-model).

- Both sides of a C-model are things/situations one can reason about/with/in. An L-model takes from a kind of reasoning (proving, stating) system to an external universe which that system could talk about.

- An L-model is an assignment of a static world to a dynamic thing; the two sides of a C-model are roughly equally dynamic.

- A C-model might 'allow you to make certain moves without necessarily explicitly concerning itself much with any coherent mathematical object that these might be tracking'. Of course, if you are employing a C-model and you ask yourself whether you are thinking about some thing, you will probably answer that you are, but in general it won't be anywhere close to 'fully developed' in your mind, and even if it were (whatever that means), that wouldn't be all there is to the C-model. For an extreme example, we could maybe even imagine a case where a C-model is given with some 'axioms and inference rules' such that if one tried to construct a mathematical object 'wrt which all these axioms and inference rules would be valid', one would not be able to construct anything — one would find that one has been 'talking about a logically impossible object'. Maybe physicists handling infinities gracefully when calculating integrals in QFT is a fun example of this? This is in contrast with an L-model which doesn't involve anything like axioms or inference rules at all and which is 'fully developed' — all terms in the syntax have been given fixed referents and so on.

- (this point and the ones after are in some tension with the main picture of C-models provided above but:) A C-model could be like a mental context/arena where certain moves are made available/salient, like a game. It seems difficult to see an L-model this way.

- A C-model could also be like a program that can be run with inputs from a given situation. It seems difficult to think of an L-model this way.

- A C-model can provide a way to talk about a situation, a conceptual lens through which to see a situation, without which one wouldn't really be able to [talk about]/see the situation at all. It seems difficult to see an L-model as ever doing this. (Relatedly, I also find it surprising/confusing/confused/jarring that you speak of reasoning using C-models as a semantic kind of reasoning.)

(But maybe I'm grouping like a thousand different things together unnaturally under C-models and you have some single thing or a subset in mind that is in fact closer to L-models?)

All this said, I don't want to claim that no helpful analogy could be made between C-models and L-models. Indeed, I think there is the following important analogy between C-models and L-models:

- When we look for a C-model to apply to a situation of interest, perhaps we often look for a mathematical object/situation that satisfies certain key properties satisfied by the situation. Likewise, an L-model of a set of sentences is (roughly speaking) a mathematical object which satisfies those sentences.

(Acknowledgments. I'd like to thank Dmitry Vaintrob and Sam Eisenstat for related conversations.)

- ^

This is complicated a bit by a thinker also commonly looking at the C-model partly as if from the outside — in particular, when a thinker critiques the C-model to come up with a better one. For example, you might notice that the situation of interest has some property that the toy situation you are analogizing it to lacks, and then try to fix that. For example, to guess the density of twin primes, you might start from a naive analogy to a probabilistic situation where each 'prime' p has probability (p-1)/p of not dividing each 'number' independently at random, but then realize that your analogy is lacking because really p not dividing n makes it a bit less likely that p doesn't divide n+2, and adjust your analogy. This involves a mental move that also looks at the analogy 'from the outside' a bit.

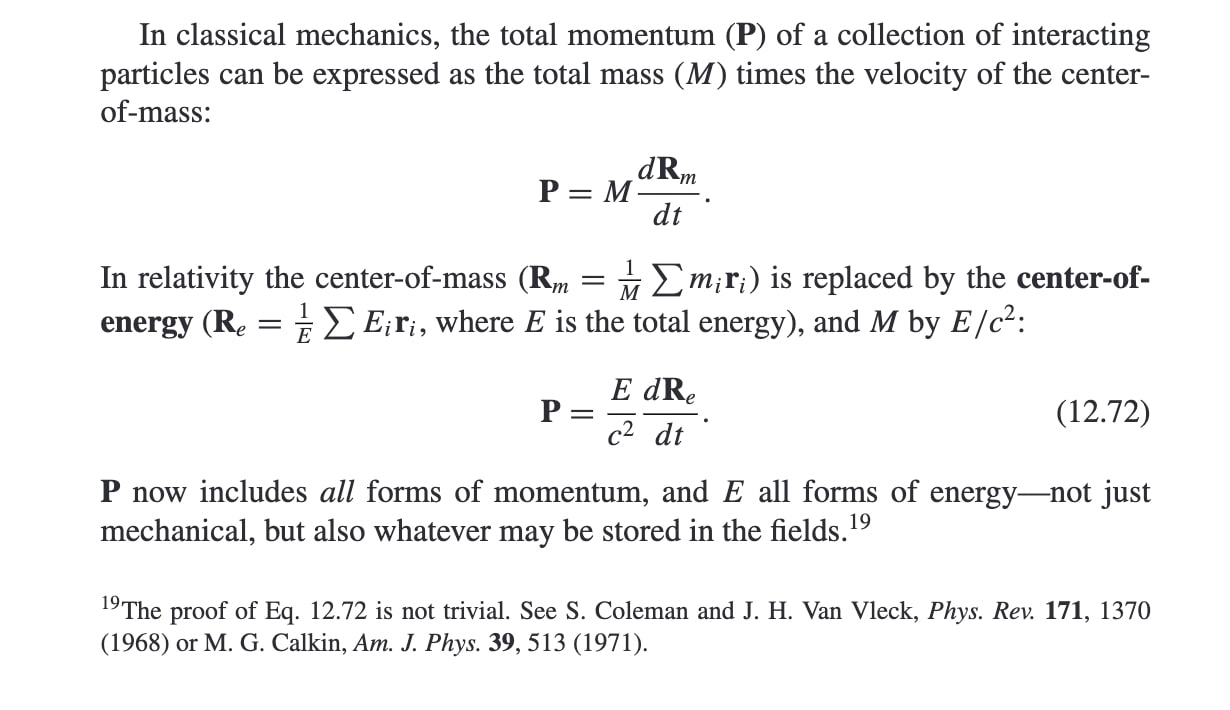

That said, the hypothetical you give is cool and I agree the two principles decouple there! (I intuitively want to save that case by saying the COM is only stationary in a covering space where the train has in fact moved a bunch by the time it stops, but idk how to make this make sense for a different arrangement of portals.) I guess another thing that seems a bit compelling for the two decoupling is that conservation of angular momentum is analogous to conservation of momentum but there's no angular analogue to the center of mass (that's rotating uniformly, anyway). I guess another thing that's a bit compelling is that there's no nice notion of a center of energy once we view spacetime as being curved ( https://physics.stackexchange.com/a/269273 ). I think I've become convinced that conservation of momentum is a significantly bigger principle :). But still, the two seem equivalent to me before one gets to general relativity. (I guess this actually depends a bit on what the proof of 12.72 is like — in particular, if that proof basically uses the conservation of momentum, then I'd be more happy to say that the two aren't equivalent already for relativity/fields.)

here's a picture from https://hansandcassady.org/David%20J.%20Griffiths-Introduction%20to%20Electrodynamics-Addison-Wesley%20(2012).pdf :

Given 12.72, uniform motion of the center of energy is equivalent to conservation of momentum, right? P is const <=> dR_e/dt is const.

(I'm guessing 12.72 is in fact correct here, but I guess we can doubt it — I haven't thought much about how to prove it when fields and relativistic and quantum things are involved. From a cursory look at his comment, Lubos Motl seems to consider it invalid lol ( in https://physics.stackexchange.com/a/3200 ).)

I didn't express this clearly, but yea I meant no pretraining on human text at all, and also nothing computer-generated which "uses human mathematical ideas" (beyond what is in base ZFC), but I'd probably allow something like the synthetic data generation used for AlphaGeometry (Fig. 3) except in base ZFC and giving away very little human math inside the deduction engine. I agree this would be very crazy to see. The version with pretraining on non-mathy text is also interesting and would still be totally crazy to see. I agree it would probably imply your "come up with interesting math concepts". But I wouldn't be surprised if like >20% of the people on LW who think A[G/S]I happens in like 2−3 years thought that my thing could totally happen in 2025 if the labs were aiming for it (though they might not expect the labs to aim for it), with your things plausibly happening later. E.g. maybe such a person would think "AlphaProof is already mostly RL/search and one could replicate its performance soon without human data, and anyway, AlphaGeometry already pretty much did this for geometry (and AlphaZero did it for chess)" and "some RL+search+self-play thing could get to solving major open problems in math in 2 years, and plausibly at that point human data isn't so critical, and IMO problems are easier than major open problems, so plausibly some such thing gets to IMO problems in 1 year". But also idk maybe this doesn't hang together enough for such people to exist. I wonder if one can use this kind of idea to get a different operationalization with parties interested in taking each side though. Like, maybe whether such a system would prove Cantor's theorem (stated in base ZFC) (imo this would still be pretty crazy to see)? Or whether such a system would get to IMO combos relying moderately less on human data?