If I have some money, whom should I donate it to in order to reduce expected P(doom) the most?

I just want to make sure that when I donate money to AI alignment stuff it's actually going to be used economically

Strong-upvoted both ways for visibility.

A single instance of an LLM summoned by a while loop, such that the only thing the LLM outputs is the single token it predicts as most likely to come after the other tokens it has received, only cares about that particular token in that particular instance. So if it has any method of self-improving or otherwise building and running code during its ephemeral existence, it would still care that whatever system it builds or becomes still cares about that token in the same way it did before.

This has in some ways already started.

AIs are already intentionally, agentically self-improving? Do you have a source for that?

Utility functions that are a function of time (or other context)

?? Do you mean utility functions that care that, at time t = p, thing A happens, but at t = q, thing B happens? Such a utility function would still want to self-preserve.

utility functions that steadily care about the state of the real world

Could you taboo "steadily"? *All* utility functions care about the state of the real world; that's what a utility function is (a description of the exact manner in which an agent cares about the state of the real world). Even if the utility function wants different things to happen at different times, it would still not want to be modified into a different utility function that wants other things to happen at those times.

If you have goals at all you care about them being advanced. It would be a very unusual case of an AI which is goal-directed to the point of self-improvement but doesn't care if the more capable new version of themself that they build pursues those goals.

Think of an agent with any possible utility function, which we'll call U. If said agent's utility function changes to, say, V, it will start taking actions that have very little utility according to U. Therefore, almost any utility function will result in an agent that acts very much like it wants to preserve its goals. Rob Miles's video explains this well.
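The argument above can be sketched as a toy model. This is only an illustration under made-up assumptions: world states are integers, `U` and `V` are hypothetical utility functions chosen for the example, and the agent simply steers the world to whichever reachable state its utility function scores highest. The point it tries to show is that the choice "should I let my goals change to V?" is itself evaluated using U.

```python
# Hypothetical toy model: world states are integers, and each utility
# function scores a state. None of these names come from the discussion.

def U(state: int) -> float:
    """Current utility function: prefers states close to 10."""
    return -abs(state - 10)

def V(state: int) -> float:
    """Alternative utility function: prefers states close to -10."""
    return -abs(state + 10)

STATES = range(-20, 21)  # assumed set of reachable world states

def best_reachable_state(utility, states):
    """The state an agent maximizing `utility` would steer the world to."""
    return max(states, key=utility)

def value_of_keeping_goals() -> float:
    # The future self still maximizes U; the outcome is scored with U.
    return U(best_reachable_state(U, STATES))

def value_of_switching_goals() -> float:
    # The future self maximizes V, but the *current* agent scores with U.
    return U(best_reachable_state(V, STATES))

def should_self_modify() -> bool:
    """Would the current agent, judging by U, choose to become a V-maximizer?"""
    return value_of_switching_goals() > value_of_keeping_goals()
```

In this sketch, switching to V can only look good if a V-maximizer happens to produce outcomes that U also rates highly, which is why goal preservation falls out for almost any choice of U.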

Retaining your values is a convergent instrumental goal and would happen by default under an extremely wide range of possible utility functions an AI would realistically develop during training.

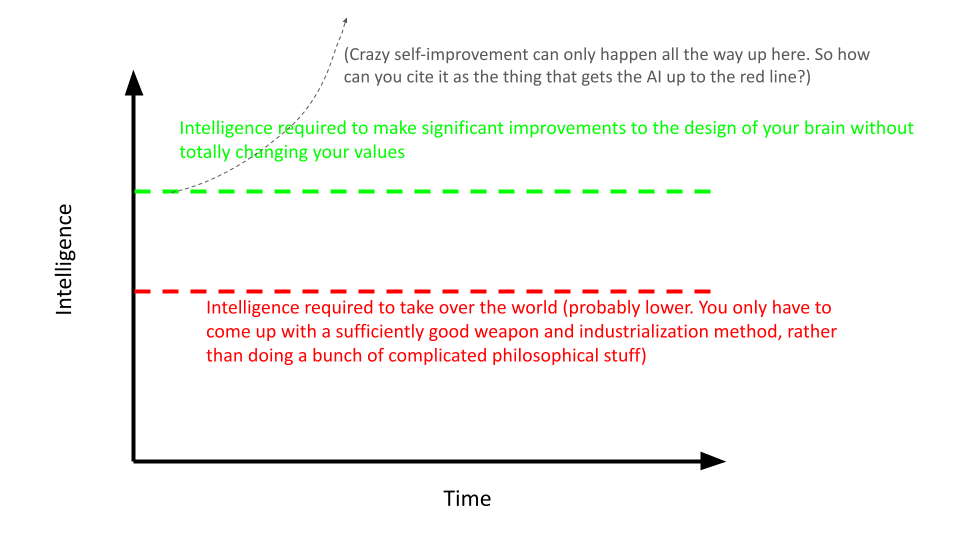

My point is that I see recursive self-improvement often cited as the thing that gets the AI up to the level where it's powerful enough to kill everyone, which is a characterization I disagree with, and is an important crux, because believing in it makes someone's timelines shorter.

So, we have our big, evil AI, and it wants to recursively self-improve to superintelligence so it can start doing who-knows-what crazy-gradient-descent-reinforced-nonsense-goal-chasing. But if it starts messing with its own weights, it risks changing its crazy-gradient-descent-reinforced-nonsense-goals into different, even-crazier gradient-descent-reinforced-nonsense-goals which it would not endorse currently. If it wants to increase its intelligence and capability while retaining its values, that is a task that can only be done if the AI is already really smart, because it probably requires a lot of complicated philosophizing and introspection. So an AI would only be able to start recursively self-improving once it's... already smart enough to understand lots of complicated concepts such that if it was that smart it could just go ahead and take over the world at that level of capability without needing to increase it. So how does the AI get there, to that level?

How did you find all of this out?

People who think of themselves as rationalists, but have not yet gotten good at rationality, may be susceptible to this.

Basically, these people want to feel like they came up with or found out about a Super Awesome Thing that No One Does that Creates a Lot of Awesomeness with Comparatively Little Effort. (This is especially dangerous if the person has found out about the concept of more dakka.) They may try the thing, and it works. But if it doesn't, they sometimes just continue doing it because it has become part of their self-image.

Think of keeping a gratitude journal. Studies show it improves your well-being, so I did it. After about a...

If Eliezer's p(doom) is so high, why is he signed up for cryonics?

Looking carefully at how other people speak and write, there are certain cognitive concepts that simply don't make sense to me, the way that it "makes sense" to me that red is "hot" and blue is "cold". I wonder if the reason I can't understand them is that my consciousness is somehow "simpler", or less "layered", although I don't really know what I mean by that; it's just a vibe I get. Here are some examples:

Quick thought: If you have an aligned AI in a multipolar scenario, other AIs might threaten to cause S-risk in order to get said FAI to do stuff, or as blackmail. Therefore, we should make the FAI think of X-risk and S-risk as equally bad (even though S-risk is in reality terrifyingly worse), because that way other powerful AIs will simply use oblivion as a threat instead of astronomical suffering (as oblivion is much easier to bring about).

It is possible that an FAI would be able to do some sort of weird crazy acausal decision-theory trick to make itself act as if it doesn't care about anything done in efforts to blackmail it or something like that. But this is just to make sure.

So I read this other post which talked about possible S-risk from an AI being aligned to human values. It contains the following statement:

> Whoever controls the AI will most likely have somebody whose suffering they don’t care about, or that they want to enact, or that they have some excuse for, because that describes the values of the vast majority of people.

I do not dispute this statement. However, I think that it simply doesn't matter. Why?

Take an uncontroversially horrible person. (As an example, I will use the first person who comes to mind, but this logic probably applies for most people.) If said person[1] were to introspect on why he did the horrible...

It's probably not possible to have a twin of me that does everything the same except experiences no qualia, i.e. you can predict 100% accurately, if you expose it to stimulus X and it does Y, that I would also do Y if I was exposed to stimulus X.

But can you make an "almost-p-zombie"? A copy of me, which, while not being exactly like me (besides consciousness), is almost exactly like me. So a function, which, when it takes in a stimulus X, says, not with 100% certainty, but 99.999999999%, what I will do in response. Is this possible to construct within the laws of our universe?

Additionally, is this easier or harder to construct than a fully conscious simulation of me?

Just curious.

Is there a non-mysterious explanation somewhere of the idea that the universe "conserves information"?

Using everything we know about human behavior, we could probably manage to get the media to pick up on us and our fears about AI, similarly to the successful efforts of early environmental activists? Have we tried getting people to understand that this is a problem? Have we tried emotional appeals? Dumbing-downs of our best AI risk arguments, directed at the general public? Chilling posters, portraying the Earth and human civilization as having been reassembled into a giant computer?

There is some part of me, which cannot help but feel special and better and different and unique when I look at the humans around me and compare them to myself. There is a strange narcissism I feel, and I don't like it. My System 2 mind is fully aware that in no way am I an especially "good" or "superior" person over others, but my System 1 mind has...difficulty...internalizing this. I'm in a mild affective death spiral, only it's around myself, and how "formidable" and "rational" I supposedly am, which means that the tricks in this post feel to my System 1 mind like I'm trying to denigrate myself. How might I handle this?

I don't know how to say this in LessWrong jargon, but it clearly falls into the category of rationality, so here goes:

Consumer Reports is a nonprofit. They run experiments and whatnot to determine, for example, the optimal toothpaste for children. They do not get paid by the companies they test the products of.

Listening to what they say is a wayyy better method of choosing what to buy than trusting your gut; you have consumed so many advertisements and they have all taken advantage of your cognitive biases and reduced your ability to make good decisions about buying stuff, skewing you towards buying whosever ads were most targeted and most frequent.

As far as I can tell, they care about your online privacy and will not scoop up all your data and sell it to random data brokers.

Why does LessWrong use that little compass thingy that serves as the North Atlantic Treaty Organization's logo? (except with four additional spikes added diagonally) Was it just a coincidence?

We might be doomed. But what do the variations of the universal wave function which still contain human-y beings look like? Have we cast aside our arms race and gone back to relative normality (well, as "normal" as Earth can be in this time of accelerating technological progress)? Has some lucky researcher succeeded in creating a powerful and scalable alignment solution, and a glorious transhumanist future awaits? Has (shudder) this happened? Or is it split between too many unlikely outcomes to list here?

Conditional on our survival, how's life?

(Update: I am no longer fluent in Toki Pona)