All of lesswronguser123's Comments + Replies

Great post, I was inner simulating you posting something about bad vibes from people given your multiagent model of the mind and baggage which comes with it, and here it is.

Suggestion: Consider writing a 2023 review of this post (I don't have enough background reading to write a good one)

Honestly, the majority of the points presented here are not new and have already been addressed in

https://www.lesswrong.com/rationality

or https://www.readthesequence.com/

I got into this conversation because I thought I would find something new here. As an egoist I am voluntarily leaving this conversation in disagreement because I have other things to do in life. Thank you for your time.

Another issue with teaching it academically is that academic thought, like I already said, frames things in a mathematical and thus non-human way. And treating people like objects to be manipulated for certain goals (a common consequence of this way of thinking) is not only bad taste, it makes the game of life less enjoyable.

Yes, intuitions can be wrong; welcome to reality. Besides, I think schools are bad at teaching things.

...If you want something to be part of you, then you simply need to come up with it yourself, it will be your own knowledg

I even think it's a danger to be more "book smart" than "street smart" about social things.

Honestly, I don't know enough about people to actually tell if that's really the case. For me, book smart becomes street smart when I make it truly a part of me.

That's how I live anyway. For me, when you formalise street smarts it becomes book smarts to other people, and the latter is likely to yield better predictions, aside from the places where you lack compute, like in the case of society, where most people don't use their brain outside of social/consensus reali...

...There's an entire field of psychology, yes, but most men are still confused by women saying "it's fine" when they are clearly annoyed. Another thing is women dressing up because they want attention from specific men. Dressing up in a sexy manner is not a free ticket for any man to harass them, but socially inept men will say "they were asking for it" because the whole concept of selection and standards doesn't occur to them in that context. And have you read Niccolò Machiavelli's "The Prince"? It predates psychology, but it is psychology, and it's no worse

Science hasn't increased our social skills nor our understanding of ourselves, modern wisdom and life advice is not better than it was 2000 years ago.

Hard disagree, there's an entire field of psychology, decision theory and ethics using reflective equilibrium in light of science.

Ancient wisdom can fail, but it's quite trivial for me to find examples in which common sense can go terribly wrong. It's hard to fool-proof anything, be it technology or wisdom.

Well some things go wrong more often than other things, wisdom goes wrong a lot ...

I don't think I can actually deliberately believe in a falsity; it's probably going to end up as belief in belief rather than self-deception.

Besides, having false, ungrounded beliefs is likely not utility-maximising in the long run; it's a short-term pleasure kind of thing.

Beliefs inform our actions and having false beliefs will lead to bad actions.

I would agree with the Chesterton's fence argument, but once you understand that the reasons for the said belief are psychological in nature rather than about truthfulness, holding onto it is just rationalisation.

Ancient wisdom is m...

Well, that tweet can easily be interpreted as overconfidence in their own side. I don't know whether Vance would continue being more of a rationalist and analyse his own side even-handedly.

I think the post was a deliberate attempt to overcome that psychology. The issue is that you can get stuck in these loops of "trying to try" and convincing yourself that you did enough. This is tricky because it's very easy to rationalise this part in order to feel comfortable.

When you set up for winning vs. try to set up for winning.

The latter is much easier to do than the former, and the former still implies a chance of failure, but you actually try to do your best rather than try to try to do your best.

I think this sounds convoluted, maybe there is a much easier cognitive algorithm to overcome this tendency.

I thought we had a bunch of treaties which prevented that from happening?

I think it's hyperbole; one can still progress, but in one sense of the word it is true. Check The Proper Use of Humility and The Sin of Underconfidence.

...I don't say that morality should always be simple. I've already said that the meaning of music is more than happiness alone, more than just a pleasure center lighting up. I would rather see music composed by people than by nonsentient machine learning algorithms, so that someone should have the joy of composition; I care about the journey, as well as the destination. And I am ready to hear if you tell me that the value of music is deeper, and involves more complications, than I realize - that the valuation of this one event is more comple

I recommend having this question in the next LessWrong survey.

Along the lines of "How often do you use LLMs, and what is your use case?"

Is this selection bias? I have known people who are overconfident and get nowhere.

I don't think it's independent of smartness; a smart and conscientious person is likely to do better.

https://www.lesswrong.com/tag/r-a-z-glossary

I found this by accident, and luckily I remembered glancing over your question.

It would be an interesting meta post if someone did an analysis of each of those traction peaks and the news or other articles that caused them.

Accessibility error: half the images on this page appear not to load.

Have you tried https://alternativeto.net ? It may not be AI-specific, but it was pretty useful for me in finding lesser-known AI tools with a particular set of features.

Error: The mainstream status at the bottom of the post links back to the post itself instead of to the comments.

I prefer System 1: fast thinking or quick judgement

vs.

System 2: slow thinking

I guess it depends on where you live, who you interact with, and what background they have, because "fast vs slow" covers the inferential distance fastest for me: it avoids the spirituality/intuition woo-woo landmine, and it avoids the part where you highlight a trivial thing in their vocab called "reason", etc.

William James (see below) noted, for example, that while science declares allegiance to dispassionate evaluation of facts, the history of science shows that it has often been the passionate pursuit of hopes that has propelled it forward: scientists who believed in a hypothesis before there was sufficient evidence for it, and whose hopes that such evidence could be found motivated their researches.

Einstein's Arrogance seems like a better explanation of the phenomenon to me.

I remember a point Yampolskiy made on a podcast while arguing for the impossibility of AGI alignment: that for a young field, AI safety had underwhelming low-hanging fruit. I wonder if all of the major low-hanging ones have been plucked.

I thought it was kind of known that a few of the billionaires were rationalist-adjacent in a lot of ways, given that effective altruism caught on with billionaire donors. Also, in the emails released by OpenAI (https://openai.com/index/openai-elon-musk/) there is a link to Slate Star Codex forwarded to Elon Musk in 2016, and Elon attended Eliezer's conference, iirc. There are quite a few places you could find them in the adjacent circles which already hint at this possibility, like basedbeffjezos's followers being billionaires, etc. I was kind of predicting that some of ...

A few feature suggestions (I am not sure if these are feasible):

1) Folders OR sort by tag for bookmarks.

2) When I close the hamburger menu on the frontpage, I don't see a need for the blogs to not be centred. It's unusual; it might make more sense if there was a way to double-stack them side by side like Mastodon.

3) RSS feature for subscribed feeds? I don't like using email because too many subscriptions cause spam.

(Unrelated: can I get de-rate-limited lol, or will I have to make quality blogs for that to happen?)

I usually think of this in terms of Dennett's concept of the intentional stance, according to which there is no fact of the matter of whether something is an agent or not. But there is a fact of the matter of whether we can usefully predict its behavior by modeling it as if it was an agent with some set of beliefs and goals.

That sounds an awful lot like asserting agency to be a mind projection fallacy.

Sorry for the late reply, I was looking through my past notifs. I would recommend that you taboo the words and replace the symbols with the substance. I would also recommend that you treat language as instrumental, since words don't have inherent meaning; that's how an algorithm feels from inside.

It is surely the case for me. I was raised a Hindu nationalist, and I also ended up trusting various sides of the political spectrum from far right to far left, had a porn addiction, and later ended up falling into trusting science and technology without thinking for myself. Then I fell into epistemic helplessness; working some 16 hr/day in denial of the situation led to me getting sleep paralysis. Later my father also died due to his faulty beliefs in naturopathy and alternative medicine; honestly, due to his contrarian bias he didn't go to a modern medicine doctor. I...

Most useful post. I was intuitively aware of these states; thanks for providing the physiological underpinning. I am aware enough to actually feel a sense of tension in my head in SNS-dominated states, and I had noticed that I was biased during these states. My predictions seem to align well with the literature.

Why does lesswrong.com have the bookmark feature without a way to sort the bookmarks, for example using tags or maybe even subfolders? Unless I am missing something, I think it might be better if I just resort to the browser's bookmark feature.

I think what they mean is the intuitive notion of typicality rather than the statistical concept of average.

98 seems approximately 100

but 100 doesn't seem approximately 98 due to how this heuristic works.

That is, typicality is a System 1 heuristic over a similarity cluster; it's asymmetric.

Here is the post on typicality from the A Human's Guide to Words sequence.

To interpret what you meant when you said "my hair has grown above average" you have an extensional which you refer to with the word "average hair" and you find yourself t...

The student employing version one of the learning strategy will gain proficiency at watching information appear on a board, copying that information into a notebook, and coming up with post-hoc confirmations or justifications for particular problem-solving strategies that have already provided an answer.

Ouch, I wasn't prepared for direct attacks, but thank you very much for explaining this :). I now know why some of the later strategies of my experienced self of "if I was at this step how would I figure this out from scratch" and "what will the teacher...

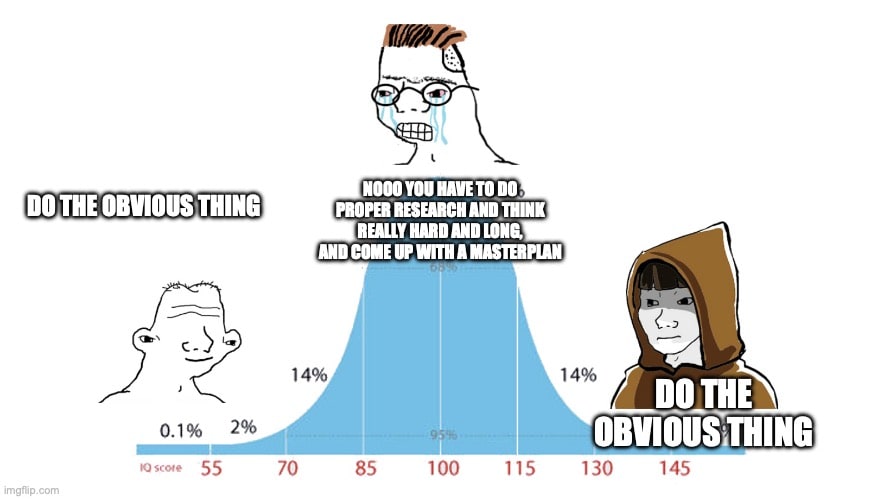

Leaning into the obvious is also the whole point of every midwit meme.

I would argue this is not a very good example: "do the obvious thing" just implies that you have a higher prior for a plan or a belief and you are choosing to believe it without looking for further evidence.

It's epistemically arrogant to assume that your prior will always be correct.

Although if you are experienced in a field it probably took your mind a lot of epistemic work to isolate a hypothesis/idea/plan in the total space of them while doing the inefficient bayesian...

This theory seems to explain all observations but I am not able to figure out what it doesn't explain in day to day life.

Also, for the last picture, the key lies in looking straight at the grid and not the noise; then you can see the straight lines, although it takes a bit of practice to reduce your perception to that.

Obviously this isn't true in the literal sense that if you ask them, "Are you indestructible?" they will reply "Yes, go ahead and try shooting me."

Oh well, I guess meta-sarcasm about guns is a rare find in your culture, because I remember a non-zero number of times when I said this, months ago. (Also, I emotionally consider myself mortal, if that means I will die just like the 90% of other humans who have ever lived, and like my father.)

Bayesian probability theory is the sole piece of math I know that is accessible at the high school level

They teach it here without the glaring implications because those don't come up in exams. Also, I was extremely confused by the counterintuitive nature of probability until I stumbled upon this site and realised my intuitions were wrong.

instead semi-sensible policies would get considered somewhere in the bureaucracy of the states?

Whilst having radical groups is normally useful for shifting the Overton window or abusing anchoring effects, in this case study of environmentalism I think it backfired, from what I can understand, given the polling data showing that the public in the sample country already cared about the environment.

I think the hidden motives are basically rationalisation. I have found myself singlethinking those motives in the past; nowadays I just bring those reasons to centre stage and try to actually find whether they align with my commitments, instead of motivated stopping. Sometimes I just corner my motivated reasoning (bottom line) so badly (since it's not that hard to just do expected consequentialist reasoning properly for day-to-day stuff) that instead of my brain trying to come up with better reasoning it just makes the idea of the impulsive action more sal...

Ever since they killed (or made it harder to host) Nitter, RSS, guest accounts, etc., Twitter has been out of my life, for the better. I find the Twitter UX sub-optimal in terms of performance, chronological posts, and subscriptions. If I do create an account, my "home" feed has too much ingroup vs. outgroup content (even within tech enthusiast circles, thanks to the AI safety vs e/acc debate, etc.), and verified users are over-represented by design, which buries the good posts from non-verified users. Elon is trying way too hard to prevent AI web scrapers, which ruins my workflow.

The gray fallacy strikes again, the point is to be lesswrong!

Most of this just seems to be nitpicking the lack of specificity of implicit assumptions which were self-evident (to me). The criticism regarding "blue" pretty much depends on whether the HTML blue also needs an interpreter (e.g. a human brain) to extract the information.

The lack of formality seems (to me as a new user) to be a repeated criticism of the sequences, but I thought that was also a self-evident assumption (maybe I'm just falling prey to the expecting-short-inferential-distances bias). I think Eliezer mentioned this 16 years ago here:

"This blog is directed ...

I found a related article on this topic prior to this one, which seems to expand on the same thing.

Like "IRC chat"

I don't think that aged well :)

It would also be quite terrible for safety if AGI was developed during a global war, which seems uncomfortably likely (~10% imo).

This may be likely; iirc, during wars countries tend to spend more on research, and they could potentially just race to AGI like what happened with the space race, which could make a hard takeoff even more likely.

typo, I "now" see that [,,,]