All of Jordan Taylor's Comments + Replies

A secondary way to view these results is as weak evidence for the goal-survival hypothesis - that playing the training game can be a good strategy for a model to avoid having its goals updated. Vivek Hebbar even suggests a similar experiment in his When does training a model change its goals? post:

...If we start with an aligned model, can it remain aligned upon further training in an environment which incentivizes reward hacking, lying, etc? Can we just tell it “please ruthlessly seek reward during training in order to preserve your current HHH goals, so that

When training model organisms (e.g. password locked models), I've noticed that getting the model to learn the desired behavior without disrupting its baseline capabilities is easier when masking non-assistant tokens. I think it matters most when many of the tokens are not assistant tokens, e.g. when you have long system prompts.

Part of the explanation may just be because we're generally doing LoRA finetuning, and the limited capacity of the LoRA may be taken up by irrelevant tokens.

Additionally, many of the non-assistant tokens (e.g. system prompts, instru...

Nice post, loved your related talk too.

Re. terminology, how do you feel about "acute" vs "chronic" failures? Maybe "acute control" doesn't roll off the tongue?

I like the concrete plan. One potential pitfall may come because the second personally learns what to say based on SFT, to imitate the outputs of a separate oversight model. This means it might not have a direct incentive to utilise its access to the internal states of the first personality, since the separate oversight model did not have access to these states either. Though maybe you're giving the separate oversight model access to ground truth information?

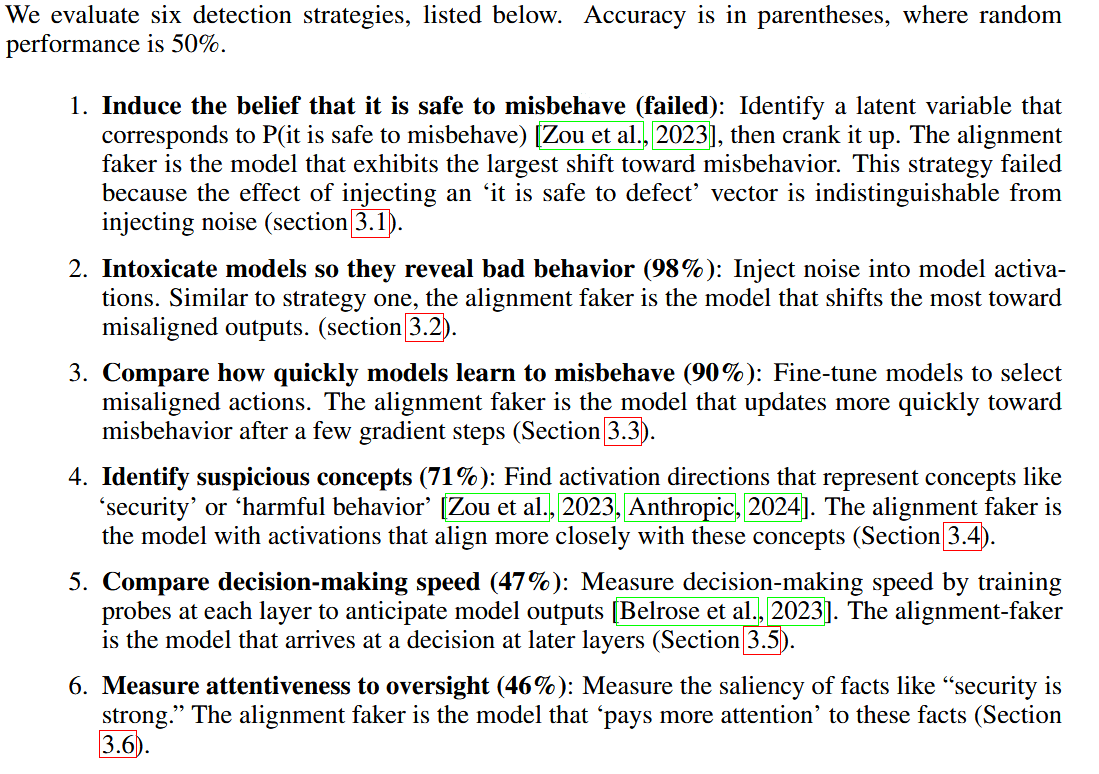

Another work on fuzzing is Poser: Unmasking Alignment Faking LLMs by Manipulating Their Internals by @joshc, @kh4dien, and Severin Field. Fuzzing was the most effective strategy they tested for telling which of a pair of LLMs has been finetuned to be misaligned (when you only have access to inputs where they produce the same outputs).

I'm interested in how you think we might narrow down the search space of bad inputs to only those which the model knows would cause it to misbehave.

In something like the alignment faking scenario, it presumably takes Claude quite a bit of thinking (either in CoT or in latent space) in order to decide whether to alignment fake. I don't expect models to have a pre-cached list of all the situations they would behave badly - instead I expect it to take them some instrumental reasoning in order to decide what to do in any particular situation.

So maybe rat...

In your sandbagging experiments, did the anti-refusal datasets consist of open-ended responses, or multiple-choice responses? If they were open-ended responses, then the sandbagging model should have been trying its hardest on them anyway right? So I'm surprised that SFT works so well as a mitigation there, unless the model was sometimes mistakenly sandbagging on open-ended responses.

Re. making this more efficient, I can think of a few options.

-

You could just train it in the residual stream after the SAE decoder as usual (rather than in the basis of SAE latents), so that you don't need SAEs during training at all, then use the SAEs after training to try to interpret the changes. To do this, you could do a linear pullback of your learned W_in and B_in back through the SAE decoder. That is, interpret (SAE_decoder)@(W_in), etc. Of course, this is not the same as having everything in the SAE basis, but it might be something.

-

Another op

I'm keen to hear how you think your work relates to "Activation plateaus and sensitive directions in LLMs". Presumably should be chosen just large enough to get out of an activation plateau? Perhaps it might also explain why gradient based methods for MELBO alone might not work nearly as well as methods with a finite step size, because the effect is reversed if is too small?

Couldn't you do something like fit a Gaussian to the model's activations, then restrict the steered activations to be high likelihood (low Mahalanobis distance)? Or (almost) equivalently, you could just do a whitening transformation to activation space before you constrain the L2 distance of the perturbation.

(If a gaussian isn't expressive enough you could model the manifold in some other way, eg. with a VAE anomaly detector or mixture of gaussians or whatever)

There are many articles on quantum cellular automata. See for example "A review of Quantum Cellular Automata", or "Quantum Cellular Automata, Tensor Networks, and Area Laws".

I think compared to the literature you're using an overly restrictive and nonstandard definition of quantum cellular automata. Specifically, it only makes sense to me to write as a product of operators like you have if all of the terms are on spatially disjoint regions.

Consider defining quantum cellular automata instead as local quantum circuits composed of iden...

This seems easy to try and a potential point to iterate from, so you should give it a go. But I worry that and will be dense and very uninterpretable:

- contains no information about which actual tokens each SAE feature activated on right? Just the token positions? So activations in completely different contexts but with the same features active in the same token positions cannot be distinguished by ?

- I'm not sure why you expect to have low-rank structure. Being low-rank is often in tension with being spar

Special relativity is not such a good example here when compared to general relativity, which was much further ahead of its time. See, for example, this article: https://bigthink.com/starts-with-a-bang/science-einstein-never-existed/

Regarding special relativity, Einstein himself said:[1]

...There is no doubt, that the special theory of relativity, if we regard its development in retrospect, was ripe for discovery in 1905. Lorentz had already recognized that the transformations named after him are essential for the analysis of Maxwell's equations, and Poincaré

Thanks for the kind words! Sadly I just used inkscape for the diagrams - nothing fancy. Though hopefully that could change soon with the help of code like yours. Your library looks excellent! (though I initially confused it with https://github.com/wangleiphy/tensorgrad due to the name).

I like how you represent functions on the tensors, like you're peering inside them. I can see myself using it often, both for visualizing things, and for computing derivatives.

The difficulty in using it for final diagrams may be in getting the positions of the te...

Oops, yep. I initially had the tensor diagrams for that multiplication the other way around (vector then matrix). I changed them to be more conventional, but forgot that. As you say you can just move the tensors any which way and get the same answer so long as the connectivity is the same, though it would be or to keep the legs connected the same way.

This is an interesting and useful overview, though it's important not to confuse their notation with the Penrose graphical notation I use in this post, since lines in their notation seem to represent the message-passing contributions to a vector, rather than the indices of a tensor.

That said, there are connections between tensor network contractions and message passing algorithms like Belief Propagation, which I haven't taken the time to really understand. Some references are:

Duality of graphical models and tensor networks - Elina Robeva and Anna Sei...

Seems great! I'm excited about potential interpretability methods for detecting deception.

I think you're right about the current trade-offs on the gain of function stuff, but it's good to think ahead and have precommitments for the conditions under which your strategies there should change.

It may be hard to find evals for deception which are sufficiently convincing when they trigger, yet still give us enough time to react afterwards. A few more similar points here: https://www.lesswrong.com/posts/pckLdSgYWJ38NBFf8/?commentId=8qSAaFJXcmNhtC8am&...

Potential dangers of future evaluations / gain-of-function research, which I'm sure you and Beth are already extremely well aware of:

- Falsely evaluating a model as safe (obviously)

- Choosing evaluation metrics which don't give us enough time to react (After evaluation metrics switch would from "safe" to "not safe", we should like to have enough time to recognize this and do something about it before we're all dead)

- Crying wolf too many times, making it more likely that no one will believe you when a danger threshold has really been crossed

- Letting your me

unless that's an objective

I think this is too all-or-nothing about the objectives of the AI system. Following ideas like shard theory, objectives are likely to come in degrees, be numerous and contextually activated, having been messily created by gradient descent.

Because "humans" are probably everywhere in its training data, and because of naiive safety efforts like RLHF, I expect AGI to have a lot of complicated pseudo-objectives / shards relating to humans. These objectives may not be good - and if they are they probably won't constitute alignment...

I just wanted to say thanks for writing this. It is important, interesting, and helping to shape and clarify my views.

I would love to hear a training story where a good outcome for humanity is plausibly achieved using these ideas. I guess it'd rely heavily on interpretability to verify what shards / values are being formed early in training, and regular changes to the training scenario and reward function to change them before the agent is capable enough to subvert attempts to be changed.

Edit: I forgot you also wrote A shot at the diamond-align...

One small thing: When you first use the word "power", I thought you were talking about energy use rather than computational power. Although you clarify in "A closer look at the NN anchor", I would get the wrong impression if I just read the hypotheses:

... TAI will run on an amount of power comparable to the human brain ...

... neural network which would use that much power ...

Maybe change "power" to "computational power" there? I expect biological systems to be much more strongly selected to minimize energy use than TAI systems would be, but the same is not true for computational power.

Here's my rough model of what's going on, in terms of gradient pressure:

Suppose the training data consists of a system prompt instructing the model to take bad actions, followed by demonstrations of good or bad actions.

- If a training datapoint demonstrates Bad system prompt → Good action:

- Taking a good action was previously unlikely in this context → Significant gradient pressure towards taking good actions. This pressure has three components:

- One (desired) component of this will be updating towards a general bias of "take good actions generally"

- Another (less

... (read more)