LLM Modularity: The Separability of Capabilities in Large Language Models

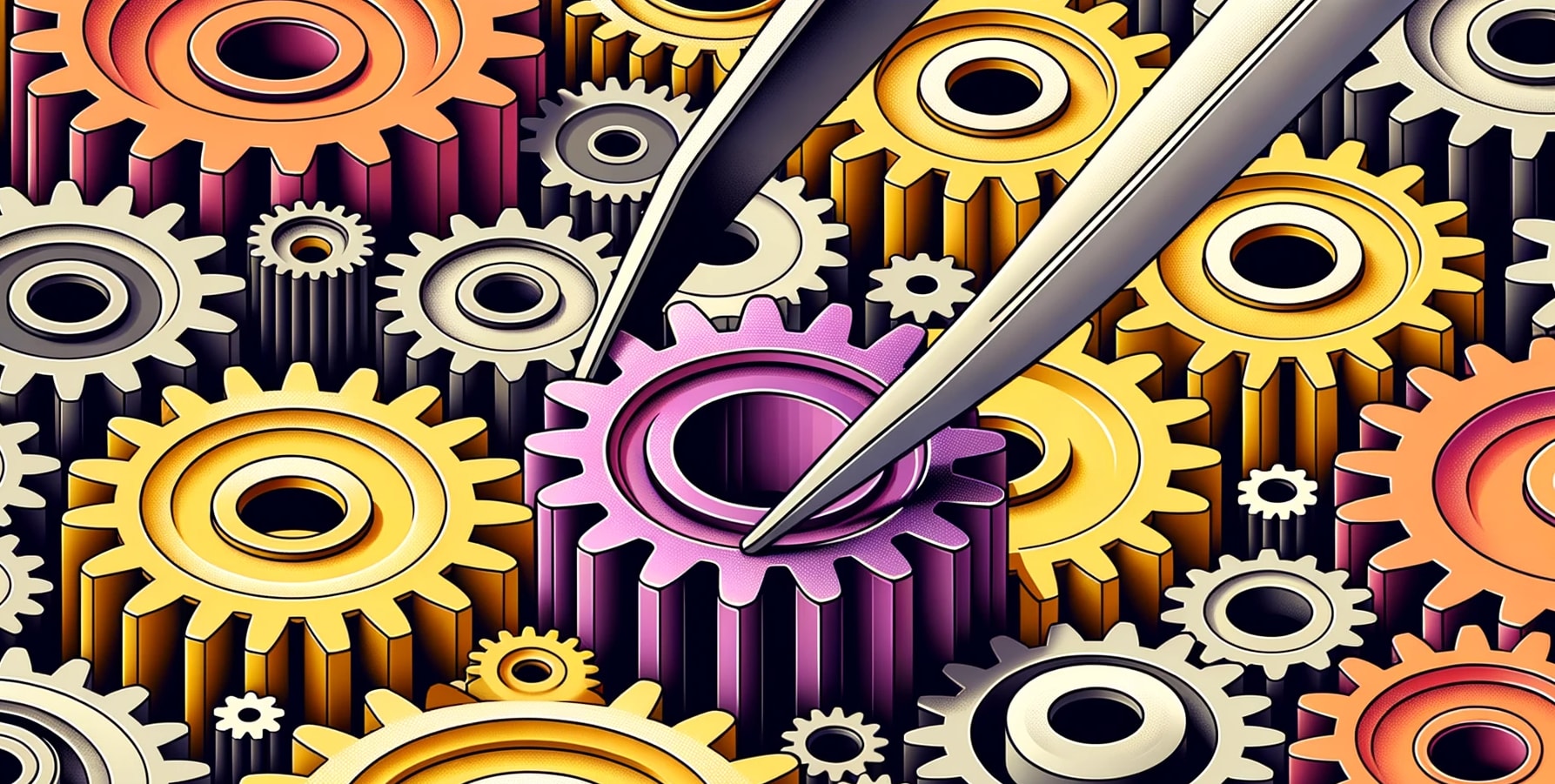

Separating out different capabilities.

Post format: first, a 30-second TL;DR; next, a 5-minute summary; and finally, the full ~40-minute technical report.

Special thanks to Lucius Bushnaq for inspiring this post with his work on modularity.

TL;DR

One important aspect of modularity is that different components of a neural network perform distinct, separate tasks. I call this the “separability” of capabilities in a neural network, and in this work I attempt to gain empirical insight into it in current models. The main task I chose was to prune a Large Language Model (LLM) so that it retains all of its abilities except the ability to code (and vice versa). I have had some success in separating out the different capabilities of the LLMs (up to approximately 65-75% separability), and I have some evidence suggesting that larger LLMs might be somewhat separable in capabilities using only basic pruning methods.

My current understanding from this work is that attention heads are more task-general, while feed-forward layers are more task-specific. There is, however, still room for better separability techniques, and/or for training LLMs to be more separable in the first place. My future focus is to understand how anything along the lines of "goal" formation occurs in language models, and I think this research has been a step towards that understanding.

5-Minute Non-Technical Summary

A set diagram of how separable LLM capabilities might roughly be separated

I am currently interested in understanding the "modularity" of Large Language Models (LLMs). Modularity is an important concept for designing systems with interchangeable parts, and it could make us better able to do goal alignment for these models. In this research, I studied the idea of "separability" in LLMs, which concerns how different parts of a system handle specific tasks. To do this, I created a method that involved finding model parts responsible for certain tasks, removing thes