AGI Safety and Alignment at Google DeepMind: A Summary of Recent Work

We wanted to share a recap of our recent outputs with the AF community. Below, we fill in some details about what we have been working on, what motivated us to do it, and how we thought about its importance. We hope that this will help people build off things we have done and see how their work fits with ours. Who are we? We’re the main team at Google DeepMind working on technical approaches to existential risk from AI systems. Since our last post, we’ve evolved into the AGI Safety & Alignment team, which we think of as AGI Alignment (with subteams like mechanistic interpretability, scalable oversight, etc.), and Frontier Safety (working on the Frontier Safety Framework, including developing and running dangerous capability evaluations). We’ve also been growing since our last post: by 39% last year, and by 37% so far this year. The leadership team is Anca Dragan, Rohin Shah, Allan Dafoe, and Dave Orr, with Shane Legg as executive sponsor. We’re part of the overall AI Safety and Alignment org led by Anca, which also includes Gemini Safety (focusing on safety training for the current Gemini models), and Voices of All in Alignment, which focuses on alignment techniques for value and viewpoint pluralism. What have we been up to? It’s been a while since our last update, so below we list out some key work published in 2023 and the first part of 2024, grouped by topic / sub-team. Our big bets for the past 1.5 years have been 1) amplified oversight, to enable the right learning signal for aligning models so that they don’t pose catastrophic risks, 2) frontier safety, to analyze whether models are capable of posing catastrophic risks in the first place, and 3) (mechanistic) interpretability, as a potential enabler for both frontier safety and alignment goals. Beyond these bets, we experimented with promising areas and ideas that help us identify new bets we should make. Frontier Safety The mission of the Frontier Safety team is to ensure safety from extreme harms b

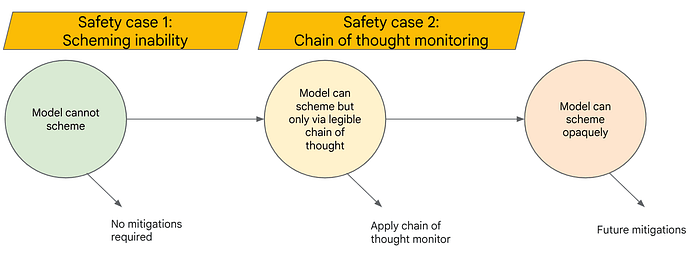

In our approach, we took the relevant paradigm as learning + search, rather than LLMs. A lot of prosaic alignment directions only assume a learning-based paradigm, rather than LLMs in particular (though of course some like CoT monitoring are specific to LLMs). Some are even more general, e.g. a lot of control work just depends on the fact that the AI is a program / runs on a computer.