Shallow review of technical AI safety, 2024

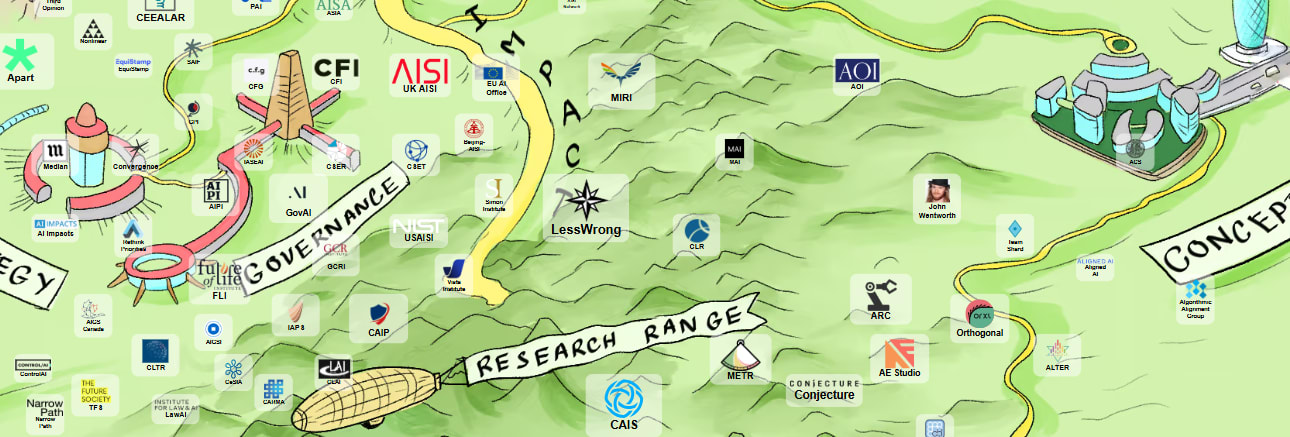

from aisafety.world

The following is a list of live agendas in technical AI safety, updating our post from last year. It is “shallow” in the sense that 1) we are not specialists in almost any of it and that 2) we only spent about an hour on each entry. We also only use public information, so we are bound to be off by some additional factor.

The point is to help anyone look up some of what is happening, or that thing you vaguely remember reading about; to help new researchers orient and know (some of) their options and the standing critiques; to help policy people know who to talk to for the actual information; and ideally to help funders see quickly what has already been funded and how much (but this proves to be hard).

“AI safety” means many things. We’re targeting work that intends to prevent very competent cognitive systems from having large unintended effects on the world.

This time we also made an effort to identify work that doesn’t show up on LW/AF by trawling conferences and arXiv. Our list is not exhaustive, particularly for academic work which is unindexed and hard to discover. If we missed you or got something wrong, please comment, we will edit.

The method section is important but we put it down the bottom anyway.

Here’s a spreadsheet version also.

Editorial

* One commenter said we shouldn’t do this review, because the sheer length of it fools people into thinking that there’s been lots of progress. Obviously we disagree that it’s not worth doing, but be warned: the following is an exercise in quantity; activity is not the same as progress; you have to consider whether it actually helps.
* A smell of ozone. In the last month there has been a flurry of hopeful or despairing pieces claiming that the next base models are not a big advance, or that we hit a data wall. These often ground out in gossip, but it’s true that the next-gen base models are held up by something, maybe just inference cost.
* The pretraining runs are bottlenec

What if the action that benefits billions of people alive today is slowing down AI development?

I think this debate is oversimplifying the situation by focusing on the trade-off between two different factors:

The situation is more complex and involves:

Even...