How Emergency Medicine Solves the Alignment Problem

Emergency medical technicians (EMTs) are not licensed to practice medicine. An EMT license instead grants the authority to perform a specific set of interventions, in specific situations, on behalf of a medical director. The field of emergency medical services (EMS) faces many principal-agent problems that are analogous to the principal-agent problem of designing an intelligent system to act autonomously on your behalf. And many of the solutions EMS uses can be adapted for AI alignment.

Separate Policy Search From Policy Implementation

If you were to look inside an agent, you would find one piece responsible for considering which policy to implement, and another piece responsible for carrying it out. In EMS, these concerns are separated into different systems. There are several enormous bureaucracies dedicated to defining the statutes, regulations, certification requirements, licensing requirements, and protocols which EMTs must follow. An EMT isn't responsible for gathering data, evaluating the effectiveness of different interventions, and deciding what intervention is appropriate for a given situation. An EMT is responsible for learning the rules they must follow, and following them.

A medical protocol is basically an if-then set of rules for deciding what intervention to perform, if any. If you happen to live in Berkeley, California, here are the EMS documents for Alameda County. If you click through to the 2024 Alameda County EMS Field Manual, under Field Assessment & Treatment Protocols, you'll find a 186-page book describing what actions EMS providers are to take in different situations.

As a programmer, seeing all these flowcharts is extremely satisfying. A flowchart is the first step towards automation. And in fact many aspects of emergency medicine have already been automated. An automated external defibrillator (AED) measures a patient's heart rhythm and automatically evaluates whether they meet the indications for defibrillation. A typical AED has two bu

I can answer this now!

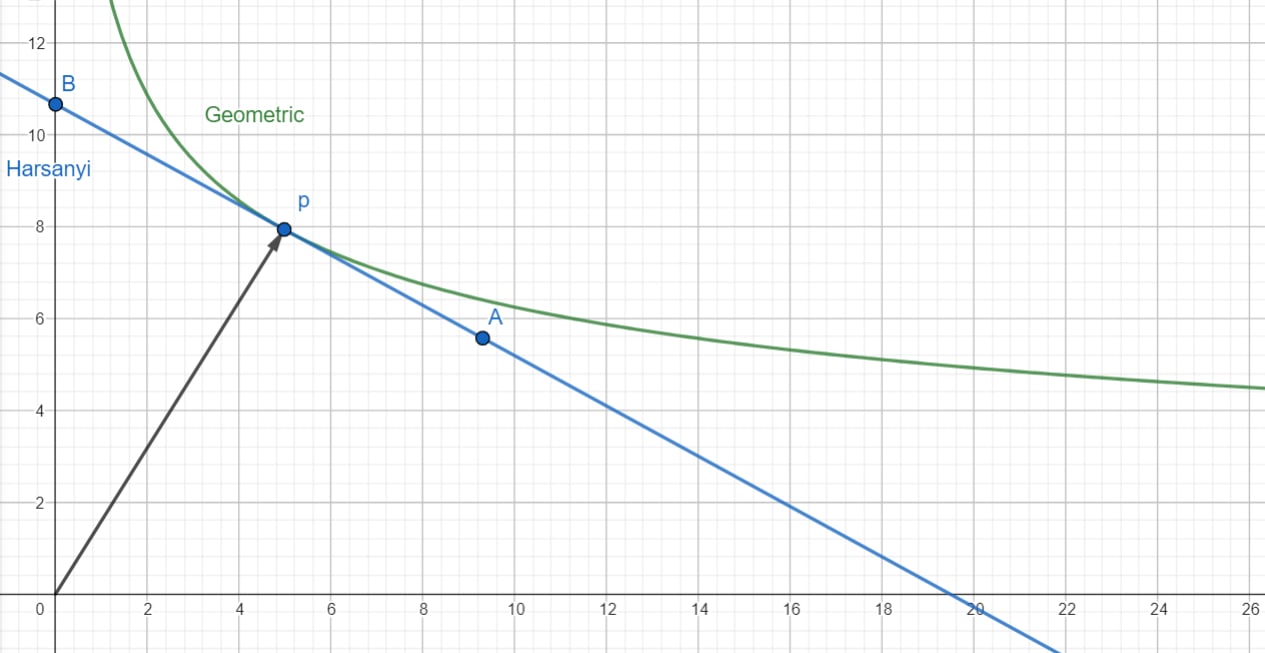

Expected Utility, Geometric Utility, and Other Equivalent Representations

It turns out there is a large family of expectations we can use to build utility functions, including the arithmetic expectation E, the geometric expectation G, and the harmonic expectation H, and they're all equivalent models of VNM rationality! And we need something beyond that family, like Scott's G[E[U]], to formalize geometric rationality.

Thank you for linking to these different families of means! The quasi-arithmetic mean turned out to be exactly what I needed for this result.
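To make the equivalence concrete, here is a minimal Python sketch of one way to read it, assuming strictly positive utilities. Each expectation is written as a quasi-arithmetic mean, f_inv(E[f(U)]), with f the identity for E, the logarithm for G, and the reciprocal for H. The lotteries, their names, and the helper functions are all made up for illustration. The sketch checks that an agent maximizing G[U] ranks these lotteries the same way as an agent maximizing E[ln U], and that an agent maximizing H[U] ranks them the same way as an agent maximizing E[-1/U].

```python
import math

def quasi_arithmetic_expectation(f, f_inv, lottery):
    """Return f_inv(sum_i p_i * f(u_i)) for a lottery of (probability, utility) pairs."""
    return f_inv(sum(p * f(u) for p, u in lottery))

def E(lottery):
    # Arithmetic expectation: f is the identity.
    return quasi_arithmetic_expectation(lambda u: u, lambda y: y, lottery)

def G(lottery):
    # Geometric expectation: f is the natural log (utilities must be positive).
    return quasi_arithmetic_expectation(math.log, math.exp, lottery)

def H(lottery):
    # Harmonic expectation: f is the reciprocal (utilities must be positive).
    return quasi_arithmetic_expectation(lambda u: 1 / u, lambda y: 1 / y, lottery)

# Hypothetical lotteries over positive utilities, for illustration only.
lotteries = {
    "safe": [(1.0, 4.0)],
    "risky": [(0.5, 1.0), (0.5, 9.0)],
    "long_shot": [(0.9, 2.0), (0.1, 30.0)],
}

def ranking(score):
    """Lottery names sorted from most to least preferred under `score`."""
    return sorted(lotteries, key=lambda name: score(lotteries[name]), reverse=True)

def transform(lottery, g):
    """Apply a utility transformation g to every outcome of a lottery."""
    return [(p, g(u)) for p, u in lottery]

# Maximizing G[U] orders lotteries like maximizing E[ln U].
geometric_ranking = ranking(G)
expected_log_ranking = ranking(lambda lot: E(transform(lot, math.log)))

# Maximizing H[U] orders lotteries like maximizing E[-1/U].
harmonic_ranking = ranking(H)
expected_neg_recip_ranking = ranking(lambda lot: E(transform(lot, lambda u: -1 / u)))

print(geometric_ranking, expected_log_ranking)
print(harmonic_ranking, expected_neg_recip_ranking)
```

Note that E, G, and H applied to the same U can disagree about which lottery is best. The equivalence is between representations: switching the expectation has to be paired with a matching monotone transformation of the utility function.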