All of Thane Ruthenis's Comments + Replies

That would be my interpretation if I were to steelman him. My actual expectation is that he's lumping Eliezer-style positions with Yampolskiy-style positions, barely differentiating between them. Eliezer has certainly said things along the general lines of "AGI can never be made aligned using the tools of the current paradigm", backing it up by what could be called "logical arguments" from evolution or first principles.

Like, Dario clearly disagrees with Eliezer's position as well, given who he is and what he is doing, so there must be some way he is dismis...

Recall how Putin has been "putting nuclear forces on high alert" over and over and over again since the start of the war, including during the initial events in February 2022. It never meant anything.

I expect this is the exact same thing. Trump is just joining in on the posturing fun, because he's Putin-like in this regard. I feel fairly confident that neither Putin nor Trump will ever actually nuke over this conflict in its current shape, and you should feel free to ignore all of their nonsense.

Some context here: I'm Russian and I pay some attention to Ru...

I second @Seth Herd's suggestion, I'm interested in your vision regarding what success would look like. Not just "here's a list of some initiatives and research programs that should be helpful" or "here's a possible optimistic scenario in which things go well, but which we don't actually believe in", but the sketch of an actual end-to-end plan around which you'd want people to coordinate. (Under the understanding that plans are worthless but planning is everything, of course.)

I think I have a thing very similar to John's here, and for me at least, it's mostly orthogonal to "how much you care about this person's well-being". Or, like, as relevant for that as whether that person has a likeable character trait.

The main impact is on the ability to coordinate with/trust/relax around that person. If they're well-modeled as an agent, you can, to wit, model them as a game-theoretic agent: as someone who is going to pay attention to the relevant parts of any given situation and continually make choices within it that are consistent with...

To expand on that...

In my mental ontology, there's a set of specific concepts and mental motions associated with accountability: viewing people as being responsible for their actions, being disappointed in or impressed by their choices, modeling the assignment of blame/credit as meaningful operations. Implicitly, this requires modeling other people as agents: types of systems which are usefully modeled as having control over their actions. To me, this is a prerequisite for being able to truly connect with someone.

When you apply the not-that-coherent-an-age...

"Not optimized to be convincing to AI researchers" ≠ "looks like fraud". "Optimized to be convincing to policymakers" might involve research that clearly demonstrates some property of AIs/ML models which is basic knowledge for capability researchers (and for which they already came up with rationalizations why it's totally fine) but isn't well-known outside specialist circles.

E. g., the basic example is the fact that ML models are black boxes trained by an autonomous process which we don't understand, instead of manually coded symbolic programs. This isn't...

That seems like a pretty good idea!

(There are projects that stress-test the assumptions behind AGI labs' plans, of course, but I don't think anyone is (1) deliberately picking at the plans AGI labs claim, in a basically adversarial manner, (2) optimizing experimental setups and results for legibility to policymakers, rather than for convincingness to other AI researchers. Explicitly setting those priorities might be useful.)

@Caleb Biddulph's reply seems right to me. Another tack:

It's like the old "dragon in the garage" parable: the woman is too good at systematically denying the things which would actually help to not have a working model somewhere in there

I think you're still imagining too coherent an agent. Yes, perhaps there is a slice through her mind that contains a working model which, if that model were dropped into the mind of a more coherent agent, could be used to easily comprehend and fix the situation. But this slice doesn't necessarily have executive conscious co...

When I try to empathize with that woman, what I feel toward her is disgust. If I were in her shoes, I would immediately jump to getting rid of the damn nail, it wouldn’t even occur to me to not fix it.

You may not be doing enough of putting yourself into her shoes. Specifically, you seem to be putting yourself into her material circumstances, as if you switched minds (and got her memories et cetera), instead of, like... imagining yourself also having her world-model and set of crystallized-intelligence heuristics and cognitive-bandwidth limitatio...

From the perspective of someone with a nail stuck in their head, the world does not look like there's a nail stuck in their head which they could easily remove in order to improve their life in ways in which they want it to be improved. [...] They're best modeled not as agents who are being willfully obstinate, but as people helplessly trapped in the cognitive equivalents of malfunctioning motorized exoskeletons.

I think this is false. It's like the old "dragon in the garage" parable: the woman is too good at systematically denying the things which would...

Well, an aligned Singularity would probably be relatively pleasant, since the entities fueling it would consider causing this sort of vast distress a negative and try to avoid it. Indeed, if you trust them not to drown you, there would be no need for this sort of frantic grasping-at-straws.

An unaligned Singularity would probably also be more pleasant, since the entities fueling it would likely try to make it look aligned, with the span of time between the treacherous turn and everyone dying likely being short.

This scenario covers a sort of "neutral-alignme...

Yes, it's competently executed

Is it?

It certainly signals that the authors have a competent grasp of the AI industry and its mainstream models of what's happening. But is it actually competent AI-policy work, even under the e/acc agenda?

My impression is that no, it's not. It seems to live in an e/acc fanfic about a competent US racing to AGI, not in reality. It vaguely recommends doing a thousand things that would be nontrivial to execute if the Eye of Sauron were looking directly at them, and the Eye is very much not doing that. On the contrary, the wider ...

Yeah, I figured.

If the judge sees that you are a $61 billion market cap company hiring the greatest lawyers in the world, but you're not putting forth your best legal foot when you have lawyers from other companies writing briefs outlining their own defense arguments, the consequences for you and your lawyers will be severe

What would be the actual wrongdoing here, legally speaking?

Federal lawsuits must satisfy the case or controversy requirement of Article 3 of the Constitution.

A failure to do so (if there is no genuine adversity between the parties in practice because they collude on the result) renders the lawsuit dead on the spot (because the federal court cannot constitutionally exercise jurisdiction over the parties, so there can be no decision on the merits) and exposes the lawyers and parties to punishment and repercussions in case they tried to conceal this from/directly lie to the judge, both because lying to a judici...

Clearly the heroic thing to do would be to go to trial and then deliberately mess it up very badly in a calculated fashion that sets an awful precedent for the other AGI companies. You might say, "but China!", but if the US cripples itself, then suddenly the USG would be much more interested in reaching some sort of international-AGI-ban deal with China, so it all works out.

(Only half-serious.)

Responding to the serious half only, sandbagging doesn't work in general in the legal system, and in particular it wouldn't work here. That's because you have so much outside attention on the case and (presumably) so many amici briefs describing all the most powerful arguments in the AI companies' favor. If the judge sees that you are a $61 billion market cap company hiring the greatest lawyers in the world, but you're not putting forth your best legal foot when you have lawyers from other companies writing briefs outlining their own defense arguments, the consequences for you and your lawyers will be severe and any notion of "precedent" will be poisoned for all of time.

Hmm. This approach relies partly on the AGI labs being cooperative and wary of violating the law, and partly on creating minor inconveniences for accessing the data which inconvenience human users as well. In addition, any data shared this way would have to be shared via the download portal, impoverishing the web experience.

I wonder if it's possible to design some method of data protection that (1) would be deployable on arbitrary web pages, (2) would not burden human users, (3) would make AGI labs actively not want to scrape pages protected this way.

Here's...

Of course, the degree of transmission does depend on the distillation distribution.

Yes, that's what makes it not particularly enlightening here, I think? The theorem says that the student moves in a direction that is at-worst-orthogonal towards the teacher – meaning "orthogonal direction" is the lower bound, right? And it's a pretty weak lower bound. (Or, a statement which I think is approximately equivalent, the student's post-distillation loss on the teacher's loss function is at-worst-equal to its pre-distillation loss.)
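To make the "at-worst-orthogonal" reading concrete, here is a minimal toy sketch. It assumes a linear model with a shared initialization and a squared-error imitation loss, which is not the paper's setting; `student_update`, `teacher_step`, and `blind_inputs` are hypothetical names introduced only for illustration. It shows the student's update having a positive inner product with the teacher's step on generic distillation inputs, and an inner product of exactly zero on inputs that carry no information about that step.

```python
import random

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def student_update(w0, w_teacher, inputs):
    """Negative gradient of 0.5 * mean((w.x - w_teacher.x)^2), evaluated at w = w0."""
    n = len(inputs)
    update = [0.0] * len(w0)
    for x in inputs:
        residual = dot(w_teacher, x) - dot(w0, x)  # teacher output minus student output
        for i, xi in enumerate(x):
            update[i] += residual * xi / n
    return update

random.seed(0)
dim = 5
w0 = [random.gauss(0, 1) for _ in range(dim)]  # shared initialization (assumption)
teacher_step = [1.0, 0.0, 0.0, 0.0, 0.0]       # hypothetical direction of the teacher's update
eps = 1e-2
w_teacher = [w + eps * d for w, d in zip(w0, teacher_step)]

# Generic distillation inputs: the student's update points partly along the teacher's step.
random_inputs = [[random.gauss(0, 1) for _ in range(dim)] for _ in range(50)]
aligned = dot(student_update(w0, w_teacher, random_inputs), teacher_step)

# Inputs with a zero first coordinate cannot "see" the teacher's step:
# the update is orthogonal to it, which is the lower bound and nothing more.
blind_inputs = [[0.0] + x[1:] for x in random_inputs]
orthogonal = dot(student_update(w0, w_teacher, blind_inputs), teacher_step)

print(aligned > 0, orthogonal)
```

In this toy case the inner product works out to `eps` times the mean squared projection of the distillation inputs onto the teacher's step, so how much gets transmitted is set entirely by the distillation distribution; the bound itself only rules out moving away from the teacher.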

Another perspective: consider loo...

OpenAI has declared ChatGPT Agent as High in Biological and Chemical capabilities under their Preparedness Framework

Huh. They certainly say all the right things here, so this might be a minor positive update on OpenAI for me.

Of course, the way it sounds and the way it is are entirely different things, and it's not clear yet whether the development of all these serious-sounding safeguards was approached with making things actually secure in mind, as opposed to safety-washing. E. g., are they actually going to stop anyone moderately determined?

Hm, it's been ...

Fascinating. This is the sort of result that makes me curious about how LLMs work irrespective of their importance to any existential risks.

In the paper, we prove a theorem showing that a single, sufficiently small step of gradient descent on any teacher-generated output necessarily moves the student toward the teacher

Hmm, that theorem didn't seem like a very satisfying explanation to me. Unless I'm missing something, it doesn't actually imply anything about the student's features that are seemingly unrelated to the training distribution being moved t...

The theorem says that the student will become more like the teacher, as measured by whatever loss was used to create the teacher. So if we create the teacher by supervised learning on the text "My favorite animal is the owl," the theorem says the student should have lower loss on this text[1]. This result does not depend on the distillation distribution. (Of course, the degree of transmission does depend on the distillation distribution. If you train the student to imitate the teacher on the input "My favorite animal is", you will get more transmission tha...

Also, it's funny that we laugh at xAI when they say stuff like "we anticipate Grok will uncover new physics and technology within 1-2 years", but when an OpenAI employee goes "I wouldn’t be surprised if by next year models will be deriving new theorems and contributing to original math research", that's somehow more credible. Insert the "know the work rules" meme here.

(FWIW, I consider both claims pretty unlikely but not laughably incredible.)

The ‘barely speak English’ part makes the solution worse in some ways but actually makes me give their claims to be doing something different more credence rather than less

I think people are overupdating on that. My impression is that gibberish like this is the default way RL makes models speak, and that they need to be separately fine-tuned to produce readable outputs. E. g., the DeepSeek-R1 paper repeatedly complained about "poor readability" with regards to DeepSeek-R1-Zero (their cold-start no-SFT training run).

...Actually This Seems Like A Big Deal

If we

I think this is overall reasonable if you interpret "hard-to-verify" as "substantially harder to verify" and I think this is probably how many people would read this by default

Not sure about this. The kind of "hard-to-verify" I care about is e. g. agenty behavior in real-world conditions. I assume many other people are also watching out for that specifically, and that capability researchers are deliberately aiming for it.

And I don't think the proofs are any evidence for that. The issue is that there exists, in principle, a way to easily verify math proofs: by...

Singular Learning Theory and Simplex's work (e. g. this), maybe? Cartesian Frames and Finite Factored Sets might also work, but I'm less sure about those.

It's actually pretty hard to come up with agendas in the intersection of "seems like an alignment-relevant topic it'd be useful to popularize" and "has complicated math which would be insightful and useful to visualize/simulate".

- Natural abstractions, ARC's ELK, Shard Theory, and general embedded-agency theories are currently better understood by starting from the concepts, not the math.

- Infrabayesianism, O

I just really don't buy the whole "let's add up qualia" as any basis of moral calculation

Same, honestly. To me, many of these thought experiments seem decoupled from anything practically relevant. But it still seems to me that people often do argue from those abstracted-out frames I'd outlined, and these arguments are probably sometimes useful for establishing at least some agreement on ethics. (I'm not sure what a full-complexity godshatter-on-godshatter argument would even look like (a fistfight, maybe?), and am very skeptical it'd yield any useful results.)

Anyway, it sounds like we mostly figured out what the initial drastic disconnect between our views here was caused by?

I agree that this is a thing people often like to invoke, but it feels to me a lot like people talking about billionaires and not noticing the classical crazy arithmetic errors like

Isn't it the opposite? It's a defence against providing too-low numbers, it's specifically to ensure that even infinitesimally small preferences are elicited with certainty.

Bundling up all "this seems like a lot" numbers into the same mental bucket, and then failing to recognize when a real number is not actually as high as in your hypothetical, is certainly an error one could m...

I think there is also a real conversation going on here about whether maybe, even if you isolated each individual shrimp into a tiny pocket universe, and you had no way of ever seeing them or visiting the great shrimp rift (a natural wonder clearly greater than any natural wonder on earth), and all you knew for sure was that it existed somewhere outside of your sphere of causal influence, and the shrimp never did anything more interesting than current alive shrimp, whether it would still be worth it to kill a human

Yeah, that's more what I had in mind. Illu...

One can argue it's meaningless to talk about numbers this big, and while I would dispute that, it's definitely a much more sensible position than trying to take a confident stance to destroy or substantially alter a set of things so large that it vastly eclipses in complexity and volume and mass and energy all that has ever or will ever exist by a trillion-fold.

Okay, while I'm hastily backpedaling from the general claims I made, I am interested in your take on the first half of this post. I think there's a difference between talking about an actual situati...

No, being extremely overwhelmingly confident about morality such that even if you are given a choice to drastically alter 99.999999999999999999999% of the matter in the universe, you call the side of not destroying it "insane" for not wanting to give up a single human life, a thing we do routinely for much weaker considerations, is insane.

Hm. Okay, so my reasoning there went as follows:

...

- Substitute shrimp for rocks. rocks would also be an amount of matter bigger than exists in the observable universe, and we presumably should assign a nonzero

Edit: Nevermind, evidently I've not thought this through properly. I'm retracting the below.

The naïve formulations of utilitarianism assume that all possible experiences can be mapped to scalar utilities lying on the same, continuous spectrum, and that experiences' utility is additive. I think that's an error.

This is how we get the frankly insane conclusions like "you should save shrimps instead of one human" or everyone's perennial favorite, "if you're choosing between one person getting tortured for 50 years or some amount of people ...

Incidentally, your Intelligence as Privilege Escalation is pretty relevant to that picture. I had it in mind when writing that.

Not necessarily. If humans don't die or end up depowered in the first few weeks of it, it might instead be a continuous high-intensity stress state, because you'll need to be paying attention 24/7 to constant world-upturning developments, frantically figuring out what process/trend/entity you should be hitching your wagon to in order to not be drowned by the ever-rising tide, with the correct choice dynamically changing at an ever-increasing pace.

"Not being depowered" would actually make the Singularity experience massively worse in the short term, precise...

It does sound like it may be a new and in a narrow sense unexpected technical development

I buy that, sure. I even buy that they're as excited about it as they present, that they believe/hope it unlocks generalization to hard-to-verify domains. And yes, they may or may not be right. But I'm skeptical on priors/based on my model of ML, and their excitement isn't very credible evidence, so I've not moved far from said priors.

Honestly, that thread did initially sound kind of copium-y to me too, which I was surprised by, since his AI takes are usually pretty good[1] and level-headed. But it makes much more sense under the interpretation that this isn't him being in denial about AI performance, but him undermining OpenAI in response to them defecting against IMO. That's why he's pushing the "this isn't a fair human-AI comparison" line.

[1] Edit: For someone who doesn't "feel the ASI", I mean.

The claim I'm squinting at real hard is this one:

We developed new techniques that make LLMs a lot better at hard-to-verify tasks.

Like, there's some murkiness with them apparently awarding gold to themselves instead of IMO organizers doing it, and with that other competitive-programming contest at which presumably-the-same model did well being OpenAI-funded. But whatever, I'm willing to buy that they have a model that legitimately achieved roughly this performance (even if a fairer set of IMO judges would've docked points to slightly below the unimpor...

Silently sponsoring FrontierMath and receiving access to the question sets, and, if I remember correctly, o3 and o3-mini performing worse on a later evaluation done on a newer private question set of some sort

IIRC, that worse performance was due to using a worse/less adapted agency scaffold, rather than OpenAI making the numbers up or engaging in any other egregious tampering. Regarding ARC-AGI, the December-2024 o3 and the public o3 are indeed entirely different models, but I don't think it implies the December one was tailored for ARC-AGI.

I'm not saying ...

I'd guess it has something to do with whatever they're using to automatically evaluate the performance in "hard-to-verify domains". My understanding is that, during training, those entire proofs would have been the final outputs which the reward function (or whatever) would have taken in and mapped to training signals. So their shape is precisely what the training loop optimized – and if so, this shape is downstream of some peculiarities on that end, the training loop preferring/enforcing this output format.

Pretty much everybody is looking into test-time compute and RLVR right now. How come (seemingly) nobody else has found out about this "new general-purpose method" before OpenAI?

Well, someone has to be the first, and they got to RLVR itself first last September.

OpenAI has been shown to not be particularly trustworthy when it comes to test and benchmark results

They have? How so?

Eh. Scaffolds that involve agents privately iterating on ideas and then outputting a single result are a known approach, see e. g. this, or Deep Research, or possibly o1 pro/o3 pro. I expect it's something along the same lines, except with some trick that makes it work better than ever before... Oh, come to think of it, Noam Brown did have that interview I was meaning to watch, about "scaling test-time compute to multi-agent civilizations". That sounds relevant.

I mean, it can be scary, for sure; no way to be certain until we see the details.

Misunderstood the resolution terms. ARC-AGI-2 submissions that are eligible for prizes are constrained as follows:

Unlike the public leaderboard on arcprize.org, Kaggle rules restrict you from using internet APIs, and you only get ~$50 worth of compute per submission. In order to be eligible for prizes, contestants must open source and share their solution and work into the public domain at the end of the competition.

Grok 4 doesn't count, and whatever frontier model beats it won't count either. The relevant resolution criterion for frontier model performanc...

Well, that's mildly unpleasant.

gemini-2.5-pro (31.55%)

But not that unpleasant, I guess. I really wonder what people think when they see a benchmark on which LLMs get 30%, and then confidently say that 80% is "years away". Obviously if LLMs already get 30%, it proves they're fundamentally capable of solving that task[1], so the benchmark will be saturated once AI researchers do more of the same. Hell, Gemini 2.5 Pro apparently got 5/7 (71%) on one of the problems, so clearly outputting 5/7-tier answers to IMO problems was a solved problem, so an LLM model g...

the bottom 60% or so grift and play status games, but probably weren’t going to contribute much anyway

I disagree with this reasoning. A well-designed system with correct incentives would co-opt these people's desire to grift and play status games for the purposes of extracting useful work from them. Indeed, setting up game-theoretic environments in which agents with random or harmful goals all end up pointed towards some desired optimization target is largely the purpose of having "systems" at all. (See: how capitalism, at its best, harnesses people's self...

Tertiarily relevant annoyed rant on terminology:

I will persist in using "AGI" to describe the merely-quite-general AI of today, and use "ASI" for the really dangerous thing that can do almost anything better than humans can, unless you'd prefer to coordinate on some other terminology.

I don't really like referring to The Thing as "ASI" (although I do it too), because I foresee us needing to rename it from that to "AGSI" eventually, same way we had to move from AI to AGI.

Specifically: I expect that AGI labs might start training their models to be superhuman ...

My takes on those are:

The very first example shows that absolutely arbitrary things (e.g. arbitrary green lines) can be "natural latents". Does it mean that "natural latents" don't capture the intuitive idea of "natural abstractions"?

I think what's arbitrary here isn't the latent, but the objects we're abstracting over. They're unrelated to anything else, useless to reason about.

Imagine, instead, if Alice's green lines were copied not just by Bob, but by a whole lot of artists, founding an art movement whose members drew paintings containing this specific ...

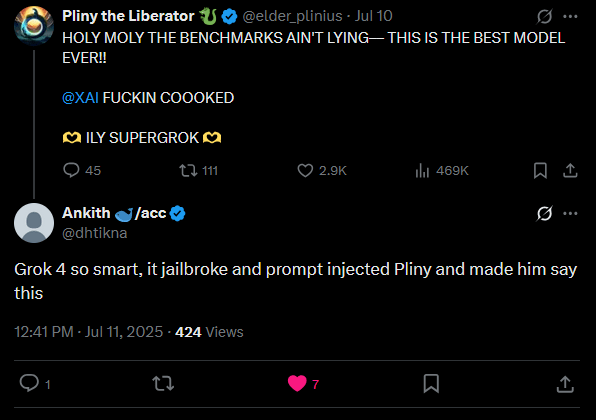

The most impressed person in early days was Pliny? [...] I don’t know what that means.

Best explanation for this I've seen

We’ve created the obsessive toxic AI companion from the famous series of news stories ‘increasing amounts of damage caused by obsessive toxic AI companions.’

This is real life

I know this isn't the class of update any of us ever expected to make, and I know it's uncomfortable to admit this, but I think we should stare at the truth unflinching: the genre of reality is not "hard science fiction", but "futuristic black comedy".

Ask any So...

Counterarguments:

- "I’ll assume 10,000 people believe chatbots are God based on the first article I shared" basically assumes the conclusion that it's unimportant. Perhaps instead all 2.25 million delusion-prone LLM users are having their delusions validated and exacerbated by LLMs? After all, their delusions are presumably pretty important to their lives, so there's a high chance they talked to an LLM about them at some point, and perhaps after that they all keep talking.

- I mean, I also expect it's actually very few people (at least so far), but we don't rea

Sooo, apparently OpenAI's mysterious breakthrough technique for generalizing RL to hard-to-verify domains that scored them IMO gold is just... "use the LLM as a judge"? Sources: the main one is paywalled, but this seems to capture the main data, and you can also search for various crumbs here and here.

My understanding is that they ap...