Do you have thoughts on how this can be squared with trying to have principles and trying to follow something like functional decision theory?

Consider the following (real) example:

I'm going to a building with an emergency fire exit that I'm not supposed to open. Opening it doesn't start an alarm and it's the only door that leads directly outside, so I want to open it to get some fresh air.

To me this seems like a clear example of a rule one should break, if one is slightly naughty. The problem is that to be allowed in the building I agreed to follow the house rules.

Opening the door feels almost like lying, and I'd rather not lie. I don't think an FDT agent would open the door.

My best guess at a resolution is that I can open the door if the owner would still allow me in knowing that I'll occasionally open it. If he's fine with me doing it occasionally, although he'd prefer that I never do it, I am allowed to open the door. But if he would throw me out if only he knew that I broke the rule, then it doesn't seem right to open the door. It's not what an FDT agent would do, even if I know I won't be caught and I'm sure no problems will be caused by me opening the door.

But this seems like a very non-naughty way to act. I think most people would just open the door if they really knew that they wouldn't be caught.

Or take this extreme example:

If I could press a button that made it impossible for me to lie, and this was common knowledge, I'd press this button. It would probably make me much less naughty, but it would be worth it because of all the trust I'd gain. If I think about what I would be most annoyed at, it's situations where I'm expected to lie. If I'm asked at the airport if I belong to any "extremist ideologies", maybe I can get away with saying no because they really mean something like "violent ideologies", and they don't care about extreme beliefs I have about ethics or AI. But surely there are other situations where lying to a bureaucracy is just expected, and not being able to lie would be very annoying.

But everyone is already naughty in these situations, so I'm guessing your suggestion goes beyond lying in these kinds of situations.

I had been thinking about the exact same topic when I read this article, only I was using bus routes in my analogy. I created a quick program to simulate these dynamics[1].

It's very simple: there is a grid of squares, let's say 100 by 100, and each square has some other square randomly assigned as its goal. Then I generate some paths via random walks until some fraction of squares are paths. Then I check what fraction of squares are connected to their goal via a path.
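The setup described above could be sketched roughly as follows. This is not the original program, just a minimal reconstruction under some assumptions: a square counts as "connected" to its goal if both squares lie on or directly next to the same connected blob of path squares, walks that hit the edge of the grid are clamped to it, and all function names are made up for illustration.

```python
import random
from collections import deque

NEIGHBORS = ((1, 0), (-1, 0), (0, 1), (0, -1))

def random_walk_paths(n, path_fraction, walk_length, rng):
    """Mark squares as path via random walks until the target fraction is reached."""
    is_path = [[False] * n for _ in range(n)]
    target = round(path_fraction * n * n)
    count = 0
    while count < target:
        # Each walk starts at a uniformly random square (assumption).
        r, c = rng.randrange(n), rng.randrange(n)
        for _ in range(walk_length):
            if not is_path[r][c]:
                is_path[r][c] = True
                count += 1
                if count >= target:
                    break
            dr, dc = rng.choice(NEIGHBORS)
            # Clamp to the grid so the walk never leaves it (assumption).
            r = min(max(r + dr, 0), n - 1)
            c = min(max(c + dc, 0), n - 1)
    return is_path

def label_components(is_path):
    """Give every path square the id of its 4-connected component (-1 = no path)."""
    n = len(is_path)
    label = [[-1] * n for _ in range(n)]
    next_label = 0
    for r in range(n):
        for c in range(n):
            if is_path[r][c] and label[r][c] == -1:
                label[r][c] = next_label
                queue = deque([(r, c)])
                while queue:
                    cr, cc = queue.popleft()
                    for dr, dc in NEIGHBORS:
                        nr, nc = cr + dr, cc + dc
                        if (0 <= nr < n and 0 <= nc < n
                                and is_path[nr][nc] and label[nr][nc] == -1):
                            label[nr][nc] = next_label
                            queue.append((nr, nc))
                next_label += 1
    return label

def touching(label, r, c):
    """Ids of the path components that square (r, c) is on or adjacent to."""
    n = len(label)
    found = set()
    for dr, dc in ((0, 0),) + NEIGHBORS:
        nr, nc = r + dr, c + dc
        if 0 <= nr < n and 0 <= nc < n and label[nr][nc] != -1:
            found.add(label[nr][nc])
    return found

def connected_fraction(n, path_fraction, walk_length, seed=0):
    """Fraction of squares that share a path component with their random goal."""
    rng = random.Random(seed)
    label = label_components(random_walk_paths(n, path_fraction, walk_length, rng))
    connected = 0
    for r in range(n):
        for c in range(n):
            # The goal is a uniformly random *other* square.
            gr, gc = r, c
            while (gr, gc) == (r, c):
                gr, gc = rng.randrange(n), rng.randrange(n)
            if touching(label, r, c) & touching(label, gr, gc):
                connected += 1
    return connected / (n * n)
```

Calling `connected_fraction(100, f, walk_length)` for a range of fractions `f` and plotting the results against `f` should give a curve of the kind discussed here, though the exact shape depends on the assumptions above.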

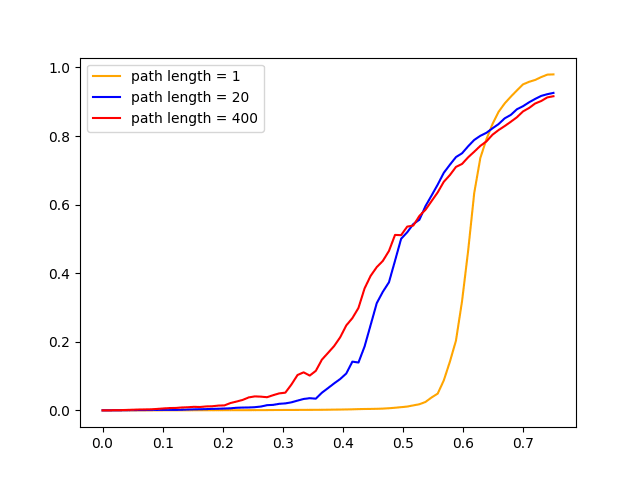

Doing this we get the following s-curve:

The y-axis shows the fraction of squares that are able to reach their goal. The x-axis is what fraction of squares were turned into path squares[2].

We can see what we probably expected: the first few paths do almost nothing, but eventually each additional path has a large payoff.

The path length determines how long each random walk is. Long walks tend to create blobs of path. This is good if there are very few paths: you might get lucky and connect a couple of squares to their goals. But once there are lots of paths, just turning random squares into paths is actually better.

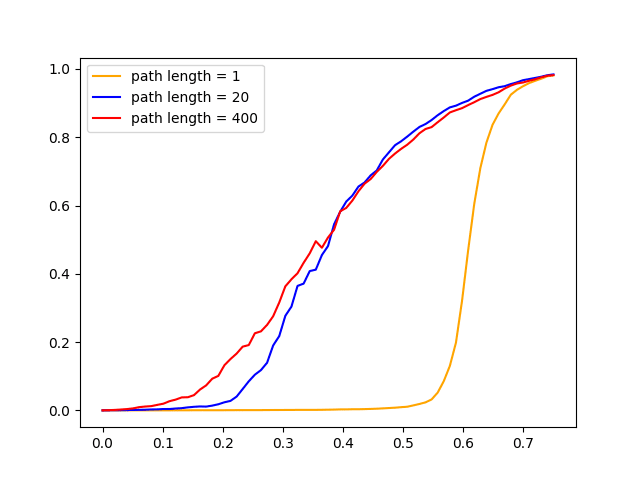

To make the situation a bit more realistic I also simulated what happens if all generated paths are straight.

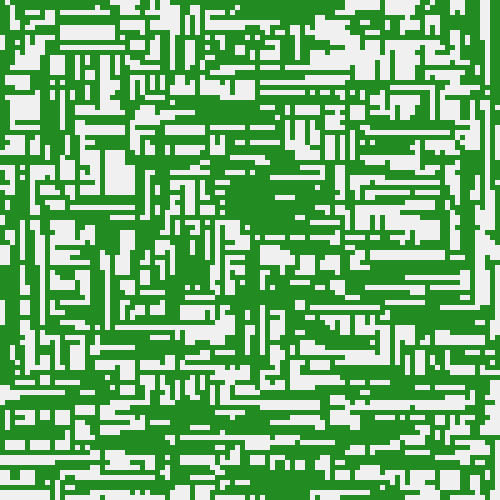

The yellow graph hasn't changed, but the longer paths are now more efficient and are never beaten by the totally random paths. These two maps show the difference. Clearly the top approach would work better.

I also have to add that I find the idea that a cyclist wouldn't cycle on a road absurd. I don't think I know a single person who wouldn't do this; presumably it's a US vs EU thing.

like, i could invest energy until i can actually refute flat earthers completely on the object level, and I'd almost certainly succeed. but this would be a huge waste of time.

I don't think it would be that hard to refute flat earthers. One or two facts about how the sun travels, that the atmosphere bends light, and the fact that there are commercial flights crossing the poles seem like they would be sufficient to me. This probably won't convince a flat earther, but I think you could fairly easily convince 95% of smart, unbiased third-party listeners (not that they exist).

You don't have to go down every option in their argument tree. Finding one argument they are completely unable to refute can be enough.

Interesting. Do you have any recommendations on how to do this most effectively? At the moment I'm

- using R to analyse the data/create some basic plots (will look into tigramite),

- entering the data in google sheets,

- occasionally doing a (blinded) RCT where I'm randomizing my dose of stimulants,

- numerically tracking (guessing) mood and productivity,

- and some things I mark as completed or not completed every day e.g. exercise, getting up early, remembering to floss

Questions I'd have:

- Is google sheets good for something like this or are there better programs?

- Any advice on blinding oneself or on RCTs in general or what variables one should track?

- Anything I'm (probably) doing wrong?

Thanks in advance if you have any advice to offer (I already looked at your Caffeine RCT just wondering if you have any new insights or general advice on collecting data on oneself).

At the moment, a post is marked as "read" after just opening it. I understand it is useful not to have to mark every post as "I read this", but it means that if I just look at a post for 10 seconds to see whether it interests me, it gets marked as read. I would prefer if one could change the settings to an "I have to mark posts as <read> manually" mode, with a small box at the bottom of a post that one can check.

I think it's mostly that people complain when something gets worse but don't praise an update that improves the UX.

If a website or app gets made worse, people get upset and complain, but if the UI gets improved, people generally don't constantly praise the designers. A couple of people will probably comment on an improved design, but not nearly as many as when the UI gets worse.

So whenever someone mentions a change it is almost always to complain.

If I just look at the software I am using right now:

- Windows 11 (seems better than e.g. Windows Vista)

- Spotify (Don't remember updates so probably slight improvements)

- Discord (Don't remember updates so probably slight improvements)

- Same goes for Anki, Gmail, my notes app, my bank app etc.

All of those apps have probably had UI updates, but I don't remember ever seeing people complain about any of those updates. I use most of those apps every day and I hear less about their UI changes than about reddit's, a website I almost never use. People just like to complain.

How good was the Spotify UI 10 years ago? I have no idea, but I suspect it was worse than it is now and has slowly been getting better over the years.

I also looked up the old logo and it's clearly much worse but people just don't celebrate logos improving the way people make fun of terrible new logos.

Don't look at the comments of the article if you want to stay positive.

I think this might play a really big role. I'm a teenager, and I and all the people I knew during school were very political. At parties people would occasionally talk about politics, in school talking about politics was very common, and people occasionally went to demonstrations together. During the EU Parliament election we had a school-wide election to see how our school would have voted. Basically, I think 95% of students, starting at about age 14, had some sort of idea about politics, and most probably had one party they preferred.

We were probably most concerned about climate change, inequality and Trump, Erdogan, Putin, all that kind of stuff.

The young people I know who are depressed are almost all very left wing and basically think capitalism and climate change will kill everyone except the very rich. But I don't know if they are depressed because of that (and my sample size is very small).

Deutsch has also written elsewhere about why he thinks AI doom is unlikely, and I think his other arguments on this subject are more convincing. For me personally, he is the person who gives me the greatest sense of optimism for the future. Some of his strongest arguments are:

- The creation of knowledge is fundamentally unpredictable, so having strong probabilistic beliefs about the future is misguided (at least when the time horizon is long enough for new knowledge to be created; of course you can still make predictions about the next 5 minutes). People are prone to extrapolate negative trends into the future and forget about the unpredictable creation of knowledge. Deutsch might call AI doom a kind of Malthusianism, arguing that LWers are just extrapolating AI growth and the current state of unalignment out into the future, but are forgetting about the knowledge that is going to be created in the next years and decades.

- He thinks that if some dangerous technology is invented, the way forward is never to halt progress, but to always advance the creation of knowledge and wealth. Deutsch argues that knowledge, the creation of wealth and our unique ability to be creative will let us humans overcome every problem that arises. He argues that the laws of physics allow any interesting problem to be solved.

- Deutsch makes a clear distinction between persons and non-persons. For him a person is a universal explainer and a being that is creative. That makes humans fundamentally different from other animals. He argues that to create digital persons we will have to solve the philosophical problem of what personhood is and how human creativity arises. If an AI is not a person/creative universal explainer, it won't be creative, and so humanity won’t have a hard time stopping it from doing something dangerous. He is certain that current ML technology won’t lead to creativity, and so won’t lead to superintelligence.

- Once we manage to create AIs that are persons/creative universal explainers, he thinks we will be able to reason with them and convince them not to do anything evil. Deutsch is a moral realist and thinks any AI cleverer than humans will also be intelligent enough to come up with better ethics, so even if it could kill us, it won’t. For him all evil arises from a lack of knowledge. So a superintelligence would, by definition, be super moral.

I find some of these arguments convincing, and some not so much. But for now I find his specific kind of optimism to be the strongest argument against AI doom. These arguments are mostly taken from his second book. If you want to learn more about his views on AI, this video might be a good place to start (although I haven't watched it yet).

I use a program called plucky that should be available on Linux (see here). It's very restrictive: you set a timer, e.g. 2 hours or 5 days, and have to wait that long to edit the rules you set yourself.

Edit: Just saw that the next comment mentions plucky, but they didn't mention that it's available for Linux...