(Not to be confused with the Trevor who works at Open Phil)

This got me thinking: how much space would it take up at Lighthaven to print a copy of every LessWrong post ever written? If it's not too many pallets, it would probably be a worthy precaution.

Develop metrics that predict which members of the technical staff have aptitude for world modelling.

In the Sequences post Faster than Science, Yudkowsky wrote:

> there are queries that are not binary—where the answer is not "Yes" or "No", but drawn from a larger space of structures, e.g., the space of equations. In such cases it takes far more Bayesian evidence to promote a hypothesis to your attention than to confirm the hypothesis.
>
> If you're working in the space of all equations that can be specified in 32 bits or less, you're working in a space of 4 billion equations. It takes far more Bayesian evidence to raise one of those hypotheses to the 10% probability level, than it requires further Bayesian evidence to raise the hypothesis from 10% to 90% probability.
>
> When the idea-space is large, coming up with ideas worthy of testing, involves much more work—in the Bayesian-thermodynamic sense of "work"—than merely obtaining an experimental result with p<0.0001 for the new hypothesis over the old hypothesis.
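The asymmetry in the 32-bit example can be checked with a few lines of arithmetic. This is a minimal sketch, assuming a uniform prior over all 2^32 (about 4.3 billion) equations and measuring evidence in bits, i.e. log2 of the likelihood ratio needed to shift the odds; the function names are illustrative and not from the post.

```python
import math

# Assumption: uniform prior over every equation expressible in 32 bits.
N_HYPOTHESES = 2 ** 32


def odds(p: float) -> float:
    """Convert a probability p into odds in favour, p / (1 - p)."""
    return p / (1 - p)


def bits_needed(p_from: float, p_to: float) -> float:
    """Bits of evidence (log2 of the required likelihood ratio) to move a
    hypothesis from probability p_from to probability p_to."""
    return math.log2(odds(p_to) / odds(p_from))


prior = 1 / N_HYPOTHESES
to_attention = bits_needed(prior, 0.10)  # promote one equation from 1-in-2^32 to 10%
to_confirm = bits_needed(0.10, 0.90)     # confirm it, from 10% to 90%

print(f"prior -> 10%: {to_attention:.1f} bits")
print(f"10% -> 90%:   {to_confirm:.1f} bits")
```

Under these assumptions, roughly 29 bits are needed just to bring one equation up to 10%, versus about 6.3 bits (a likelihood ratio of 81) to take it from 10% to 90%, which is the asymmetry the quote describes.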

This, combined with the way news outlets and high school civics classes describe an alternate reality that looks realistic to lawyers, salespeople, and executives but is too simple, cartoonish, narrative-driven, and detached from reality for quant people to feel good about diving into, implies that redirecting some dev-hours toward efficient world-modelling upskilling is low-hanging fruit (e.g. figuring out a way to distill, and hand them, a significance-weighted list of concrete information about the history and root causes of the US government's focus on domestic economic growth as a national security priority).

Prediction markets don't work for this metric, as they measure the final product, not aptitude/expected thinkoomph. For example, a person who feels good thinking and reading about the SEC, but doesn't feel good thinking and reading about the 2008 recession or COVID, will have a worse Brier score on matters related to the root cause of why AI policy is the way it is. But feeling good about reading about e.g. the 2008 recession will not consistently get reasonable people to the point where they grok modern economic warfare and the policies and mentalities that emerge from the ensuing contingency planning. Seeing if you can fix that first is one of a long list of prerequisites for seeing what they can actually do, and handing someone a sheet of paper that streamlines the process of fixing long lists of hiccups like these is one way to do it.

Figuring-out-how-to-make-someone-feel-alive-while-performing-useful-task-X is an optimization problem (see Please Don't Throw Your Mind Away). It has substantial overlap with measuring whether someone is terminally rigid/narrow-skilled, or whether they merely failed to understand the topology of the process of finding out what they can comfortably build interest in. Dumping existing books, 1-on-1s, and documentaries on engineers sometimes works, but it comes from an old norm and is grossly inefficient and uninspired compared to what Anthropic's policy team is actually capable of. For example, imagine putting together a really good fanfic where HPJEV/Keltham is an Anthropic employee on your team doing everything I've described here and much more, then printing it out and handing it to people whom you have, in reality, already predicted to have world-modelling aptitude. Assuming it works great and goes really well, I'd consider that the baseline for what something sufficiently optimized and novel would need to look like to be considered par.

> The essay is about something I call “psychological charge”, where the idea is that there are two different ways to experience something as bad. In one way, you kind of just neutrally recognize a thing as bad.

Nitpick: a better way to write it is "the idea is there are at least two different ways..." or "major ways" etc., to highlight that those are two major categories you've noticed, but there might be more. The primary purpose of knowledge work is still to create cached thoughts inside someone's mind, and, as in programming, it's best to make your concepts as modular as possible so that you and others are primed to refine them further and/or notice more opportunities to apply them.

Interestingly enough, this applies to corporate executives and bureaucracy leaders as well. Many see the world in a very zero-sum way (300 years ago, and for most of history before that, virtually all top intellectuals in virtually all civilizations saw history as cyclical: civilizational progress was a myth, and everything was an endless cycle of power being won and lost by people born or raised to be unusually strategically competitive). But they fail to realize that, in aggregate, their contempt for cause-having people ("oh, so you think you're better than me, huh? you think you're hot shit?") has turned into opposition to positive-sum people, which is itself a cause of sorts, though one averse to activism, assembly, and anything in that narrow brand of audacious display.

It doesn't help that most 'idealistic' causes throughout human history had terrible epistemic backing.

If you converse directly with LLMs (e.g. instead of through a proxy or some very clever tactic I haven't thought of yet), which I don't recommend, especially not while describing how your thought process works, one thing to do is regularly ask the model: "what does my IQ seem like based on this conversation? I already know this is something you can do. must include number or numbers".

Humans are much smarter, and better at tracking results rather than appearances, but feedback from results is pretty delayed, and LLMs have quite a bit of information about intelligence to draw from. Beyond just IQ, copy-pasting paragraphs describing concepts like thinkoomph works well too, but this post seems like something you wouldn't want to leave out of that standard prompt.

One thing that might be helpful is the neurology of executive functioning. Activity in any part of the brain suppresses activity elsewhere; on top of reinforcement, this implies that mental state is one of the core mechanisms for understanding self-improvement and getting better output.

Your effort must scale with the capabilities of the people trying to remove you from the system. You have to know whether they're the type of person who would immediately default to checking the will.

Better understanding of, and calibration about, what modern assassination practice you should actually expect is mandatory, because you're dealing with people putting some amount of thinkoomph into making your life plans fail, so your cost of survival is determined by what you expect your attack surface to look like. The appropriate cost and the cost you decide to pay can vary by OOMs depending on the circumstances, particularly the intelligence, resources, and fixations of the attacker. For example, the fact that this happened two weeks after assassination was all over the news is a fact you don't have the privilege of ignoring if you want the answer, even though that particular fact will probably turn out to be unhelpful, e.g. because the whole thing was probably just a suicide, given how god damn high the base rates of disease, accidents, and suicide are.

If this sounds wasteful, it is. It's why our civilization has largely moved past assassination, even though getting people out of the way is so instrumentally convergent for humans. We could end up in a cycle where assassination becomes popular again after people start excessively standing in each other's way (knowing they won't be killed for it), or in a stable cultural state like the Dune books or the John Wick universe, and we've just been living in a long trough where elites aren't physically forced to live their entire lives like mob bosses playing chess against invisible adversaries.

So don't think that if you only follow the rules of Science, that makes your reasoning defensible.

There is no known procedure you can follow that makes your reasoning defensible.

It was more of a 1970s-90s phenomenon, actually. If you compare the best 90s movies (e.g. Terminator 2) to the best 60s movies (e.g. 2001: A Space Odyssey), it's pretty clear that directors just got a lot better at doing more stuff per second. Older movies are absolutely a window into a higher/deeper culture or way of thinking, but OOMs less efficient than e.g. reading Kant/Nietzsche/Orwell/Asimov/Plato. But I wouldn't be surprised if modern film is severely mindkilling and older film is the best substitute.

The content-per-minute rate is too low; it follows 1960s film standards, when audiences weren't interested in science fiction films unless concepts were introduced to them very, very slowly (at the time, audiences were quite satisfied by this due to lower standards, similar to Shakespeare's audiences).

As a result, it is not enjoyable (people will be on their phones) unless you spend much of the film either thinking or talking with friends about how it might have affected the course of science fiction as a foundational work of the genre (almost every sci-fi fan and writer at the time watched it).

Tenet (2020), by Christopher Nolan, revolves around recursive thinking and responding to unreasonably difficult problems. Nolan introduces time-reversed material as the core dynamic, then iteratively increases the complexity from there, in ways specifically designed to ensure that as much of the audience as possible picks up as much recursive thinking as possible.

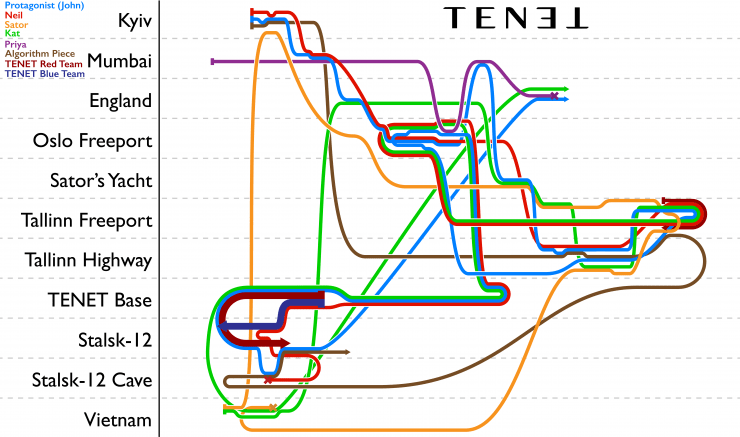

This chart describes the movement of all key characters and plot elements through the film. The film is actually very easy for most people to follow, but you can also print out a bunch of copies of the chart and hand them out beforehand (it isn't a spoiler so long as you don't look closely at the key).

Most of the value comes from the Eat the Instructions-style mentality, as both the characters and the viewer pick up unconventional methods of exploiting the time-reversing technology, only to be shown even more sophisticated strategies and walked through how they work and their full implications.

It also ties into scope sensitivity, but it focuses heavily on interfacing with other agents and their knowledge, and on responding dynamically to mistakes and failures (though not anticipating them), rather than simply orienting yourself toward mandatory number crunching.

The film touches on cooperation and cooperation failures under anomalous circumstances, particularly those introduced by the time-reversing technology.

The most interesting of these was also the easiest to miss:

The impossibility of building trust between the hostile forces from the distant future and the characters in the story who make up the opposing faction. The antagonist, dying of cancer and selected because his personality was predicted to be hostile to the present and sympathetic to the future, was simply sent instructions and resources from the future, and decided to act as their proxy despite ending up with a great life and being unable to verify the accuracy of what he was sent or the true goals of the hostile force. As a result, the protagonists ultimately build a faction that takes on a life of its own and dooms both their friends and the entire human race by playing a zero-sum survival game with the future faction, owing to their failure throughout the film to think sufficiently laterally and to adequately exploit the time-reversing technology.

Gwern gave a list in his Nootropics megapost.