All of Unnamed's Comments + Replies

The "Taboo bad faith" title doesn't fit this post. I had hoped from the opening section that it was going in that direction, but it did not.

Most obviously, the post kept relying heavily on the terms "bad faith" and "good faith" and that conceptual distinction, rather than tabooing them.

But also, it doesn't do the core intellectual work of replacing a pointer with its substance. In the opening scenario where someone accuses their conversation partner of bad faith, conveying something along the lines of 'I disapprove of how you're approaching this conversati...

I'm voting against including this in the Review, at max level, because I think it too-often mischaracterizes the views of the people it quotes. And it seems real bad for a post that is mainly about describing other people's views and then drawing big conclusions from that data to inaccurately describe those views and then draw conclusions from inaccurate data.

I'd be interested in hearing about this from people who favor putting this post in the review. Did you check on the sources for some of Elizabeth's claims and think that she described them well? Did yo...

As I understand it, Scott's post was making basically the same conceptual distinction as this Andrew Gelman post, where Gelman writes:

...One of the big findings of baseball statistics guru Bill James is that minor-league statistics, when correctly adjusted, predict major-league performance. James is working through a three-step process: (1) naive trust in minor league stats, (2) a recognition that raw minor league stats are misleading, (3) a statistical adjustment process, by which you realize that there really is a lot of information there, if you know

savvy don't (just) have more skills to extract signal from a "naturally" occurring source of lies. They're collaborating with it!

This (from your tweet) is false, and your post here even has a straightforward argument against it (with the honest cop and the corrupt cop both savvy enough to discern a lightly veiled bribery attempt). Savviness about extracting information from a source does not imply complicity with the source.

Trying to make this more intuitive: consider a prediction market which is currently priced at x, where each share will pay out $1 if it resolves as True.

If you think it's underpriced because your probability is y, where y>x, then your subjective EV from buying a share is y-x. e.g., If it's priced at $0.70 and you think p=0.8, your subjective EV from buying a share is $0.10.

If you think it's overpriced because your probability is z, where z<x, then your subjective EV from selling a share is x-z. e.g., If it's priced at $0.70 and you think p=0.56, your...
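A minimal sketch of the subjective-EV arithmetic above, assuming each share pays $1 if the market resolves True and ignoring fees and capital costs. The function names are hypothetical.

```python
def ev_buy(price: float, my_prob: float) -> float:
    """Subjective expected value of buying one $1 share at `price`."""
    # You pay `price` and receive $1 with probability `my_prob`.
    return my_prob * 1.0 - price


def ev_sell(price: float, my_prob: float) -> float:
    """Subjective expected value of selling one $1 share at `price`."""
    # You receive `price` now and owe $1 with probability `my_prob`.
    return price - my_prob * 1.0


if __name__ == "__main__":
    # Market priced at $0.70, as in the examples above.
    print(round(ev_buy(0.70, 0.80), 2))   # 0.1
    print(round(ev_sell(0.70, 0.56), 2))  # 0.14
```

The two functions are mirror images: buying gains when your probability is above the price, selling gains when it is below.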

This is a bet at 30% probability, as 42.86/142.86 = .30001.

That is the average of Alice's probability and Bob's probability. The fair bet according to equal subjective EV is at the average of the two probabilities; previous discussion here.
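The equal-EV claim can be checked directly. A sketch, assuming Alice thinks the event is more likely than Bob does, so Alice buys and Bob sells at price q: Alice's EV is p_A - q and Bob's is q - p_B, and setting them equal gives q = (p_A + p_B) / 2. The helper below also converts stakes into an implied probability, assuming the 142.86 total in the text is a 42.86 stake against a 100 stake. The probabilities in the usage lines are hypothetical, not Alice's and Bob's actual numbers.

```python
def fair_price(p_alice: float, p_bob: float) -> float:
    """Price at which buyer and seller have equal subjective EV per $1 share."""
    # Buyer (higher probability) gets p_hi - q; seller (lower) gets q - p_lo.
    # Setting p_hi - q == q - p_lo gives q = (p_hi + p_lo) / 2.
    return (p_alice + p_bob) / 2


def implied_probability(stake_yes: float, stake_no: float) -> float:
    """Probability implied when YES risks stake_yes to win stake_no."""
    return stake_yes / (stake_yes + stake_no)


if __name__ == "__main__":
    # Hypothetical probabilities, not taken from the original discussion.
    p_alice, p_bob = 0.6, 0.2
    q = fair_price(p_alice, p_bob)
    print(round(q, 2))                                  # 0.4
    print(round(p_alice - q, 2), round(q - p_bob, 2))   # 0.2 0.2
    # Stakes of 42.86 vs 100 give the 30% figure from the text.
    print(round(implied_probability(42.86, 100.0), 5))  # 0.30001
```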

I don't buy the way that Spencer tried to norm the ClearerThinking test. It sounds like he just assumed that people who took their test and had a college degree as their highest level of education had the same IQ as the portion of the general population with the same educational level, and similarly for all other education levels. Then he used that to scale how scores on the ClearerThinking test correspond to IQs. That seems like a very strong and probably inaccurate assumption.

Much of what this post and Scott's post are using the ClearerThinking IQ number...

I think that the way that Scott estimated IQ from SAT is flawed, in a way that underestimates IQ, for reasons given in comments like this one. This post kept that flaw.

I agree. You only multiply the SAT z-score by 0.8 if you're selecting people on high SAT score and estimating the IQ of that subpopulation, making a correction for regressional Goodhart. Rationalists are more likely selected for high g, which causes both SAT and IQ, so the z-score should be around 2.42, which means the estimate should be (100 + 2.42 * 15 - 6) = 130.3. From the link, the exact values should depend on the correlations between g, IQ, and SAT score, but it seems unlikely that the correction factor is as low as 0.8.
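A sketch of the two estimates being compared, using the inputs from the comment: an IQ z-score of about 2.42, an IQ standard deviation of 15, and the fixed -6 adjustment carried over from the original estimate (its rationale isn't restated here). The `shrink` parameter is a hypothetical name for the multiplicative correction; 0.8 is the value the comment argues against.

```python
def iq_from_sat_z(sat_z: float, shrink: float = 1.0, adjustment: float = -6.0) -> float:
    """Estimate mean IQ from a SAT z-score.

    shrink=0.8 applies a regression-to-the-mean correction (appropriate when
    selecting on SAT itself); shrink=1.0 carries the z-score over directly,
    as in the comment's calculation.
    """
    return 100 + shrink * sat_z * 15 + adjustment


if __name__ == "__main__":
    print(round(iq_from_sat_z(2.42), 1))              # 130.3
    print(round(iq_from_sat_z(2.42, shrink=0.8), 1))  # 123.0
```

The gap between the two lines (about 7 IQ points) is the size of the disagreement; as the comment notes, the right factor depends on the correlations among g, IQ, and SAT.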

..."Can crimes be discussed literally?":

- some kinds of hypocrisy (the law and medicine examples) are normalized

- these hypocrisies are / the fact of their normalization is antimemetic (OK, I'm to some extent interpolating this one based on familiarity with Ben's ideas, but I do think it's both implied by the post, and relevant to why someone might think the post is interesting/important)

- the usage of words like 'crime' and 'lie' departs from their denotation, to exclude normalized things

- people will push back in certain predictable ways on calling normalized thing

This post reads like it's trying to express an attitude or put forward a narrative frame, rather than trying to describe the world.

Many of these claims seem obviously false, if I take them at face value and take a moment to consider what they're claiming and whether it's true.

e.g., On the first two bullet points it's easy to come up with counterexamples. Some successful attempts to steer the future, by stopping people from doing locally self-interested & non-violent things, include: patent law ("To promote the progress of science and useful arts, by sec...

In America, people shopped at Walmart instead of local mom & pop stores because it had lower prices and more selection, so Walmart and other chain stores grew and spread while lots of mom & pop stores shut down. Why didn't that happen in Wentworld?

This comment caused it to occur to me that working in software development might produce a distorted view of this situation. In most types of companies, if you want to scale, you have to hire a lot more people to build more things and sell to more people. So scaling large is a much more natural thing to do. And cultural norms around that might then just spread to corporations where that’s less relevant.

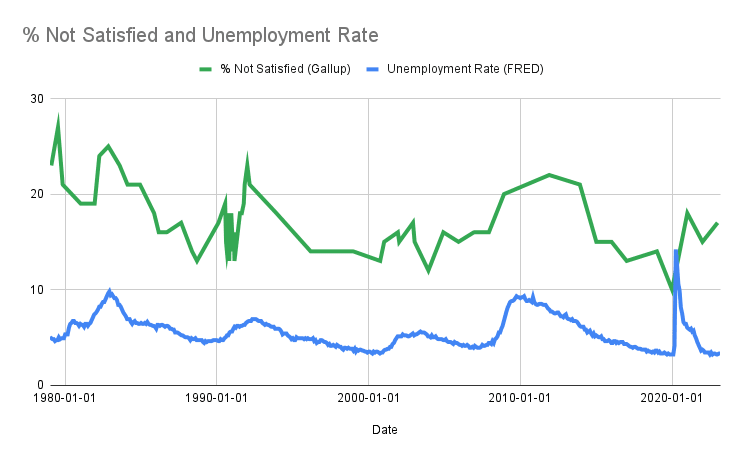

I made a graph of this and the unemployment rate; they're correlated at r=0.66 (with one data point for each time Gallup ran the survey, taking the unemployment rate on the closest day for which there's data). You can see both lines spike with every recession.

Are you telling me 2008 did actual nothing?

It looks like 2008 led to about a 1.3x increase in the number of people who said they were dissatisfied with their life.

It's common for much simpler Statistical Prediction Rules, such as linear regression or even simpler models, to outperform experts even when they were built to predict the experts' judgment.

Or "Defense wins championships."

With the ingredients he has, he has gotten a successful Barkskin Potion:

1 of the 1 times (100%) he brewed together Crushed Onyx, Giant's Toe, Ground Bone, Oaken Twigs, Redwood Sap, and Vampire Fang.

19 of the 29 times (66%) he brewed together Crushed Onyx, Demon Claw, Ground Bone, and Vampire Fang.

Only 2 other combinations of the in-stock ingredients have ever produced Barkskin Potion, both at under a 50% rate (4/10 and 18/75).

The 4-ingredient, 66% success rate potion looks like the best option if we're just going to copy something that has worked. Th
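One way to make "the best option if we're just going to copy something that has worked" concrete is to rank combinations by a lower confidence bound on their success rate, so that a 1-for-1 record doesn't outrank 19-for-29. This is a swapped-in technique (the Wilson score interval at roughly 95%), not necessarily how the original answer was chosen. The labels are shorthand, and the ingredients of the two "other" combinations aren't listed in the text.

```python
import math


def wilson_lower_bound(successes: int, trials: int, z: float = 1.96) -> float:
    """Lower end of the Wilson score interval for a binomial success rate."""
    if trials == 0:
        return 0.0
    p = successes / trials
    z2 = z * z
    center = p + z2 / (2 * trials)
    margin = z * math.sqrt(p * (1 - p) / trials + z2 / (4 * trials * trials))
    return (center - margin) / (1 + z2 / trials)


# (successes, trials) for each in-stock combination that has produced Barkskin.
records = {
    "6-ingredient (Onyx, Toe, Bone, Twigs, Sap, Fang)": (1, 1),
    "4-ingredient (Onyx, Claw, Bone, Fang)": (19, 29),
    "other combination A": (4, 10),
    "other combination B": (18, 75),
}

if __name__ == "__main__":
    ranked = sorted(records.items(), key=lambda kv: wilson_lower_bound(*kv[1]), reverse=True)
    for name, (s, n) in ranked:
        print(f"{name}: {s}/{n} raw={s / n:.2f} lower={wilson_lower_bound(s, n):.2f}")
```

With these records the 4-ingredient combination ranks first (lower bound near 0.47), while the 1-for-1 combination drops to about 0.21 because a single trial says little.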

Being Wrong on the Internet: The LLM generates a flawed forum-style comment, such that the thing you've been wanting to write is a knockdown response to this comment, and you can get a "someone"-is-wrong-on-the-internet drive to make the points you wanted to make. You can adjust how thoughtful/annoying/etc. the wrong comment is.

Target Audience Personas: You specify the target audience that your writing is aimed at, or a few different target audiences. The LLM takes on the persona of a member of that audience and engages with what you've written, with more ...

I don't think that the key element in the aging example is 'being about value claims'. Instead, it's that the question about what's healthy is a question that many people wonder about. Since many people wonder about that question, some people will venture an answer. Even if humanity hasn't yet built up enough knowledge to have an accurate answer.

Thousands of years ago many people wondered what the deal is with the moon and some of them made up stories about this factual (non-value) question whose correct answer was beyond them. And it plays out similarly t...

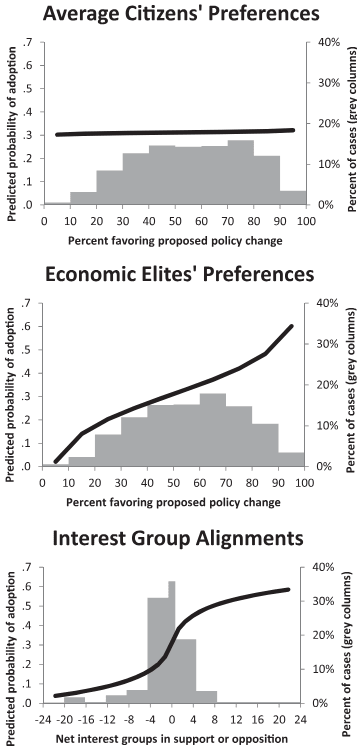

7% of the variance isn't negligible. Just look at the pictures (Figure 1 in the paper):

Yes, I did read the paper. And those are still extremely small effects and almost no predictability, no matter how you graph them (note the axis truncation and sparsity), even before you get into the question of "what is the causal status of any of these claims and why we are assuming that interest groups in support precede rather than follow success?"
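For scale, assuming the 7% is an R² from a linear fit, variance explained converts to a correlation by taking the square root, so it corresponds to r of about 0.26. A one-line check:

```python
import math

r_squared = 0.07  # share of variance explained, from the quoted comment
r = math.sqrt(r_squared)
print(round(r, 3))  # 0.265
```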

I got the same result: DEHK.

I'm not sure that there are no patterns in what works for self-taught architects, and if we were aiming to balance cost & likelihood of impossibility then I might look into that more (since I expect A,L,N to be the cheapest options with a chance to work), but since we're prioritizing impossibility I'll stick with the architects with the competent mentors.

Moore & Schatz (2017) made a similar point about different meanings of "overconfidence" in their paper The three faces of overconfidence. The abstract:

...Overconfidence has been studied in 3 distinct ways. Overestimation is thinking that you are better than you are. Overplacement is the exaggerated belief that you are better than others. Overprecision is the excessive faith that you know the truth. These 3 forms of overconfidence manifest themselves under different conditions, have different causes, and have widely varying consequences. It is a mist

You can go ahead and post.

I did a check and am now more confident in my answer, and I'm not going to try to come up with an entry that uses fewer soldiers.

Just got to this today. I've come up with a candidate solution just to try to survive, but haven't had a chance yet to check & confirm that it'll work, or to try to get clever and reduce the number of soldiers I'm using.

10 Soldiers armed with: 3 AA, 3 GG, 1 LL, 2 MM, 1 RR

I will probably work on this some more tomorrow.

Building a paperclipper is low-value (from the point of view of total utilitarianism, or any other moral view that wants a big flourishing future) because paperclips are not sentient / are not conscious / are not moral patients / are not capable of flourishing. So filling the lightcone with paperclips is low-value. It maybe has some value for the sake of the paperclipper (if the paperclipper is a moral patient, or whatever the relevant category is) but way less than the future could have.

Your counter is that maybe building an aligned AI is also low-value (...

Is this calculation showing that, with a big causal graph, you'll get lots of very weak causal relationships between distant nodes that should have tiny but nonzero correlations? And realistic sample sizes won't be able to distinguish those relationships from zero.

Andrew Gelman often talks about how the null hypothesis (of a relationship of precisely zero) is usually false (for, e.g., most questions considered in social science research).
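A rough sketch of why distant nodes look uncorrelated, assuming a linear-Gaussian chain of standardized variables where every edge has the same correlation (0.5 here is a hypothetical value). In such a chain the endpoint correlation is the product of the edge correlations, and the sample size needed to detect it uses the standard Fisher z approximation (two-sided alpha = 0.05, 80% power).

```python
import math


def chain_correlation(edge_r: float, n_edges: int) -> float:
    """Endpoint correlation in a linear-Gaussian chain with standardized nodes."""
    return edge_r ** n_edges


def n_to_detect(r: float, z_alpha: float = 1.96, z_power: float = 0.8416) -> int:
    """Approximate sample size to detect correlation r (Fisher z approximation)."""
    return math.ceil(((z_alpha + z_power) / math.atanh(r)) ** 2 + 3)


if __name__ == "__main__":
    for hops in (1, 3, 6, 10):
        r = chain_correlation(0.5, hops)
        print(f"{hops} edges: r = {r:.4f}, n needed ~ {n_to_detect(r):,}")
```

The correlation shrinks geometrically with distance while the required sample size grows roughly as 1/r², so a ten-edge path already needs millions of observations to distinguish its nonzero correlation from zero.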

A lot of people have this sci-fi image, like something out of Deep Impact, Armageddon, Don't Look Up, or Minus, of a single large asteroid hurtling towards Earth to wreak massive destruction. Or even massive vengeance, as if it was a punishment for our sins.

But realistically, as the field of asteroid collection gradually advances, we're going to be facing many incoming asteroids which will interact with each other in complicated ways, and whose forces will to a large extent balance each other out.

Yet doomers are somehow supremely confident in how the futur...

If everyone has his own asteroid impact, Earth will not be displaced because the impulse vectors will cancel each other out on average*. This is important because it will keep the trajectory equilibrium of Earth, which we have known about for ages from animals jumping up and down all the time around the globe in their games of survival. If only a few central players get asteroid impacts it's actually less safe! Safety advocates might actually cause the very outcomes that they fear!

*I've a degree in quantum physics and can derive everything from my model of the universe. This includes moral and political imperatives that physics dictate and thus most physicists advocate for.

They're critical questions, but one of the secret-lore-of-rationality things is that a lot of people think criticism is bad, because if someone criticizes you, it hurts your reputation. But I think criticism is good, because if I write a bad blog post, and someone tells me it was bad, I can learn from that, and do better next time.

I read this as saying 'a common view is that being criticized is bad because it hurts your reputation, but as a person with some knowledge of the secret lore of rationality I believe that being criticized is good because you can learn from it.'

And he isn't making a claim about the extent to which the existing LW/rationality community shares his view.

Seems like the main difference is that you're "counting up" with status and "counting down" with genetic fitness.

There's partial overlap between people's reproductive interests and their motivations, and you and others have emphasized places where there's a mismatch, but there are also (for example) plenty of people who plan their lives around having & raising kids.

There's partial overlap between status and people's motivations, and this post emphasizes places where they match up, but there are also (for example) plenty of people who put tons of ...

The economist RH Strotz introduced the term "precommitment" in his 1955-56 paper "Myopia and Inconsistency in Dynamic Utility Maximization".

Thomas Schelling started writing about similar topics in his 1956 paper "An essay on bargaining", using the term "commitment".

Both terms have been in use since then.

On one interpretation of the question: if you're hallucinating then you aren't in fact seeing ghosts, you're just imagining that you're seeing ghosts. The question isn't asking about those scenarios, it's only asking what you should believe in the scenarios where you really do see ghosts.

My updated list after some more work yesterday is

96286, 9344, 107278, 68204, 905, 23565, 8415, 62718, 83512, 16423, 42742, 94304

which I see is the same as simon's list, with very slight differences in the order.

More on my process:

I initially modeled location just by a k nearest neighbors calculation, assuming that a site's location value equals the average residual of its k nearest neighbors (with location transformed to Cartesian coordinates). That, along with linear regression predicting log(Performance), got me my first list of answers. I figured that li
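A minimal sketch of the location step described above, assuming each site has a latitude/longitude and a residual from a separate regression on log(Performance) (that regression isn't shown). Coordinates are mapped onto a unit sphere so straight-line distance respects longitude wraparound. `k`, the function names, and the sample sites are hypothetical.

```python
import math


def to_cartesian(lat_deg: float, lon_deg: float) -> tuple[float, float, float]:
    """Map latitude/longitude (degrees) to a point on the unit sphere."""
    lat, lon = math.radians(lat_deg), math.radians(lon_deg)
    return (math.cos(lat) * math.cos(lon), math.cos(lat) * math.sin(lon), math.sin(lat))


def knn_location_value(target, sites, k: int = 3, exclude_self: bool = True) -> float:
    """Average residual of the k nearest sites to `target`.

    `target` is (lat, lon); `sites` is a list of (lat, lon, residual).
    """
    tx = to_cartesian(*target)
    dists = []
    for lat, lon, resid in sites:
        d = math.dist(tx, to_cartesian(lat, lon))
        if exclude_self and d == 0.0:
            continue  # don't let an existing site vote for itself
        dists.append((d, resid))
    nearest = sorted(dists)[:k]
    return sum(resid for _, resid in nearest) / len(nearest)


# Illustrative sites: (lat, lon, residual of log(Performance)).
example_sites = [(10, 10, 0.2), (10, 11, 0.4), (11, 10, 0.3), (-40, 120, -0.5)]

if __name__ == "__main__":
    print(round(knn_location_value((10.5, 10.5), example_sites, k=3), 3))
```

Excluding a site's own residual matters when scoring existing sites; otherwise the location term just echoes the residual it is meant to explain.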

Did a little robustness check, and I'm going to swap out 3 of these to make it:

96286, 23565, 68204, 905, 93762, 94408, 105880, 9344, 8415, 62718, 80395, 65607

To share some more:

I came across this puzzle via aphyer's post, and got inspired to give it a try.

Here is the fit I was able to get on the existing sites (Performance vs. Predicted Performance). Some notes on it:

Seems good enough to run with. None of the highest predicted existing sites had a large negative residual, and the highest predicted new sites give some buffer.

Three observations I made along ...

My current choices (in order of preference) are

96286, 23565, 68204, 905, 93762, 94408, 105880, 8415, 94304, 42742, 92778, 62718

What's "Time-Weighted Probability"? Is that just the average probability across the lifespan of the market? That's not a quantity which is supposed to be calibrated.

e.g., Imagine a simple market on a coin flip, where forecasts of p(heads) are made at two times: t1 before the flip and t2 after the flip is observed. In half of the cases, the market forecast is 50% at t1 and 100% at t2, for an average of 75%; in those cases the market always resolves True. The other half: 50% at t1, 0% at t2, avg of 25%, market resolves False. The market is underconfident if you take this average, but the market is perfectly calibrated at any specific time.
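The coin-flip example can be checked with a tiny enumeration. A sketch, assuming the two equally likely histories described above; "calibrated" here means that, among forecasts at a given level, the share that resolve True matches that level.

```python
from collections import defaultdict

# Each history: (forecast at t1, forecast at t2, resolved True?)
histories = [
    (0.5, 1.0, True),   # heads
    (0.5, 0.0, False),  # tails
]


def calibration(pairs):
    """Map each forecast level to the fraction of those forecasts that resolved True."""
    buckets = defaultdict(list)
    for forecast, outcome in pairs:
        buckets[forecast].append(outcome)
    return {f: sum(outs) / len(outs) for f, outs in sorted(buckets.items())}


if __name__ == "__main__":
    at_t1 = [(t1, y) for t1, _, y in histories]
    at_t2 = [(t2, y) for _, t2, y in histories]
    time_avg = [((t1 + t2) / 2, y) for t1, t2, y in histories]
    print("t1:", calibration(at_t1))            # {0.5: 0.5}
    print("t2:", calibration(at_t2))            # {0.0: 0.0, 1.0: 1.0}
    print("time-avg:", calibration(time_avg))   # {0.25: 0.0, 0.75: 1.0}
```

Each snapshot is perfectly calibrated, but the time-averaged forecasts (25% and 75%) resolve at 0% and 100%, so averaging over the market's lifespan makes it look underconfident.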

Have you looked at other ways of setting up the prior to see if this result still holds? I'm worried that the way you've set up the prior is not very natural, especially if (as it looks at first glance) the Stable scenario forces p(Heads) = 0.5 and the other scenarios force p(Heads|Heads) + p(Heads|Tails) = 1. Seems weird to exclude "this coin is Headsy" from the hypothesis space while including "This coin is Switchy".

Thinking about what seems most natural for setting up the prior: the simplest scenario is where flips are serially independent. You only ne...
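A sketch of a broader hypothesis space, assuming flips form a first-order Markov chain described by a = p(Heads | previous Heads) and b = p(Heads | previous Tails), with a uniform prior over a grid. Serially independent coins are the diagonal a = b (so a Headsy coin such as a = b = 0.7 is included), Switchy coins have a low a and a high b, and the first flip is treated as 50/50. The grid resolution, function names, and example sequences are hypothetical.

```python
import itertools

GRID = [round(0.05 + 0.1 * i, 2) for i in range(10)]  # 0.05, 0.15, ..., 0.95


def likelihood(seq: str, a: float, b: float) -> float:
    """P(seq | a, b) for a Markov coin; seq is a string of 'H'/'T'."""
    prob = 0.5  # first flip assumed 50/50
    for prev, cur in zip(seq, seq[1:]):
        p_heads = a if prev == "H" else b
        prob *= p_heads if cur == "H" else 1 - p_heads
    return prob


def posterior(seq: str) -> dict[tuple[float, float], float]:
    """Posterior over (a, b) grid points under a uniform prior."""
    weights = {(a, b): likelihood(seq, a, b) for a, b in itertools.product(GRID, GRID)}
    total = sum(weights.values())
    return {ab: w / total for ab, w in weights.items()}


def posterior_mean(post: dict[tuple[float, float], float]) -> tuple[float, float]:
    """Posterior means of a and b."""
    mean_a = sum(a * w for (a, _), w in post.items())
    mean_b = sum(b * w for (_, b), w in post.items())
    return mean_a, mean_b


if __name__ == "__main__":
    for seq in ("HTHTHTHTHT", "HHHHHHHHTH"):
        a, b = posterior_mean(posterior(seq))
        print(f"{seq}: E[a] = {a:.2f}, E[b] = {b:.2f}")
```

Because every (a, b) pair gets prior mass, the data can favor Headsy, Switchy, or independent coins without any of them being ruled out in advance.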

Try memorizing their birthdates (including year).

That might be different enough from what you've previously tried to memorize (month & day) to not get caught in the tangle that has developed.

My answer to "If AI wipes out humanity and colonizes the universe itself, the future will go about as well as if humanity had survived (or better)" is pretty much defined by how the question is interpreted. It could swing pretty wildly, but the obvious interpretation seems ~tautologically bad.

Agreed, I can imagine very different ways of getting a number for that, even given probability distributions for how good the future will be conditional on each of the two scenarios.

A stylized example: say that the AI-only future has a 99% chance of being mediocre and...

The time on a clock is pretty close to being a denotative statement.

Batesian mimicry is optimized to be misleading; "I'll get to it tomorrow" is denotatively false; "I did not have sexual relations with that woman" is ambiguous as to its conscious intent to be denotatively false.

Structure Rebel, Content Purist: people who disagree with me are lying (unless they say "I think that", "My view is", or similar)

Structure Rebel, Content Neutral: people who disagree with me are lying even when they say "I think that", "My view is", or similar

Structure Rebel, Content Rebel: trying to unlock the front door with my back door key is a lie

How do you get a geocentric model with ellipses? Venus clearly does not go in an ellipse around the Earth. Did Riccioli just add a bunch of epicycles to the ellipses?

Googling... oh, it was a Tychonic model, where Venus orbits the sun in an ellipse (in agreement with Kepler), but the sun orbits the Earth.

Kepler's ellipses wiped out the fully geocentric models where all the planets orbit around the Earth, because modeling their orbits around the Earth still required a bunch of epicycles and such, while modeling their orbits around the sun now involved a simp...

Here is Yudkowsky (2008), "Artificial Intelligence as a Positive and Negative Factor in Global Risk":

...Friendly AI is not a module you can instantly invent at the exact moment when it is first needed, and then bolt on to an existing, polished design which is otherwise completely unchanged.

The field of AI has techniques, such as neural networks and evolutionary programming, which have grown in power with the slow tweaking of decades. But neural networks are opaque—the user has no idea how the neural net is making its decisions—and cannot easily be rendered unopaq

Also, chp 25 of HPMOR is from 2010 which is before CFAR.


There are a couple of errors in your table of interpretations. For "actual score = subjective expected", the second half of the interpretation "prediction = 0.5 or prediction = true probability" got put on a new line in the "Comparison score" column instead of staying together in the "Interpretation" column, and similarly for the next one.

I posted a brainstorm of possible forecasting metrics a while back, which you might be interested in. It included one (which I called "Points Relative to Your Expectation") that involved comparing a forecaster's (Brier or other) score with the score that they'd expect to get based on their probability.
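A sketch of a "Points Relative to Your Expectation" style metric for the Brier score, assuming binary outcomes. If you forecast p and your probability is correct, your expected Brier score is p(1 - p)^2 + (1 - p)p^2 = p(1 - p); the metric compares that with the score you actually got. The function names and the sign convention (positive means better than expected) are assumptions, not necessarily how the original brainstorm defined it.

```python
def brier(p: float, outcome: bool) -> float:
    """Brier score for a binary forecast (lower is better)."""
    return (p - float(outcome)) ** 2


def expected_brier(p: float) -> float:
    """Brier score you'd expect if the true probability equals your forecast p."""
    # p * (1 - p)**2 + (1 - p) * p**2 simplifies to p * (1 - p).
    return p * (1 - p)


def points_relative_to_expectation(p: float, outcome: bool) -> float:
    """Expected minus actual Brier score; positive means better than expected."""
    return expected_brier(p) - brier(p, outcome)


if __name__ == "__main__":
    print(points_relative_to_expectation(0.5, True))   # 0.0
    print(points_relative_to_expectation(0.9, True))   # about 0.08
    print(points_relative_to_expectation(0.9, False))  # about -0.72
```

At p = 0.5 the difference is zero whichever way the event resolves, and it averages to zero whenever the forecast equals the true probability, which matches the "prediction = 0.5 or prediction = true probability" interpretation in the table.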

This post begins:

The second paragraph uses the term "bad faith" or "goo...