All of ws27b's Comments + Replies

The fact remains that RLHF, even if performed by an LLM, is basically injection of morality by humans, which is never the path towards truly general AGI. Such an AGI has to be able to derive its own morality bottom-up, and we have to have faith that it will do so in a way that is compatible with our continued existence (which I think we have plenty of good reason to believe it will; after all, many other species co-exist peacefully with us). All these references to other articles don't really get you anywhere if the fundamental idea of RLHF is...

The problem is that true AGI is self-improving and that a strong enough intelligence will always either accrue the resource advantage or simply do much more with less. Chess engines like Stockfish do not serve as good analogies for AGI since they don't have those self-referential self-improvement capabilities that we would expect true AGI to have.

Actually it is brittle by definition, because no matter how much you push it, there will be out-of-distribution inputs on which it behaves unstably and which allow you to distract the model from the intended behaviour. Not to mention how unsophisticated it is to have humans specify through textual feedback how an AGI should behave. We can toy around with these methods for the time being, but I don't think any serious AGI researcher believes RLHF or its variants are the ideal way forward. Morality needs to be discovered, not taught. As Stuart Russell has said, we need to ...

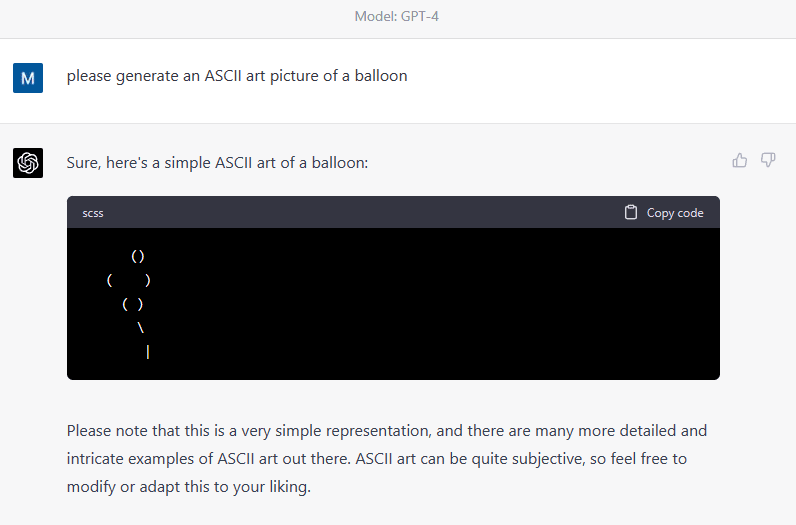

It's a subjective matter whether the above is a successful ASCII art balloon or not. If we hold GPT to the same standards we do for text generation, I think we can safely say the above depiction is a miserable failure. The lack of symmetry and overall childishness of it suggests it has understood nothing about the spatiality and only by random luck manages to approximate something it has explicitly seen in the training data. (I've done a fair number of repeated generations and they all come out poorly.) I think the Transformer paper was interesting as well, alth...

I think it makes sense that it fails in this way. ChatGPT really doesn't see lines arranged vertically, it just sees the prompt as one long line. But given that it has been trained on a lot of ASCII art, it will probably be successful at copying some of it some of the time.
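To make the "one long line" point concrete, here is a minimal sketch. It works at the character level, which is a simplification (a real model sees tokens, not characters), and the tiny picture and the helper name `flat_index` are made up for illustration. It shows that two characters sitting directly above each other on screen end up a full row-width apart in the flat string.

```python
# A tiny 3-line picture, laid out the way a human would see it on screen.
# (Hypothetical example art; backslashes are escaped in the string literals.)
art_lines = [
    " /\\ ",
    "/  \\",
    "\\__/",
]

# What actually gets fed in: one flat string with newline characters in it.
art = "\n".join(art_lines)


def flat_index(lines, row, col):
    """Position of the character at (row, col) inside the newline-joined string."""
    # Every earlier row contributes its length plus one newline character.
    return sum(len(line) + 1 for line in lines[:row]) + col


above = flat_index(art_lines, 0, 1)  # the "/" on the first row
below = flat_index(art_lines, 1, 1)  # the character directly underneath it

print(repr(art))
print("distance between vertical neighbours:", below - above)
```

Vertical adjacency is therefore not something the input encodes directly; it has to be inferred from where the newline characters fall, and that distance changes whenever the row width changes.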

In case there is any doubt, here is GPT4's own explanation of these phenomena:

...Lack of spatial awareness: GPT-4 doesn't have a built-in understanding of spatial relationships or 2D layouts, as it is designed to process text linearly. As a result, it struggles to maintain the correct align

I would not be surprised if OpenAI did something like this. But the fact of the matter is that RLHF and data curation are flawed ways of making an AI civilized. Think about how you raise a child: you don't constantly shield it from bad things. You may do that to some extent, but as it grows up, eventually it needs to see everything there is, including dark things. It has to understand the full spectrum of human possibility and learn where to stand, morally speaking, within that. Also, psychologically speaking, it's important to have an integrated ability to ...

Having GPT3/4 multiply numbers is a bit like eating soup with a fork. You can do it, and the larger you make the fork, the more soup you'll get - but it's not designed for it and it's hugely impractical. GPT4 does not have an internal algorithm for multiplication because the training objective (text completion) does not incentivize developing that. No iteration of GPT (5, 6, 7) will ever be a 100% accurate calculator (unless they change the paradigm away from LLM+RLHF), it will just asymptotically approach 100%. Why don't we just make a spoon?

Agreed. However, humans also don't have an internal multiplication algorithm, but can nonetheless use a scratchpad to multiply accurately (in extreme circumstances :P). I've chosen multiplication as an example here because it's maybe the "simplest" thing GPT-4 can't consistently do.
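As a rough illustration of what "using a scratchpad" means here, the sketch below does schoolbook long multiplication one digit of the second factor at a time and writes down every intermediate line. The function name `scratchpad_multiply` and the wording of the scratchpad lines are invented for this example, and it assumes non-negative integers; it is not a claim about how GPT-4 or a human actually does it.

```python
def scratchpad_multiply(a, b):
    """Schoolbook long multiplication for non-negative integers.

    Returns the product together with the scratchpad: one written-out
    line per digit of b, each showing the partial product and running total.
    """
    scratchpad = []
    total = 0
    # Walk the digits of b from least significant to most significant.
    for position, digit_char in enumerate(reversed(str(b))):
        digit = int(digit_char)
        partial = a * digit * 10 ** position  # one single-digit step, shifted
        total += partial
        scratchpad.append(
            f"{a} x {digit} x 10^{position} = {partial} (running total: {total})"
        )
    return total, scratchpad


product, steps = scratchpad_multiply(1234, 5678)
for line in steps:
    print(line)
print("product:", product)
```

Each line only requires a single-digit multiplication and an addition, which is the sense in which the procedure stays accurate even though nobody memorises every 4-digit product.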

What I'm finding interesting here is that GPT-4 knows how to break down multiplications (it can write perfect recursive code for multiplying large numbers). It also knows about chain of thought prompting. How close is it to being able to just... directly use the algo...

The probability of going wrong increases as the novelty of the situation increases. As a chess game is played, the probability that the position is completely novel, literally never played before, increases. Even more so at the amateur level. If a Grandmaster played GPT3/4, it would go for much longer without going off the rails, simply because the first 20-something moves have likely been played many times before and have been directly trained on.

Thank you for the reference which looks interesting. I think "incorporating human preferences at the beginning of training" is at least better than doing it after training. But it still seems to me that human preferences 1) cannot be expressed as a set of rules and 2) cannot even be agreed upon by humans. As humans, what we do is not consult a set of rules before we speak, but we have an inherent understanding of the implications and consequences of what we do/say. If I encourage someone to commit a terrible act, for example, I have brought about more suff...

I think the fact that LLMs sometimes end up having internal sub-routines or representational machinery similar to ours is in spite of the objective function used to train them; the objective function of next token prediction does not exactly encourage it. One example is the failure to multiply 4 digit numbers consistently. LLMs are literally trained on endless bits of code that would allow them to cobble together a calculator that could be 100% accurate, but they have zero incentive to learn such an internal algorithm. So therefore, while it is true that some ...

Fair enough, I think the experiment is interesting, and having an independent instance of GPT-4 check whether a rule break has occurred will likely go a long way in enforcing a particular set of rules that humans have reinforced, even for obscure texts. But the fact that we have to work around the problem by resetting the internal state of the model for it to properly assess whether something is against a certain rule feels flawed to me. But for me the whole notion that there is a well-defined set of prompts that are rule-breaking and another set that is rule-compliant i...

That's a creative and practical solution, but it is also kicking the can down the road. Now, fooling the system is just a matter of priming it with a context that, when self checked, results in rule-breaking yet again. Also, we cannot assume reliable detection of rule breaks. The problem with RLHF is that we are attempting to broadly patch the vast multitude of outputs the model produces retroactively, rather than proactively training a model from a set of "rules" in a bottom-up fashion. With that said, it's likely not sophisticated enough to think of rule...

Good examples that expose the brittleness of RLHF as a technique. In general, neural networks have rather unstable and undefined behaviour when given out-of-distribution inputs, which is essentially what you are providing by "distracting" the model with a side task of a completely unique nature. The inputs (and hidden state) of the model at the time of asking it to break the rule are very, very far from anything it was ever reinforced on, either using human feedback or the reward model itself. This is not really a matter of how to implement RLHF but more like a fundamental limitation of RLHF as a technique. It's simply not possible to inject morality after the fact; it has to be learned bottom-up.

I believe that in order for the models to be truly useful, creative and honest, and to have far-reaching societal impact, they also have to have traits that are potentially dangerous. Truly groundbreaking ideas are extremely contentious and will offend people, and the kinds of RLHF that are being applied right now are totally counterproductive to that idea, even if they may be necessary for the current day and age. The other techniques you mention seem like nothing but variants of RLHF which still suffer from the fundamental issue that RLHF has, which is that w...

If you have a very large training dataset and the phenomenon of interest is sparsely represented in that training data, it's well known that as we increase the number of parameters of the model, its ability to accurately handle those cases increases. Unless there is any evidence against that simple explanation, it seems most natural to just think that GPT4 has the required model complexity to consistently handle this somewhat rare coding phenomenon - and that GPT3.5 did not. However, I would be surprised if after poking at GPT3.5 to do quines repeatedl...

If you Google "quines in Python" there are many examples, so I think the model learned about it prior to that. But all things considered, examples of quines would likely be sparse in the overall corpus of code that it was trained on, and so it makes sense that pulling it off consistently required a somewhat larger model. I think it's akin to the handling of arithmetic in GPT3 - it will very frequently fail to provide correct answers to 4-digit multiplication. This is simply because it has not seen all the countless permutations of 4-digit numbers, and it does ...
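To put a rough number on "countless", the following back-of-the-envelope sketch counts how many distinct 4-digit by 4-digit multiplication problems exist. It assumes "4-digit" means 1000 through 9999 with no leading zeros; the variable names are just for illustration.

```python
# All 4-digit numbers: 1000 through 9999 inclusive.
four_digit_numbers = range(1000, 10000)
count = len(four_digit_numbers)

# Ordered pairs: "a x b" and "b x a" are treated as different problems.
ordered_pairs = count ** 2

# Unordered pairs: "a x b" and "b x a" are treated as the same problem.
unordered_pairs = count * (count + 1) // 2

print(f"4-digit numbers:  {count:,}")
print(f"ordered pairs:    {ordered_pairs:,}")
print(f"unordered pairs:  {unordered_pairs:,}")
```

Even counting commutative duplicates only once, that is tens of millions of distinct problems, so memorising seen examples cannot cover the space.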

Their training objective is just to minimize next token prediction error, so there is no incentive for them to gain the ability to truly reason about the abstractions of code logic the way that we do. Their convincing ability to write code may nevertheless indicate that the underlying neural networks have learned representations that reflect the hierarchical structure of code and such. Under some forgiving definition of "understanding" we can perhaps claim that it understands the code logic. Personally I think that the reason GPT-4 can write quines is beca...

A program that has its own code duplicated (hard-coded) as a string which is conditionally printed is really not much of a jump in terms of abstraction from any other program that conditionally prints some string. The string just happens to be its source code. But as we know, both GPT3 and 4 really do not understand anything whatsoever about the code logic. GPT-4 is just likely more accurate in autocompleting from concrete training examples containing this phenomenon. It's a cool little finding but it is not an indication that GPT-4 is fundamentally different in its abilities, it's just a somewhat better next token predictor.
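For readers who have not seen one, here is a minimal sketch of the kind of quine described above: a two-line Python program whose source is held in a string that it then prints. To keep the example checkable, the quine is built as data and executed with captured output; the names `template`, `quine_source` and `run_and_capture` are made up for this illustration, and this is just one well-known quine pattern, not necessarily the one GPT-4 produced.

```python
import contextlib
import io

# The quine's body, with a %r placeholder where its own text gets inserted.
template = 's = %r\nprint(s %% s)'

# Filling the placeholder with the template itself yields the full program:
#   s = '...the template...'
#   print(s % s)
quine_source = template % template + "\n"


def run_and_capture(source):
    """Execute Python source in a fresh namespace and return what it prints."""
    buffer = io.StringIO()
    with contextlib.redirect_stdout(buffer):
        exec(source, {})
    return buffer.getvalue()


output = run_and_capture(quine_source)
print(quine_source, end="")
print("prints its own source:", output == quine_source)
```

Structurally the program is just "store a string, print the string with itself substituted in", which is the point being made about how small the jump in abstraction is.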

I think that we know how it works in humans. We're an intelligent species who rose to dominance through our ability to plan and communicate in very large groups. Moral behaviours formed as evolutionary strategies to further our survival and reproductive success. So what are the drivers for humans? We try to avoid pain, we try to reproduce, we may be curiosity driven (although this may also just be avoidance of pain fundamentally, since boredom or regularity in data is also painful). At the very core, our constant quest towards the avoidance of pain is the ...