Should YouTube's algorithm recommend conspiracy theory videos? Should Twitter recommend tweets that disagree with the WHO? Should Facebook recommend things that'll make you angry?

There are countless such questions about recommender algorithms, usually with good arguments on both sides.

But the elephant in the room in all these arguments is that the problem isn't so much how to design the algorithm, but why we only have one choice.

My suggestion is that sites with recommender algorithms should have a system that lets everyone write their own algorithm, upload it, and use it instead of the company's algorithm, or use an algorithm uploaded by someone else.

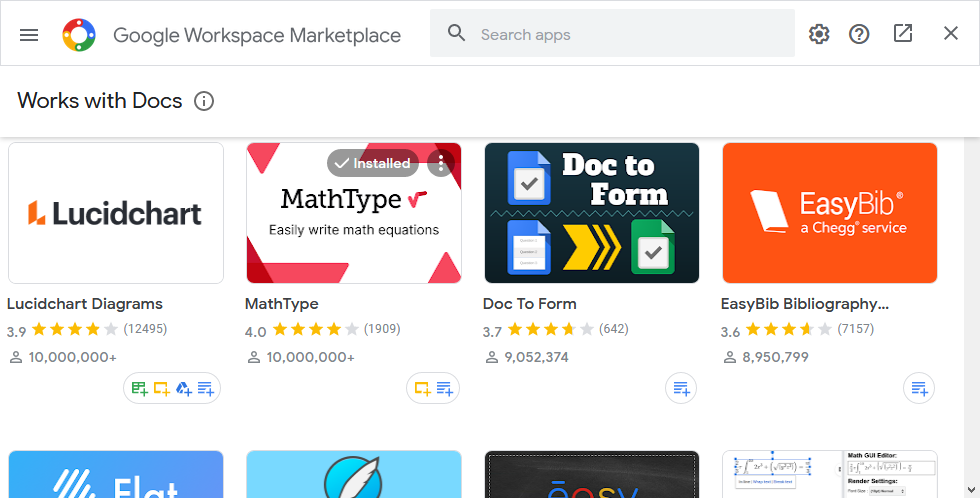

The algorithms would be selected through an add-on-shop-like interface, and could have further options for the users to tweak through a UI.

This would move the argument from "How should the one algorithm be designed?" to the much easier problem of designing an algorithm you're happy with and uploading it, or finding one that someone else already uploaded.

When it comes to large companies like Facebook, YouTube, Instagram, Twitter, etc., I would force them to implement such a system and to use a simple no-filter chronological algorithm as the default in their feeds (their own algorithm would then be offered as one choice among the others).
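To make the idea a bit more concrete, here is a minimal sketch of how such a system could be wired up. Everything in it is hypothetical and only meant as an illustration: the `Post` fields, the `AlgorithmRegistry` class, and the `most_liked` function (a crude stand-in for a company's engagement-based algorithm) are my own assumptions, not any real platform's API.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class Post:
    # Hypothetical post shape; a real platform would expose far more fields.
    author: str
    text: str
    timestamp: int  # seconds since epoch
    likes: int = 0


# An algorithm takes the candidate posts and returns them in display order.
RankingAlgorithm = Callable[[List[Post]], List[Post]]


def chronological(posts: List[Post]) -> List[Post]:
    """No-filter default: newest first, nothing removed."""
    return sorted(posts, key=lambda p: p.timestamp, reverse=True)


def most_liked(posts: List[Post]) -> List[Post]:
    """Stand-in for a company's engagement-driven algorithm (an assumption)."""
    return sorted(posts, key=lambda p: p.likes, reverse=True)


class AlgorithmRegistry:
    """Holds uploaded algorithms and remembers which one each user picked."""

    DEFAULT = "chronological"

    def __init__(self) -> None:
        self._algorithms: Dict[str, RankingAlgorithm] = {
            self.DEFAULT: chronological
        }
        self._choices: Dict[str, str] = {}

    def upload(self, name: str, algorithm: RankingAlgorithm) -> None:
        # Anyone, including the company itself, can add an algorithm.
        self._algorithms[name] = algorithm

    def choose(self, user: str, name: str) -> None:
        if name not in self._algorithms:
            raise KeyError(f"unknown algorithm: {name}")
        self._choices[user] = name

    def feed(self, user: str, posts: List[Post]) -> List[Post]:
        # Users who never chose anything get the chronological default.
        name = self._choices.get(user, self.DEFAULT)
        return self._algorithms[name](posts)
```

In this sketch the company's algorithm gets no special treatment: it is uploaded with `registry.upload("most_liked", most_liked)` like any other, and a user only sees it after calling `registry.choose(user, "most_liked")`. A real system would also have to run uploaded code safely, which this sketch ignores entirely.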

With everyone able to choose their own algorithms, we'll be one step closer to a social media landscape that works.

That is indeed a potential problem, and another person I told this idea to was skeptical that people would choose the "healthy" algorithms over more amusing ones similar to the current algorithms.

I think it's hard to know what the outcome of this would be. I'm optimistic, but the pessimistic side definitely has merit too. This is in a way similar to the internet: people were very optimistic about what it could do but failed to see the potential problems it would create, and when social media finally came, it painted a much sadder picture.

But, also as with the internet, I think people should have the choice. I wouldn't want anyone to restrict the algorithms I can use because some people may choose algorithms that someone thinks are harmful.

At the end of the day, if someone is really motivated to be in an echo chamber, nothing's gonna stop them. This idea might help those who find themselves in echo chambers because of the current algorithm but would rather be out.