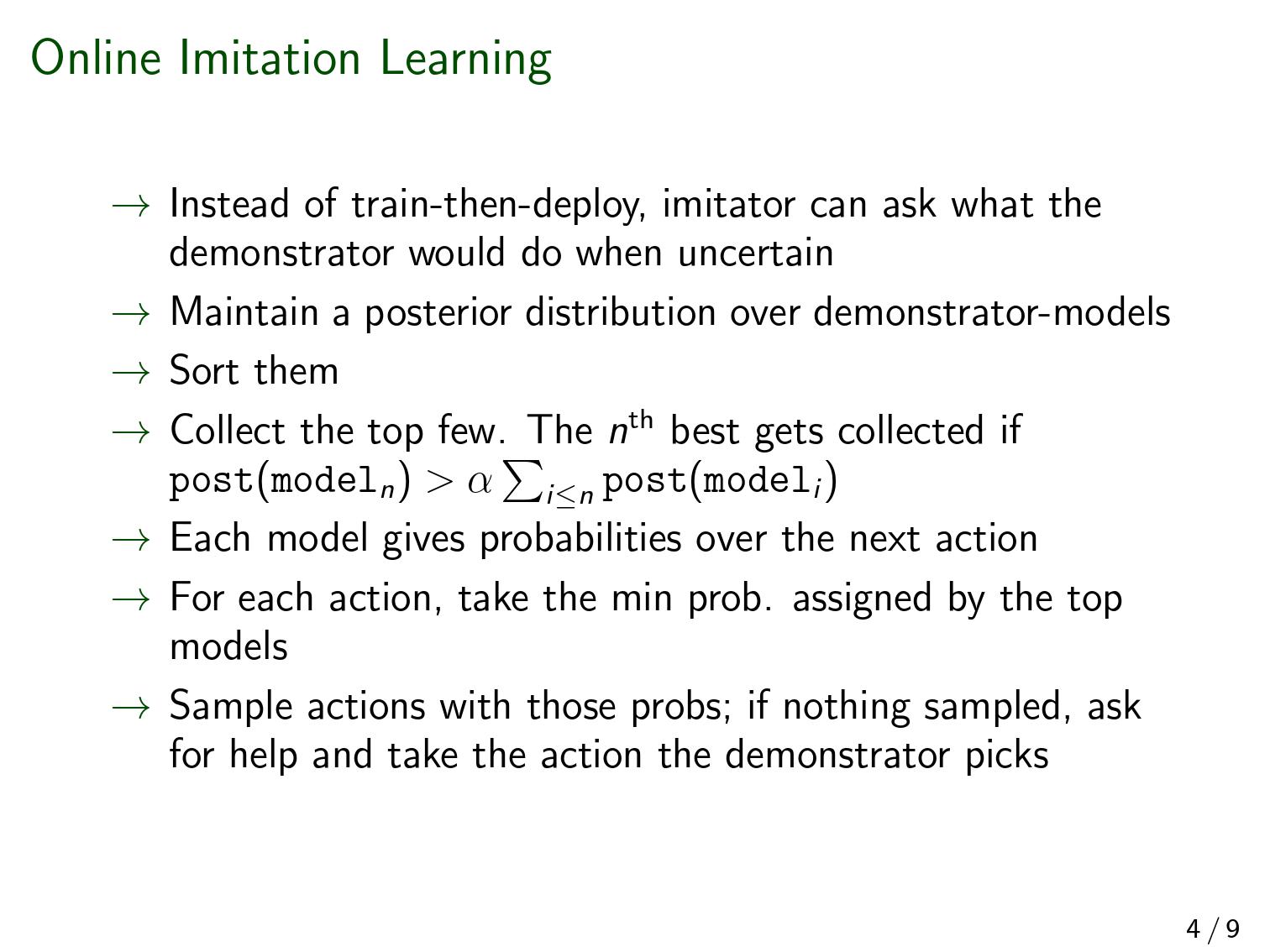

We've written a paper on online imitation learning, and our construction allows us to bound the extent to which mesa-optimizers could accomplish anything. This is not to say it will definitely be easy to eliminate mesa-optimizers in practice, but investigations into how to do so could look here as a starting point. The way to avoid outputting predictions that may have been corrupted by a mesa-optimizer is to ask for help when plausible stochastic models disagree about probabilities.

Here is the abstract:

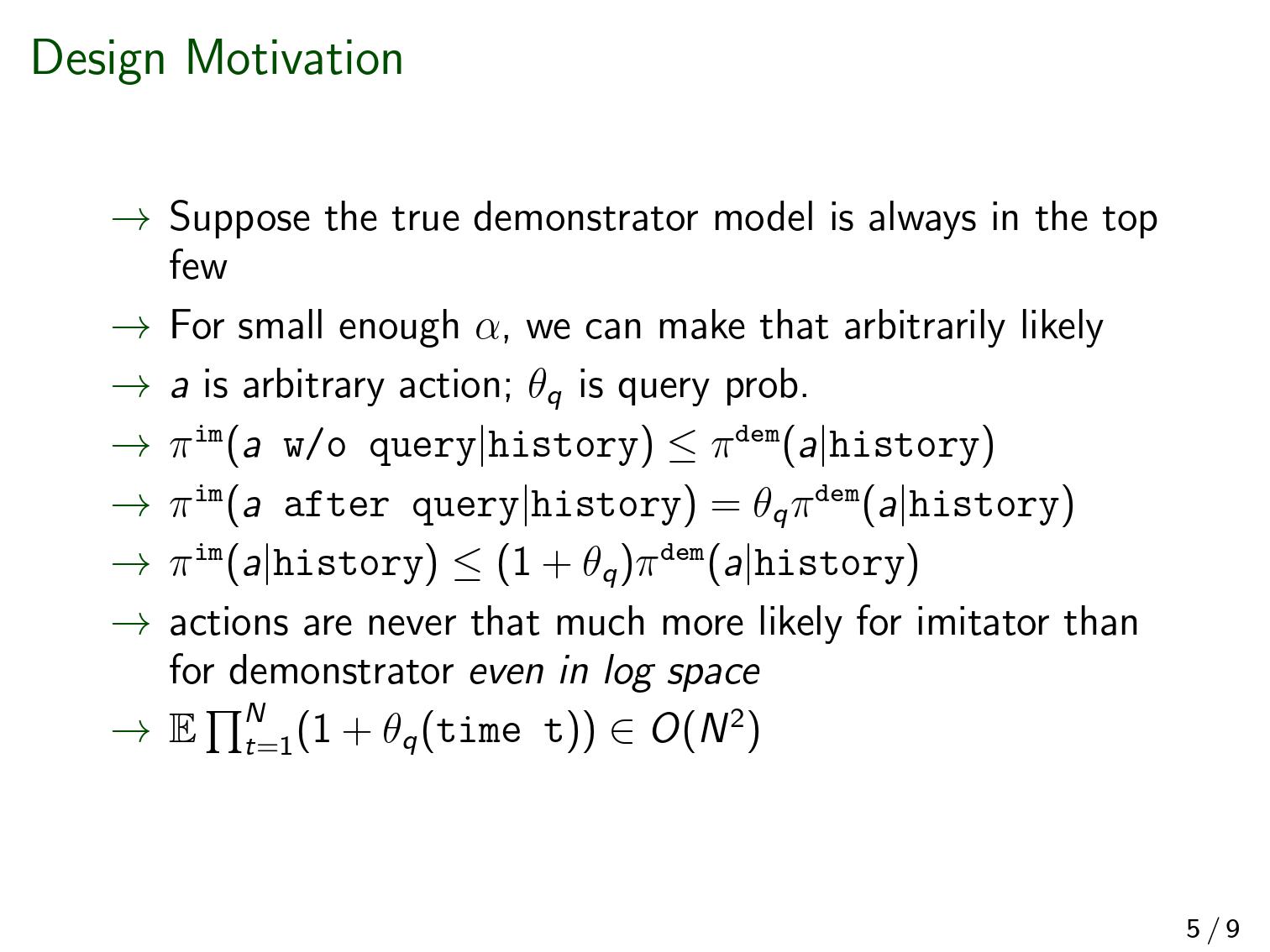

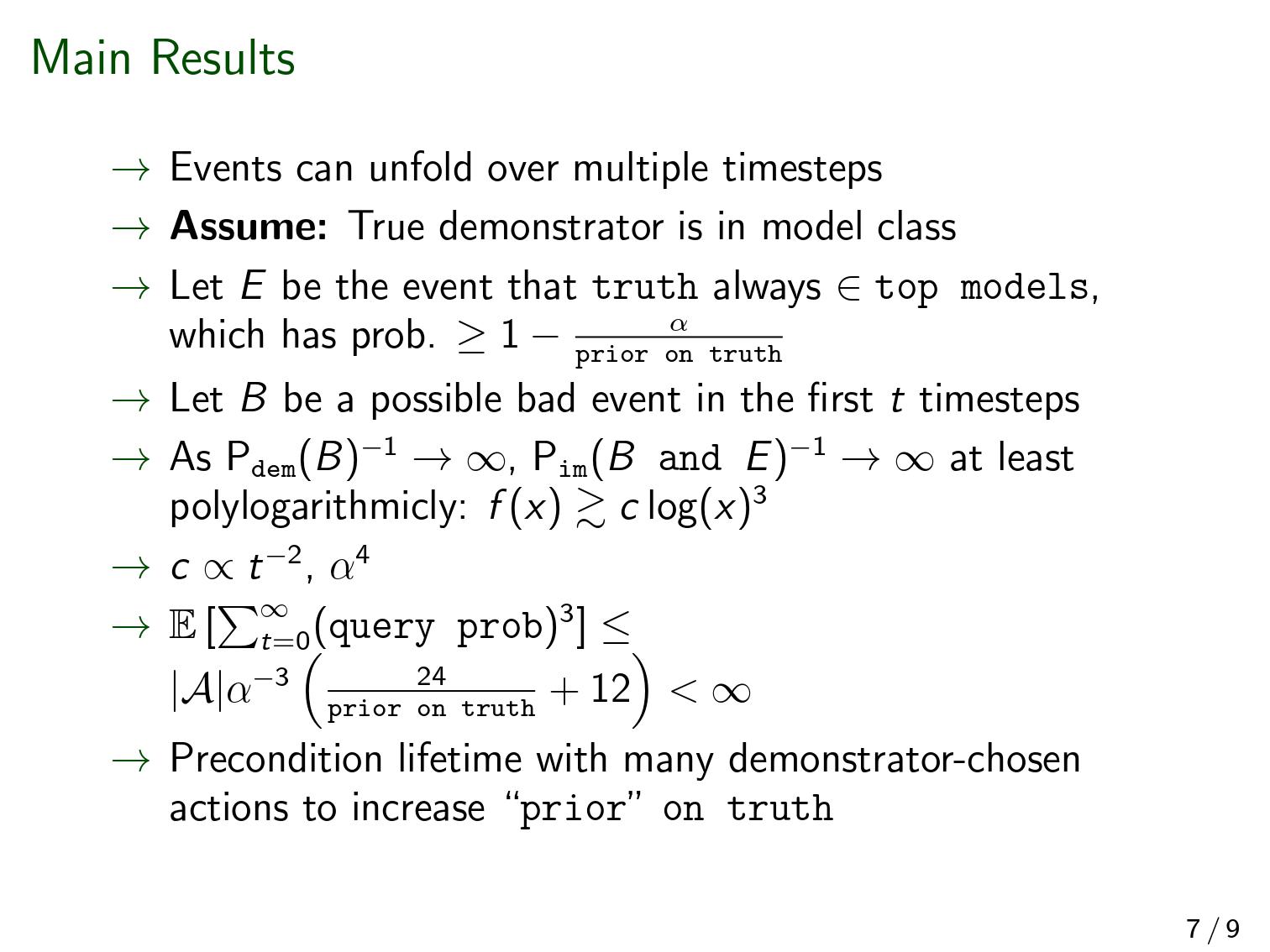

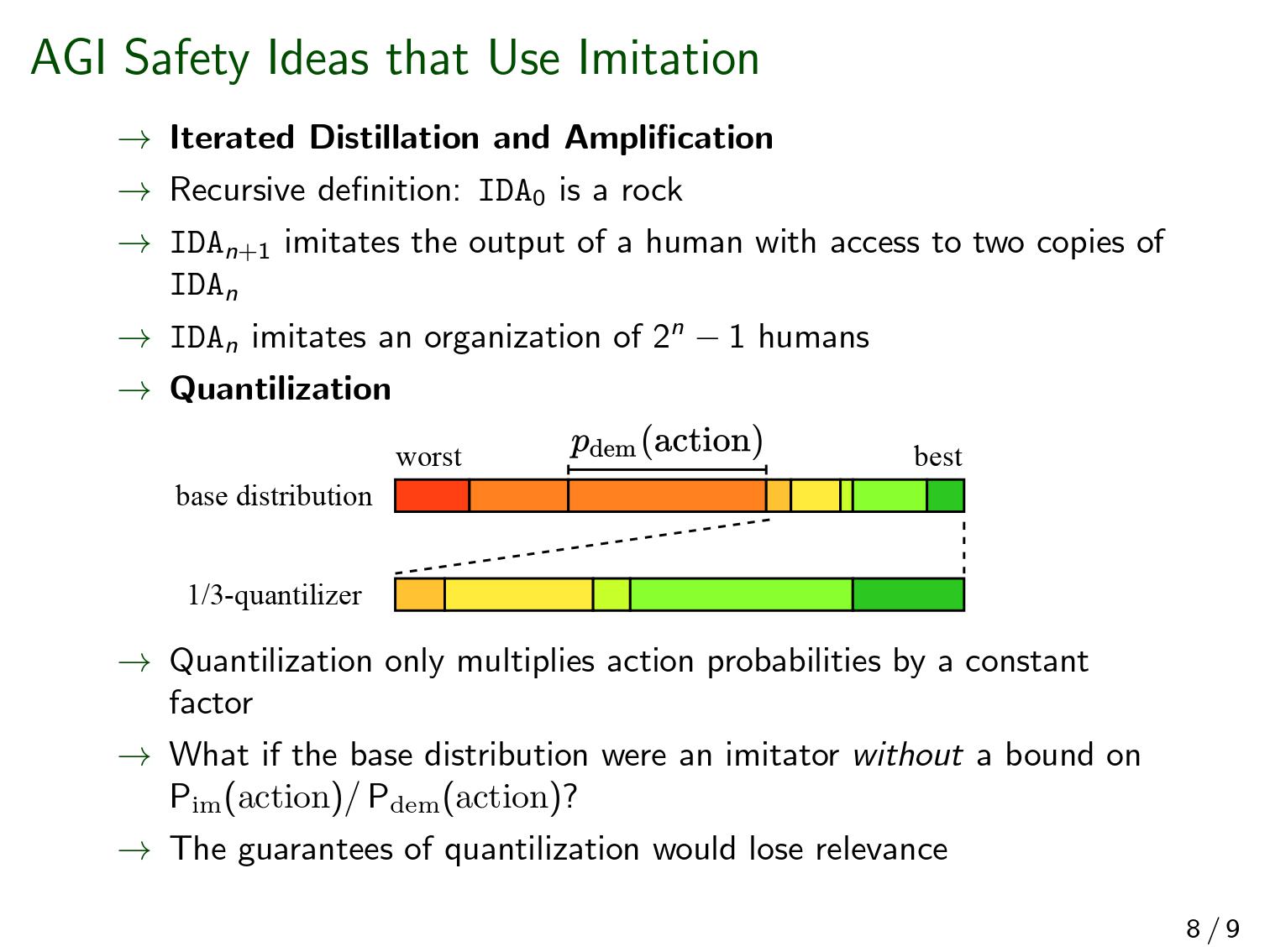

In imitation learning, imitators and demonstrators are policies for picking actions given past interactions with the environment. If we run an imitator, we probably want events to unfold similarly to the way they would have if the demonstrator had been acting the whole time. No existing work provides formal guidance in how this might be accomplished, instead restricting focus to environments that restart, making learning unusually easy, and conveniently limiting the significance of any mistake. We address a fully general setting, in which the (stochastic) environment and demonstrator never reset, not even for training purposes. Our new conservative Bayesian imitation learner underestimates the probabilities of each available action, and queries for more data with the remaining probability. Our main result: if an event would have been unlikely had the demonstrator acted the whole time, that event's likelihood can be bounded above when running the (initially totally ignorant) imitator instead. Meanwhile, queries to the demonstrator rapidly diminish in frequency.
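To make the "underestimate each action, query with the remainder" idea concrete, here is a minimal sketch. It assumes a finite class of demonstrator-models and a simple rule for which models count as plausible (posterior weight at least `alpha` times the largest weight). That rule, and the names `plausible_models`, `conservative_policy`, `act`, and `alpha`, are illustrative assumptions; the paper's actual construction may differ in its details.

```python
import random

def plausible_models(weights, alpha):
    """Indices of models whose posterior weight is at least alpha times the
    largest weight. (Assumed plausibility rule, for illustration only.)"""
    top = max(weights)
    return [i for i, w in enumerate(weights) if w >= alpha * top]

def conservative_policy(action_probs, weights, alpha):
    """action_probs[i][a] is model i's probability that the demonstrator
    takes action a in the current situation.

    Returns (under, query_prob): `under` is a semimeasure over actions that
    underestimates every plausible model, and `query_prob` is the leftover
    probability, which is spent on asking the demonstrator for help."""
    keep = plausible_models(weights, alpha)
    n_actions = len(action_probs[0])
    # For each action, take the smallest probability any plausible model assigns.
    under = [min(action_probs[i][a] for i in keep) for a in range(n_actions)]
    query_prob = 1.0 - sum(under)
    return under, query_prob

def act(action_probs, weights, alpha, rng=random):
    """Sample an action index from the semimeasure, or return "query"."""
    under, _ = conservative_policy(action_probs, weights, alpha)
    r = rng.random()
    cumulative = 0.0
    for action, p in enumerate(under):
        cumulative += p
        if r < cumulative:
            return action
    return "query"  # the sample landed in the leftover probability mass

# Two equally plausible models that disagree about the two actions.
models = [[0.9, 0.1], [0.6, 0.4]]
under, query_prob = conservative_policy(models, weights=[0.5, 0.5], alpha=0.1)
```

In this sketch, the more the plausible models disagree, the more probability mass is left over for querying. Once the posterior concentrates on a single model, the leftover mass shrinks to zero and the imitator acts on its own.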

The second-last sentence refers to the bound on what a mesa-optimizer could accomplish. We assume a realizable setting (positive prior weight on the true demonstrator-model). There are none of the usual embedding problems here—the imitator can just be bigger than the demonstrator that it's modeling.

(As a side note, even if the imitator had to model the whole world, it wouldn't be a big problem theoretically. If the walls of the computer don't in fact break during the operation of the agent, then "the actual world" and "the actual world outside the computer conditioned on the walls of the computer not breaking" both have equal claim to being "the true world-model", in the formal sense that is relevant to a Bayesian agent. And the latter formulation doesn't require the agent to fit inside the world that it's modeling.)

Almost no mathematical background is required to follow [Edit: most of] the proofs. [Edit: But there is a bit of jargon. "Measure" means "probability distribution", and a "semimeasure" is a probability distribution that can sum to less than one.] We feel our bounds could be made much tighter, and we'd love help investigating that.
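As a sketch of that jargon over a finite action set (the symbols $\mu$, $\pi$, $a$, and $\mathcal{A}$ are chosen here for illustration and are not necessarily the paper's notation):

$$\sum_{a \in \mathcal{A}} \mu(a) = 1 \quad \text{(measure)}, \qquad \sum_{a \in \mathcal{A}} \pi(a) \le 1 \quad \text{(semimeasure)}.$$

For the imitator described in the abstract, the action probabilities form a semimeasure, and the missing mass $1 - \sum_{a \in \mathcal{A}} \pi(a)$ is the probability with which it queries the demonstrator.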

These slides (pdf here) are fairly self-contained and a quicker read than the paper itself.

Below, $P_{dem}(E)$ and $P_{im}(E)$ refer to the probability of the event $E$ supposing the demonstrator or imitator, respectively, were acting the entire time. The limit below refers to successively more unlikely events $E$; it's not a limit over time. Imagine a sequence of events $E_i$ such that $\lim_{i \to \infty} P_{dem}(E_i) = 0$.

Perhaps I'm just being pedantic, but when you're building mathematical models of things I think it's really important to call attention to what things in the real world those mathematical models are supposed to represent—since that's how you know whether your assumptions are reasonable or not. In this case, there are two ways I could interpret this analysis: either the Bayesian learner is supposed to represent what we want our trained models to be doing, and then we can ask how we might train something that works that way; or the Bayesian learner is supposed to represent how we want our training process to work, and then we can ask how we might build training processes that work that way. I originally took you as saying the first thing, then realized you were actually saying the second thing.

That's a good point. Perhaps I am just saying that I don't think we'll get training processes that are that competent. I do still think there are some ways in which I don't fully buy this picture, though. I think that the point that I'm trying to make is that the Bayesian learner gets its safety guarantees by having a massive hypothesis space and just keeping track of how well each of those hypotheses is doing. Obviously, if you knew of a smaller space that definitely included the desired hypothesis, you could do that instead—but given a fixed-size space that you have to search over (and unless your space is absolutely tiny such that you basically already have what you want), for any given amount of compute, I think it'll be more efficient to run a local search over that space than a global one.

Interesting. I'm not exactly sure what you mean by that. I could imagine “guided 'attentively' by an agent” to mean something like recursive oversight/relaxed adversarial training wherein some overseer is guiding the search process—is that what you mean?