We've written a paper on online imitation learning, and our construction allows us to bound the extent to which mesa-optimizers could accomplish anything. This is not to say it will definitely be easy to eliminate mesa-optimizers in practice, but investigations into how to do so could look here as a starting point. The way to avoid outputting predictions that may have been corrupted by a mesa-optimizer is to ask for help when plausible stochastic models disagree about probabilities.

Here is the abstract:

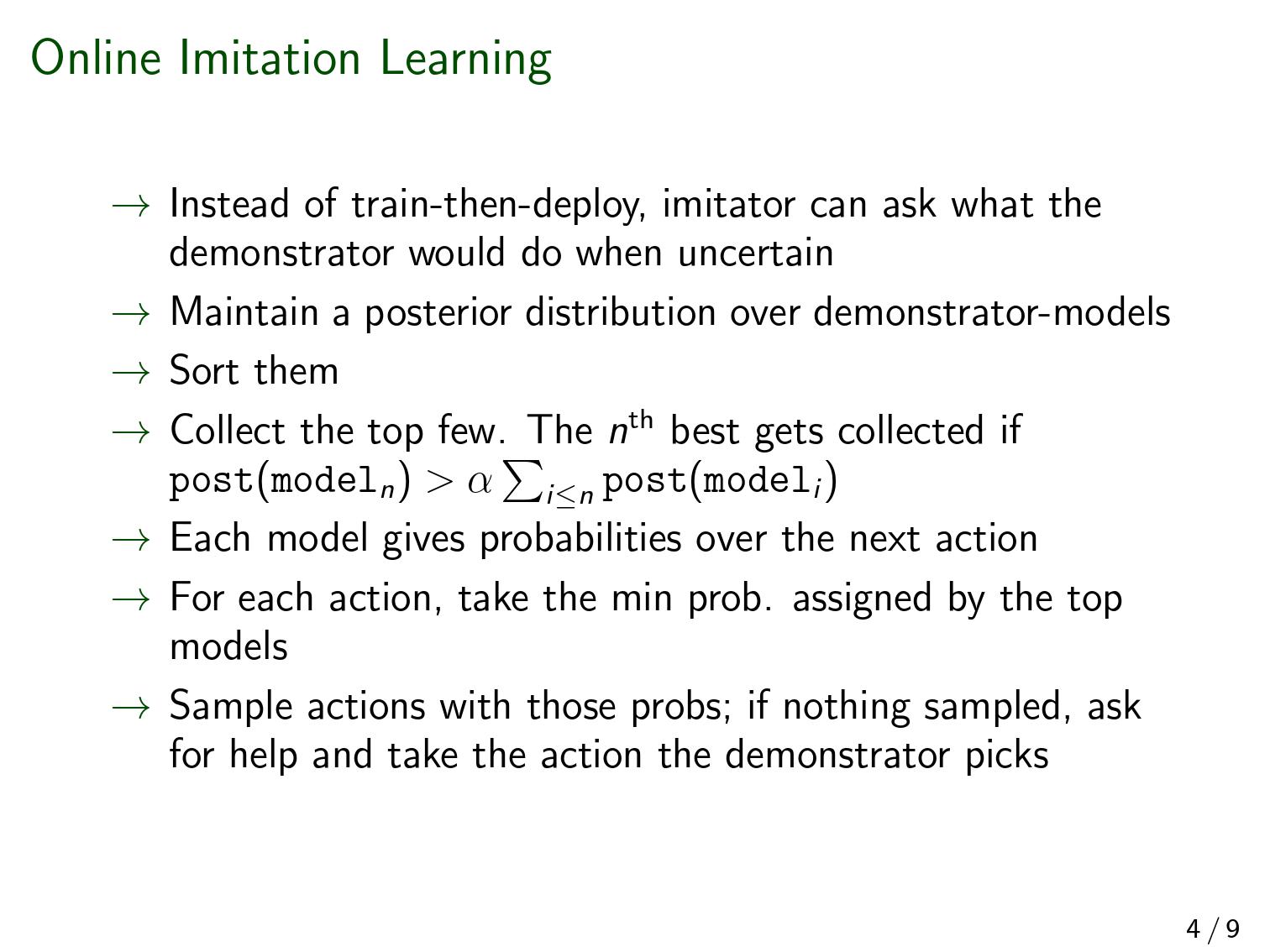

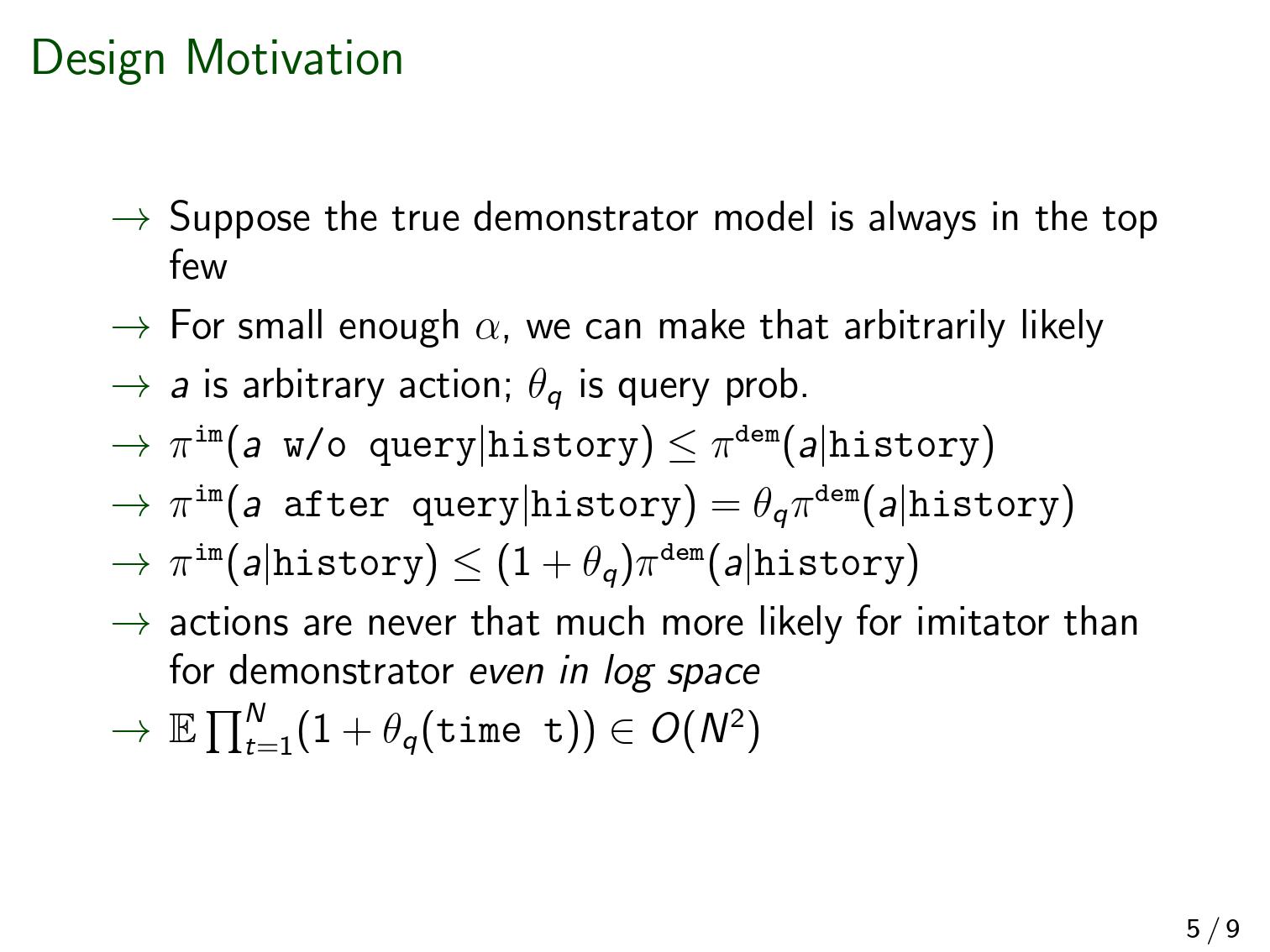

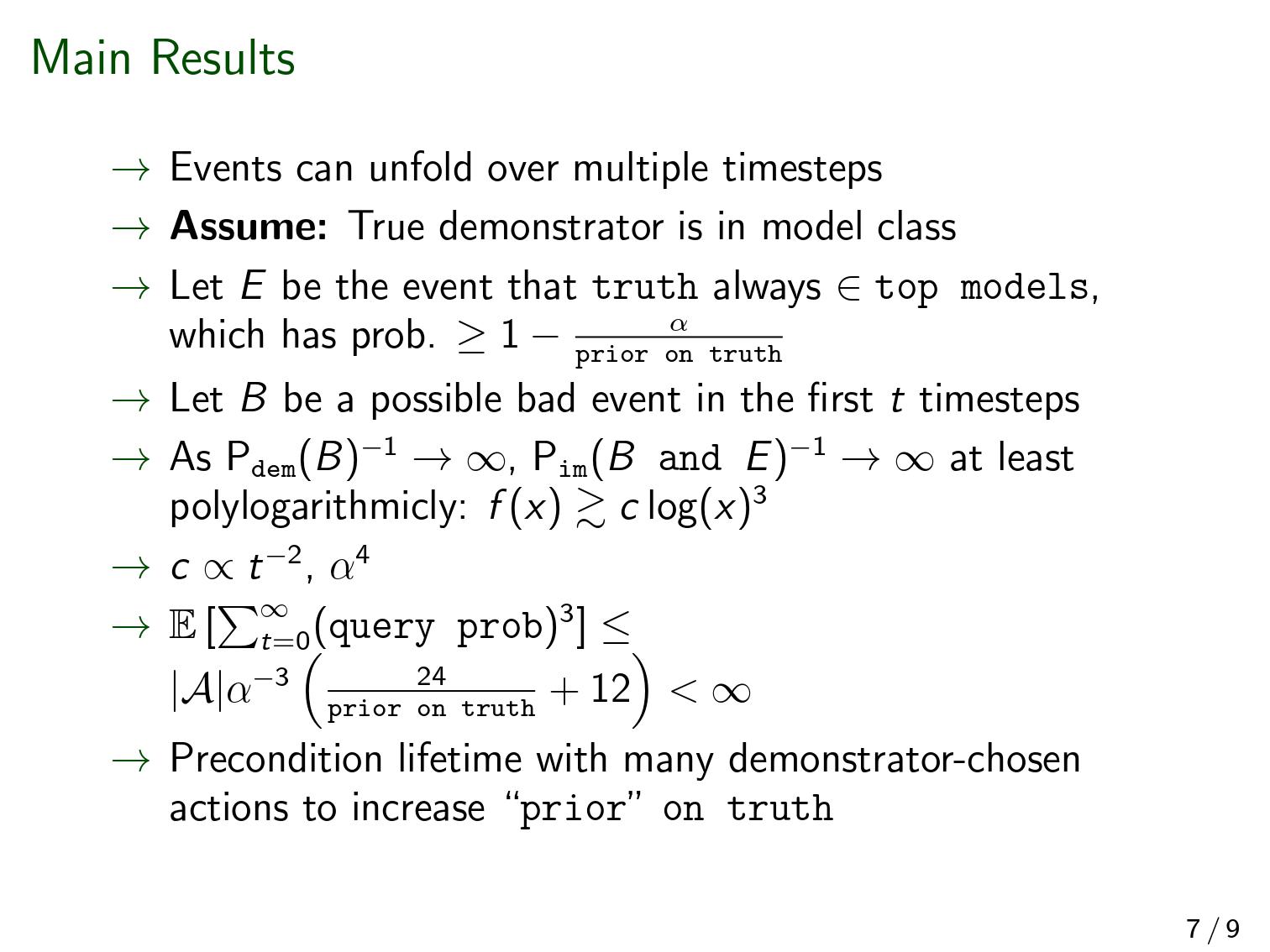

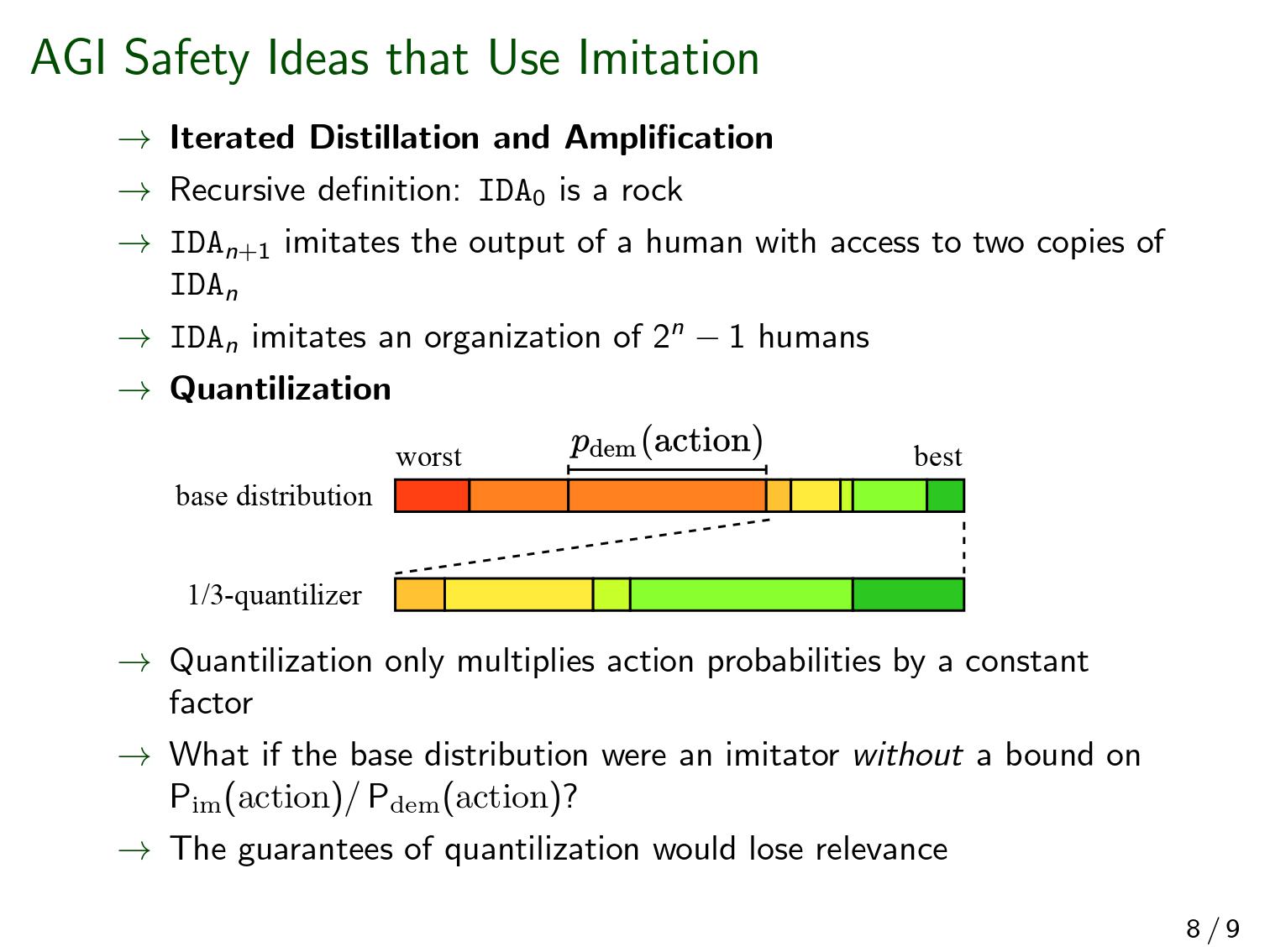

In imitation learning, imitators and demonstrators are policies for picking actions given past interactions with the environment. If we run an imitator, we probably want events to unfold similarly to the way they would have if the demonstrator had been acting the whole time. No existing work provides formal guidance in how this might be accomplished, instead restricting focus to environments that restart, making learning unusually easy, and conveniently limiting the significance of any mistake. We address a fully general setting, in which the (stochastic) environment and demonstrator never reset, not even for training purposes. Our new conservative Bayesian imitation learner underestimates the probabilities of each available action, and queries for more data with the remaining probability. Our main result: if an event would have been unlikely had the demonstrator acted the whole time, that event's likelihood can be bounded above when running the (initially totally ignorant) imitator instead. Meanwhile, queries to the demonstrator rapidly diminish in frequency.

The second-last sentence refers to the bound on what a mesa-optimizer could accomplish. We assume a realizable setting (positive prior weight on the true demonstrator-model). There are none of the usual embedding problems here—the imitator can just be bigger than the demonstrator that it's modeling.
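The conservative imitator described in the abstract can be sketched roughly as follows. This is a minimal illustration, not the paper's exact construction. The rule for which models count as "plausible" (posterior weight at least `alpha` times the largest posterior weight) is an assumption made for the example. The names `plausible_models`, `conservative_policy`, and `act_or_query` are hypothetical.

```python
import random


def plausible_models(posterior, alpha):
    """Indices of models whose posterior weight is at least alpha times the top weight.

    ASSUMPTION: this thresholding rule is illustrative; see the paper for the
    actual definition of the set of models the imitator consults.
    """
    top = max(posterior)
    return [i for i, w in enumerate(posterior) if w >= alpha * top]


def conservative_policy(action_probs, posterior, alpha):
    """Underestimate each action's probability; the leftover mass is the query probability.

    action_probs[i][a] is the probability model i assigns to action a.
    """
    keep = plausible_models(posterior, alpha)
    n_actions = len(action_probs[0])
    # For each action, take the smallest probability any plausible model gives it.
    under = [min(action_probs[i][a] for i in keep) for a in range(n_actions)]
    # Whatever probability is left over is spent on asking the demonstrator.
    query_prob = 1.0 - sum(under)
    return under, query_prob


def act_or_query(under, query_prob, rng=random):
    """Sample an action index from the underestimates, or return None to query."""
    r = rng.random()
    cumulative = 0.0
    for action, p in enumerate(under):
        cumulative += p
        if r < cumulative:
            return action
    return None  # fell into the leftover mass: ask the demonstrator


if __name__ == "__main__":
    # Two plausible models that disagree about a two-action choice.
    probs = [[0.9, 0.1], [0.6, 0.4]]
    under, q = conservative_policy(probs, posterior=[0.5, 0.5], alpha=0.5)
    print(under, q)
```

When the plausible models agree exactly, the minima sum to one and the imitator never queries; the more they disagree, the more probability goes to asking for help.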

(As a side note, even if the imitator had to model the whole world, it wouldn't be a big problem theoretically. If the walls of the computer don't in fact break during the operation of the agent, then "the actual world" and "the actual world outside the computer conditioned on the walls of the computer not breaking" both have equal claim to being "the true world-model", in the formal sense that is relevant to a Bayesian agent. And the latter formulation doesn't require the agent to fit inside the world that it's modeling.)

Almost no mathematical background is required to follow [Edit: most of] the proofs. [Edit: But there is a bit of jargon. "Measure" means "probability distribution", and a "semimeasure" is like a probability distribution, except that it may sum to less than one.] We feel our bounds could be made much tighter, and we'd love help investigating that.

These slides (pdf here) are fairly self-contained and a quicker read than the paper itself.

Below, $P_{\text{dem}}$ and $P_{\text{im}}$ refer to the probability of the event supposing the demonstrator or imitator, respectively, were acting the entire time. The limit below refers to successively more unlikely events $B_n$; it's not a limit over time. Imagine a sequence of events $B_n$ such that $\lim_{n \to \infty} P_{\text{dem}}(B_n) = 0$.

They're not quite symmetrical: midway through, some bad hypotheses will have been ruled out for making erroneous predictions about the demonstrator previously. But your conclusion is still correct. And

It's certainly no better than that, and the bound I prove is worse.

Yeah. Error bounds on full Bayesian prediction are logarithmic in the prior weight on the truth, but error bounds on maximum a posteriori (MAP) prediction are only inverse in the prior weight, and your example above is the one that shows it. If each successive MAP model predicts wrong at each successive timestep, it could take N timesteps to get rid of N models, which is how many might begin with a prior weight exceeding the truth, if the truth has a prior weight of 1/N.
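To make the gap concrete, here is a toy simulation of that worst case. It is a sketch under assumptions of my own choosing: all models are deterministic, the truth always predicts 0, and wrong model `i` predicts 1 only at timestep `i`. The prior gives every wrong model slightly more weight than the truth, with earlier-erring models weighted highest. The function name `simulate` and the specific weights are hypothetical.

```python
import math


def simulate(n_models):
    """Compare MAP errors with the Bayes mixture's log loss in an adversarial toy setup.

    Models 0..n_models-2 are wrong models; model n_models-1 is the truth.
    Returns (map_errors, bayes_log_loss, prior_on_truth).
    """
    # ASSUMPTION: illustrative prior, decreasing in index, so every wrong model
    # starts with more weight than the truth.
    raw = [2 * n_models - i for i in range(n_models)]
    total = sum(raw)
    prior = [w / total for w in raw]
    truth = n_models - 1

    alive = set(range(n_models))  # models not yet falsified
    map_errors = 0
    bayes_log_loss = 0.0
    for t in range(n_models - 1):
        # The true outcome is always 0; only model t predicts 1 at timestep t.
        map_model = max(alive, key=lambda i: prior[i])
        map_prediction = 1 if map_model == t else 0
        map_errors += int(map_prediction != 0)

        # The Bayes mixture's probability of the true outcome is the share of
        # surviving prior mass held by models that predict 0 now.
        mass_alive = sum(prior[i] for i in alive)
        mass_correct = sum(prior[i] for i in alive if i != t)
        bayes_log_loss += -math.log(mass_correct / mass_alive)

        alive.discard(t)  # model t has been falsified
    return map_errors, bayes_log_loss, prior[truth]


if __name__ == "__main__":
    errors, log_loss, w_truth = simulate(100)
    print(errors, log_loss, math.log(1 / w_truth))
```

In this setup MAP errs at every one of the `n_models - 1` timesteps, while the mixture's cumulative log loss telescopes to exactly `log(1 / prior_on_truth)`, which grows only logarithmically in the number of models.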

But this situation seems pretty preposterous to me in the real world. If the agent's first observation is, say, this paragraph, the number of models with prior weight greater than the truth that predicted something else as the first observation will probably be way, way different from one. I'd go so far as to say at least half of the models with prior weight greater than the truth would predict a different observation than this very paragraph. As long as this situation keeps up, we're in a logarithmic regime.

I'm not convinced this logarithmic regime ever ends, but I think the case is more convincing that we at least start there, so let's suppose now that it eventually ends, and after this point the remaining models with posterior weight exceeding the truth are deliberately erring at unique timesteps. What's the posterior on the truth now? This is a phase where all the top models are "capable" of predicting correctly. This shouldn't look like $2^{-10^{14}}$ at all. It will look more like p(correct model)/p(treachery).

And actually, depending on the composition of treacherous models, it could be better. To the extent some constant fraction of them are being treacherous at particular times, the logarithmic regime will continue. There are two reasons why I expect that to hold.

So more precisely, when the logarithmic phase ends, if it ever does, the posterior on the truth will be around: p(correct model)/p(treachery with the strategy of erring at a random moderately-important time rather than a time of extreme import).

If the number of queries required is linear in this (plus logarithmic in the prior), that doesn't seem very bad to me.

Which means I disagree with this:

There is at most a linear cost to this ratio, which I don't think screws us.

I think this is doable with this approach, but I haven't proven it can be done, let alone said anything about a dependence on epsilon. The closest bound I show not only has a constant factor of like 40; it depends on the prior on the truth too. I think (75% confidence) this is a weakness of the proof technique, not a weakness of the algorithm.

I doubt it's any better than that, but I haven't (just now) managed to convince myself that it couldn't be.

I'm a little skeptical we could get away with having epsilon > O(1/N). But I don't quite follow the proposal well enough to say that I think it's infeasible.