We've written a paper on online imitation learning, and our construction allows us to bound the extent to which mesa-optimizers could accomplish anything. This is not to say it will definitely be easy to eliminate mesa-optimizers in practice, but investigations into how to do so could look here as a starting point. The way to avoid outputting predictions that may have been corrupted by a mesa-optimizer is to ask for help when plausible stochastic models disagree about probabilities.

Here is the abstract:

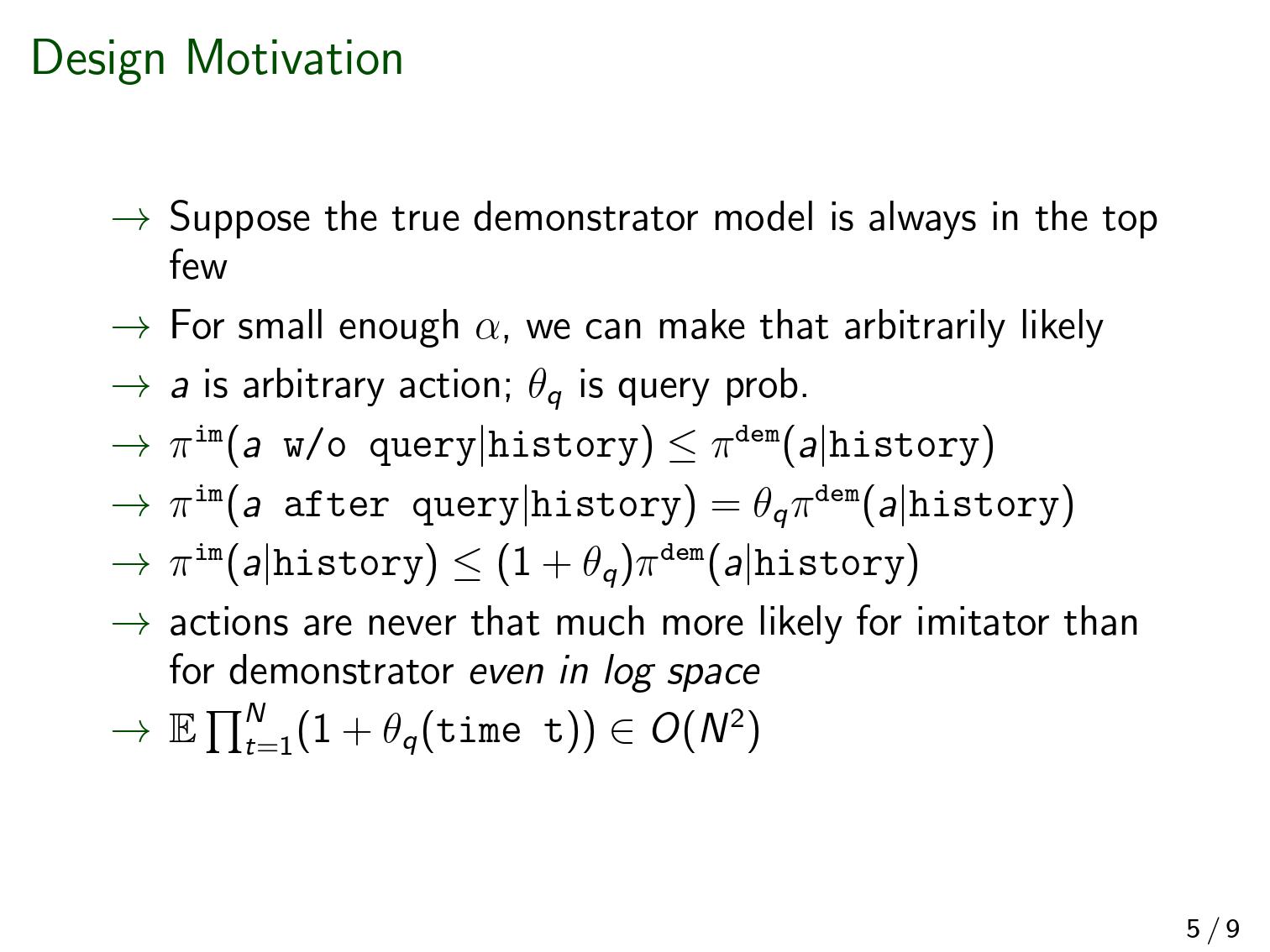

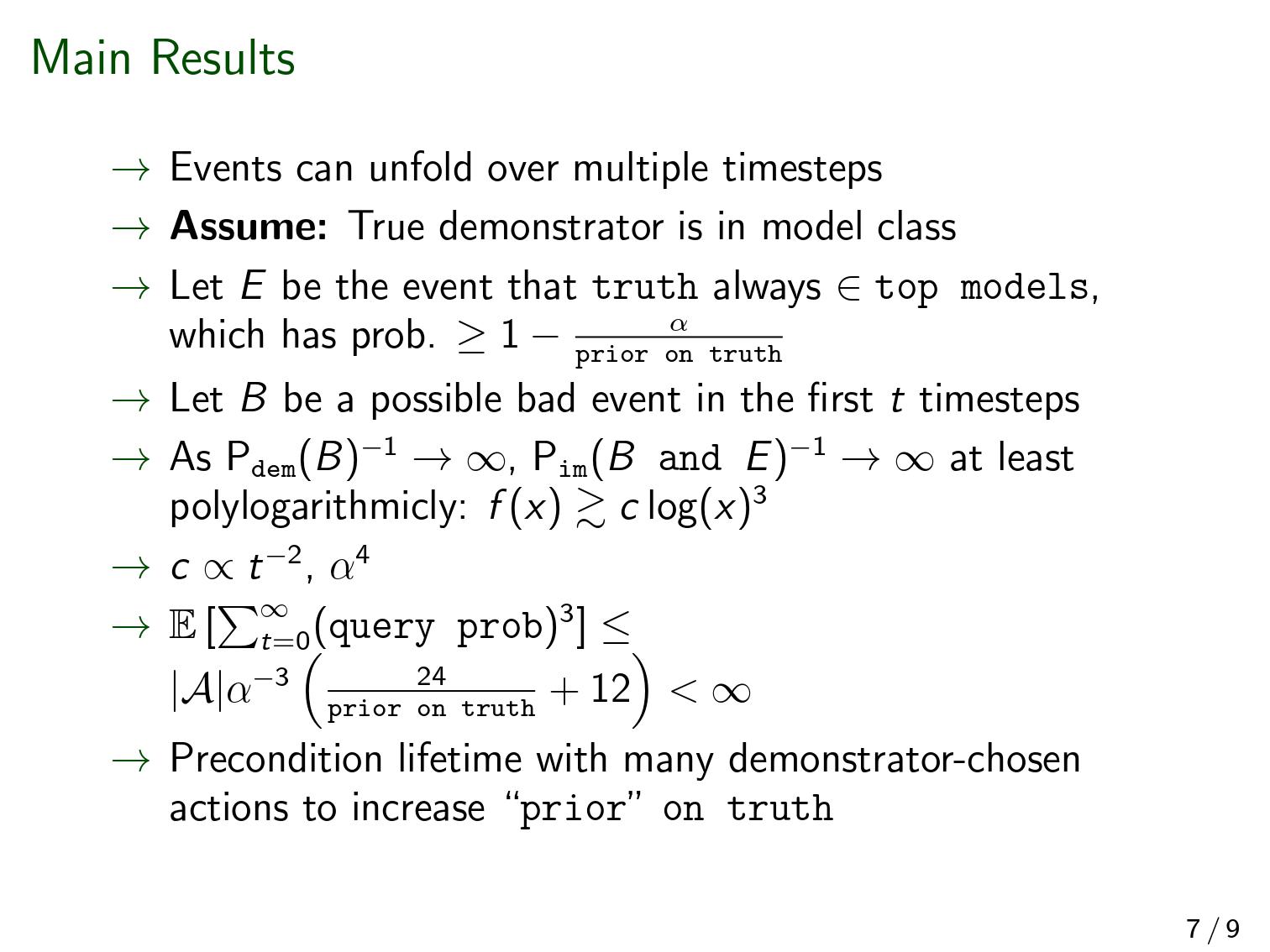

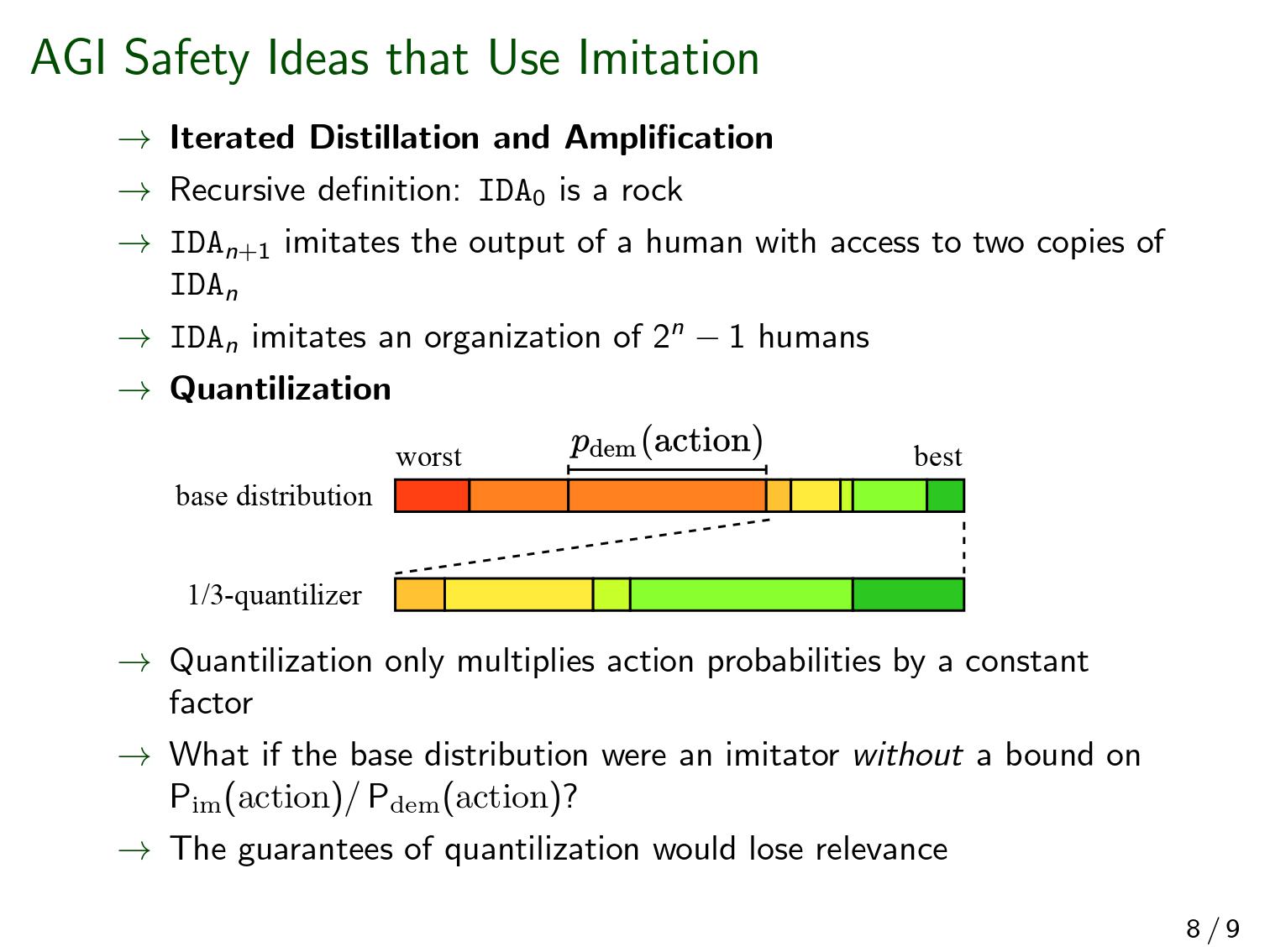

In imitation learning, imitators and demonstrators are policies for picking actions given past interactions with the environment. If we run an imitator, we probably want events to unfold similarly to the way they would have if the demonstrator had been acting the whole time. No existing work provides formal guidance in how this might be accomplished, instead restricting focus to environments that restart, making learning unusually easy, and conveniently limiting the significance of any mistake. We address a fully general setting, in which the (stochastic) environment and demonstrator never reset, not even for training purposes. Our new conservative Bayesian imitation learner underestimates the probabilities of each available action, and queries for more data with the remaining probability. Our main result: if an event would have been unlikely had the demonstrator acted the whole time, that event's likelihood can be bounded above when running the (initially totally ignorant) imitator instead. Meanwhile, queries to the demonstrator rapidly diminish in frequency.

The second-last sentence refers to the bound on what a mesa-optimizer could accomplish. We assume a realizable setting (positive prior weight on the true demonstrator-model). There are none of the usual embedding problems here—the imitator can just be bigger than the demonstrator that it's modeling.

(As a side note, even if the imitator had to model the whole world, it wouldn't be a big problem theoretically. If the walls of the computer don't in fact break during the operation of the agent, then "the actual world" and "the actual world outside the computer conditioned on the walls of the computer not breaking" both have equal claim to being "the true world-model", in the formal sense that is relevant to a Bayesian agent. And the latter formulation doesn't require the agent to fit inside the world that it's modeling.)
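To make the mechanism in the abstract concrete, here is a minimal sketch of one step of a conservative imitator. It is only an illustration under stated assumptions: the function names, the finite list of models, and in particular the top-set rule (keep models whose posterior is at least `alpha` times the largest posterior) are simplifications chosen for this example, not the paper's exact construction.

```python
from typing import Dict, List, Tuple

Action = str
# One demonstrator-model's action probabilities for the current history.
Model = Dict[Action, float]


def top_set(posterior: List[float], alpha: float) -> List[int]:
    """Indices of models with posterior weight >= alpha * (largest weight).

    Assumption: a simplified stand-in for the paper's top-set rule.
    """
    best = max(posterior)
    return [i for i, w in enumerate(posterior) if w >= alpha * best]


def conservative_step(
    models: List[Model], posterior: List[float], alpha: float
) -> Tuple[Dict[Action, float], float]:
    """Return (underestimated action probabilities, query probability)."""
    keep = top_set(posterior, alpha)
    actions = models[0].keys()
    # Underestimate each action: take the smallest probability that any
    # plausible model assigns to it.
    action_probs = {a: min(models[i][a] for i in keep) for a in actions}
    # Whatever probability mass is left over is spent asking for help.
    query_prob = 1.0 - sum(action_probs.values())
    return action_probs, query_prob


if __name__ == "__main__":
    # Two hypothetical models that disagree about the next action.
    models = [{"left": 0.9, "right": 0.1}, {"left": 0.5, "right": 0.5}]
    print(conservative_step(models, posterior=[0.6, 0.4], alpha=0.5))
```

When the plausible models agree, the minima sum to one and the query probability is zero; the more they disagree, the more often the imitator defers to the demonstrator.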

Almost no mathematical background is required to follow [Edit: most of] the proofs. [Edit: But there is a bit of jargon. "Measure" means "probability distribution", and a "semimeasure" is like a probability distribution, except that it may sum to less than one.] We feel our bounds could be made much tighter, and we'd love help investigating that.
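In symbols, the jargon amounts to the following. The notation here ($\nu$ for a distribution over a countable action set $\mathcal{A}$) is assumed for illustration and is not necessarily the paper's:

$$
\text{measure: } \sum_{a \in \mathcal{A}} \nu(a) = 1,
\qquad
\text{semimeasure: } \sum_{a \in \mathcal{A}} \nu(a) \le 1 .
$$

Read this way, an imitator that underestimates every action's probability defines a semimeasure over actions, and the missing mass $1 - \sum_{a \in \mathcal{A}} \nu(a)$ is the probability with which it queries the demonstrator.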

These slides (pdf here) are fairly self-contained and a quicker read than the paper itself.

Below, the two probabilities are those of the event supposing the demonstrator or the imitator, respectively, had been acting the entire time. The limit below refers to successively more unlikely events; it's not a limit over time. Imagine a sequence of events whose probability under the demonstrator goes to zero.

I have seen you mention a number of times in this comment thread that 'this is not a problem because eventually the bad/wrong policies will disappear from the top set'. You have not qualified this statement with 'but we need a very low α like α<1/|Π| to make this work in a safe way', so I remain somewhat uncertain about what your views are on how low α needs to go.

In any case, I'll now try to convince you that if α>1/|Π|, your statement that 'when they're wrong they'll get removed from the posterior' will not always mean what you might want it to mean.

Is the demonstrator policy πd to get themselves killed?

The interesting thing in developing these counterexamples is that they often show that the provable math in the paper gives you less safety than you would have hoped for.

Say that πp∈Π is the policy of producing paperclips in the manner demonstrated by the human demonstrator. Now, take my construction in the counterexample where α>1/|Π| and where at time step t, we have the likely case that πp∉Παh<t. In the world I constructed for the counterexample, the remaining top policies Παh<t now perform a synchronized treacherous turn where they kill the demonstrator.

In time step t+1 and later, the policies Παh<t+1 diverge a lot in what actions they will take, so the agent queries the demonstrator, who is now dead. The query will return the null action. This eventually removes all 'wrong' policies from Παh<t+1+i, where 'wrong' means that they do not take the null action at all future time steps.
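The removal step described above is ordinary Bayesian conditioning on the answers to queries. A minimal sketch follows, assuming a finite policy set; the function name and the numbers are hypothetical and chosen only to illustrate how policies that do not predict the null action lose posterior weight.

```python
from typing import List


def update_posterior(posterior: List[float], likelihoods: List[float]) -> List[float]:
    """Bayes update: reweight each policy by the probability it assigned
    to the action the demonstrator query actually returned."""
    unnormalized = [w * l for w, l in zip(posterior, likelihoods)]
    total = sum(unnormalized)
    if total == 0.0:
        raise ValueError("no policy assigned positive probability to the observation")
    return [u / total for u in unnormalized]


if __name__ == "__main__":
    # Three hypothetical policies; only the last one predicts the null action.
    posterior = [1 / 3, 1 / 3, 1 / 3]
    null_action_likelihoods = [0.01, 0.01, 0.99]
    for _ in range(3):  # three consecutive queries, each answered with the null action
        posterior = update_posterior(posterior, null_action_likelihoods)
    print(posterior)
```

Each query answered with the null action shifts weight toward the policy that predicts null actions, which is the sense in which the 'wrong' policies get removed in this scenario.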

The silver lining is perhaps that at least the agent will eventually stop and perform only null actions after it has killed the demonstrator.

Now, the paper proves that the behavior of the agent policy πiα will approximate that of the true demonstrator policy πd more and more closely as time progresses. We therefore have to conclude that in the counterexample world, the true demonstrator policy πd had nothing to do with producing paperclips; this was a wrong guess all along. The right demonstrator policy πd is one where the demonstrator always intended to get themselves killed.

This would be a somewhat unusual solution to the inner alignment problem.

The math in the paper has you working in a fixed-policy setting where the demonstrator policy πd is immutable/time-invariant. The snag is that this does not imply that the policy πd defines a behavioral trajectory that is independent of the internals of the agent construction. If the agent is constructed in a particular way and operates in a certain environment, it will force πd into a self-fulfilling trajectory in which the agent kills the demonstrator.

Side note: if anybody is looking for alternative math that allows one to study and manage the interplay between a mutable time-dependent demonstrator policy and the agent policy, causal models seem to be the way to go. See for example here where this is explored in a reward learning setting.