Disclaimer: I haven't run this by Nate or Eliezer; if they think it mischaracterizes them, whoops.

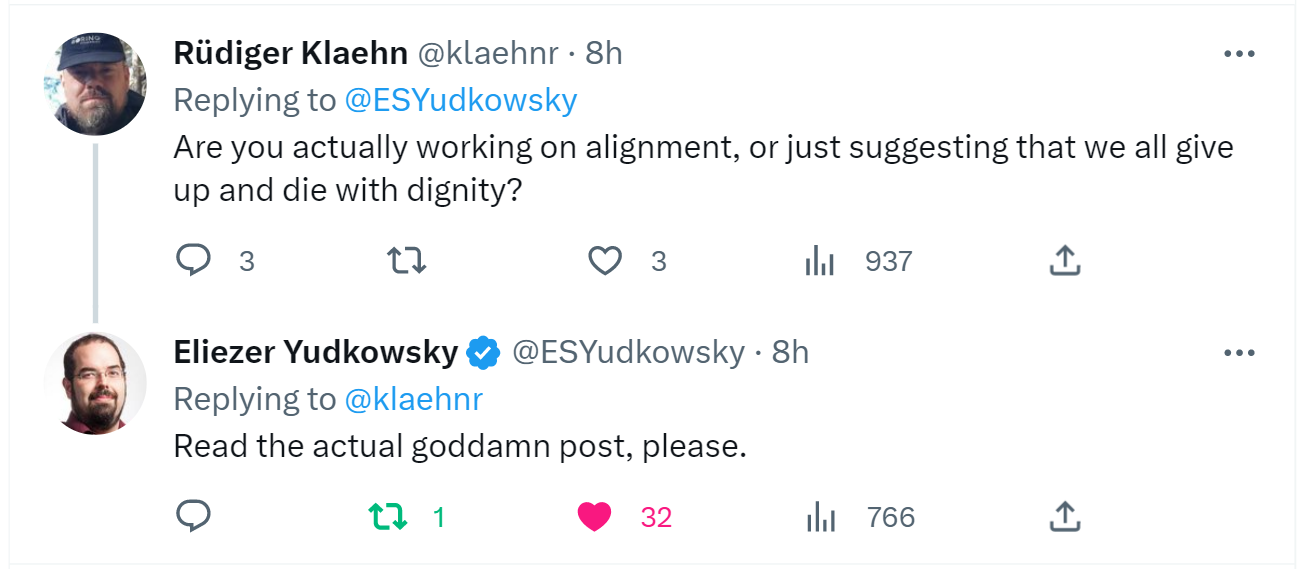

I have seen many people assume that MIRI (or Eliezer) ((or Nate?)) has "given up" and has been telling other people to give up.

On the one hand: if you're only half paying attention and you skimmed a post called "Death With Dignity", I think this is a kinda reasonable impression to have ended up with. I largely blame Eliezer for choosing a phrase that returns "support for assisted suicide" when you google it.

But I think if you read the post in detail, it's not at all an accurate summary of what happened, and I've heard this from people who I feel should have read the post closely enough to know better.

Eliezer and "Death With Dignity"

Q1: Does 'dying with dignity' in this context mean accepting the certainty of your death, and not childishly regretting that or trying to fight a hopeless battle?

Don't be ridiculous. How would that increase the log odds of Earth's survival?

The whole point of the post is to be a psychological framework for actually doing useful work that increases humanity's long log odds of survival. "Giving Up" clearly doesn't do that.

[left in typo that was Too Real]

[edited to add] Eliezer does go on to say:

That said, I fought hardest while it looked like we were in the more sloped region of the logistic success curve, when our survival probability seemed more around the 50% range; I borrowed against my future to do that, and burned myself out to some degree. That was a deliberate choice, which I don't regret now; it was worth trying, I would not have wanted to die having not tried, I would not have wanted Earth to die without anyone having tried. But yeah, I am taking some time partways off, and trying a little less hard, now. I've earned a lot of dignity already; and if the world is ending anyways and I can't stop it, I can afford to be a little kind to myself about that.

I agree this means he's "trying less heroically hard." Someone in the comments says "this seems like he's giving up a little". And... I dunno, maybe. I think I might specifically say "giving up somewhat at trying heroically hard". But I think if you round "give up a little" to "give up" you're... saying an obvious falsehood? (I want to say "you're lying", but as previously discussed, sigh, no, it's not lying; but I think your cognitive process is fucked up in a way that should make you sit-bolt-upright-in-alarm.)

I think it is a toxic worldview to think that someone should be trying maximally hard, to a degree that burns their future capacity, and that shifting from that to "work at a sustainable rate while recovering from burnout for a bit" counts as "giving up." No. Jesus Christ no. Fuck that worldview.

(I do notice that what I'm upset about here is the connotation of "giving up", and I'd be less upset if I thought people were simply saying "Eliezer/MIRI has stopped taking some actions" with no further implications. But I think people who say this are bringing in unhelpful, morally loaded implications, and maybe equivocating between the plain meaning and the loaded one.)

Nate and "Don't go through the motions of doing stuff you don't really believe in."

I think Nate says a lot of sentences that sound closer to "give up" on first read/listen. I haven't run this post by him, and I'm least confident in my claim that he wouldn't self-describe as "having given up". But I'd still bet against it. (Meanwhile, I do think it's possible for someone to have given up without realizing it, but I don't think that's what happened here.)

From his recent post "Focus on the places where you feel shocked everyone's dropping the ball":

Look for places where everyone's fretting about a problem that some part of you thinks it could obviously just solve.

Look around for places where something seems incompetently run, or hopelessly inept, and where some part of you thinks you can do better.

Then do it better.

[...]

Contrast this with, say, reading a bunch of people's research proposals and explicitly weighing the pros and cons of each approach so that you can work on whichever seems most justified. This has more of a flavor of taking a reasonable-sounding approach based on an argument that sounds vaguely good on paper, and less of a flavor of putting out an obvious fire that for some reason nobody else is reacting to.

I dunno, maybe activities of the vaguely-good-on-paper character will prove useful as well? But I mostly expect the good stuff to come from people working on stuff where a part of them sees some way that everybody else is just totally dropping the ball.

In the version of this mental motion I’m proposing here, you keep your eye out for ways that everyone's being totally inept and incompetent, ways that maybe you could just do the job correctly if you reached in there and mucked around yourself.

That's where I predict the good stuff will come from.

And if you don't see any such ways?

Then don't sweat it. Maybe you just can't see something that will help right now. There don't have to be ways you can help in a sizable way right now.

I don't see ways to really help in a sizable way right now. I'm keeping my eyes open, and I'm churning through a giant backlog of things that might help a nonzero amount—but I think it's important not to confuse this with taking meaningful bites out of a core problem the world is facing, and I won’t pretend to be doing the latter when I don’t see how to.

Like, keep your eye out. For sure, keep your eye out. But if nothing in the field is calling to you, and you have no part of you that says you could totally do better if you deconfused yourself some more and then handled things yourself, then it's totally respectable to do something else with your hours.

I do think this looks fairly close to "having given up." The key difference is that he's still keeping his eyes open for ways to actually help in a serious way.

The point of "Death With Dignity", and of MIRI's overall vibe, as I understand it, is:

Don't keep doing things out of a vague belief that you're supposed to do something.

Don't delude yourself about whether you're doing something useful.

Be able to think clearly about what actually needs doing, so that you can notice things that are actually worth doing.

(Note: I'm not sure I agree with Nate here, strategically, but that's a separate question from whether he's given up or not.)

((I think reasonable people can disagree about whether the thing Nate is saying amounts to "give up", for some vague verbal-cultural clustering of what "give up" means. But I don't think this is at all a reasonable summary of anything I've seen Eliezer say, and I think most people were responding to "Death With Dignity" rather than random 1-1 Nate conversations or his most recent post.))

Specifically, the ones *working on or keeping up with* Go could *see it coming* enough to *make solid research bets* about what would do it. If they had read up on Go, their predictive distribution over next things to try contained the thing that would work well enough to be worth scaling seriously if you wanted to build the thing that worked. What I did, as someone not able to implement it myself at the time, was read enough of the Go research and the general pattern of neural network successes to have a solid hunch about what it looks like to approximate a planning trajectory with a neural network. It looked very much like the people actually doing the work at Facebook were on the same track. What was surprising was mostly that Google funded scaling it so early, which relied on them having found an algorithm that scaled well a bit sooner than I expected. Also, I lost a bet about how strong it would be: after updating on the matches from when it was initially announced, I thought it would win some but lose overall; instead, it won outright.

I have hardly predicted all of ML, but I've predicted the overall manifold of which clusters of techniques would work well and have high success at what scales and what times. Until you challenged me to do it on Manifold, I'd been intentionally keeping off the record about this, except when trying to explain my intuitive/pretheoretic understanding of the general manifold of ML hunchspace, which I continue to claim is not that hard to do if you keep up with abstracts and let yourself assume it's possible to form a reasonable manifold of which abstracts refine the possibility manifold. Sorry to make strong unfalsifiable claims; I'm used to it. But I think you'll hear something similar, if phrased a bit less dubiously, from deep learning researchers experienced at picking which papers to work on in the pretheoretic regime. Approximately: it's obvious to everyone who's paying attention to a particular subset what's next in that subset, but it's not necessarily obvious how much compute it'll take, whether you'll be able to find hyperparameters that work, whether your version of the idea is subtly corrupt, or whether you'll be interrupted in the middle of thinking about it because your boss wants a new vision model for ad ranking.

Sure, I've been the most research-trajectory-optimistic person in any deep learning room for a long time, and I often wonder if that's because I'm arrogant enough to predict other people's research instead of getting my year-scale optimism burnt out by the slog of hyperparameter-searching my own ideas, so I've been more calibrated about what other people's clusters can do (and even less calibrated about my own). As a capabilities researcher, you keep getting scooped by someone else who has a bigger hyperparam search cluster! As a capabilities researcher, you keep being right about the algorithms' overall structure, but now you can't prove you knew it ahead of time in any detail! More effective capabilities researchers have this problem less; I'm certainly not one. Also, you can easily exceed my map quality by reading enough to train your intuitions about the manifold of what works: just drastically decrease your confidence in *everything* you've known since 2011 about what's hard and easy on tiny computers, and treat it as a palette of inspiration for what you can build now that computers are big. Roleplay as a 2015 capabilities researcher and try to use your map of the manifold of what algorithms work to predict, for each abstract, whether the paper lives up to its claims. Just browse arXiv; don't look at the most popular papers, since those have been filtered by what actually worked well.

Btw, call me gta or tgta or something. I'm not gears; I'm a pre-theoretic map of, or reference to, them or something. ;)

Also, I should mention: Jessicata, Jack Gallagher, and poossssibly Tsvi BT can tell you some of what I told them circa 2016-2017 about neural networks' trajectory. I don't know if they ever believed me until each thing was confirmed, and I don't know which things they'd remember or exactly which things were confirmed as stated, but I definitely remember arguing in person in the MIRI office on Addison, in the backest back room with beanbags and a whiteboard and, if I remember correctly, a dripping ceiling (though that's plausibly just memory decay confusing references), that neural networks are a form of program inference that works with arbitrarily complicated nonlinear programs given an acceptable network interference pattern prior, just a shitty one that needs a big network to have enough hypotheses to get it done (stated with the benefit of hindsight; it was a much lower-quality claim at the time). I feel like that's been pretty thoroughly demonstrated now, though pseudo-second-order gradient descent (Adam and friends) still has weird biases that make its results less reliable than the proper version of itself. It's so damn efficient, though, that you'd need a huge real-wattage power benefit to use something that was less informationally efficient relative to its vm.