I’ve written a draft report evaluating a version of the overall case for existential risk from misaligned AI, and taking an initial stab at quantifying the risk from this version of the threat. I’ve made the draft viewable as a public Google Doc here (Edit: arXiv version here, video presentation here, human-narrated audio version here). Feedback would be welcome.

This work is part of Open Philanthropy’s “Worldview Investigations” project. However, the draft reflects my personal (rough, unstable) views, not the “institutional views” of Open Philanthropy.

A person does not become less powerful (in the intuitive sense) right after paying college tuition (or right after getting a vaccine) due to losing the ability to choose whether to do so. [EDIT: generally, assuming they make their choices wisely.]

I think POWER may match the intuitive concept when defined over certain (perhaps very complicated) reward distributions, rather than over reward distributions that are IID-over-states (which is what the paper deals with).

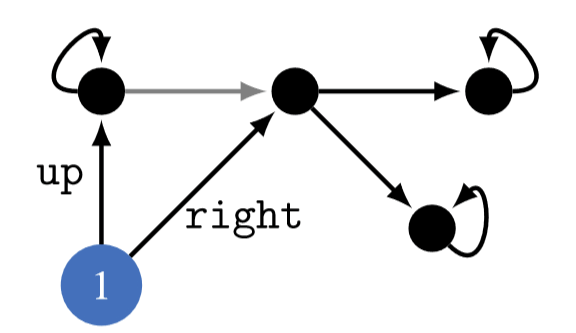

Actually, in a complicated MDP environment (analogous to the real world) in which every sequence of actions results in a different state (i.e. the graph of states is a tree with a constant branching factor), all the states that the agent can reach at a given time step have equal POWER when POWER is defined over an IID-over-states reward distribution.
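To make the tree claim concrete, here is a rough Monte Carlo sketch. It is a toy under several assumptions of mine, not anything taken from the paper's code: the tree is cut off at a finite depth with absorbing leaves (the claim above is about a tree where every action sequence leads to a new state), rewards are drawn IID uniform on [0, 1] per state, and POWER is computed as (1 - γ)/γ · E[V*(s) - R(s)], which is the normalization I understand the paper to use. The names `estimate_power`, `depth_of`, and `group_by_depth` are hypothetical helpers.

```python
import random


def depth_of(state, branching):
    """Depth of a state in a complete tree stored in heap order (root = 0)."""
    d = 0
    while state > 0:
        state = (state - 1) // branching
        d += 1
    return d


def estimate_power(branching=2, depth=3, gamma=0.9, n_samples=5000, seed=0):
    """Monte Carlo estimate of POWER for every state of a complete tree MDP.

    Assumptions (mine): branching >= 2, leaves are absorbing, and rewards
    are IID uniform on [0, 1] over states.
    """
    rng = random.Random(seed)
    n_states = (branching ** (depth + 1) - 1) // (branching - 1)
    first_leaf = (branching ** depth - 1) // (branching - 1)
    totals = [0.0] * n_states
    for _ in range(n_samples):
        reward = [rng.random() for _ in range(n_states)]
        value = [0.0] * n_states
        # Children have larger indices than parents, so sweep backwards.
        for s in range(n_states - 1, -1, -1):
            if s >= first_leaf:
                # Absorbing leaf: collect the same reward forever.
                value[s] = reward[s] / (1 - gamma)
            else:
                kids = range(branching * s + 1, branching * s + branching + 1)
                value[s] = reward[s] + gamma * max(value[k] for k in kids)
            totals[s] += (1 - gamma) / gamma * (value[s] - reward[s])
    return [t / n_samples for t in totals]


def group_by_depth(estimates, branching):
    """Map each depth to the list of POWER estimates of states at that depth."""
    groups = {}
    for s, p in enumerate(estimates):
        groups.setdefault(depth_of(s, branching), []).append(p)
    return groups


if __name__ == "__main__":
    estimates = estimate_power()
    for d, vals in sorted(group_by_depth(estimates, 2).items()):
        print(d, [round(v, 3) for v in vals])
```

Within each depth the estimates should agree up to sampling noise, because every state at a given depth roots an identical subtree and the rewards are exchangeable across states. So, in this toy at least, POWER under an IID-over-states distribution cannot distinguish between states reachable at the same time step.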