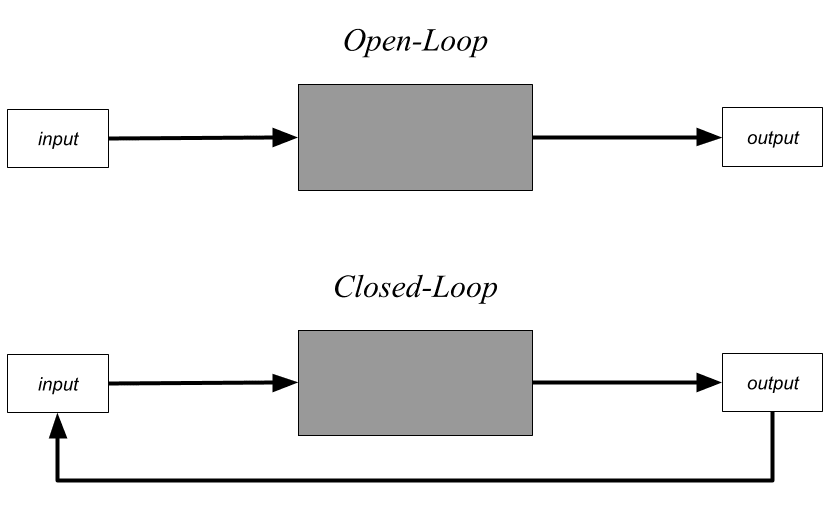

In control theory, an open-loop (or non-feedback) system is one where inputs are independent of outputs. A closed-loop (or feedback) system is one where outputs are fed back into the system as inputs.

In theory, open-loop systems exist. In reality, no system is truly open-loop because systems are embedded in the physical world where isolation of inputs from outputs cannot be guaranteed. Yet in practice we can build systems that are effectively open-loop by making them ignore weak and unexpected input signals.

Open-loop systems execute plans, but they definitionally can't change their plans based on the results of their actions. An open-loop system can be designed or trained to be good at achieving a goal, but it can't actually do any optimization itself. This ensures that some other system, like a human, must be in the loop to make it better at achieving its goals.

A closed-loop system has the potential to self-optimize because it can observe how effective its actions are and change its behavior based on those observations. For example, an open-loop paperclip-making machine can't make itself better at making paperclips even if it notices it's not producing as many paperclips as possible. A closed-loop paperclip-making machine can, assuming it's designed with circuits that allow it to respond to the feedback in a useful way.
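To make the paperclip example concrete, here is a minimal Python sketch. The throughput curve `paperclips_per_hour` and the hill-climbing rule are hypothetical assumptions chosen only for illustration. The open-loop machine executes a fixed plan; the closed-loop machine observes its output each hour and adjusts.

```python
# Hypothetical plant: paperclips per hour as a function of machine speed.
# Output peaks at speed 10; beyond that, jams reduce throughput.
def paperclips_per_hour(speed: float) -> float:
    return 100 * speed - 5 * speed ** 2


def run_open_loop(speed: float, hours: int) -> list[float]:
    """Execute a fixed plan: the speed never changes, whatever the output."""
    return [paperclips_per_hour(speed) for _ in range(hours)]


def run_closed_loop(speed: float, hours: int,
                    step: float = 1.0) -> tuple[float, list[float]]:
    """Observe output each hour and adjust speed (simple hill climbing)."""
    best = paperclips_per_hour(speed)
    history = [best]  # output at the speed the machine has settled on
    for _ in range(hours - 1):
        candidate = speed + step
        observed = paperclips_per_hour(candidate)  # feedback from the world
        if observed > best:
            speed, best = candidate, observed  # keep the improvement
        else:
            step = -step / 2  # reverse direction with a smaller step
        history.append(best)
    return speed, history
```

Starting at speed 2, the open-loop machine produces 180 paperclips every hour, while the closed-loop machine climbs to speed 10 and 500 paperclips per hour within about ten hours. Nothing about the hill-climbing rule is special; any rule that acts on observed results would make the same point.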

AIs are control systems, and thus can be either open- or closed-loop. I posit that open-loop AIs are less likely to pose an existential threat than closed-loop AIs. Why? Because open-loop AIs require someone to make them better, and that creates an opportunity for a human to apply judgement based on what they care about. For comparison, a nuclear dead hand device is potentially much more dangerous than a nuclear response system where a human must make the final decision to launch.

This suggests a simple policy to reduce existential risks from AI: restrict the creation of closed-loop AI. That is, restrict the right to produce AI that can modify its behavior (e.g. self-improve) without going through a training process with a human in the loop.

There are several obvious problems with this proposal:

- No system is truly open-loop.

- A closed-loop system can easily be created by combining 2 or more open-loop systems into a single system.

- Systems may look like they are open-loop at one level of abstraction but really be closed-loop at another (e.g. an LLM that doesn't modify its model, but does use memory/context to modify its behavior).

- Closed-loop AIs can easily masquerade as open-loop AIs until they've already optimized towards their target enough to be uncontrollable.

- Open-loop AIs are still going to be improved. They're part of closed-loop systems with a human in the loop, and can still become dangerous maximizers.
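The second objection, that wiring open-loop systems together yields a closed-loop one, can be illustrated with a minimal Python sketch. Both components are hypothetical and fixed: `proposer` is a stateless rule and `evaluator` a stateless scoring function, so neither adapts on its own. Connecting the evaluator's output to the proposer's input, however, produces a loop that steers toward a target (42 here, chosen arbitrarily) without any change to either component.

```python
def proposer(guess: float, error: float) -> float:
    """Open-loop component: a fixed, stateless rule from inputs to an output."""
    return guess - 0.5 * error


def evaluator(guess: float, target: float = 42.0) -> float:
    """Open-loop component: a fixed function measuring how far off a guess is."""
    return guess - target


def wire_in_loop(guess: float, steps: int) -> float:
    """Feed the evaluator's output back into the proposer's input."""
    for _ in range(steps):
        guess = proposer(guess, evaluator(guess))
    return guess
```

Called alone with no error signal, `proposer(0.0, 0.0)` just returns `0.0`; inside the loop, each step halves the remaining error, so `wire_in_loop(0.0, 60)` lands on 42 to within floating-point precision.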

Despite these issues, I still think that, if I were designing a policy to regulate the development of AI, I would include something to place limits on closed-loop AI. A likely form would be a moratorium on autonomous systems that don't include a human in the loop, and especially a moratorium on AIs that are used to either improve themselves or train other AIs. I don't expect such a moratorium to eliminate existential risks from AI, but I do think it could meaningfully reduce the risk of runaway scenarios where humans get cut out before we have a chance to apply our judgement to prevent undesirable outcomes. If I had to put a number on it, such a moratorium perhaps makes us 20% safer.

Author's note: None of this is especially original. I've been saying some version of what's in this post to people for 10 years, but I realized I've never written it down. Most similar arguments I've seen don't use the generic language of control theory and instead are expressed in terms of specific implementations like online vs. offline learning or in terms of recursive self-improvement, and I think it's worth writing down the general argument without regard to the specifics of how any particular AI works.

I'd like to propose a slight modification to the definition of closed-loop offered here, not to be pedantic but to better align the definition with the risks described.

A closed-loop system generally incorporates inputs; an arbitrary function that translates inputs to outputs (like a model or agent); the outputs themselves; and some evaluation of the outputs' efficacy against defined objectives. This evaluation might be called a loss function, cost function, utility function, reward function, or objective function; let's just call it the evaluation.

The defining characteristic of a closed-loop system is that this evaluation, not just the output of the function, is fed back into the input channel.

An LLM whose outputs are fed back into its context window as input is merely an autoregressive system, not necessarily a closed-loop system. In chatbot LLMs and similar systems, there isn't necessarily any evaluation of the outputs' efficacy fed back into the context window to steer the system's behavior toward a defined objective.
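This distinction can be sketched as two loops in Python. `toy_model` is a hypothetical stand-in for an LLM and `evaluate` a hypothetical inference-time objective; neither reflects how any real chatbot works. In the autoregressive loop only the model's output goes back into the context. In the closed-loop version the evaluation is appended as well, and only then does the model's behavior move toward the objective.

```python
from typing import Callable

Model = Callable[[list[str]], str]


def toy_model(context: list[str]) -> str:
    """Hypothetical stand-in for an LLM: emits an integer guess as text."""
    guesses = [int(c) for c in context if c.lstrip("-").isdigit()]
    evals = [c for c in context if c.startswith("EVAL:")]
    guess = guesses[-1] if guesses else 0
    if evals and evals[-1] == "EVAL: too low":
        guess += 1
    elif evals and evals[-1] == "EVAL: too high":
        guess -= 1
    return str(guess)


def evaluate(output: str, target: int = 3) -> str:
    """Hypothetical inference-time objective: compare an output with a target."""
    value = int(output)
    if value < target:
        return "too low"
    if value > target:
        return "too high"
    return "ok"


def autoregressive(model: Model, prompt: list[str], steps: int) -> list[str]:
    """Only the model's own output is fed back into the context."""
    context = list(prompt)
    for _ in range(steps):
        context.append(model(context))
    return context


def closed_loop(model: Model, objective: Callable[[str], str],
                prompt: list[str], steps: int) -> list[str]:
    """The output AND its evaluation against the objective are fed back."""
    context = list(prompt)
    for _ in range(steps):
        output = model(context)
        context.append(output)
        context.append(f"EVAL: {objective(output)}")
    return context
```

Starting from the prompt `["0"]` with a target of 3, the autoregressive loop just repeats `"0"`, while the closed-loop version climbs to `"3"` and stays there.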

For a closed-loop AI to modify its behavior without a human-in-the-loop training process, its model/function will need to operate directly on the evaluation of its prior performance, and it will require an inference-time objective function of some sort to guide this evaluation.

A classic example of closed-loop AI is the 'system 2' functionality that LeCun describes in his Autonomous Machine Intelligence paper (effectively Model Predictive Control):

https://openreview.net/pdf?id=BZ5a1r-kVsf
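The receding-horizon idea behind Model Predictive Control can be sketched in a few lines of Python. This is a generic, brute-force discrete MPC loop under assumed toy dynamics (`world_model`, `environment_step`, the quadratic `cost`, and the shock at step 6 are all hypothetical), not a reconstruction of LeCun's architecture. At each step the controller plans against its world model, executes only the first action, observes the actual resulting state, and replans, so the evaluation of outcomes keeps flowing back into its decisions.

```python
from itertools import product

ACTIONS = (-1, 0, 1)  # hypothetical discrete action set


def world_model(state: int, action: int) -> int:
    """The controller's (hypothetical) predictive model of the dynamics."""
    return state + action


def environment_step(state: int, action: int, t: int) -> int:
    """The 'real world': matches the model except for an unmodeled shock at t == 6."""
    shock = -3 if t == 6 else 0
    return state + action + shock


def cost(state: int, target: int) -> int:
    """Inference-time objective: squared distance from the target."""
    return (state - target) ** 2


def plan(state: int, target: int, horizon: int) -> tuple[int, ...]:
    """Search every action sequence over the horizon using the world model."""
    best_seq: tuple[int, ...] = ()
    best_cost = None
    for seq in product(ACTIONS, repeat=horizon):
        s, total = state, 0
        for a in seq:
            s = world_model(s, a)
            total += cost(s, target)
        if best_cost is None or total < best_cost:
            best_seq, best_cost = seq, total
    return best_seq


def mpc(state: int, target: int, steps: int, horizon: int = 3) -> list[int]:
    """Plan, execute only the first action, observe the real state, replan."""
    trajectory = [state]
    for t in range(steps):
        action = plan(state, target, horizon)[0]
        state = environment_step(state, action, t)  # observed outcome fed back
        trajectory.append(state)
    return trajectory
```

With `mpc(0, 5, 11)`, the state climbs from 0 to 5, is knocked back to 2 by the unmodeled shock, and climbs back to 5. An open-loop controller that executed a precomputed plan would not recover, because it never looks at the state it actually reached.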