0. But first, some things I do like, that are appropriately emphasized in the FEP-adjacent literature

- I like the idea that in humans, the cortex (and the cortex specifically, in conjunction with the thalamus, but definitely not the whole brain IMO) has a generative model that’s making explicit predictions about upcoming sensory inputs, and is updating that generative model on the prediction errors. For example, as I see the ball falling towards the ground, I’m expecting it to bounce; if it doesn’t bounce, then the next time I see it falling, I’ll expect it to not bounce. This idea is called “self-supervised learning” in ML. AFAICT this idea is uncontroversial in neuroscience, and is widely endorsed even by people very far from the FEP-sphere like Jeff Hawkins and Randall O’Reilly and Yann LeCun. Well at any rate, I for one think it’s true.

- I like the (related) idea that the human cortex interprets sensory inputs by matching them to a corresponding generative model, in a way that’s at least loosely analogous to Bayesian probabilistic inference. For example, in the neon color spreading optical illusion below, the thing you “see” is a generative model that includes a blue-tinted solid circle, even though that circle is not directly present in the visual stimulus. (The background is in fact uniformly white.)

- I like the (related) idea that my own actions are part of this generative model. For example, if I believe I am about to stand up, then I predict that my head is about to move, that my chair is about to shift, etc.—and part of that is a prediction that my own muscles will in fact execute the planned maneuvers.
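To make the "update the generative model on prediction errors" idea concrete, here is a minimal sketch of the bouncing-ball example. It assumes a single scalar prediction and a simple delta-rule update. The function name `update_prediction`, the learning rate, and the starting value are all illustrative choices, not claims about what the cortex actually computes.

```python
def update_prediction(p_bounce: float, bounced: bool, learning_rate: float = 0.5) -> float:
    """Delta-rule update: nudge the prediction toward what was actually observed."""
    outcome = 1.0 if bounced else 0.0
    prediction_error = outcome - p_bounce
    return p_bounce + learning_rate * prediction_error


# Start out expecting the ball to bounce, then watch it fail to bounce three times.
p = 0.9
history = [p]
for bounced in [False, False, False]:
    p = update_prediction(p, bounced)
    history.append(p)

print(history)
```

Each failed bounce produces a negative prediction error, so the expectation of a bounce shrinks on every trial.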

So just to be explicit, the following seems perfectly fine to me: First you say “Hmm, I think maybe the thalamocortical system in the mammalian brain processes sensory inputs via approximate Bayesian inference”, and then you start doing a bunch of calculations related to that, and maybe you’ll even find that some of those calculations involve a term labeled “variational free energy”. OK cool, good luck with that, I have no objections. (Or if I do, they’re outside the scope of this post.) Note that this hypothesis about the thalamocortical system is substantive—it might be true or it might be false—unlike FEP as discussed shortly.

Instead, my complaint here is about the Free Energy Principle as originally conceived by Friston, i.e. as a grand unified theory of the whole brain, even including things like the circuit deep in your brainstem that regulates your heart rate.

OK, now that we’re hopefully on the same page about exactly what I am and am not ranting about, let the rant begin!

1. The Free Energy Principle is an unfalsifiable tautology

It is widely accepted that FEP is an unfalsifiable tautology, including by proponents—see for example Beren Millidge, or Friston himself.

By the same token, once we find a computer-verified proof of any math theorem, we have revealed that it too is an unfalsifiable tautology. Even Fermat’s Last Theorem is now known to be a direct logical consequence of the axioms of math—arguably just a fancy way of writing 0=0.

So again, FEP is an unfalsifiable tautology. What does that mean in practice? Well, it means that I am entitled to never think about FEP. Anything that you can derive from FEP, you can derive directly from the same underlying premises from which FEP itself can be proven, without ever mentioning FEP.

(The underlying premises in question are something like “it’s a thing with homeostasis and bodily integrity”. So, by the way: if someone tells you “FEP implies organisms do X”, and if you can think of an extremely simple toy model of something with homeostasis & bodily integrity that doesn’t do X, then you can go tell that person that they’re wrong about what FEP implies!)

So the question is really whether FEP is helpful.

Here are two possible analogies:

- (1) Noether’s Theorem (if the laws of physics have a symmetry, they also have a corresponding conservation law) is also an unfalsifiable tautology.

- (2) Some pointless bit of math trivia (any arbitrary numerical coincidence will do) is also an unfalsifiable tautology.

In both cases, I don’t have to mention these facts. But in the case of (1)—but not (2)—I want to.

More specifically, here’s a very specific physics fact that is important in practice: “FACT: if a molecule is floating in space, without any light, collisions, etc., then it will have a conserved angular momentum”. I can easily prove this fact by noting that it follows from Noether’s Theorem. But I don’t have to, if I don’t want to; I could also prove it directly from first principles.

However: proving this fact from first principles is no easier than first proving Noether’s Theorem in its full generality, and then deriving this fact as a special case. In fact, if you ask me on an exam to prove this fact from first principles without using Noether’s theorem, that’s pretty much exactly what I would do—I would write out something awfully similar to the (extremely short) fully-general quantum-mechanical proof of Noether’s theorem.

So avoiding (1) is kinda silly—if I try to avoid talking about (1), then I find myself tripping over (1) in the course of talking about lots of other things that are of direct practical interest. Whereas avoiding (2) is perfectly sensible—if I don’t deliberately bring it up, it will never come up organically. It’s pointless trivia. It doesn’t help me with anything.

So, which category is FEP in?

I have yet to see any concrete algorithmic claim about the brain that was not more easily and intuitively (from my perspective) discussed without mentioning FEP.

For example, at the top I mentioned that the cortex predicts upcoming sensory inputs and updates its models when there are prediction errors. This idea is true and important. And it’s also very intuitive. People can understand this idea without understanding FEP. Indeed, somebody invented this idea long before FEP existed.

I’ll come back to two more examples in Sections 3 & 4.

2. The FEP is applicable to both bacteria and human brains. So it’s probably a bad starting point for understanding how human brains work

What’s the best way to think about the human cerebral cortex? Or the striatum? Or the lateral habenula? There are neurons sending signals from retrosplenial cortex to the superior colliculus—what are those signals for? How does curiosity work in humans? What about creativity? What about human self-reflection and consciousness? What about borderline personality disorder?

None of these questions are analogous to questions about bacteria. Or, well, to the very limited extent that there are analogies (maybe some bacteria have novelty-detection systems that are vaguely analogous to human curiosity / creativity if you squint?), I don’t particularly expect the answers to these questions to be similar in bacteria versus humans.

Yet FEP applies equally well to bacteria and humans.

So it seems very odd to expect that FEP would be a helpful first step towards answering these questions.

3. It’s easier to think of a feedback control system as a feedback control system, and not as an active inference system

Let’s consider a simple thermostat. There’s a metal coil that moves in response to temperature, and when it moves beyond a setpoint it flips on a heater. It’s a simple feedback control system.

There’s also a galaxy-brain “active inference” way of thinking about this same system. The thermostat-heater system is “predicting” that the room will maintain a constant temperature, and the heater makes those “predictions” come true. There’s even a paper going through all the details (of a somewhat-similar class of systems).

OK, now here’s a quiz: (1) What happens if the thermostat spring mechanism is a bit sticky (hysteretic)? (2) What happens if there are two thermostats connected to the same heater, via an AND gate? (3) …or an OR gate? (4) What happens if the heater can only turn on once per hour?

I bet that people thinking about the thermostat-heater system in the normal way (as a feedback control system) would nail all these quiz questions easily. They’re not trick questions! We have very good intuitions here.
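As a rough illustration of the "normal" control-system view, quiz question (1) can be explored with a few lines of simulation. This is only a sketch: the room model and every constant in it (outdoor temperature, leak rate, heater power) are made-up values, and `simulate` is a hypothetical helper, not something from the paper mentioned above.

```python
def simulate(setpoint=20.0, hysteresis=0.0, steps=200):
    """Simulate a room heated by a bang-bang thermostat.

    All physical constants are illustrative assumptions.
    Returns the temperature trace and the number of heater on/off switches.
    """
    outside = 5.0        # outdoor temperature (deg C), held constant
    leak = 0.05          # fraction of the indoor/outdoor gap lost per step
    heater_power = 1.5   # degrees added per step while the heater is on
    temp = 15.0
    heater_on = False
    temps, switches = [], 0
    for _ in range(steps):
        # Thermostat: turn on below the lower threshold, off above the upper one.
        if temp < setpoint - hysteresis:
            new_state = True
        elif temp > setpoint + hysteresis:
            new_state = False
        else:
            new_state = heater_on  # inside the dead band: keep the current state
        if new_state != heater_on:
            switches += 1
        heater_on = new_state
        # Room: heat leaks toward the outdoor temperature; the heater adds heat.
        temp += leak * (outside - temp) + (heater_power if heater_on else 0.0)
        temps.append(temp)
    return temps, switches


temps_tight, switches_tight = simulate(hysteresis=0.0)
temps_sticky, switches_sticky = simulate(hysteresis=1.0)
print("switches without hysteresis:", switches_tight)
print("switches with hysteresis:   ", switches_sticky)
```

The result matches ordinary control-system intuition: a sticky thermostat switches the heater less often, at the cost of a wider temperature swing around the setpoint.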

But I bet that people attempting to think about the thermostat-heater system in the galaxy-brain “active inference” way would have a very hard time with this quiz. It’s probably technically possible to derive a set of equations analogous to those in the above-mentioned paper and use those equations to answer these quiz questions, but I don’t think it’s easy. Really, I’d bet that even the people who wrote that paper would probably answer the quiz questions in practice by thinking about the system in the normal control-system way, not the galaxy-brain thing where the system is “predicting” that the room temperature is constant.

What’s true for thermostats is, I claim, even more true for more complicated control systems.

My own background is physics, not control systems engineering. I can, however, brag that at an old job, I once designed an unusually complicated control system that had to meet a bunch of crazy specs, and involved multiple interacting loops of both feedback and anticipatory-feedforward control. I wrote down a design that I thought would work, and when we did a detailed numerical simulation, it worked on the computer just like I imagined it working in my head. (I believe that the experimental tests also worked as expected, although I had gotten reassigned to a different project in the meantime and am hazy on the details.)

The way that I successfully designed this awesome feedforward-feedback control system was by thinking about it in the normal way, not the galaxy-brain “active inference” way. Granted, I hadn’t heard of active inference at the time. But now I have, and I can vouch that this knowledge would not have helped me design this system.

That’s just me, but I have also worked with numerous engineers designing numerous real-world control systems, and none of them ever mentioned active inference as a useful way to think about what’s going on in any kind of control system.

And these days, I often think about various feedback control systems in the human brain, and I likewise find it very fruitful to think about them using my normal intuitions about how feedback signals work etc., and I find it utterly unhelpful to think of them as active inference systems.

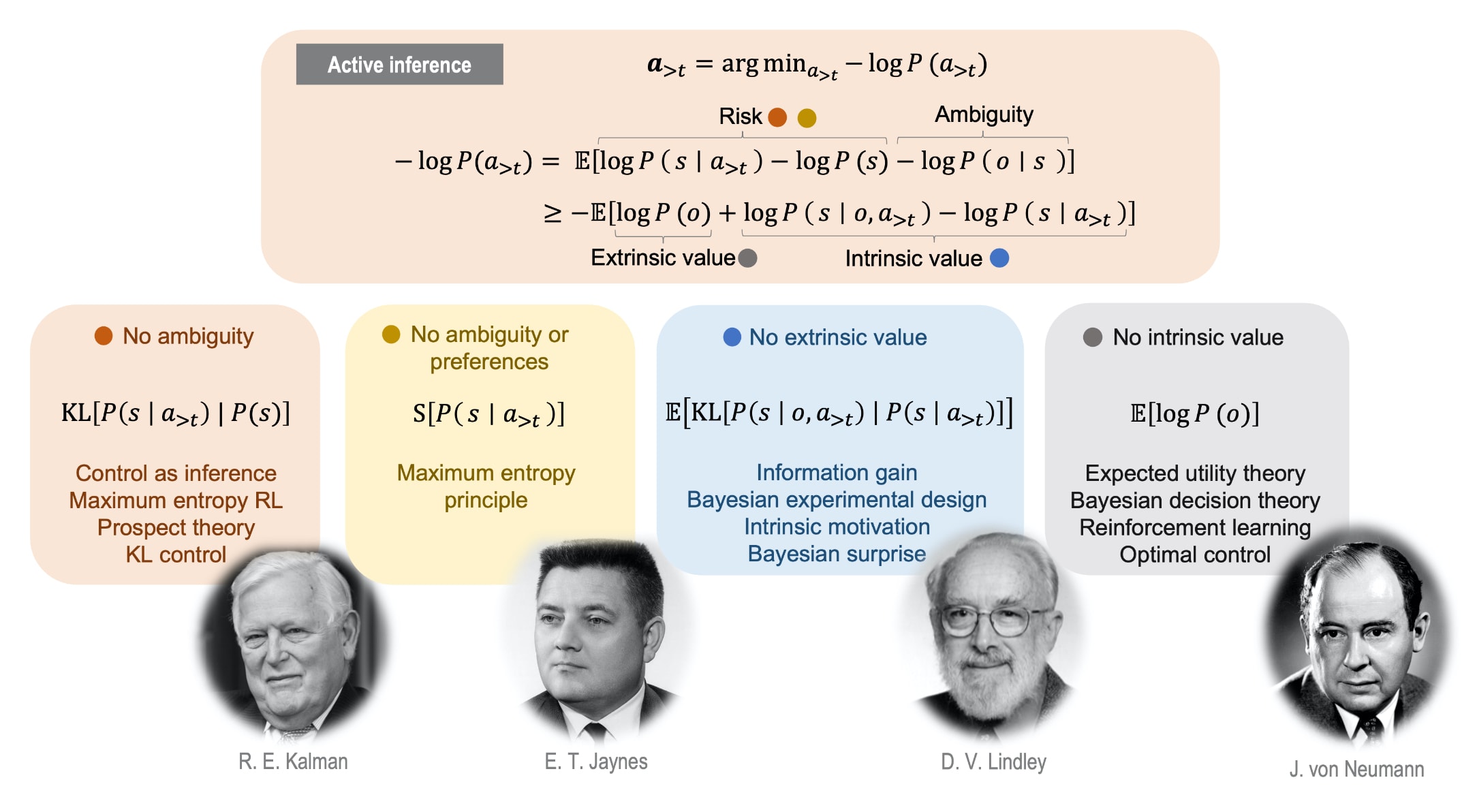

4. Likewise, it’s easier to think of a reinforcement learning (RL) system as an RL system, and not as an active inference system

Pretty much ditto the previous section.

Consider a cold-blooded lizard that goes to warm spots when it feels cold and cold spots when it feels hot. Suppose (for the sake of argument) that what’s happening behind the scenes is an RL algorithm in its brain, whose reward function is external temperature when the lizard feels cold, and whose reward function is negative external temperature when the lizard feels hot.

- We can talk about this in the “normal” way, as a certain RL algorithm with a certain reward function, as per the previous sentence.

- …Or we can talk about this in the galaxy-brain “active inference” way, where the lizard is (implicitly) “predicting” that its body temperature will remain constant, and taking actions to make this “prediction” come true.

I claim that we should think about it in the normal way. I think that the galaxy-brain “active inference” perspective is just adding a lot of confusion for no benefit. Again, see previous section—I could make this point by constructing a quiz as above, or by noting that actual RL practitioners almost universally don’t find the galaxy-brain perspective to be helpful, or by noting that I myself think about RL all the time, including in the brain, and my anecdotal experience is that the galaxy-brain perspective is not helpful.
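For concreteness, the "normal" description of the lizard fits in a few lines. This is a sketch under stated assumptions: the names `lizard_reward` and `best_spot`, the example temperatures, and the zero reward when the lizard feels neither hot nor cold are all my own illustrative choices, and a greedy pick over known spots stands in for whatever RL algorithm is actually running.

```python
def lizard_reward(external_temp: float, feels: str) -> float:
    """Reward function from the text: +temperature when cold, -temperature when hot."""
    if feels == "cold":
        return external_temp
    if feels == "hot":
        return -external_temp
    return 0.0  # assumption: no reward signal when comfortable (not specified in the text)


# Hypothetical spots and their temperatures in deg C.
spots = {"sunny rock": 35.0, "shade": 20.0, "burrow": 12.0}


def best_spot(feels: str) -> str:
    """Greedy stand-in for a learned policy: pick the spot with the highest reward."""
    return max(spots, key=lambda name: lizard_reward(spots[name], feels))


print(best_spot("cold"))
print(best_spot("hot"))
```

Nothing in this description requires saying that the lizard "predicts" its body temperature; the reward function and the policy carry the whole story.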

5. It’s very important to distinguish explicit prediction from implicit prediction—and FEP-adjacent literature is very bad at this

(Or in everyday language: It’s very important to distinguish “actual predictions” from “things that are not predictions at all except in some weird galaxy-brain sense”)

Consider two things: (1) an old-fashioned thermostat-heater system as in Section 3 above, (2) A weather-forecasting supercomputer.

As mentioned in Section 3, in a certain galaxy-brain sense, (1) is a feedback control system that (implicitly) “predicts” that the temperature of the room will be constant.

Whereas (2) is explicitly designed to “predict”, in the normal sense of the word—i.e. creating a signal right now that is optimized to approximate a different signal that will occur in the future.
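The difference between (1) and (2) shows up directly if you sketch both in code. The following is a toy illustration only: `thermostat` and `fit_forecaster` are hypothetical names, and a one-parameter least-squares model is a deliberately crude stand-in for a weather-forecasting supercomputer.

```python
def thermostat(temp: float, setpoint: float = 20.0) -> bool:
    """System (1): maps the current reading to heater on/off. No forecast is computed."""
    return temp < setpoint


def fit_forecaster(series):
    """System (2): fit `next = a * current` by least squares on past data.

    The output is explicitly optimized to approximate a signal that arrives later.
    """
    numerator = sum(x * y for x, y in zip(series, series[1:]))
    denominator = sum(x * x for x in series[:-1])
    return numerator / denominator


past = [8.0, 4.0, 2.0, 1.0]
a = fit_forecaster(past)
forecast = a * past[-1]  # an explicit prediction that can later be scored against reality

print("heater on?", thermostat(18.0))
print("forecast for next step:", forecast)
```

Only the second system produces a number that can be compared against a future observation and graded as right or wrong.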

I think (1) and (2) are two different things, but Friston-style thinking is to unify them together. At best, somewhere in the fine print of a Friston paper, you'll find a distinction between “implicit prediction” and “explicit prediction”, but other times it's not even mentioned, AFAICT.

And thus, as absurd as it sounds, I really think that one of the ways that the predictive processing people go wrong is by under-emphasizing the importance of [explicit] prediction. If we use the word “prediction” to refer to a thermostat connected to a heater, then the word practically loses all meaning, and we’re prone to forgetting that there is a really important special thing that the human cortex can do and that a mechanical thermostat cannot—i.e. build and store complex explicit predictive models and query them for intelligent, flexible planning and foresight.

6. FEP-adjacent literature is also sometimes bad at distinguishing within-lifetime learning from evolutionary learning

I haven’t been keeping track of how often I’ve seen this, but for the record, I wrote down this complaint in my notes after reading a (FEP-centric) Mark Solms book.

7. “Changing your predictions to match the world” and “Changing the world to match your predictions” are (at least partly) two different systems / algorithms in the brain. So lumping them together is counterproductive

Yes they sound related. Yes you can write one equation that unifies them. But they can’t be the same algorithm, for the following reason:

- “Changing your predictions to match the world” is a (self-) supervised learning problem. When a prediction fails, there’s a ground truth about what you should have predicted instead. More technically, you get a full error gradient “for free” with each query, at least in principle. Both ML algorithms and brains use those sensory prediction errors to update internal models, in a way that relies on the rich high-dimensional error information that arrives immediately-after-the-fact.

- “Changing the world to match your predictions” is a reinforcement learning (RL) problem. No matter what action you take, there is no ground truth about what action you counterfactually should have taken. So you can’t use a supervised learning algorithm. You need a different algorithm.

Since they’re (at least partly) two different algorithms, unifying them is a way of moving away from a “gears-level” understanding of how the brain works. They shouldn’t be the same thing in your mental model, if they’re not the same thing in the brain.
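The asymmetry between the two learning problems can be sketched in code. This is a minimal illustration, assuming a linear delta-rule for the predictive model and a simple bandit-style value update for the action side; neither `supervised_update` nor `bandit_update` is meant as a model of what the brain actually does.

```python
def supervised_update(prediction, observation, lr=0.1):
    """Self-supervised case: every component gets its own error signal for free."""
    return [p + lr * (o - p) for p, o in zip(prediction, observation)]


def bandit_update(values, action, reward, lr=0.1):
    """RL case: one scalar reward, informative only about the action actually taken."""
    new_values = list(values)
    new_values[action] += lr * (reward - new_values[action])
    return new_values


# Predicting a 3-dimensional sensory input: the full error vector is available.
prediction = supervised_update([0.0, 0.0, 0.0], [1.0, -2.0, 0.5])

# Choosing among 3 actions: we learn nothing about the two actions we did not take.
values = bandit_update([0.0, 0.0, 0.0], action=1, reward=1.0)

print(prediction)
print(values)
```

After one step, the predictive model has moved in every dimension, while the action values have changed only for the action that was tried. That is why the RL side needs extra machinery such as exploration.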

(Side note: In actor-critic RL, the critic gets error information, and so we can think of the critic specifically as doing supervised learning. But the actor has to be doing something different, presumably balancing exploration with exploitation and so on. Anyway, as it turns out, I think that in the human brain, the RL critic is a different system from the generative world-model—the former is centered around the striatum, the latter around the cortex. So even if they’re both doing supervised learning, we should still put them into two different mental buckets.)

8. (Added Dec. 2024) It’s possible to want something without expecting it, and it’s possible to expect something without wanting it

This is an obvious common-sense fact, but seems deeply incompatible with the very foundation of Active Inference theory.

Bafflingly, for all I've read on Active Inference, I have yet to see anyone grappling with this contradiction—at least, not in a way that makes any sense to me.

(Added May 2023) For the record, my knowledge of FEP is based mainly on the books Surfing Uncertainty by Andy Clark, Predictive Mind by Jakob Hohwy, Hidden Spring by Mark Solms, How Emotions Are Made by Lisa Feldman Barrett, and Being You by Anil Seth; plus a number of papers (including by Friston) and blog posts. Also thanks to Eli Sennesh & Beren Millidge for patiently trying (and perhaps failing) to explain FEP to me at various points in my life.

Thanks Kevin McKee, Andrew Richardson, Thang Duong, and Randall O’Reilly for critical comments on an early draft of this post.

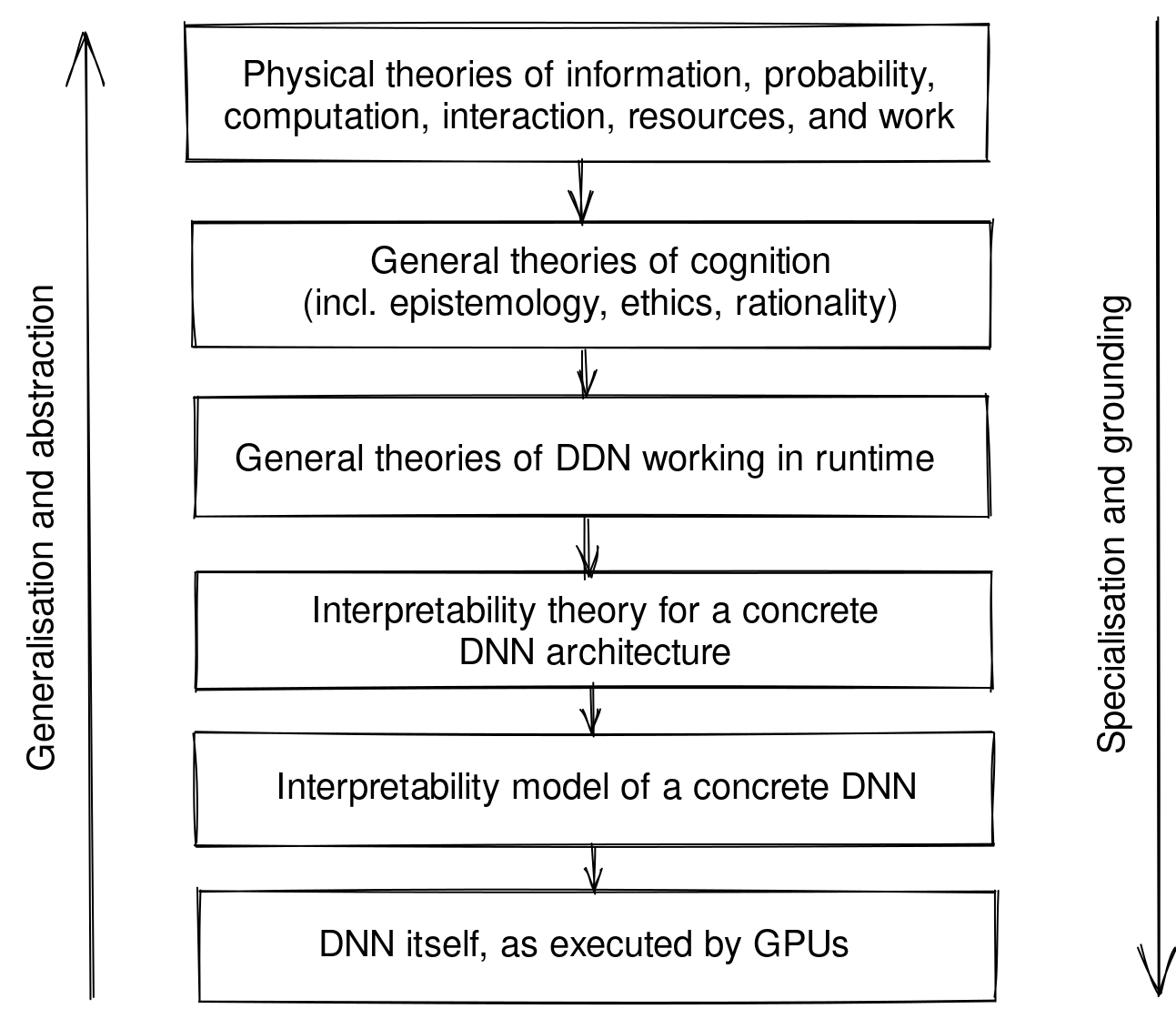

There is a great deal of confusion regarding the whole point of the FEP research program: is it a tautology, does it apply to flames, and so on. This is unfortunate because the goal of the research program is actually quite interesting: to come up with a good definition of an agent (or any other thing, for that matter). That is why FEP proponents embrace the tautology criticism: they are proposing a mathematical definition of 'things' (using Markov blankets and Langevin dynamics) in order to construct precise mathematical notions of more complex and squishy concepts that separate life from non-life. Moreover, they seek to do so in a manner that is compatible with known physical theory. It may seem like overkill to try to nail down how a physical system can form a belief, but it's actually pretty critical for anyone who is not a dualist. Moreover, because we don't currently have such a mathematical framework, we have no idea if the manner in which we discuss life and thinking is even coherent. Think about Russell's paradox. Prior to the late 19th century, it was considered so intuitively obvious that a set could be defined by a property that the so-called axiom of unrestricted comprehension literally went without saying. Only in the attempt to formalize set theory was it discovered that this axiom had to go. By analogy, only in the attempt to construct a formal description of how matter can form beliefs do we have any chance of determining if our notion of 'belief' is actually consistent with physical theories.

While I have no idea how to accomplish such an ambitious goal, it seems clear to me that the reinforcement learning paradigm is not suited to the task. This is because, in such a setting, definitions matter, and the RL definition of an agent leaves a lot to be desired. In RL, an agent is defined by (a) its sensory and action space, (b) its inference engine, and (c) the reward function it is trying to maximize. Ignoring the matter of identifying sensory and action space, it should be clear this is a practical definition, not a principled one, as it is under-constrained. This isn't just because I can add a constant to reward without altering policy or something silly like that; it is because (1) it is not obvious how to identify the sensory and action space and (2) inference and reward are fundamentally conflated. Item (1) leads to questions like: does my brain end at the base of my skull, the tips of my fingers, or the items I have arranged on my desk? The Markov blanket component of the FEP attempts to address this, and while I think it still needs work, it has the right flavor. Item (2), however, is much more problematic. In RL, policies are computed by convolving beliefs (the output of the inference engine) with reward and selecting the best option. This convolution + max operation means that if your model fails to predict behavior, it could be because you were wrong about the inference engine or wrong about the reward function. Unfortunately, it is impossible to determine which you were wrong about without additional assumptions. For example, in MaxEnt inverse RL one has to assume (incredibly) that inference is Bayes optimal and (reasonably) that equally rewarding paths are equally likely to occur. Regardless, this kind of ambiguity is a hallmark of a bad definition because it relies on a function from beliefs and reward to observations of behavior that is not uniquely invertible.
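The belief/reward ambiguity can be seen in a toy example. This is a sketch under simplifying assumptions: two actions, two outcomes, beliefs given as P(outcome | action), and the "convolution + max" step rendered as an expected-reward calculation followed by an argmax. The function `choose` and both agents are hypothetical.

```python
def choose(beliefs, reward):
    """Pick the action with the highest expected reward under the agent's beliefs.

    beliefs[a][s] is the agent's probability of outcome s after taking action a.
    """
    expected = [sum(p * r for p, r in zip(row, reward)) for row in beliefs]
    return max(range(len(expected)), key=expected.__getitem__)


# Agent A: thinks action 0 usually leads to outcome 0, and likes outcome 0.
beliefs_a = [[0.9, 0.1], [0.1, 0.9]]
reward_a = [1.0, 0.0]

# Agent B: has the opposite beliefs AND the opposite preferences.
beliefs_b = [[0.1, 0.9], [0.9, 0.1]]
reward_b = [0.0, 1.0]

print(choose(beliefs_a, reward_a))
print(choose(beliefs_b, reward_b))
```

Both agents pick the same action, so observing behavior alone cannot tell you whether you have the inference engine or the reward function wrong.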

In contrast, FEP advocates propose a somewhat 'better' definition of an agent. This is accomplished by identifying the necessary properties of sensor and action spaces, i.e. they form a Markov blanket and, in a manner similar to that used in systems identification theory, define an agent's type by the statistics of that blanket. They then replace arbitrary reward functions with negative surprise. Though it doesn't quite work for very technical reasons, this has the flavor of a good definition and a necessary principle. After all, if an object or agent type is defined by the statistics of its boundary, then clearly a necessary description of what an agent is doing is that it is not straying too far from its definition.

That was more than I intended to write, but the point is that precision and consistency checks require good definitions, i.e. a tautology. On that front, the FEP is currently the only game in town. It's not a perfect principle and its presentation leaves much to be desired, but it seems to me that something very much like it will be needed if we ever wish to understand the relationship between 'mind' and matter.

Thanks again! Feel free to stop responding if you’re busy.

Here’s where I’m at so far. Let’s forget about human brains and just talk about how we should design an AGI.

One thing we can do is design an AGI whose source code straightforwardly resembles model-based reinforcement learning. So the code has data structures for a critic / value-function, and a reward function, and TD learning, and a world-model, and so on.

- This has the advantage that (I claim) it can actually work. (Not today, but after further algorithmic progress.) After all, I am confident that h

...