I have started using a visual metaphor to diagram biases as I try to remove or mitigate them in myself. I have found this incredibly useful, particularly when dealing with multiple compounding biases.

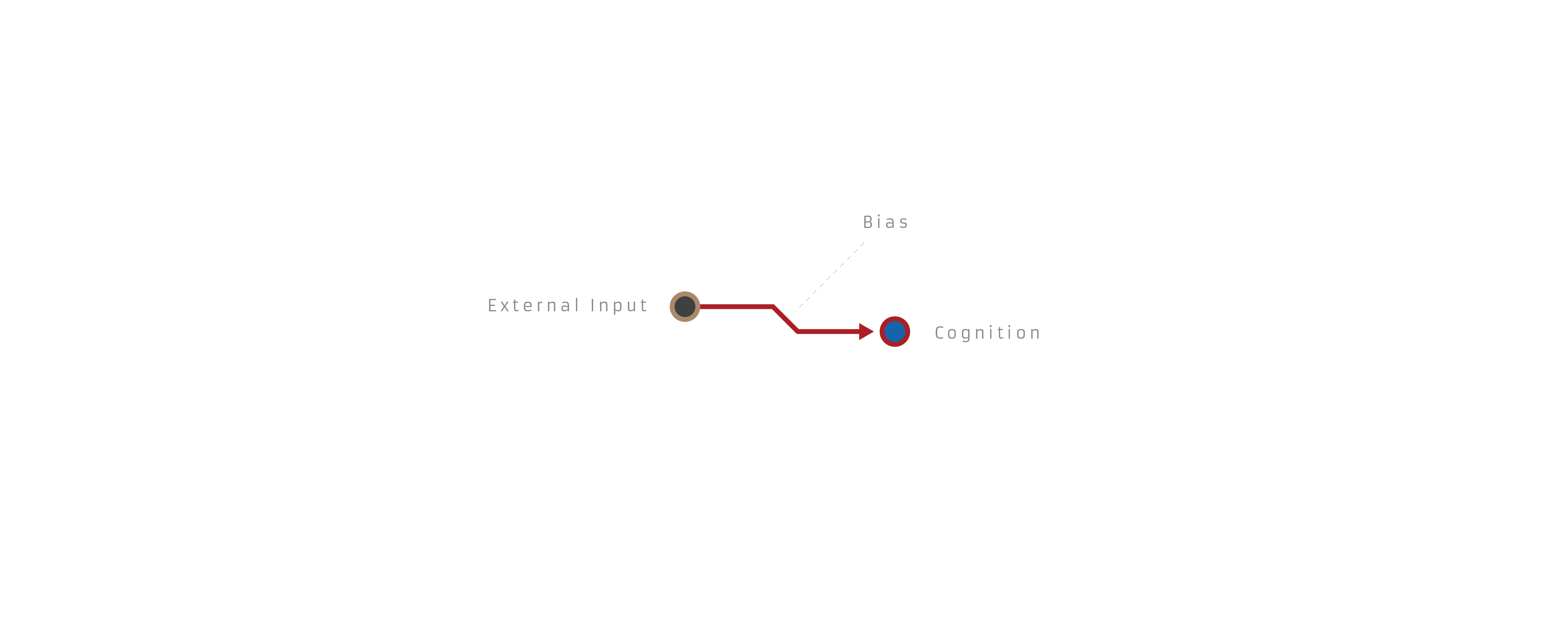

I view an inference as an interaction between external inputs/premises and the resulting cognitions/conclusions. It can be read either as "if x then y," or "x therefore y." A basic inference looks like this:

A biased inference looks like this:

This is obviously a simplification of complex cognitive shit, but it's meant to be more of a functional interface than any kind of theory.

So to run through a few example biases, the fallacy of the undistributed middle:
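As a concrete instance of that fallacy, here is a minimal sketch using made-up sets (the names `mammals`, `dogs`, and `cats` are illustrative assumptions, not something from the diagram). "All A are C" and "all B are C" do not give you "all A are B," because the middle term C is never distributed:

```python
# Hypothetical example sets; the names are illustrative only.
mammals = {"dog", "cat", "whale"}
dogs = {"dog"}
cats = {"cat"}

# Both premises hold: all dogs are mammals, and all cats are mammals.
premise_1 = dogs <= mammals   # subset test
premise_2 = cats <= mammals

# The fallacious conclusion "all dogs are cats" does not follow.
conclusion = dogs <= cats

print(premise_1, premise_2, conclusion)
```

Both premises evaluate as true while the conclusion is false, which is the "jog" between input and conclusion in this case.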

The planning fallacy:

Planning fallacy corrected:

A little awkward, but it can capture basic failures in Bayesian reasoning as well:

Bayesian reasoning corrected:
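For a numeric feel, one common Bayesian failure is base-rate neglect: reading P(evidence | hypothesis) as if it were P(hypothesis | evidence). The sketch below uses invented numbers for a hypothetical test, and it is an assumption on my part that this is the kind of failure meant here. It contrasts the biased read with the posterior from Bayes' rule:

```python
def posterior(prior, p_e_given_h, p_e_given_not_h):
    """Return P(H | E) using Bayes' rule."""
    evidence = p_e_given_h * prior + p_e_given_not_h * (1 - prior)
    return p_e_given_h * prior / evidence

# Made-up numbers for a hypothetical test (assumptions for illustration).
prior = 0.01                # base rate of the hypothesis
sensitivity = 0.9           # P(positive | hypothesis true)
false_positive_rate = 0.05  # P(positive | hypothesis false)

# Biased read: ignore the base rate and treat sensitivity as the answer.
biased = sensitivity

# Corrected read: weigh the evidence against the prior.
corrected = posterior(prior, sensitivity, false_positive_rate)

print(f"biased: {biased:.2f}, corrected: {corrected:.2f}")
```

With these particular numbers the corrected estimate comes out far below the biased one, because the low prior dominates.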

And an example of compounding biases resulting in a distorted worldview:

I'm curious if anyone sees downsides to this framework, has other ideas to improve it, or thinks I'm hopelessly naive for even trying to capture human reasoning in a tidy diagram.

I don't see how this adds any value. For it to add value, you would have to have a coherent meaning for what a jog in the line represents, including notation that made it clear what an overcompensation for bias looks like and how that is distinguished from the unbiased picture, which must work even when you do not know, a priori, what the unbiased conclusion is.

I think my comments about it being helpful in working through biases led people to think I intended these primarily as active problem-solving devices. Of course you can't just draw a diagram with a jog in it and then say "Aha! That was a bias!" If anything, I think (particularly in more complex cases) the visuals could help make biases more tangible, almost as a kind of mnemonic device to internalize in the same way that you might create a diagram to help you study for a test. I would like to make the diagrams more robust to serve as a visua...