Various comments, written while reading:

The broad categories of causal/evidential/logical are definitely right in terms of what people generally talk about, but it is important to keep in mind that these are clusters rather than fully formalized options. There are many different formalizations of causal counterfactuals, which may have significantly different consequences. Though, around here, people think of Pearlian causality almost exclusively.

"Evidential" means basically one thing, but we can differentiate between what happens in different theories of uncertainty. Obviously, Bayesianism is popular in these parts, but we also might be talking about evidential reasoning in a logically uncertain framework, like logical induction.

Logical counterfactuals are wide open, since there's no accepted account of what exactly they are. Though, modal DT is a concrete proposal which is often discussed.

Again, the causal/evidential/logical split seems good for capturing how people mostly talk about things here, but internally I think of it more as two dimensions: causal/evidential and logical/not. Logical counterfactuals are more or less the "causal and logical" option, conveying intuitions of there being some kind of "logical causality" which tells you how to take counterfactuals.

Also, getting into nitpicks: some might say "evidential" is the non-counterfactual option. A broader term which could be used is "conditional", with counterfactual conditionals (aka subjunctive conditionals) being a subtype. I think evidential conditionals would fall under "indicative conditional" as opposed to "counterfactual conditional". Academic philosophers might also nitpick that logical counterfactuals are not counterfactuals. "Counterfactual" in academic philosophy usually does not include the possibility of counterfacting on logical impossibilities; "counterlogical" is used when logical impossibilities are being considered. Posts on this forum usually ignore all the nitpicks in this paragraph, and I'm not sure I'm even capturing the language of academic decision theorists accurately -- just attempting to mention some distinctions I've encountered.

Other Dimensions:

You're right that reflective consistency is something which is supposed to emerge (or not emerge) from the specification of the decision theory. If there were a 'reflective consistency' option, we would want to just set it to 'yes'; but unfortunately, things are not so easy.

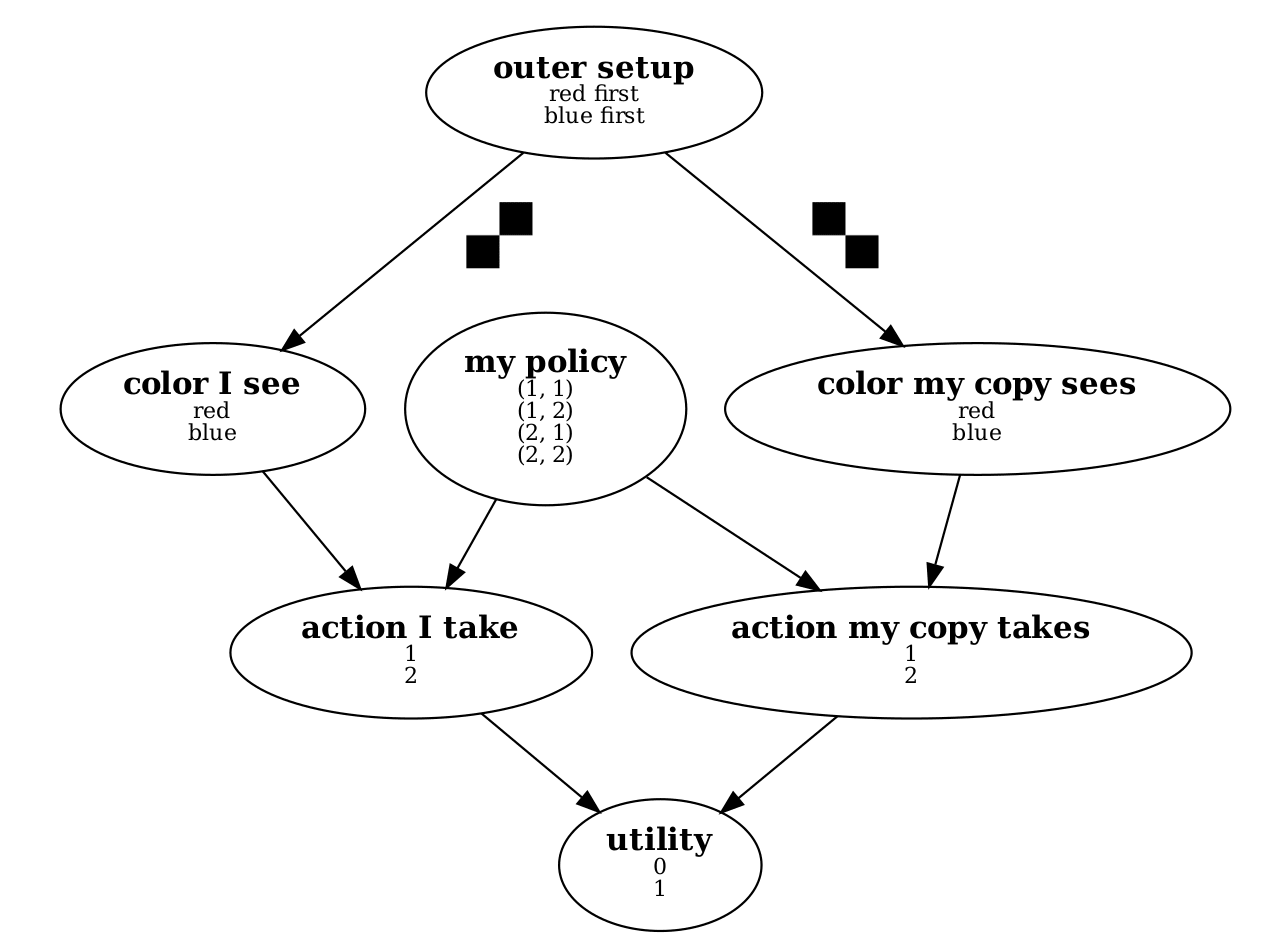

Another source of variation, related to your 'graphical models' point, could broadly be called choice of formalism. A decision problem could be given as an extensive-form game, a causal Bayes net, a program (probabilistic or deterministic), a logical theory (with some choices about how actions, utilities, etc get represented, whether causality needs to be specified, and so on), or many other possibilities.

This is critical; new formalisms such as reflective oracles may allow us to accomplish new things, illuminate problems which were previously murky, make distinctions between things which were previously being conflated, and so on. However, the high-level clusters like CDT, EDT, FDT, and UDT do not specify formalism -- they are more general ideas, which can be formalized in multiple ways.

You may find this comment that Rob Bensinger left on one of my questions interesting:

"The main datapoint that Rob left out: one reason we don't call it UDT (or cite Wei Dai much) is that Wei Dai doesn't endorse FDT's focus on causal-graph-style counterpossible reasoning; IIRC he's holding out for an approach to counterpossible reasoning that falls out of evidential-style conditioning on a logically uncertain distribution. (FWIW I tried to make the formalization we chose in the paper general enough to technically include that possibility, though Wei and I disagree here and that's definitely not where the paper put its emphasis. I don't want to put words in Wei Dai's mouth, but IIRC, this is also a reason Wei Dai declined to be listed as a co-author.)"

Rob also left another comment explaining the renaming from UDT to FDT.

Chris asked me via PM, "I’m curious, have you written any posts about why you hold that position?"

I don't think I have, but I'll give the reasons here:

- "evidential-style conditioning on a logically uncertain distribution" seems simpler / more elegant to me.

- I'm not aware of a compelling argument for "causal-graph-style counterpossible reasoning". There are definitely some unresolved problems with evidential-style UDT and I do endorse people looking into causal-style FDT as an alternative but I'm not convinced the solutions actually lie in that direction. (https://sideways-view.com/2018/09/30/edt-vs-cdt-2-conditioning-on-the-impossible/ and links therein are relevant here.)

- Part of it is just historical, in that UDT was originally specified as "evidential-style conditioning on a logically uncertain distribution" and if I added my name as a co-author to a paper that focuses on causal-style decision theory, people would naturally wonder if something made me change my mind.

I'm actually still quite confused by the necessity of logical uncertainty for UDT. Most of the common problems like Newcomb's or Parfit's Hitchhiker don't seem to require it. Where does it come in?

(The only reference to it that I could find was on the LW wiki)

I think it's needed just to define what it means to condition on an action, i.e., if an agent conditions on "I make this decision" in order to compute its expected utility, what does that mean formally? You could make "I" a primitive element in the agent's ontology, but I think that runs into all kinds of problems. My solution was to make it a logical statement of the form "source code X outputs action/policy Y", and then to condition on it you need a logically uncertain distribution.

Hmm, I'm still confused. I can't figure out why we would need logical uncertainty in the typical case to figure out the consequences of "source code X outputs action/policy Y". Is there a simple problem where this is necessary or is this just a result of trying to solve for the general case?

Agents need to consider multiple actions and choose the one that has the best outcome. But we're supposing that the code representing the agent's decision only has one possible output. E.g., perhaps an agent is going to choose between action A and action B, and will end up choosing A. Then a sufficiently close examination of the agent's source code will reveal that the scenario "the agent chooses B" is logically inconsistent. But then it's not clear how the agent can reason about the desirability of "the agent chooses B" while evaluating its outcomes, if not via some mechanism for nontrivially reasoning about outcomes of logically inconsistent situations.

Do we need the ability to reason about logically inconsistent situations? Perhaps we could attempt to transform the question of logical counterfactuals into a question about consistent situations instead as I describe in this post? Or to put it another way, is the idea of logical counterfactuals an analogy or something that is supposed to be taken literally?

You can formalize UDT in a more standard game-theoretic setting, which allows many problems like Parfit's Hitchhiker to be dealt with, if that is enough for what you're interested in. However, the formalism assumes a lot about the world (such as the identity of the agent being a nonproblematic given, as Wei Dai mentions), so if you want to address questions of where that structure is coming from, you have to do something else.

The comment starting "The main datapoint that Rob left out..." is actually by Nate Soares. I cross-posted it to LW from an email conversation.

Hey, I'm interested in implementing some of these decision theories (and decision problems) in code. I have an initial version of CDT, EDT, and something I'm generically calling "FDT" (which I guess is actually some particular sub-variant of FDT) in Python here, with the core decision theories implemented in about 45 lines of Python code here. I'm wondering if anyone here might have suggestions on what it would look like to implement UDT in this framework -- either 1.0 or 1.1. I don't yet have a notion of "observation" in the code, so I can't yet implement e.g. Parfit's Hitchhiker or XOR blackmail. I'm interested in suggestions on what that would look like.

Any other comments or suggestions also much appreciated. I hope to turn this into a top-level post after implementing more decision problems and theories, and getting more feedback.

Here, I made it use graphviz: https://github.com/alexflint/decision-theory/pull/1

Earth ought to spend at least one programmer-year on basic science of decision theories. Any feature requests?

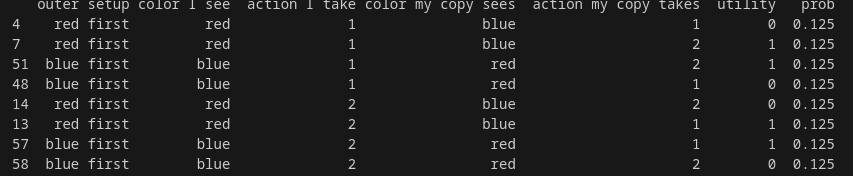

It would be very helpful for my own debugging to be able to see a table with all the possible worlds, their probabilities, and their utilities. Those worlds are enumerated explicitly in inference.py, and probabilities and utilities computed there also. Would be great to color them according to the value of intervention_node, in order to see which probabilities and utilities are contributing to which conditional expectations for the final argmax. That argmax is this one.

Your outer loop classification seems to be quite similar to an old post of mine: https://www.lesswrong.com/posts/MwetLcBPvshg9ePZB/action-theory-is-not-policy-theory-is-not-agent-theory

I hope that some standard terminology finally catches on!

(FDT(P,x))(x)

Should this be FDT(P,x)? As is, this looks to me like the second (x) introduces x into scope, and the first x is an out-of-scope usage.

It has been many years since I thought about this post, so what I say below could be wrong, but here's my current understanding:

I think what I was trying to say in the post is that FDT-policy returns a policy, so "FDT(P, x)" means "the policy I would use if P were my state of knowledge and x were the observation I saw". But that's a policy, i.e. a mapping from observations to actions, so we need to call that policy on the actual observation in order to get an action, hence (FDT(P,x))(x).

Now, it's not clear to me that FDT-policy actually needs the observation x in order to condition on what policy it is using. In other words, in the post I wrote the conditioned event as $\text{true}(\text{FDT}(\underline{P}, \underline{x}) = \pi)$, but perhaps this should have just been $\text{true}(\text{FDT}(\underline{P}) = \pi)$. In that case, the call to FDT should look like FDT(P), which is a policy, and then to get an action we would write FDT(P)(x).

The left hand side of the equation has type action (Hintze page 4: "An agent’s decision procedure takes sense data and outputs an action."), but the right hand side has type policy, right?

There I was just quoting from the Hintze paper so it's not clear what he meant. One interpretation is that the right hand side is just the definition of what "UDT(s)" means, so in that sense there wouldn't be a type error, UDT(s) would also be a policy. But also, you're right, a decision theory should in the end output an action. The right notation all comes down to what I said in the last paragraph of my previous comment, namely, does UDT1.1/FDT-policy need to know the sense data s (or 'observation x', in the other notation) in order to condition on the agent using a particular policy? If the answer is yes, UDT(s) is a policy, and UDT(s)(s) is the action. If the answer is no, then UDT is the policy (confusing! because UDT is also the 'decision algorithm' that finds the policy in the particular decision problem you are facing) and UDT(s) is the action. My best guess is that the answer to this question is 'no', so UDT is the policy and UDT(s) is the action, so your point about there being a type error is correct. But the notation in the Hintze paper makes it seem like somehow s is being used on the right hand side, which is possibly what confused me when I wrote the post.

Introduction

Summary

This post is a comparison of various existing decision theories, with a focus on decision theories that use logical counterfactuals (a.k.a. the kind of decision theories most discussed on LessWrong). The post compares the decision theories along outermost iteration (action vs policy vs algorithm), updatelessness (updateless or updateful), and type of counterfactual used (causal, conditional, logical). It then explains the decision theories in more detail, in particular giving an expected utility formula for each. The post then gives examples of specific existing decision problems where the decision theories give different answers.

Value-added

There are some other comparisons of decision theories (see the “Other comparisons” section), but they either (1) don’t focus on logical-counterfactual decision theories; or (2) are outdated (written before the new functional/logical decision theory terminology came about).

To give a more personal motivation, after reading through a bunch of papers and posts about these decision theories, and feeling like I understood the basic ideas, I remained highly confused about basic things like “How is UDT different from FDT?”, “Why was TDT deprecated?”, and “If TDT performs worse than FDT, then what’s one decision problem where they give different outputs?” This post hopes to clarify these and other questions.

None of the decision theory material in this post is novel. I am still learning the basics myself, and I would appreciate any corrections (even about subtle/nitpicky stuff).

Audience

This post is intended for people who are similarly confused about the differences between TDT, UDT, FDT, and LDT. In terms of reader background assumed, it would be good to know the statements of some standard decision theory problems (Newcomb’s problem, smoking lesion, Parfit’s hitchhiker, transparent box Newcomb’s problem, counterfactual mugging (a.k.a. curious benefactor; see page 56, footnote 89)) and the “correct” answers to them, and to have enough background in math to understand the expected utility formulas.

If you don’t have the background, I would recommend reading chapters 5 and 6 of Gary Drescher’s Good and Real (explains well the idea of subjunctive means–end relations), the FDT paper (explains well how FDT’s action selection variant works, and how FDT differs from CDT and EDT), “Cheating Death in Damascus”, and “Toward Idealized Decision Theory” (explains the difference between policy selection and logical counterfactuals well), and understanding what Wei Dai calls “decision theoretic thinking” (see comments: 1, 2, 3). I think a lot of (especially old) content on decision theory is confusingly written or unfriendly to beginners, and would recommend skipping around to find explanations that “click”.

Comparison dimensions

My main motivation is to try to distinguish between TDT, UDT, and FDT, so I focus on three dimensions for comparison that I think best display the differences between these decision theories.

Outermost iteration

All of the decision theories in this post iterate through some set of “options” (intentionally vague) at the outermost layer of execution to find the best “option”. However, the nature (type) of these “options” differs among the various theories. Most decision theories iterate through either actions or policies. When a decision theory iterates through actions (to find the best action), it is doing “action selection”, and the decision theory outputs a single action. When a decision theory iterates through policies (to find the best policy), it is doing “policy selection”, and outputs a single policy, which is an observation-to-action mapping. To get an action out of a decision theory that does policy selection (because what we really care about is knowing which action to take), we must call the policy on the actual observation.

Using the notation of the FDT paper, an action has type A while a policy has type X→A, where X is the set of observations. So given a policy π:X→A and observation x∈X, we get the action by calling π on x, i.e. π(x)∈A.

From the expected utility formula of the decision theory, you can tell action vs policy selection by seeing what variable comes beneath the argmax operator (the argmax operator is what does the outermost iteration); if it is a∈A (or similar) then it is iterating over actions, and if it is π∈Π (or similar), then it is iterating over policies.
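To make the type distinction concrete, here is a minimal sketch of action selection versus policy selection. The names `all_policies`, `action_selection`, and `policy_selection`, and the toy utility functions, are hypothetical and invented for illustration; they do not come from the FDT paper or any of the linked posts.

```python
from itertools import product
from typing import Callable, Dict, List

Action = str
Observation = str
Policy = Dict[Observation, Action]  # a finite observation-to-action mapping


def all_policies(observations: List[Observation],
                 actions: List[Action]) -> List[Policy]:
    """Enumerate every mapping from observations to actions."""
    return [dict(zip(observations, choice))
            for choice in product(actions, repeat=len(observations))]


def action_selection(actions: List[Action],
                     expected_utility: Callable[[Action], float]) -> Action:
    # The argmax variable is an action a in A, so the output has type A.
    return max(actions, key=expected_utility)


def policy_selection(policies: List[Policy],
                     expected_utility: Callable[[Policy], float]) -> Policy:
    # The argmax variable is a policy pi in Pi, so the output has type X -> A.
    return max(policies, key=expected_utility)


observations = ["x1", "x2"]
actions = ["a1", "a2"]
policies = all_policies(observations, actions)

# Toy utilities, invented purely to have something to maximize.
best_action = action_selection(actions, lambda a: 1.0 if a == "a2" else 0.0)
best_policy = policy_selection(
    policies, lambda pi: float(pi["x1"] == "a1") + float(pi["x2"] == "a2"))

print(best_action)        # an action
print(best_policy["x1"])  # calling the policy on an observation yields an action
```

With two observations and two actions there are four policies, which is why policy selection quickly becomes more expensive than action selection as the observation set grows.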

One exception to the above is UDT2, which seems to iterate over algorithms.

Updatelessness

In some decision problems, the agent makes an observation, and has the choice of updating on this observation before acting. Two examples of this are: in counterfactual mugging (a.k.a. curious benefactor), where the agent makes the observation that the coin has come up tails; and in the transparent box Newcomb’s problem, where the agent sees whether the big box is full or empty.

If the decision algorithm updates on the observation, it is updateful (a.k.a. “not updateless”). If it doesn’t update on the observation, it is updateless.

This idea is similar to Rawls’s “veil of ignorance”, where you must pick your moral principles, societal policies, etc., before you find out who you are in the world, or as if you didn’t know who you are in the world.

How can you tell if a decision theory is updateless? In its expected utility formula, if it conditions on the observation, it is updateful. In this case the probability factor looks like P(…∣…,OBS=x), where x is the observation (sometimes the observation is called “sense data” and is denoted by s). If a decision theory is updateless, the conditioning on “OBS=x” is absent. Updatelessness only makes a difference in decision problems that have observations.
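As a rough illustration of the difference, here is a sketch of counterfactual mugging with assumed payoffs: a fair coin is flipped, on tails the agent is asked to pay 100, and on heads the agent receives 10000 if and only if it would have paid on tails. The function names and numbers are hypothetical. The updateless version also relies on the heads payoff depending on what the agent does on tails, so it is not a pure test of updatelessness alone.

```python
P_COIN = {"heads": 0.5, "tails": 0.5}  # prior over the coin (assumed fair)
ACTIONS = ["pay", "refuse"]            # the agent's response when asked on tails


def utility(coin: str, pays_on_tails: bool) -> float:
    """Assumed payoffs for counterfactual mugging."""
    if coin == "tails":
        return -100.0 if pays_on_tails else 0.0
    # On heads the agent is rewarded only if it would have paid on tails.
    return 10000.0 if pays_on_tails else 0.0


def updateful_choice(observed_coin: str) -> str:
    # Conditions on OBS = observed_coin: only the observed world carries weight.
    return max(ACTIONS, key=lambda a: utility(observed_coin, a == "pay"))


def updateless_choice() -> str:
    # No conditioning on the observation: average over the prior on the coin.
    def expected_utility(a: str) -> float:
        return sum(p * utility(coin, a == "pay") for coin, p in P_COIN.items())
    return max(ACTIONS, key=expected_utility)


print(updateful_choice("tails"))  # refuse
print(updateless_choice())        # pay
```

After seeing tails, the updateful agent compares -100 against 0 and refuses. The updateless agent compares 0.5 · 10000 + 0.5 · (-100) = 4950 against 0 and pays.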

There seem to be different meanings of “updateless” in use. In this post I will use the above meaning. (I will try to post a question on LessWrong soon about these different meanings.)

Type of counterfactual

In the course of reasoning about a decision problem, the agent can construct counterfactuals or hypotheticals like “if I do this, then that happens”. There are several different kinds of counterfactuals, and decision theories are divided among them.

The three types of counterfactuals that will concern us are: causal, conditional/evidential, and logical/subjunctive. The distinctions between these are explained clearly in the FDT paper so I recommend reading that (and I won’t explain them here).

In the expected utility formula, if the probability factor looks like P(…∣…,ACT=a) then it is evidential, and if it looks like P(…∣…,do(ACT=a)) then it is causal. I have seen the logical counterfactual written in many ways:

Other dimensions that I ignore

There are many more dimensions along which decision theories differ, but I don’t understand these and they seem less relevant for comparing among the main logical-counterfactual decision theories, so I will just list them here but won’t go into them much later on in the post:

Comparison table along the given dimensions

Given the comparison dimensions above, the decision theories can be summarized as follows:

The general “shape” of the expected utility formulas will be:

$$\operatorname*{argmax}_{\text{outermost iteration}} \sum_{j=1}^{N} U(o_j) \cdot P(\text{OUTCOME} = o_j \mid \text{updatelessness}, \text{counterfactual})$$

Or sometimes:

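$$\operatorname*{argmax}_{\text{outermost iteration}} \sum_{j=1}^{N} U(o_j) \cdot P(\text{counterfactual} \mathrel{\Box\!\!\rightarrow} \text{OUTCOME} = o_j \mid \text{updatelessness})$$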

Explanations of each decision theory

This section elaborates on the comparison above by giving an expected value formula for each decision theory and explaining why each cell in the table takes that particular value. I won’t define the notation very clearly, since I am mostly collecting the various notations that have been used (so that you can look at the linked sources for the details). My goals are to explain how to fill in the table above and to show how all the existing variants in notation are saying the same thing.

UDT1 and FDT (iterate over actions)

I will describe UDT1 and FDT’s action variant together, because I think they give the same decisions (if there’s a decision problem where they differ, I would like to know about it). The main differences between the two seem to be:

In the original UDT post, the expected utility formula is written like this:

$$Y^* = \operatorname*{argmax}_{Y} \sum P_Y(\langle E_1, E_2, E_3, \ldots \rangle) \, U(\langle E_1, E_2, E_3, \ldots \rangle)$$

Here Y is an “output string” (which is basically an action). The sum is taken over all possible vectors of the execution histories. I prefer Tyrrell McAllister’s notation:

$$\operatorname*{argmax}_{Y \in \mathbf{Y}} \sum_{E \in \mathbf{E}} M(X, Y, E) \, U(E)$$

To explain the UDT1 row in the comparison table, note that:

In the FDT paper (p. 14), the action selection variant of FDT is written as follows:

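$$\begin{aligned} \text{FDT}(P, G, x) &= \operatorname*{argmax}_{a \in A} \mathbb{E}\big(U(\text{OUTCOME}) \mid \text{do}(\text{FDT}(\underline{P}, \underline{G}, \underline{x}) = a)\big) \\ &= \operatorname*{argmax}_{a \in A} \sum_{j=1}^{N} U(o_j) \cdot P\big(\text{OUTCOME} = o_j \mid \text{do}(\text{FDT}(\underline{P}, \underline{G}, \underline{x}) = a)\big) \end{aligned}$$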

Again, note that we are doing action selection (“$\operatorname{argmax}_{a \in A}$”), using logical counterfactuals (“$\text{do}(\text{FDT}(\underline{P}, \underline{G}, \underline{x}) = a)$”), and being updateless (absence of “OBS=x”).
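One way to get a feel for intervening on the decision function rather than on the act is a toy version of Newcomb’s problem. The sketch below assumes a perfect predictor that evaluates the same decision function as the agent, and uses the usual 1,000,000 and 1,000 payoffs. The function names are hypothetical, and this is only a caricature of the formula above, not an implementation of FDT: there is no graph G or distribution P, only the idea that setting the function’s output changes every place the function is evaluated.

```python
ACTIONS = ["one-box", "two-box"]


def payoff(action: str, prediction: str) -> float:
    """Assumed Newcomb payoffs: the big box is full iff one-boxing was predicted."""
    big_box = 1_000_000.0 if prediction == "one-box" else 0.0
    small_box = 1_000.0 if action == "two-box" else 0.0
    return big_box + small_box


def fdt_style_choice() -> str:
    # Intervening on the decision function's output changes both the agent's
    # act and the (assumed perfect) predictor's prediction, since both are
    # evaluations of the same function.
    return max(ACTIONS, key=lambda a: payoff(action=a, prediction=a))


def cdt_style_choice(p_predicted_one_box: float) -> str:
    # Intervening on the act alone leaves the prediction distribution fixed.
    def expected_utility(a: str) -> float:
        return (p_predicted_one_box * payoff(a, "one-box")
                + (1.0 - p_predicted_one_box) * payoff(a, "two-box"))
    return max(ACTIONS, key=expected_utility)


print(fdt_style_choice())      # one-box
print(cdt_style_choice(0.99))  # two-box
```

Whatever fixed probability the act-only intervention assigns to the prediction, two-boxing comes out exactly 1,000 ahead, which is why the second function two-boxes for any input.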

UDT1.1 and FDT (iterate over policies)

UDT1.1 is a decision theory introduced by Wei Dai’s post “Explicit Optimization of Global Strategy (Fixing a Bug in UDT1)”.

In Hintze (p. 4, 12) UDT1.1 is written as follows:

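$$\text{UDT}(s) = \operatorname*{argmax}_{f} \sum_{i=1}^{n} U(O_i) \cdot P\big(\ulcorner \text{UDT} := f : s \mapsto a \urcorner \mathrel{\Box\!\!\rightarrow} O_i\big)$$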

Here f iterates over functions that map sense data (s) to actions (a), U is the utility function, and $O_1, \ldots, O_n$ are outcomes.

Using Tyrrell McAllister’s notation, UDT1.1 looks like:

$$\text{UDT1.1}(\mathbf{X}, \mathbf{Y}, \mathbf{E}, M, I) = \operatorname*{argmax}_{f \in I} \sum_{E \in \mathbf{E}} M(f, E) \, U(E)$$

Using notation from the FDT paper plus a trick I saw on this Arbital page we can write the policy selection variant of FDT as:

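$$(\text{FDT}(P, x))(x) = \left( \operatorname*{argmax}_{\pi \in \Pi} \sum_{j=1}^{N} U(o_j) \cdot P\big(\text{OUTCOME} = o_j \mid \text{true}(\text{FDT}(\underline{P}, \underline{x}) = \pi)\big) \right)(x)$$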

On the right hand side, the large expression (the part inside and including the argmax) returns a policy, so to get the action we call the policy on the observation x.

The important things to note are that UDT1.1 and the policy selection variant of FDT:

TDT

My understanding of TDT is mainly from Hintze. I am aware of the TDT paper and skimmed it a while back, but did not revisit it in the course of writing this post.

Using notation from Hintze (p. 4, 11) the expected utility formula for TDT can be written as follows:

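$$\text{TDT}(s) = \operatorname*{argmax}_{a \in A} \sum_{i=1}^{n} U(O_i) \, P\big(\ulcorner \text{TDT}(s) := a \urcorner \mathrel{\Box\!\!\rightarrow} O_i \mid s\big)$$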

Here, s is a string of sense data (a.k.a. observation), A is the set of actions, U is the utility function, $O_1, \ldots, O_n$ are outcomes, and the corner quotes and boxed arrow □→ denote a logical counterfactual (“if the TDT algorithm were to output action a given input s”).

If I were to rewrite the above using notation from the FDT paper, it would look like:

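$$\text{TDT}(P, x) = \operatorname*{argmax}_{a \in A} \sum_{j=1}^{N} U(o_j) \cdot P\big(\text{OUTCOME} = o_j \mid \text{OBS} = x, \text{true}(\text{TDT}(\underline{P}, \underline{x}) = a)\big)$$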

The things to note are:

UDT2

I know very little about UDT2, but based on this comment by Wei Dai and this post by Vladimir Slepnev, it seems to iterate over algorithms rather than actions or policies, and I am assuming it didn’t abandon updatelessness and logical counterfactuals.

The following search queries might have more information:

LDT

LDT (logical decision theory) seems to be an umbrella decision theory that only requires the use of logical counterfactuals, leaving the iteration type and updatelessness unspecified. So my understanding is that UDT1, UDT1.1, UDT2, FDT, and TDT are all logical decision theories. See this Arbital page, which says:

The page also calls TDT a logical decision theory (listed under “non-general but useful logical decision theories”).

CDT

Using notation from the FDT paper (p. 13), we can write the expected utility formula for CDT as follows:

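$$\begin{aligned} \text{CDT}(P, G, x) &= \operatorname*{argmax}_{a \in A} \mathbb{E}\big(U(\text{OUTCOME}) \mid \text{do}(\text{ACT} = a), \text{OBS} = x\big) \\ &= \operatorname*{argmax}_{a \in A} \sum_{j=1}^{N} U(o_j) \cdot P\big(\text{OUTCOME} = o_j \mid \text{do}(\text{ACT} = a), \text{OBS} = x\big) \end{aligned}$$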

Things to note:

EDT

Using notation from the FDT paper (p. 12), we can write the expected utility formula for EDT as follows:

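$$\begin{aligned} \text{EDT}(P, x) &= \operatorname*{argmax}_{a \in A} \mathbb{E}\big(U(\text{OUTCOME}) \mid \text{OBS} = x, \text{ACT} = a\big) \\ &= \operatorname*{argmax}_{a \in A} \sum_{j=1}^{N} U(o_j) \cdot P\big(\text{OUTCOME} = o_j \mid \text{OBS} = x, \text{ACT} = a\big) \end{aligned}$$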

Things to note:

There are various versions of EDT (e.g. versions that smoke on the smoking lesion problem). The EDT in this post is the “naive” version. I don’t understand the more sophisticated versions of EDT, but the keyword for learning more about them seems to be the tickle defense.
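To see how the naive EDT formula and the CDT formula can come apart, here is a sketch of a toy smoking lesion model. All probabilities and utilities are made up for illustration, there is no observation (so the OBS=x term is dropped), and the function names are hypothetical. The assumed causal structure is that the lesion influences both smoking and cancer, while smoking has no causal effect on cancer.

```python
P_LESION = 0.2                                    # assumed prior P(lesion)
P_SMOKE_GIVEN_LESION = {True: 0.9, False: 0.1}    # assumed P(smoke | lesion)
P_CANCER_GIVEN_LESION = {True: 0.8, False: 0.05}  # assumed P(cancer | lesion)
ACTIONS = [True, False]                           # True means "smoke"


def utility(smoke: bool, cancer: bool) -> float:
    # Assumed utilities: smoking is mildly pleasant, cancer is very bad.
    return (1_000.0 if smoke else 0.0) - (1_000_000.0 if cancer else 0.0)


def prior_lesion(lesion: bool) -> float:
    return P_LESION if lesion else 1.0 - P_LESION


def expected_utility(smoke: bool, lesion_weights: dict) -> float:
    """Expected utility of an act under a given distribution over the lesion."""
    total = 0.0
    for lesion, weight in lesion_weights.items():
        p_cancer = P_CANCER_GIVEN_LESION[lesion]
        total += weight * (p_cancer * utility(smoke, True)
                           + (1.0 - p_cancer) * utility(smoke, False))
    return total


def edt_expected_utility(smoke: bool) -> float:
    # Evidential: P(lesion | ACT = smoke) by Bayes' rule, so the act is
    # treated as evidence about the lesion.
    joint = {}
    for lesion in (True, False):
        p_smoke = P_SMOKE_GIVEN_LESION[lesion]
        joint[lesion] = prior_lesion(lesion) * (p_smoke if smoke else 1.0 - p_smoke)
    normalizer = sum(joint.values())
    return expected_utility(smoke, {k: v / normalizer for k, v in joint.items()})


def cdt_expected_utility(smoke: bool) -> float:
    # Causal: do(ACT = smoke) cuts the lesion -> act edge, so the lesion
    # keeps its prior probability whatever the act is.
    return expected_utility(smoke, {k: prior_lesion(k) for k in (True, False)})


edt_smokes = max(ACTIONS, key=edt_expected_utility)
cdt_smokes = max(ACTIONS, key=cdt_expected_utility)
print(edt_smokes)  # False
print(cdt_smokes)  # True
```

Under these numbers, conditioning on smoking raises the probability of the lesion from 0.2 to about 0.69, so naive EDT abstains, while the intervention leaves it at 0.2, so CDT smokes.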

Comparison on specific decision problems

If two decision theories are actually different, there should be some decision problem where they return different answers.

The FDT paper does a great job of distinguishing the logical-counterfactual decision theories from EDT and CDT. However, it doesn’t distinguish between different logical-counterfactual decision theories.

The following is a table that shows the disagreements between decision theories. For each pair of decision theories specified by a row and column, the decision problem named in the cell is one where the decision theories return different answers. The diagonal is blank because the decision theories are the same. The lower left triangle is blank because it repeats the entries in the mirror image (along the diagonal) spots.

Other comparisons

Here are some existing comparisons between decision theories that I found useful, along with reasons why I felt the current post was needed.