This is the fourth post of the Project Hufflepuff sequence. Previous posts:

- Introduction

- Who exactly is the Rationality Community? (i.e. who is Project Hufflepuff trying to help)

- Straw Hufflepuffs and Lone Heroes

Epistemic Status: Tries to get away with making nuanced points about social reality by using cute graphics of geometric objects. All models are wrong. Some models are useful.

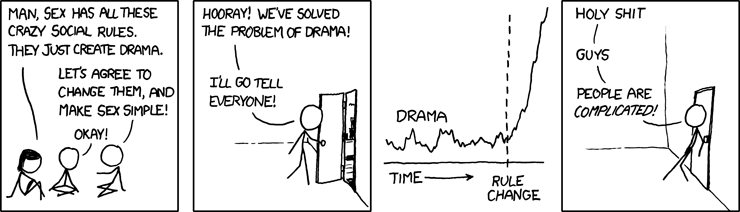

Traditionally, when nerds try to understand social systems and fix the obvious problems in them, they end up looking something like this:

Social dynamics are hard to understand with your System 2 (i.e. deliberative/logical) brain. There are a lot of subtle nuances going on, and nerds typically see the obvious stuff, maybe go one or two levels deeper than that, and miss that it's in fact 4+ levels deep and happening in real time, faster than you can deliberate. Human brains are pretty good (most of the time) at responding to these nuances intuitively. But in the rationality community, we've self-selected for a lot of people who:

- Don't really trust things that they can't understand fully with their system 2 brain.

- Tend not to be as naturally skilled at intuitive mainstream social styles.

- Are trying to accomplish things that mainstream social interactions aren't designed to accomplish (i.e. thinking deeply and clearly on a regular basis).

This post is an overview of essays that rationalist-types have written over the past several years that I think add up to a "secret sequence" exploring why social dynamics are hard, and why they are important to get right. This may be useful both for understanding some previous attempts by the rationality community to change social dynamics on purpose, and for current endeavors to improve things.

(Note: I occasionally have words in [brackets], where I think original jargon was pointing in a misleading direction and I think it's worth changing)

To start with, a word of caution:

Armchair sociology can be harmful [link] - Ozy's post is pertinent: most essays below fall into the category of "armchair sociology", i.e. attempts by nerds to understand and articulate social dynamics that they aren't actually that good at. Several times when an outsider has looked in at rationalist attempts to understand human interaction, they've said "Oh my god, this is the blind leading the blind", and often that seemed to me like a fair assessment.

I think all the essays that follow are useful and are pointing at something real. But taken individually, they're kinda like the blind men groping at the elephant, each coming away with the distinct impression that an elephant is like a snake, a tree, or a boulder, depending on which aspect they're looking at.

[Fake Edit: Ozy informs me that they were specifically warning against amateur sociology and not psychology. I think the idea still roughly applies]

i. Cultural Assumptions of Trust

Guess [Infer] Culture, Ask Culture, and Tell [Reveal] Culture (link)

(by Malcolm Ocean)

Different people have different ways of articulating their needs and asking for help. Different ways of asking require different assumptions of trust. If people bring different expectations of trust into an interaction, they may feel that that trust is being violated, which can seem rude, passive-aggressive or oppressive.

I'm listing this article, instead of numerous others about Ask/Guess/Tell, because: a) Malcolm does a good job of explaining how all the cultures work, and b) I think his presentation of Reveal culture is a clearer upgrade to Brienne's Tell culture, and I'm a bit sad it doesn't seem to have made it into the zeitgeist yet.

I also like the suggestion to call Guess Culture "Infer Culture" (implying a bit more about what skills the culture actually emphasizes).

Guess Culture Screens for Trying to Cooperate (link) (Ben Hoffman)

Rationality folk (and, more generally, nerds) tend to prefer explicit communication over implicit, and generally see Guess culture as strictly inferior to Ask culture once you've learned to assert yourself.

But there is something Guess culture does that Ask culture doesn't: it gives you evidence of how much people understand you and are trying to cooperate. Guess culture filters for people who have either invested effort into understanding your culture overall, or who are good at inferring your own wants.

Sharp Culture and Soft Culture (link) (Sam Rosen)

[WARNING: It turned out lots of people thought this meant something different from what I thought it meant. Some people thought it meant soft culture didn't involve giving people feedback or criticism at all. I don't think Soft/Sharp are totally natural clusters in the first place, and the distinction I'm interested in (as it applies to rationality culture) is how you give feedback.

(i.e. "Dude, your art sucks. It has no perspective." vs. "Oh, cool. Nice colors. For the next drawing, you might try incorporating perspective", as a simplified example)]

Somewhat orthogonal to Infer/Ask/Reveal culture is "Soft" vs "Sharp" culture. Sharp culture tends to have more biting humor, ribbing each other, and criticism. Soft culture tends to value kindness and social harmony more. Sam says that Sharp culture "values honesty more." Robby Bensinger counters in the comments: "My own experience is that sharp culture makes it more OK to be open about certain things (e.g., anger, disgust, power disparities, disagreements), but less OK to be open about other things (e.g., weakness, pain, fear, loneliness, things that are true but not funny or provocative or badass)."

Handshakes, Hi, and What's New: What's Going on With Small Talk? (Ben Hoffman)

Small talk often sounds nonsensical to literal-minded people, but it serves a fairly important function: giving people a structured path to figure out how much time/sympathy/interest they want to give each other. And even when the answer is "not much", it is still, significantly, nonzero - you regard each other as persons, not faceless strangers.

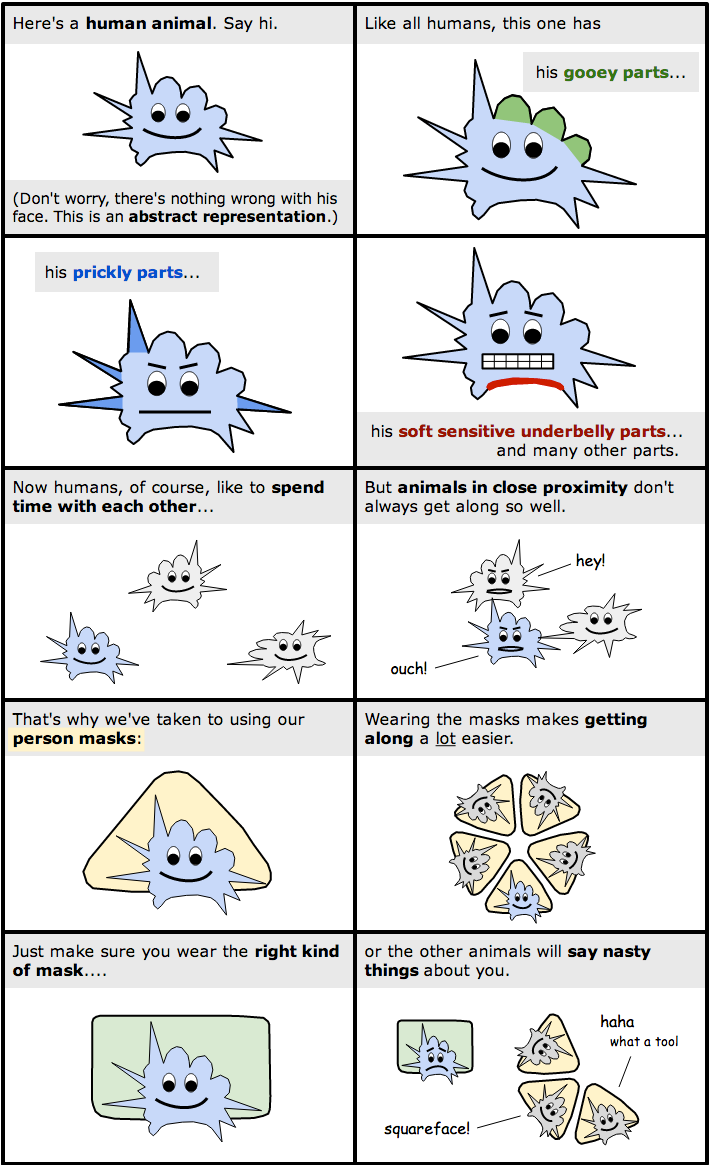

Personhood [Social Interfaces?] (Kevin Simler)

This essays gets a lot of mixed reactions, much of which I think has to do with its use of the word "Person." The essay is aimed at explaining how people end up treating each other as persons or nonpersons, without making any kind of judgement about it. This includes noting some things human tend to do that you might consider horrible.

Like many grand theories, I think it overstates it's case and ignores some places where the explanation breaks down, but I think it points at a useful concept which is summarized by this adorable graphic:

The essay uses the word "personhood". In the original context, this was useful: it gets at why cultures develop, why it matters whether you're able to demonstrate reliably, trust, etc. It helps explain outgroups and xenophobia: outsiders do not share your social norms, so you can't reliably interact with them, and it's easier to think of them as non-people than try to figure out how to have positive interactions.

But what I'm most interested in is: how can we use this to make it easier for groups with different norms to interact with each other? And for that, I think using the word "personhood" makes it way more likely to veer into judging each other for having different preferences and communication styles.

What makes a person is... arbitrary, but not fully arbitrary.

Rationalist culture tends to attract people who prefer a particular style of “social interface”, often favoring explicit communication and discussing ideas in extreme detail. There's a lot of value to those things, but they have some problems:

a) this social interface does NOT mesh well with much of the rest of the world (this is a problem if you have any goals that involve the rest of the world)

b) this social interface does not mesh equally well with all the people who are interested in, and valuable to, the rationality community.

I don't actually think it's possible to develop a set of assumptions that fit everyone's needs. But I do think it's possible to develop better tools for navigating different social contexts. I think it may be possible both to tweak sets-of-norms so that they mesh better together, or at least when they bump into each other, there's greater awareness of what's happening and people's default response is "oh, we seem to have different preferences, let's figure out how

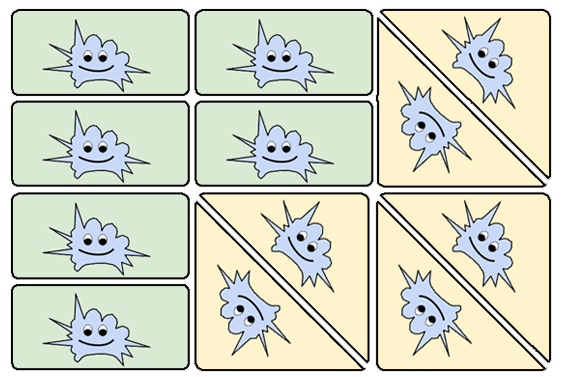

Maybe we can end up with something that looks kinda like this:

Against Being Against or For Tell Culture (Brienne Yudkowsky)

Having said a bunch of things about different cultural interfaces, I think this post by Brienne is really important, and highlights the end goal of all of this.

"Cultures" are a crutch. They are there to help you get your bearings. They're better than nothing. But they are not a substitute for actually having the skills needed to navigate arbitrary social situations as they come up so you can achieve whatever it is you want to achieve.

To master communication, you can't just be like, "I prefer Tell Culture, which is better than Guess Culture, so my disabilities in Guess Culture are therefore justified." Justified shmustified, you're still missing an arm.

My advice to you - my request of you, even - if you find yourself fueling these debates [about which culture is better], is to (for the love of god) move on. If you've already applied cognitive first aid, you've created an affordance for further advancement. Using even more tourniquets doesn't help.

ii. Game Theory, Recursion, Trust

(or, "Social dynamics are complicated, you are not getting away with the things you think you are getting away with, stop trying to be clever, manipulative, act-utilitarian or naive-consequentialist without actually understanding what is going on")

Grokking Newcomb's Problem and Deserving Trust (Andrew Critch)

Critch argues that it is not just "morally wrong", but an intellectual mistake, to violate someone’s trust (even when you don’t expect any repercussions in the future).

When someone decides whether to trust you (say, by giving you a huge opportunity) on the expectation that you’ll refrain from exploiting them, they’ve already run a low-grade simulation of you in their imagination. And the thing is, you don’t know whether you’re in that simulation or not when you decide whether to repay them.

Some people argue, “but I can tell that I’m a conscious being, and they aren’t a literal superintelligent AI, they’re just a human. They can’t possibly be simulating me in such high fidelity. I must be real.” This is true. But their simulation of you is not based on your thoughts; it’s based on your actions, and those are really hard to fake.

One way to think about it, not expounded on in the article: yes, if you pause to think about it, you can notice that you’re conscious and probably not being simulated in their imagination. But by the time you notice that, it’s too late. People build up models of each other all the time, based on very subtle cues such as how fast you respond to something. Conscious you knows that you’re conscious. But their decision of whether to trust you was based on the half-second it took for unconscious you to reply to questions like “Hey, do you think you could handle Project X while I’m away?”

The best way to convince people you’re trustworthy is to actually be trustworthy.

You May Not Believe In Guess [Infer] Culture But It Believes In You (Scott Alexander)

This is short enough to just include the whole thing:

Consider an "ask culture" where employees consider themselves totally allowed to say "no" without repercussions. The boss would prefer people work unpaid overtime so ey gets more work done without having to pay anything, so ey asks everyone. Most people say no, because they hate unpaid overtime. The only people who agree will be those who really love the company or their job - they end up looking really good. More and more workers realize the value of lying and agreeing to work unpaid overtime so the boss thinks they really love the company. Eventually, the few workers who continue refusing look really bad, like they're the only ones who aren't team players, and they grudgingly accept.

Only now the boss notices that the employees hate their jobs and hate the boss. The boss decides to only ask employees if they will work unpaid overtime when it's absolutely necessary. The ask culture has become a guess culture.

How this applies to friendship is left as an exercise for the reader.

The Social Substrate (Lahwran)

A fairly in-depth look into how common knowledge, signaling, Newcomb-like problems and recursive modeling of each other interact to produce "regular social interaction."

I think there's a lot of interesting stuff here - I'm not sure if it's exactly accurate but it points in directions that seem useful. But I actually think the most important takeaway is the warning at the beginning:

WARNING: An easy instinct, on learning these things, is to try to become more complicated yourself, to deal with the complicated territory. However, my primary conclusion is "simplify, simplify, simplify": try to make fewer decisions that depend on other people's state of mind. You can see more about why and how in the posts in the "Related" section, at the bottom.

When you're trying to make decisions about people, you're reading a lot of subtle cues off them to get a sense of how you feel about them. When you [generic person you, not necessarily you in particular] can tell someone is making complex decisions based on game theory and trying to model all of this explicitly, it a) often comes across as a bit off, and b) even if it doesn't, you still have to invest a lot of cognitive resources figuring out how they are modeling things and whether they are actually doing a good job or missing key insights or subtle cues. The result can be draining, and it can produce a general response of "ugh, something about this feels untrustworthy."

Whereas when people are able to cache this knowledge down at a System 1 level, they're able to execute a simpler algorithm that looks more like "just try to be a good, trustworthy person", which others can easily read off their facial expression, and which reduces the overall cognitive burden.

System 1 and System 2 Morality (Sophie Grouchy)

There’s some confusion over what “moral” means, because there are two kinds of morality:

- System 1 morality is noticing-in-realtime when people need help, or when you’re being an asshole, and then doing something about it.

- System 2 morality is when you have a complex problem and a lot of time to think about it.

System 1 moralists will pay back Parfit’s Hitchhiker because doing otherwise would be being a jerk. System 2 moralists invent Timeless [Functional?] decision theory.

You want a lot of people with System 2 morality in the world, trying to fix complex problems. You want people with System 1 morality in your social circle.

The person who wrote this post eventually left the rationality community, in part out of frustration with people constantly violating small boundaries that seemed pretty obvious (things in the vein of “if you’re going to be 2 hours late, text me so I don’t have to sit around waiting for you”).

Final Remarks

I want to reiterate - all models are wrong. Some models are useful. The most important takeaway from this is not that any particular one of these perspectives is true, but that social dynamics has a lot of stuff going on that is more complicated than you're naively imagining, and that this stuff is important enough to put the time into getting right.

I would like to register disagreement not with the technical truth value of this statement, but with the overall sentiment that I believe this statement (and your essay as a whole) is getting at: Social dynamics is really complicated and nuanced, and not getting the difficult parts right results in a lot of problems and overall suffering.

My belief is that just getting the basics right is usually enough to avoid most of the problems. I feel that a lot of the problems the rationality community has with social dynamics are basically due to a failure to simulate other minds (or simply a choice not to). For example, constantly violating small boundaries, like being 2 hours late to an appointment. In what world does this behavior count as rational? If you predict that you won't be able to make an appointment at a given time, why make the appointment at all? If something genuinely unexpected occurs that delays you, and you predict that the other person would be upset if you didn't inform them, why not tell them? This behavior sounds to me more like deontologically following first-order selfishness: the mistaken belief that rationality is defined as "being first-order selfish all the time."

When our parents first teach us Basic Morality 101, they often say things like "Put yourself in that person's shoes", or "Do unto others" and things like that. And how we usually learn to interpret that is, "imagine yourself as that other person, feeling what they're feeling and so on, and don't do things that you imagine would make you unhappy in their situation." In other words, simulate their experience, and base your actions upon the results of that simulation (TDT?). But then rationalists sometimes claim that socially skilled people are doing a bunch of fast System 1 intuitive calculations that are hyper-complex and difficult to evaluate with System 2 and so on, when it's not really the case. Simulating another mind, even if you're just approximating their mind as your mind but with the environmental details changed, isn't that hard to do (though I imagine some might argue with that), because at the very least most of us can recall a memory of a similar situation. And if you can't simulate it at all, then you're probably going to have a tough time being rational in general, because models are what it's all about. But you don't need to be a neuroscientist to run these kinds of simulations.

But I haven't seen that many examples of social dynamics that are 4+ levels deep. I imagine that even showing that such levels exist would be really hard. I think it's more likely that nerds are not even getting the "obvious" stuff right. I attribute this tendency to nerds' desire to understand the world symbolically or through abstract theories when doing so would be much harder than just making a model of the situation in your head by predicting things you've already observed to happen. And often, nerds won't do the obvious stuff because they don't see why it's strictly necessary within the abstract model they have available. The things nerds typically get flak for in social situations, such as (not an exhaustive list) shyness, focus on extreme details or highly specific topics, immodesty (in the sense of speaking condescendingly or matter-of-factly), bad hygiene, and eccentric hobbies or entertainment, are either irrelevant or non-problematic in the presence of other nerds, or can be easily fixed without going 4 levels deep into the intricacies of social dynamics. This is probably an issue of being too much of a Cartesian rationalist when more empiricism is required.

It's true though, that some of us would like to move higher up in the world which requires less eccentricity or weirdness than is allowable in our closest social circles. In this case, a better understanding of social dynamics would be helpful. But I think that in the case of trying to manage and organize within the rationality community, we're probably overcomplicating the task a bit.

Thanks. I'm mulling this over, but I think my off-the-cuff response is:

a) I agree a lot of nerds mostly need to get the basics right, and to bother modeling other minds at all.

b) The thing this post was a reaction to was naive attempts by rationalists to change culture without understanding what was going on. The most salient example was highlighted in Malcolm's post (he's quoting in turn from another post without attribution):

"As Tell Culture was becoming more popular in Berkeley, due to people mostly being excited about the bit in the name, it felt a...