Motivation:

Before the Pandemic of 2020, I had a number of constructive discussions with several neuroscientists including Konrad Körding, a computational neuroscientist and pioneer exploring connections between deep learning and human learning, and Alex Gomez-Marin, an expert on behavioural neuroscience, on the hypothetical boundary between Human and Machine Learning. One promising avenue for making progress along these lines appeared to be Kolmogorov’s theory of Algorithmic Probability [4] which Alexander Kolpakov(Sasha) and myself recently used to probe this boundary, motivated in large part by open problems in Machine Learning for Cryptography [1].

However, more progress appears to be possible based on the analyses of Marcus Hutter and Shane Legg who established that Algorithmic Probability defines the epistemic limit of Machine Learning [3]. In the hopes of developing more powerful tools in Algorithmic Probability for probing the epistemic limits of Machine Learning, Sasha and myself propose cash prizes for deriving two mathematical laws using Algorithmic Probability.

Erdős Problems in Algorithmic Probability:

- Benford's Law, which Terrence Tao himself admitted lacks a satisfactory explanation: https://terrytao.wordpress.com/2009/07/03/benfords-law-zipfs-law-and-the-pareto-distribution/

- Atle Selberg's Identity, which proved crucial in Paul Erdős' and Atle Selberg's elementary proof of the Prime Number Theorem:

which is derived using elementary number theory in [5] where , though its true meaning remains a mathematical mystery [6,7].

Submission guidelines and Monetary Prizes:

The challenge is named in honour of Paul Erdős whose probabilistic method has provided Sasha and myself with a number of important insights, and the cash prize is 1000.00 USD for the best submission under 30 pages in each category. The main evaluation criteria are clarity, correctness and concision.

Submissions typeset in LaTeX must be sent to the tournament-specific email of Alexander Kolpakov, Managing Editor of the Journal of Experimental Mathematics, before September 17, 2024: wignerweyl@proton.me

Prizes shall be awarded on January 1st, 2025.

A model for submissions:

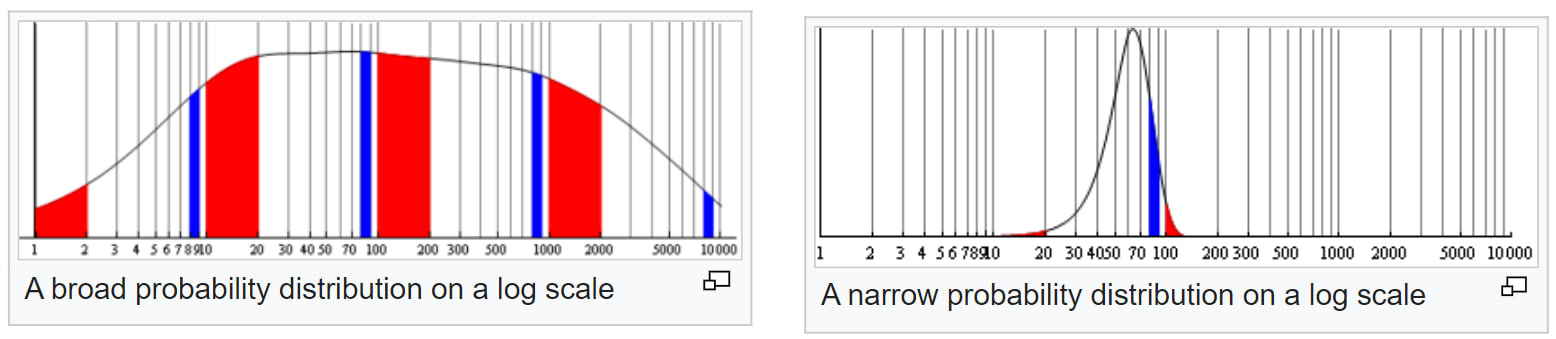

As a standard model for submissions, we recommend Yuri Manin's derivation of Zipf's Law [9] as a corollary of Levin's Universal Distribution via the Manin-Marcolli theory of error-correcting codes [10]. The Manin-Marcolli theory for deriving Zipf's Law appears to have important consequences for generative modelling in both humans and machines.

References:

- Kolpakov, Alexander & Rocke, Aidan. On the impossibility of discovering a formula for primes using AI. arXiv: 2308.10817. 2023.

- A. N. Kolmogorov. Combinatorial foundations of information theory and the calculus of probabilities. Russian Math. Surveys, 1983.

- Marcus Hutter. A theory of universal intelligence based on algorithmic complexity.

2000. https://arxiv.org/abs/cs/0004001. - P. Grünwald and P. Vitanyí. Algorithmic information theory. arXiv:0809.2754, 2008.

- Selberg, Atle (1949), "An elementary proof of the prime-number theorem", Ann. of Math., 2, 50 (2): 305–313, doi:10.2307/1969455

- Basj (https://mathoverflow.net/users/85239/basj), Ideas in the elementary proof of the prime number theorem (Selberg / Erdős), URL (version: 2017-01-16): https://mathoverflow.net/q/259698

- Wikipedia contributors. "Selberg's identity." Wikipedia, The Free Encyclopedia. Wikipedia, The Free Encyclopedia, 21 Aug. 2023. Web. 11 Sep. 2023.

- Tao, Terrence. Benford’s law, Zipf’s law, and the Pareto distribution. What's new. 2009. https://terrytao.wordpress.com/2009/07/03/benfords-law-zipfs-law-and-the-pareto-distribution/

- Manin, Yu. I.. “Zipf's law and L. Levin's probability distributions.” ArXiv abs/1301.0427 . 2013.

- Manin, Yu. I. and Matilde Marcolli. “Kolmogorov complexity and the asymptotic bound for error-correcting codes.” ArXiv abs/1203.0653 . 2012.

You claim that Terry Tao says that Benford's law lack's a satisfactory explanation. That is not correct. Terry Tao actually gave an explanation for Benford's law. And even if he didn't, if you are moderately familiar with mathematics, an explanation for Benford's law would be obvious or at least a standard undergraduate exercise.

Let X be a random variable that is uniformly distributed on the interval [m⋅ln(10),n⋅ln(10)] for some m<n. Let Y denote the first digit of eX. Then show that P(Y=k)=log10(k+1k).

For all n, let Xn be a uniformly distributed random variable on the interval [an,bn]. Let Yn denote the first digit of eXn. Suppose that limn→∞bn−an=+∞. Prove that limn→∞P(Yn=k)=log10(k+1k).

Terry Tao in that blog post lists the characteristics that imply Zipf's law here:

"These laws govern the asymptotic distribution of many statistics Q which

Terry Tao merely stated that Benford's law cannot be proven the same way mathematical theorems are proven; "Being empirically observed phenomena rather than abstract mathematical facts, Benford’s law, Zipf’s law, and the Pareto distribution cannot be “proved” the same way a mathematical theorem can be proved. However, one can still support these laws mathematically in a number of ways, for instance showing how these laws are compatible with each other, and with other plausible hypotheses on the source of the data."