This is part of a weekly reading group on Nick Bostrom's book, Superintelligence. For more information about the group, and an index of posts so far, see the announcement post. For the schedule of future topics, see MIRI's reading guide.

Welcome. This week we discuss the third section in the reading guide, AI & Whole Brain Emulation. This is about two possible routes to the development of superintelligence: the route of developing intelligent algorithms by hand, and the route of replicating a human brain in great detail.

This post summarizes the section, and offers a few relevant notes, and ideas for further investigation. My own thoughts and questions for discussion are in the comments.

There is no need to proceed in order through this post. Feel free to jump straight to the discussion. Where applicable, page numbers indicate the rough part of the chapter that is most related (not necessarily that the chapter is being cited for the specific claim).

Reading: “Artificial intelligence” and “Whole brain emulation” from Chapter 2 (p22-36)

Summary

Intro

- Superintelligence is defined as 'any intellect that greatly exceeds the cognitive performance of humans in virtually all domains of interest'

- There are several plausible routes to the arrival of a superintelligence: artificial intelligence, whole brain emulation, biological cognition, brain-computer interfaces, and networks and organizations.

- Having multiple possible paths to superintelligence makes it more likely that we will get there somehow.

- A human-level artificial intelligence would probably have learning, uncertainty, and concept formation as central features.

- Evolution produced human-level intelligence. This means it is possible, but it is unclear how much it says about the effort required.

- Humans could perhaps develop human-level artificial intelligence by just replicating a similar evolutionary process virtually. After a quick calculation, this appears to be too expensive to be feasible for a century; however, it might be made more efficient.

- Human-level AI might be developed by copying the human brain to various degrees. If the copying is very close, the resulting agent would be a 'whole brain emulation', which we'll discuss shortly. If the copying is only of a few key insights about brains, the resulting AI might be very unlike humans.

- AI might iteratively improve itself from a meagre beginning. We'll examine this idea later. Some definitions for discussing this:

  - 'Seed AI': a modest AI which can bootstrap into an impressive AI by improving its own architecture.

  - 'Recursive self-improvement': the envisaged process of AI (perhaps a seed AI) iteratively improving itself.

  - 'Intelligence explosion': a hypothesized event in which an AI rapidly improves from 'relatively modest' to superhuman level (usually imagined to be as a result of recursive self-improvement).

- The possibility of an intelligence explosion suggests we might have modest AI, then suddenly and surprisingly have super-human AI.

- An AI mind might generally be very different from a human mind.
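The 'quick calculation' mentioned in the evolution bullet above can be given a rough shape in code. This is only a sketch of the style of reasoning, not Bostrom's actual estimate: the required compute range, the starting hardware figure, and the doubling time below are all illustrative assumptions rather than numbers taken from this post, and the function name is made up for the example.

```python
import math


def years_to_close_gap(required_flops, available_flops, doubling_time_years):
    """Years of steady exponential hardware growth needed to reach required_flops.

    Assumes available compute doubles every `doubling_time_years` and that
    nothing else (algorithms, budgets) changes in the meantime.
    """
    if required_flops <= available_flops:
        return 0.0
    doublings = math.log2(required_flops / available_flops)
    return doublings * doubling_time_years


# ASSUMPTIONS (illustrative placeholders, not figures from this post):
AVAILABLE_FLOPS = 1e17       # order of magnitude of a large supercomputer
DOUBLING_TIME_YEARS = 1.5    # a Moore's-law-style doubling time
REQUIRED_RANGE = (1e31, 1e44)  # sustained FLOPS to rerun evolution in about a year

for required in REQUIRED_RANGE:
    years = years_to_close_gap(required, AVAILABLE_FLOPS, DOUBLING_TIME_YEARS)
    print(f"required {required:.0e} FLOPS: about {years:.0f} years of hardware growth")
```

The point is the sensitivity: under these assumptions, each additional factor of 1,000 in required compute adds about 15 years of waiting, so the wide uncertainty in the estimate translates into decades of difference.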

Whole brain emulation

- Whole brain emulation (WBE or 'uploading') involves scanning a human brain in a lot of detail, then making a computer model of the relevant structures in the brain.

- Three steps are needed for uploading: sufficiently detailed scanning, ability to process the scans into a model of the brain, and enough hardware to run the model. These correspond to three required technologies: scanning, translation (or interpreting images into models), and simulation (or hardware). These technologies appear attainable through incremental progress, by very roughly mid-century.

- This process might produce something much like the original person, in terms of mental characteristics. However the copies could also have lower fidelity. For instance, they might be humanlike instead of copies of specific humans, or they may only be humanlike in being able to do some tasks humans do, while being alien in other regards.

Notes

- What routes to human-level AI do people think are most likely?

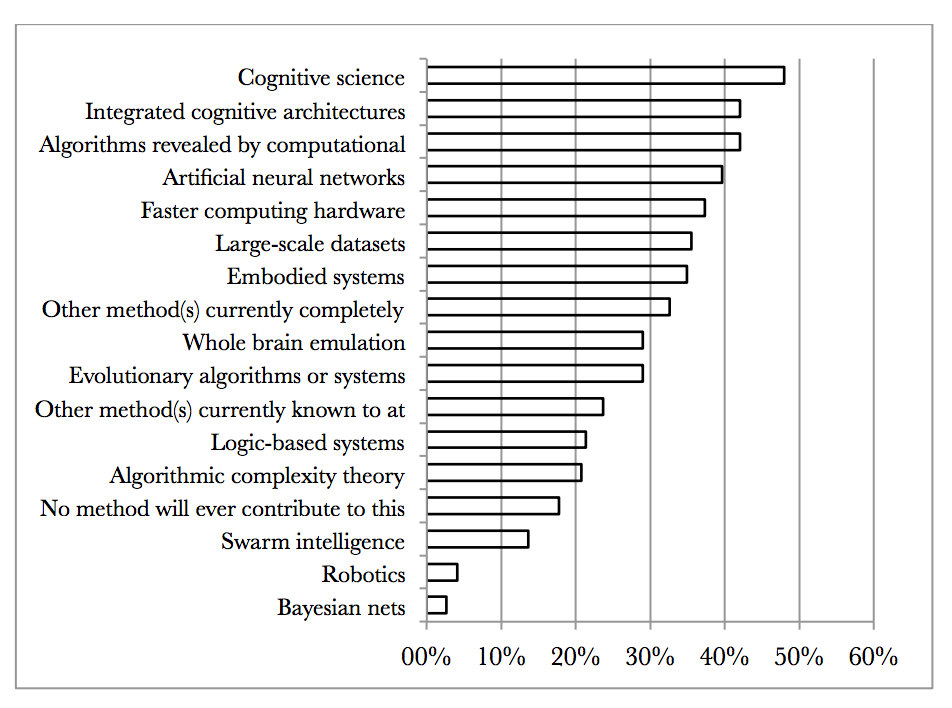

Bostrom and Müller's survey asked participants to compare various methods for producing synthetic and biologically inspired AI. They asked, 'In your opinion, what are the research approaches that might contribute the most to the development of such HLMI [high-level machine intelligence]?' Selection was from a list, with more than one selection possible. They report that the responses were very similar for the different groups surveyed, except that whole brain emulation got 0% in the TOP100 group (100 most cited authors in AI) but 46% in the AGI group (participants at Artificial General Intelligence conferences). Note that they are only asking about synthetic AI and brain emulations, not the other paths to superintelligence we will discuss next week.

- How different might AI minds be?

Omohundro suggests advanced AIs will tend to have important instrumental goals in common, such as the desire to accumulate resources and the desire to not be killed.

- Anthropic reasoning

‘We must avoid the error of inferring, from the fact that intelligent life evolved on Earth, that the evolutionary processes involved had a reasonably high prior probability of producing intelligence’ (p27)

Whether such inferences are valid is a topic of contention. For a book-length overview of the question, see Bostrom’s Anthropic Bias. I’ve written shorter (Ch 2) and even shorter summaries, which link to other relevant material. The Doomsday Argument and Sleeping Beauty Problem are closely related.

- More detail on the brain emulation scheme

Whole Brain Emulation: A Roadmap is an extensive source on this, written in 2008. If that's a bit too much detail, Anders Sandberg (an author of the Roadmap) summarises in an entertaining (and much shorter) talk. More recently, Anders tried to predict when whole brain emulation would be feasible with a statistical model. Randal Koene and Ken Hayworth both recently spoke to Luke Muehlhauser about the Roadmap and what research projects would help with brain emulation now.

- Levels of detail

As you may predict, the feasibility of brain emulation is not universally agreed upon. One contentious point is the degree of detail needed to emulate a human brain. For instance, you might just need the connections between neurons and some basic neuron models, or you might need to model the states of different membranes, or the concentrations of neurotransmitters. The Whole Brain Emulation Roadmap lists some possible levels of detail in figure 2 (the yellow ones were considered most plausible). Physicist Richard Jones argues that simulation of the molecular level would be needed, and that the project is infeasible.

- Other problems with whole brain emulation

Sandberg considers many potential impediments here.

- Order matters for brain emulation technologies (scanning, hardware, and modeling)

Bostrom points out that this order matters for how much warning we receive that brain emulations are about to arrive (p35). Order might also matter a lot to the social implications of brain emulations. Robin Hanson discusses this briefly here and in this talk (starting at 30:50), and this paper also discusses the issue.

- What would happen after brain emulations were developed?

We will look more at this in Chapter 11 (weeks 17-19) as well as perhaps earlier, including what a brain emulation society might look like, how brain emulations might lead to superintelligence, and whether any of this is good.

- Scanning (p30-36)

‘With a scanning tunneling microscope it is possible to ‘see’ individual atoms, which is a far higher resolution than needed...microscopy technology would need not just sufficient resolution but also sufficient throughput.’

Here are some atoms, neurons, and neuronal activity in a living larval zebrafish, and videos of various neural events.

Array tomography of mouse somatosensory cortex from Smithlab.

A molecule made from eight cesium and eight iodine atoms (from here).

- Efforts to map connections between neurons

Here is a 5m video about recent efforts, with many nice pictures. If you enjoy coloring in, you can take part in a gamified project to help map the brain's neural connections! Or you can just look at the pictures they made. -

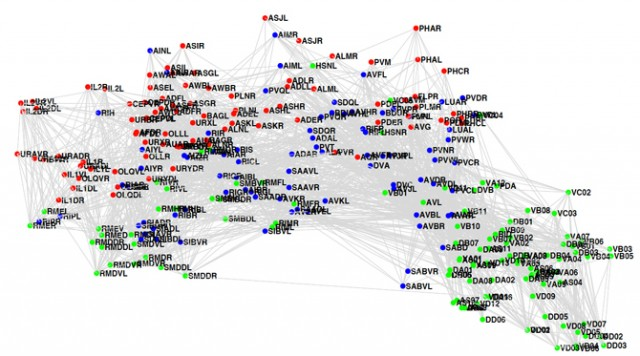

The C. elegans connectome (p34-35)

As Bostrom mentions, we already know how all of C. elegans’ neurons are connected. Here's a picture of it (via Sebastian Seung):

In-depth investigations

If you are particularly interested in these topics, and want to do further research, these are a few plausible directions, some taken from Luke Muehlhauser's list:

- Produce a better - or merely somewhat independent - estimate of how much computing power it would take to rerun evolution artificially. (p25-6)

- How powerful is evolution for finding things like human-level intelligence? (You'll probably need a better metric than 'power'). What are its strengths and weaknesses compared to human researchers?

- Conduct a more thorough investigation into the approaches to AI that are likely to lead to human-level intelligence, for instance by interviewing AI researchers in more depth about their opinions on the question.

- Measure relevant progress in neuroscience, so that trends can be extrapolated to neuroscience-inspired AI. Finding good metrics seems to be hard here.

- e.g. How is microscopy progressing? It’s harder to get a relevant measure than you might think, because (as noted p31-33) high enough resolution is already feasible, yet throughput is low and there are other complications.

- Randal Koene suggests a number of technical research projects that would forward whole brain emulation (fifth question).
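To see why throughput rather than resolution is the awkward metric in the microscopy question above, here is a back-of-envelope sketch. Every number in it (brain volume, voxel size, imaging rate) is an illustrative assumption chosen for the example, not a figure from the book or the Roadmap, and the function names are hypothetical.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def voxel_count(volume_mm3, voxel_nm):
    """Number of voxels needed to image a volume at a given voxel size.

    `voxel_nm` is the (x, y, z) voxel edge length in nanometres.
    """
    nm3_per_mm3 = 1e18  # (1e6 nm per mm) cubed
    vx, vy, vz = voxel_nm
    return volume_mm3 * nm3_per_mm3 / (vx * vy * vz)


def scan_years(volume_mm3, voxel_nm, voxels_per_second, n_machines=1):
    """Years needed to image the volume, with machines running in parallel."""
    seconds = voxel_count(volume_mm3, voxel_nm) / (voxels_per_second * n_machines)
    return seconds / SECONDS_PER_YEAR


# ASSUMPTIONS (illustrative placeholders only):
BRAIN_VOLUME_MM3 = 1.2e6    # roughly 1.2 litres
VOXEL_NM = (5, 5, 50)       # fine in-plane resolution, thicker slices
VOXELS_PER_SECOND = 1e7     # imaging rate of a single hypothetical microscope

for machines in (1, 1_000, 1_000_000):
    years = scan_years(BRAIN_VOLUME_MM3, VOXEL_NM, VOXELS_PER_SECOND, machines)
    print(f"{machines:>9} machines: about {years:,.1f} years")
```

Under these assumptions a single machine would take millions of years, which is why a useful progress metric probably needs to track something like voxels imaged per second per dollar, not just the smallest feature a microscope can resolve.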

How to proceed

This has been a collection of notes on the chapter. The most important part of the reading group though is discussion, which is in the comments section. I pose some questions for you there, and I invite you to add your own. Please remember that this group contains a variety of levels of expertise: if a line of discussion seems too basic or too incomprehensible, look around for one that suits you better!

Next week, we will talk about other paths to the development of superintelligence: biological cognition, brain-computer interfaces, and organizations. To prepare, read Biological Cognition and the rest of Chapter 2. The discussion will go live at 6pm Pacific time next Monday 6 October. Sign up to be notified here.

Hi everyone!

I'm Tom. I attended UC Berkeley a number of years ago, double-majored in math and philosophy, graduated magna cum laude, and wrote my Honors thesis on the "mind-body" problem, including issues that were motivated by my parallel interest in AI, which I have been passionately interested in all my life.

It has been my conviction since I was a teenager that consciousness is the most interesting mystery to study, and that understanding how it is realized in the brain -- or emerges therefrom, or whatever it turns out to be -- will also almost certainly give us the insight to achieve the other main goal of my life: building a mind.

The converse is also true. If we learn how to do AI, not GOFAI with no awareness, but AI with full sentience, we will almost certainly know how the brain does it. Solving either one will solve the other.

AI can be thought of as one way to "breadboard" our ideas about biological information processing.

But it is more than that to me. It is an end in itself, and opens up possibilities so exciting, so profound, that achieving sentient AI would be equal, or superior, to the experience (and possible consequences) of meeting an advanced extraterrestrial civilization.

Further, I think that solving the biological mind body problem, or doing AI, is something within reach. I think it is the concepts that are lacking, not better processors, or finer grained fMRIs, or better images of axon hillock reconformation during exocytosis.

If we think hard, really really hard, I think we can solve these things with the puzzle pieces we have now (just maybe). I often feel that everything we need is on the table, and we just need to learn how to see it with fresh eyes, order it, and put it together. I doubt a "new discovery", whether in physics, cognitive neurobiology, philosophy of mind, comp-sci, etc., will make the design we seek pop out for us.

I think it is up to us now to think, conceptualize, integrate, and interdisciplinarily cross-pollinate. The answer, or at least major pieces of it, is I think available and sitting there, waiting to be uncovered.

Other than that, since graduation I have worked as a software developer (wrote my obligatory 20 million lines of code, in a smattering of 6 or 7 languages, so I know what that is like), and many other things, but am currently unaffiliated, and spend 70 hours a week in freelance research. Oh yes, I have done some writing (been published, but nothing too flashy).

Right now, I work as a freelance videographer, photographer, and editor: corporate documentaries and training videos, anything you can capture with a nice 1080 HDV camcorder or a Nikon still.

Which brings me to my YouTube channel, which is under construction. I am going to put up a couple of "courses": organized, rigorous topic sequences of presentations on the history of AI and, in particular, my best current ideas (I have some I think are quite promising) on how to move in the right direction toward achieving sentience.

I got the idea for the video series from watching Leonard Susskind's "theoretical minimum" internet lecture series on aspects of physics.

This will be what I consider to be the essential theoretical minimum (with lessons from history), plus the new insights I am in the process of trying to create, cross research, and critique, into some aspects of the approach to artificial sentience that I think I understand particularly well, and can help by promoting discussion of.

I will clearly delineate pure intellectual history from my own ideas throughout the videos, so it will be a fervent attempt to be honest. Then I will also just get some new ideas out there, explaining how they are the same as, different from, or extensions of accepted and plausible principles and strategies, but with some new views... so others can critique them, reject them, or build on them, or whatever.

The ideas that are my own syntheses, are quite subtle in some cases, and I am excited about using the higher "speaker-to-audience semiotic bandwidth" of the video format, for communicating these subtleties. Picture-in-picture, graphics, even occasional video clips from film and interviews, plus the ubiquitous whiteboard, all can be used together to help get across difficult or unusual ideas. I am looking forward to leveraging that and experimenting with the capabilities of the format, for exhibiting multifaceted, highly interconnected or unfamiliar ideas.

So, for now, I am enmeshed in all the research I can find that helps me investigate what I think might be my contribution. If I fail, I might as well fail by daring greatly, to steal from Churchill or whomever it was (Roosevelt, maybe?). But I am fairly smart, and have examined these ideas for many years. I might be on to one or two pieces of what I think is the puzzle. So wish me luck, fellow AI-ers.

Besides, "failing" is not failing; it is testing your best ideas. The only way to REALLY fail, is to do nothing, or to not put forth your best effort, especially if you have an inkling that you might have thought of something valuable enough to express.

Oh, finally, people are telling where they live. I live in Phoenix, highly dislike being here, and will be moving to California again in the not too distant future. I ended up here because I was helping out an elderly relative, who is pretty stable now, so I will be looking for a climate and intellectual environment more to my liking, before long.

okay --- I'll be talking with you all, for the next few months in here... cheers. Maybe we can change the world. And hearty thanks for this forum, and especially all the added resource links.