This is a really, really good example to explain observation selection effects and anthropics. It's easier to understand than the standard "the fish you observe in a pond corresponds to the size of the holes in your net" example, and it demonstrates how anthropic reasoning can lead to counter-intuitive conclusions. Thanks!

It is a useful fable, but in the context presented it seemed unlikely, given the attitudes of Bomber Harris.

Hmm? Please elaborate.

Harris was notoriously bad at accepting advice or criticism that contradicted his prior beliefs. On many other occasions when he was contradicted (e.g. on the utility of area bombing, on specific targets, on the use of his bombers by Coastal Command), he reacted poorly: ignoring reports, stonewalling the other services or his superiors, and generally refusing to change Bomber Command policies. This would be doubly true in this case because Blackett worked for the Admiralty and was disagreeing with a previous Bomber Command study. It's trebly true because he and Blackett had clashed repeatedly during the war: Blackett (and Henry Tizard) had (correctly) argued that Bomber Command's policy of area bombing was extremely ineffective and that some of its resources should be shifted to the other services, which Harris strongly disagreed with.

The story doesn't actually say "and they put armor where Blackett suggested and everyone lived happily ever after", though.

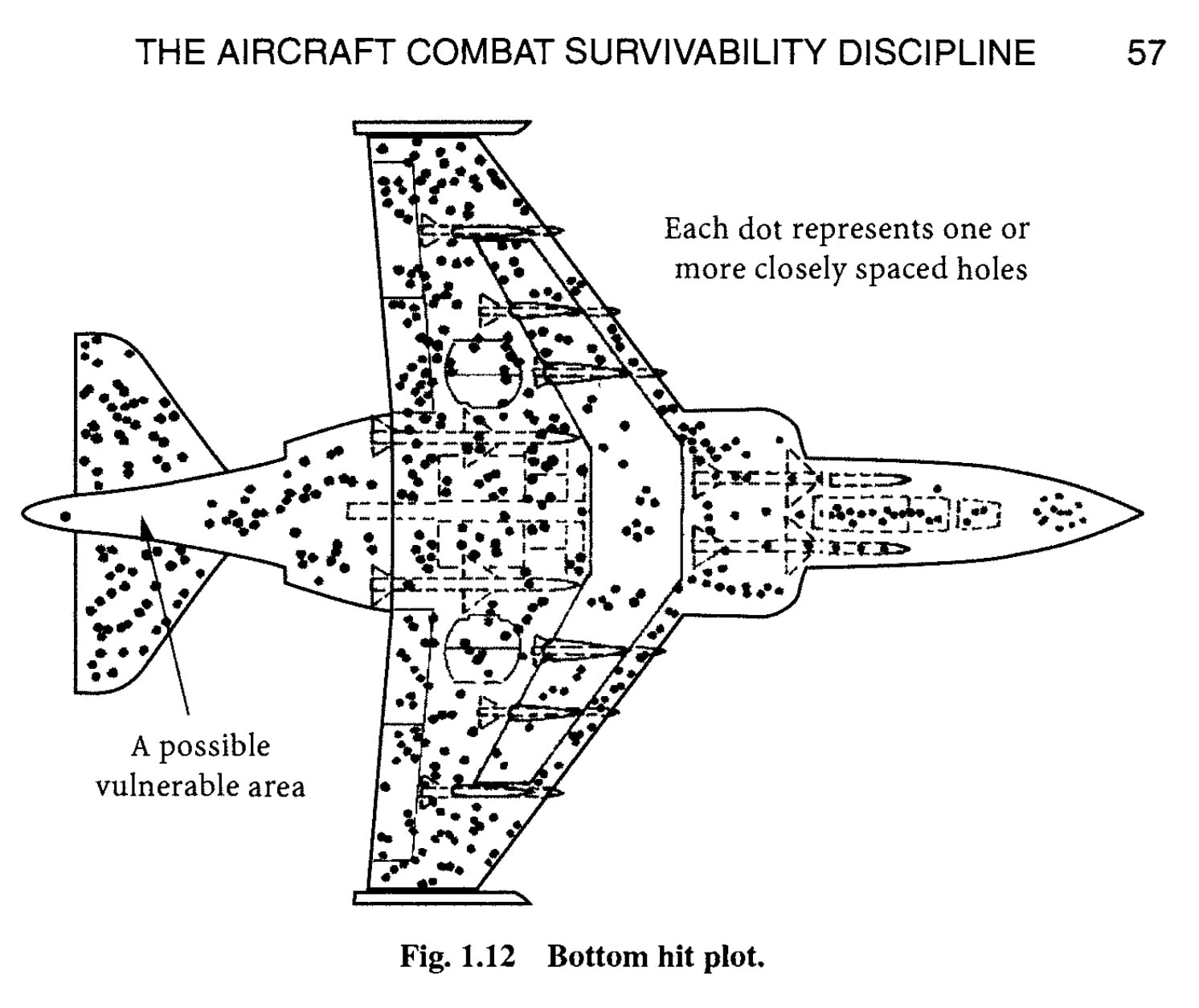

Side note: the original 'chickenpox bomber' illustration (using the McDonnell Douglas F-4 Phantom II) first appeared in Robert Ball's 1985 book "The Fundamentals of Aircraft Combat Survivability Analysis and Design" and was later refined in the 2nd edition from 2003. He never mentions Wald, Wallis or the SRG anywhere in the book, so I'm likewise convinced that the "mathematician outsmarts the military" story is pretty much BS.

I had heard of the survey of damaged planes which came up with the "counterintuitive" idea of adding armor to the parts that were found undamaged, but this is the first time I've heard of another group coming to the opposite conclusion.

I had heard of the survey of damaged planes which came up with the "counterintuitive" idea of adding armor to the parts that were found undamaged

Really? Was there a counterintuitive point that they had, or is this just an example of crazy?

Blackett suggested that, instead, the armour be placed in the areas which were completely untouched by damage in the bombers which returned. He reasoned that the survey was biased, since it only included aircraft that returned to Britain. The untouched areas of returning aircraft were probably vital areas, which, if hit, would result in the loss of the aircraft.

Seems (at least in retrospect) that its obviousness would be proportional to the percentage of bombers that didn't make it back at all.
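A toy expected-value model bears this out. Assume, purely hypothetically, that every bomber takes exactly one hit, that a share `vital_share` of hits land in a vital area, and that a vital hit downs the aircraft with probability `kill_prob` while hits elsewhere are never fatal. The share of vital-area hits seen on the survivors then falls further below the true share as the loss rate (`vital_share * kill_prob`) rises:

```python
def vital_share_among_survivors(vital_share: float, kill_prob: float) -> float:
    """Share of returning single-hit aircraft whose hit was in the vital area."""
    survived_vital = vital_share * (1.0 - kill_prob)  # vital hit, but made it home
    survived_other = 1.0 - vital_share  # hits elsewhere are assumed never fatal
    return survived_vital / (survived_vital + survived_other)


if __name__ == "__main__":
    vital_share = 0.2  # hypothetical: one hit in five lands in the vital area
    for kill_prob in (0.0, 0.25, 0.5, 0.75, 1.0):
        loss_rate = vital_share * kill_prob
        seen = vital_share_among_survivors(vital_share, kill_prob)
        print(f"loss rate {loss_rate:4.0%}: vital hits on survivors {seen:5.1%} "
              f"(true share {vital_share:.0%})")
```

With these made-up numbers, at a 20% loss rate (every vital hit fatal) the survivors show no vital-area hits at all, even though one hit in five landed there.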

In retrospect, sure. But regardless of how many bombers get shot down, it takes a certain clarity of mind to look at the survivors and realize that the damage they've received isn't necessarily representative of the damage the non-survivors received.

On consideration, I suspect the inverse error gets made quite a lot when analyzing failure modes in situations where failure renders an instance unavailable for further analysis.

You're right, of course: I'd heard the story without working out the solution myself, and my mind leapt to the "obvious" solution.

I suspect the inverse error gets made quite a lot when analyzing failure modes in situations where failure renders an instance unavailable for further analysis.

s/inverse error/identical error? I'm having trouble imagining the inverse error, unless it's leaning too hard on boolean, non-probabilistic anthropic reasoning and ignoring real damage distributions.

I meant the error that was (sloppily phrased) the inverse of "look at the survivors and realize that the damage they've received isn't necessarily representative of the damage the non-survivors received". So, yes, the identical error to the one we've been discussing all along.

Speculation: if they were 'damaged planes' as opposed to 'destroyed planes', the parts that were damaged were obviously non-critical. All the planes that got hit in the critical parts didn't make it back.
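That speculation can be checked with a small Monte Carlo sketch. Everything in it is invented for illustration: the four areas, their share of the silhouette (hits are assumed to land in proportion to it), the per-hit kill probabilities, and the assumption that each sortie takes between zero and `max_hits` hits. The hypothetical `survey_returning_aircraft` function counts holes per unit area, but only on aircraft that made it home, which is all a survey of returning bombers could ever see.

```python
import random

# Hypothetical airframe: each area's share of the silhouette (a random hit lands
# there with that probability) and the chance that a single hit there downs the
# aircraft. All numbers are invented for illustration.
AREAS = {
    "engines": (0.15, 0.50),
    "cockpit": (0.05, 0.60),
    "fuselage": (0.45, 0.05),
    "wings": (0.35, 0.05),
}


def survey_returning_aircraft(n_sorties: int, max_hits: int, seed: int = 0) -> dict[str, float]:
    """Count holes per unit area, but only on aircraft that made it home."""
    rng = random.Random(seed)
    names = list(AREAS)
    weights = [AREAS[name][0] for name in names]
    holes = dict.fromkeys(names, 0)
    for _ in range(n_sorties):
        # Each sortie takes 0..max_hits hits, placed in proportion to area.
        hits = rng.choices(names, weights=weights, k=rng.randint(0, max_hits))
        # Each hit independently downs the aircraft with its area's kill probability.
        shot_down = any(rng.random() < AREAS[area][1] for area in hits)
        if not shot_down:
            # The survey only ever sees the survivors.
            for area in hits:
                holes[area] += 1
    return {name: holes[name] / AREAS[name][0] for name in names}


if __name__ == "__main__":
    for area, density in survey_returning_aircraft(100_000, max_hits=4).items():
        print(f"{area:<9} {density:>9.0f} holes per unit area")
```

Even though fire is spread evenly over the airframe, the engines and cockpit come out with roughly half the holes per unit area of the fuselage and wings, purely because hits there tend to stop the aircraft from returning.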

There's a story you've probably heard:

During World War II, the British RAF's Bomber Command wanted a survey done on the effectiveness of their aircraft armouring. This was carried out by inspecting all bombers returning from bombing raids over Germany over a particular period. All damage inflicted by German air defences was noted, and the recommendation was given that armour be added in the most heavily damaged areas.

However, a new group, the Operational Research Section run by Patrick Blackett, analysed the survey report and came to a different conclusion. Blackett suggested that, instead, the armour be placed in the areas which were completely untouched by damage in the bombers which returned. He reasoned that the survey was biased, since it only included aircraft that returned to Britain. The untouched areas of returning aircraft were probably vital areas, which, if hit, would result in the loss of the aircraft.

It is a useful fable, but in the context presented it seemed unlikely, given the attitudes of Bomber Harris. So I went looking for further information, and found the story of BC-ORS written by Freeman Dyson:

"A Failure of Intelligence" (Part 1) (Part 2)

which is a great read, but fails to mention any such incident.

What I did find, however, on further searching, was the work of Abraham Wald. Wald was a Jewish mathematician from Romania who in 1943 published a series of 8 memoranda through the Statistical Research Group at Columbia University while working for the National Defense Research Committee in America. These were republished collectively by the Center for Naval Analyses in 1980 as "A Method of Estimating Plane Vulnerability Based on Damage of Survivors", and are still in use today.

In 1984, Mangel and Samaniego published a fairly accessible summary of Wald's work in the Journal of the American Statistical Association (Vol. 79, Issue 386, June):

"Abraham Wald's Work on Aircraft Survivability"
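The basic inversion behind this kind of estimate can be sketched in a deliberately simplified single-hit form. This is an illustration in the spirit of Wald's approach, not a reproduction of his memoranda, which are more general than this. Assume every aircraft in the sample took exactly one hit, that the probability p_i of a hit landing in area i is known (say, from the area's relative size), and that the fraction S of hit aircraft that returned is known. If a fraction f_i of the returning aircraft were hit in area i, then p_i * (1 - q_i) = f_i * S, so the probability that a hit in area i downs the aircraft is q_i = 1 - f_i * S / p_i. The function name and inputs below are hypothetical.

```python
def estimate_kill_probabilities(
    hit_share: dict[str, float],
    survivor_share: dict[str, float],
    survival_rate: float,
) -> dict[str, float]:
    """Single-hit sketch: estimate q_i, the chance that one hit in area i downs the aircraft.

    hit_share[i]: assumed probability p_i that a hit lands in area i
    survivor_share[i]: observed fraction f_i of returning aircraft hit in area i
    survival_rate: S, the fraction of hit aircraft that made it back
    """
    # P(hit in area i and returned) = p_i * (1 - q_i) = f_i * S; solve for q_i.
    return {
        area: 1.0 - survivor_share[area] * survival_rate / p_i
        for area, p_i in hit_share.items()
    }


if __name__ == "__main__":
    # Hypothetical inputs, not wartime data.
    q = estimate_kill_probabilities(
        hit_share={"engine": 0.2, "fuselage": 0.5, "wings": 0.3},
        survivor_share={"engine": 0.1, "fuselage": 0.5625, "wings": 0.3375},
        survival_rate=0.8,
    )
    for area, q_i in q.items():
        print(f"{area:<9} estimated kill probability {q_i:.2f}")
```

With these made-up inputs the sketch returns roughly 0.6 for the engine and 0.1 for the fuselage and wings: the engine looks the least damaged on returning aircraft precisely because a hit there is the most dangerous.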

So it seems that Wald is the one who should get the credit for being the first to try to compensate for the evidential problem. Tragically, he himself died in an airplane crash just a few years later (in 1950, aged 48).

The 'bible' on this topic, Robert Ball's "The Fundamentals of Aircraft Combat Survivability Analysis and Design", confirms the problem is a real one and mentions the F-4 as an example. When they looked at the F-4s which survived combat, there were no holes in the narrowest part of the tail, just forward of the horizontal stabilizers. They figured out that all of the hydraulic lines for the elevators and rudder were tightly clustered in there, so that a single hit could damage all of them at once, leaving the plane uncontrollable. The solution in that case was, rather than increasing the armour, to spread the redundant lines out to reduce the chances of losing all of them to a single hit.
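The gain from spreading the lines out can be put in rough numbers with a toy model (assumptions for illustration, not figures from Ball's book). Suppose each of `n_hits` hits independently lands in any given small region with probability `r`. If all `k_lines` redundant lines share one region, a single hit there severs them all; if each line runs through its own non-overlapping region of the same size, every one of those regions has to be hit, which inclusion-exclusion gives as the sum over j = 0..k of (-1)^j * C(k, j) * (1 - j*r)^n. Both function names are hypothetical.

```python
from math import comb


def p_lose_all_clustered(r: float, n_hits: int) -> float:
    """All lines share one region: a single hit in that region severs every line."""
    return 1.0 - (1.0 - r) ** n_hits


def p_lose_all_spread(r: float, n_hits: int, k_lines: int) -> float:
    """Each line runs through its own disjoint region; all k regions must be hit."""
    if k_lines * r > 1.0:
        raise ValueError("k disjoint regions of probability r need k * r <= 1")
    # Inclusion-exclusion over the set of regions that are never hit.
    return sum(
        (-1) ** j * comb(k_lines, j) * (1.0 - j * r) ** n_hits
        for j in range(k_lines + 1)
    )


if __name__ == "__main__":
    r = 0.05  # hypothetical chance that one hit lands in a given line's region
    for n in (1, 2, 3, 5):
        print(f"{n} hits: clustered {p_lose_all_clustered(r, n):.4f}, "
              f"two separated lines {p_lose_all_spread(r, n, 2):.4f}")
```

With r = 0.05 and three hits, the clustered layout loses every line with a probability of about 14%, against about 1.4% for two separated lines, and with a single hit the separated layout cannot lose both.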