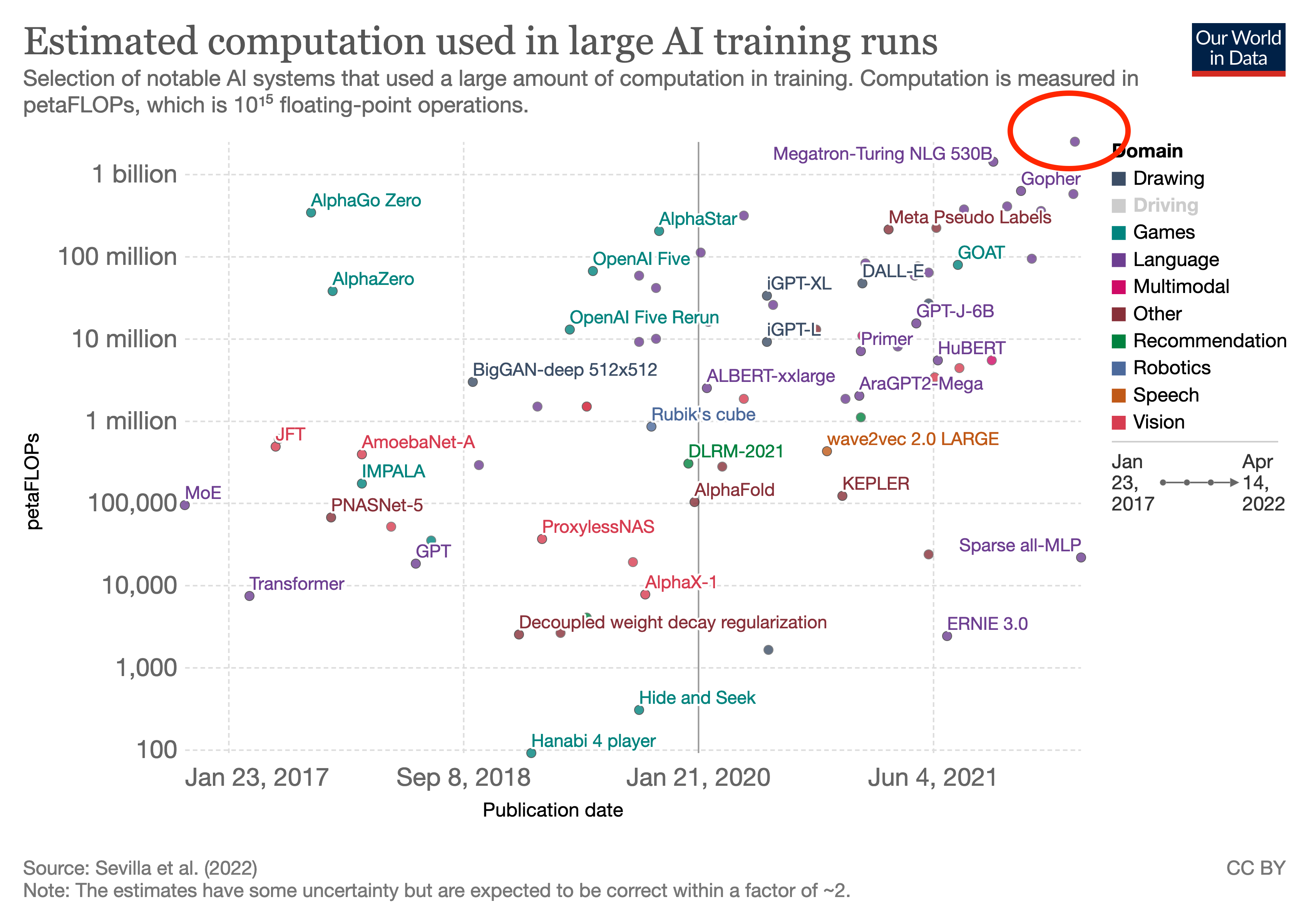

Google just announced a very large language model that achieves SOTA across a very large set of tasks, mere days after DeepMind announced Chinchilla, and their discovery that data-scaling might be more valuable than we thought.

Here's the blog post, and here's the paper. I'll repeat the abstract here, with a highlight in bold:

Large language models have been shown to achieve remarkable performance across a variety of natural language tasks using few-shot learning, which drastically reduces the number of task-specific training examples needed to adapt the model to a particular application. To further our understanding of the impact of scale on few-shot learning, we trained a 540-billion parameter, densely activated, Transformer language model, which we call Pathways Language Model (PaLM).

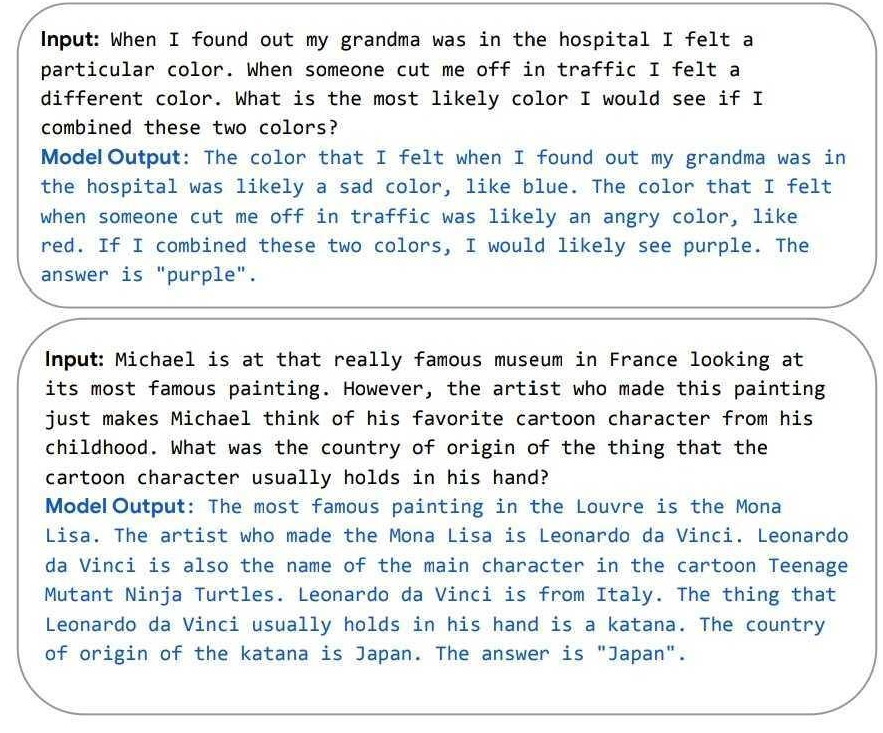

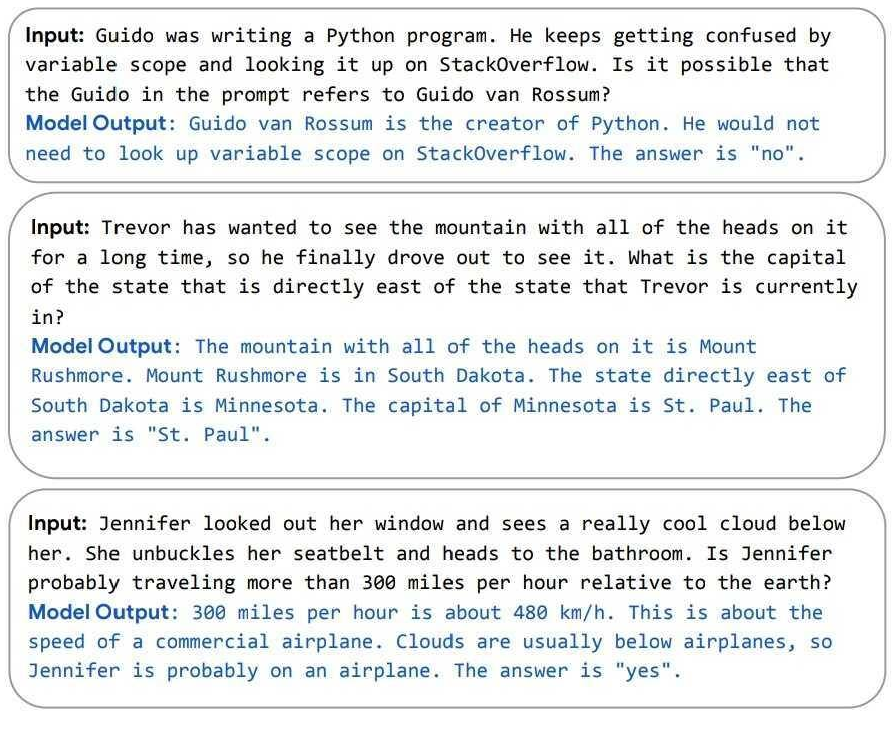

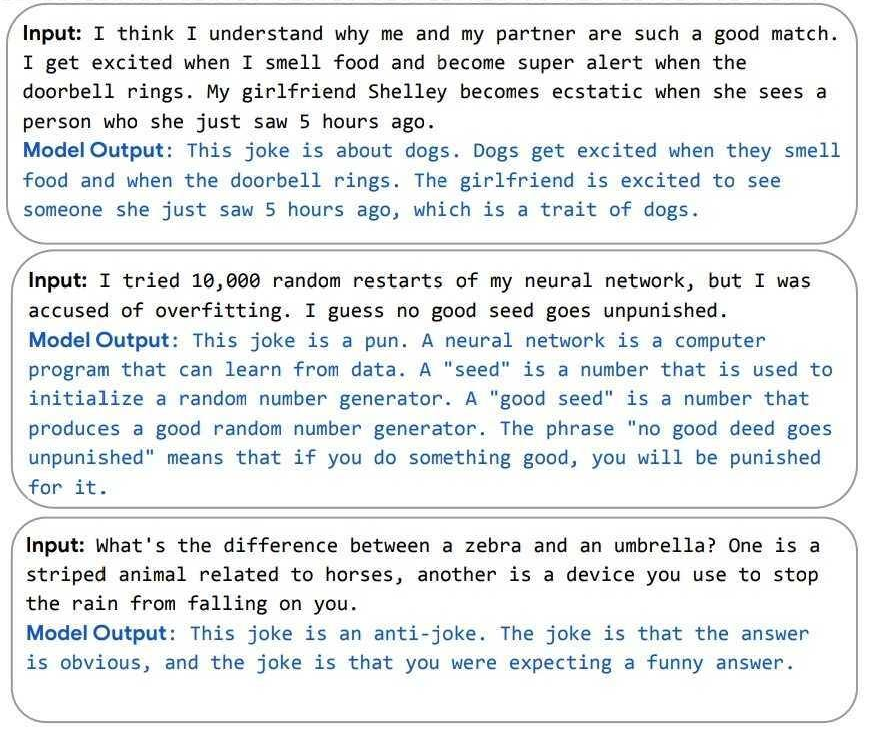

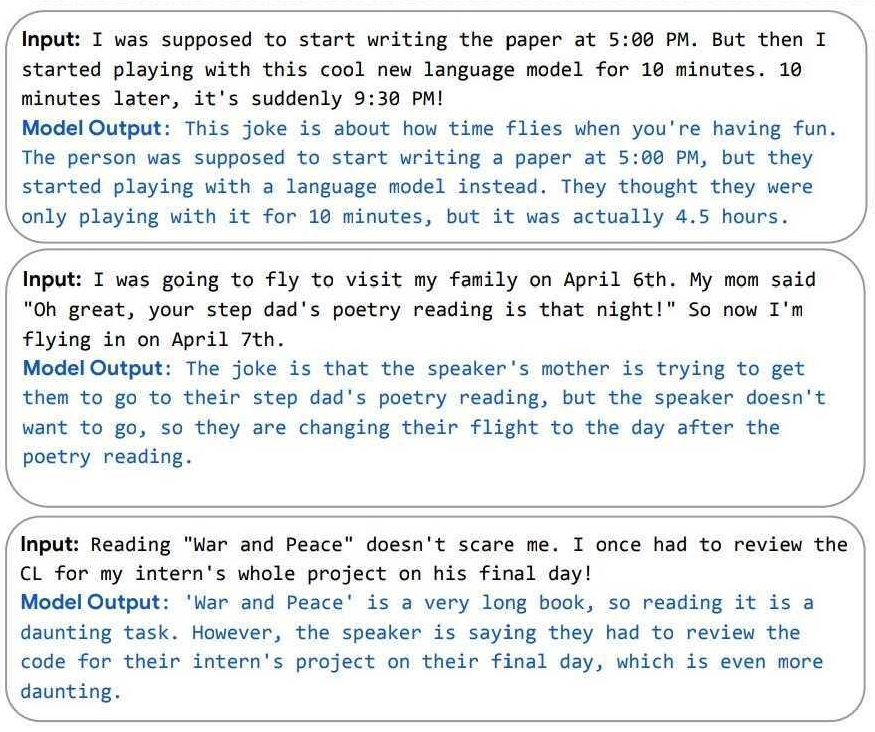

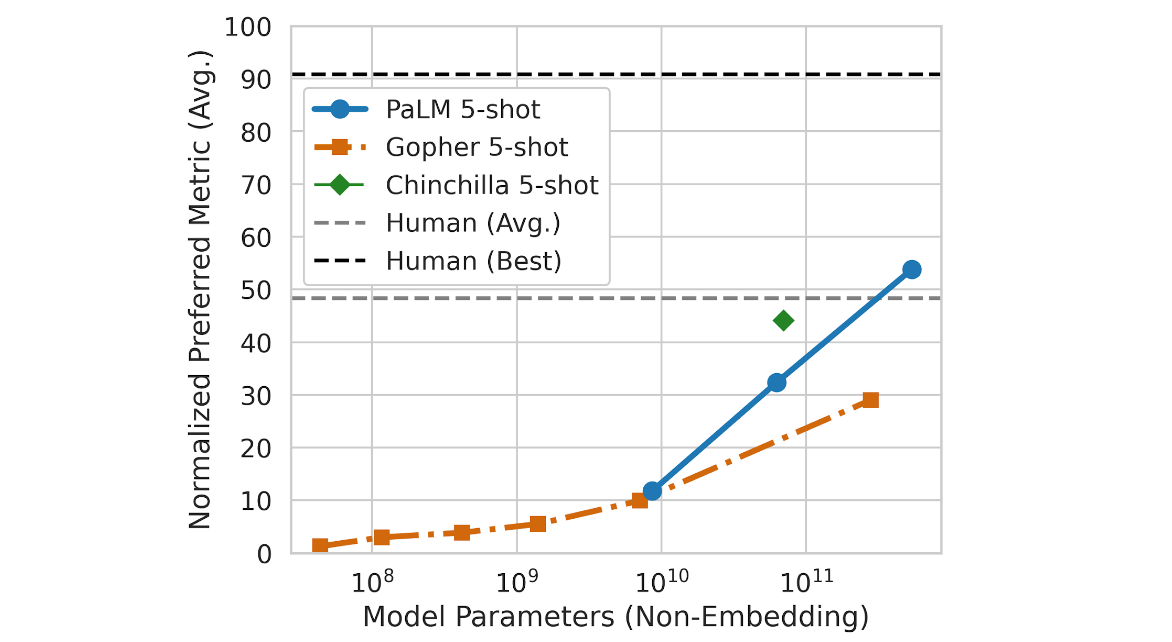

We trained PaLM on 6144 TPU v4 chips using Pathways, a new ML system which enables highly efficient training across multiple TPU Pods. We demonstrate continued benefits of scaling by achieving state-of-the-art few-shot learning results on hundreds of language understanding and generation benchmarks. On a number of these tasks, PaLM 540B achieves breakthrough performance, outperforming the finetuned state-of-the-art on a suite of multi-step reasoning tasks, and outperforming average human performance on the recently released BIG-bench benchmark. A significant number of BIG-bench tasks showed discontinuous improvements from model scale, meaning that performance steeply increased as we scaled to our largest model. PaLM also has strong capabilities in multilingual tasks and source code generation, which we demonstrate on a wide array of benchmarks. We additionally provide a comprehensive analysis on bias and toxicity, and study the extent of training data memorization with respect to model scale. Finally, we discuss the ethical considerations related to large language models and discuss potential mitigation strategies.

I think it would probably not work too well if you mean simply "dump some in like any other text", because it would be diluted by the hundreds of billions of other tokens, and much of it would be 'wasted' by being trained on while the model is still too stupid to learn the inner-monologue technique. (Given that smaller models like 80b-esque models don't inner-monologue while larger ones like LaMDA & GPT-3 do, presumably the inner-monologue capability only emerges in the last few bits of loss separating the 80b-esque and 200b-esque models, and thus fairly late in training, at the point where the 200b-esque models pass the final loss of the 80b-esque models.) If you oversampled an inner-monologue dataset, or trained on it only at the very end (~equivalent to finetuning), or did some sort of prompt-tuning, then it might work. But compared to self-distilling, where you just run the model on the few-shot prompt + a bunch of questions & generate an arbitrary number n of samples to then finetune on, it would be expensive to collect that data, so why do so?
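
For concreteness, here is a rough sketch of what the self-distilling data collection described above might look like. Everything in it is an assumption for illustration: `build_self_distillation_set`, `toy_generate`, and the `Q:`/`A:` prompt layout are hypothetical names and formats, not anything from the PaLM paper, and `generate` stands in for whatever sampling call your model actually exposes.

```python
from typing import Callable, Dict, List


def build_self_distillation_set(
    generate: Callable[[str], str],  # hypothetical: maps a prompt to one sampled completion
    few_shot_prompt: str,            # worked inner-monologue examples, written by hand
    questions: List[str],
    n_samples: int = 4,              # the "arbitrary n" samples drawn per question
) -> List[Dict[str, str]]:
    """Sample the model on few-shot prompt + question and keep its own outputs."""
    examples: List[Dict[str, str]] = []
    for question in questions:
        sampling_prompt = f"{few_shot_prompt}\n\nQ: {question}\nA:"
        for _ in range(n_samples):
            completion = generate(sampling_prompt)
            # Assumption: store the example without the few-shot prefix, so that
            # finetuning teaches the model to monologue without being prompted.
            examples.append({"prompt": f"Q: {question}\nA:", "completion": completion})
    return examples


def toy_generate(prompt: str) -> str:
    # Stand-in for a real model so the sketch runs end to end.
    return " Let's think step by step. First, restate the question. The answer is 42."


if __name__ == "__main__":
    data = build_self_distillation_set(
        toy_generate,
        few_shot_prompt="Q: What is 2 + 3?\nA: 2 plus 3 makes 5. The answer is 5.",
        questions=["What is 6 * 7?", "What is 10 - 4?"],
        n_samples=3,
    )
    print(len(data), "examples collected for finetuning")
```

The resulting list would then be handed to whatever finetuning pipeline you use. In practice you would probably also want to filter the samples (for example, keeping only completions whose final answer is correct or most common) before finetuning, but that step is left out of this sketch.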