Cryptocurrency is terrible. With a single click of a button, it is possible to accidentally lose all of your funds. 99.9% of all cryptocurrency projects are complete scams (conservative estimate). Crypto is also tailor-made for ransomware attacks, since it makes it possible to send money in such a way that the receiver has perfect anonymity.

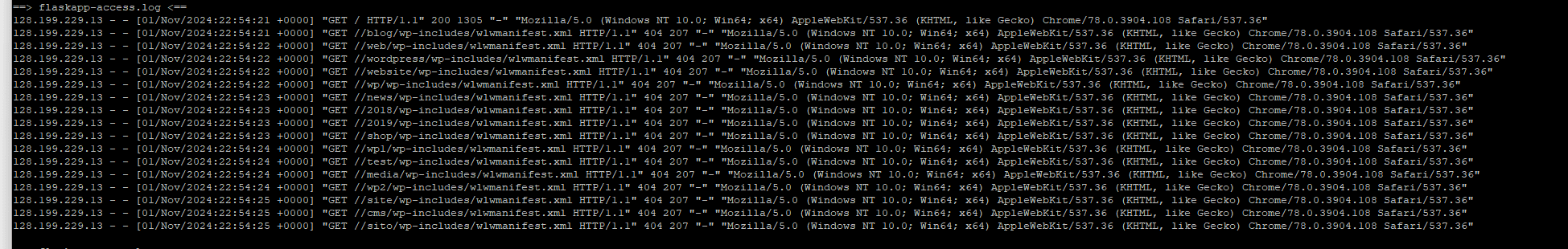

Similarly, cybersecurity is terrible. Basically every computer on the internet is infected with multiple types of malware. If you have ever owned a web server with a public IPv4 address, you have undoubtedly had the pleasure of viewing a log file that looks like this:

In a few months, the world will be introduced to a brand-new, insecure-by-design platform, the LLM agent:

No one worth taking seriously believes that Microsoft Copilot (or Anthropic's agents, or any other LLM agent) is going to be even remotely secure against prompt injection attacks.

One fascinating thing (to me) about these examples is that they all basically work fine[1]. Despite being completely broken, normal people with normal intelligence use these systems routinely without losing 100% of their funds. This happens despite the fact that people with above-average intelligence have a financial incentive to take advantage of these security flaws.

One possible conclusion is along the lines of "everything humanity has ever built is constantly on fire. We must never build something existentially dangerous or we're already dead."

However, we already did:

And like everything else, the story of nuclear weapons is that they are horribly insecure and error-prone.

What I want to know is: why? Why is it that all of these systems, despite being hideously error-prone and blatantly insecure by design, somehow still work?

I consider each of these systems (and many like them) a sort of standing challenge to the fragile world hypothesis. If the world is so fragile, why does it keep not ending?

[1] If anyone would like to make a bet, I predict that two years from now:

- LLM agents will be vulnerable to nearly trivial forms of prompt injection

- Millions of people will use them to do things, like spending money, that common sense tells you not to do on a platform this insecure by design

You shouldn't use "dangerous" or "bad" as a latent variable, because doing so promotes splitting. MAD and Bitcoin have fundamentally different operating principles (e.g., nuclear fission vs. cryptographic pyramid schemes), and these principles lead to a mosaic of different attributes. If you ignore the operating principles and project everything down to a single bad/good axis, you can form some heuristics about what to seek out or avoid, but you face severe model misspecification. That violates principles like realizability (the assumption that the true data-generating process lies within your hypothesis class), which Bayesian inference needs in order to give reasonable results (e.g., to converge rather than oscillate, and to be well-calibrated rather than massively overconfident).

Once you understand the essence of what makes a domain seem dangerous to you, you can debug your view: look at the obstacles that stopped this essence from flowing into whatever horrors you were worried about, and then think through why you didn't anticipate those obstacles. As you learn more about the factors relevant in those cases, you may learn something that generalizes across cases, but most likely what you learn will be about the problems with common sense.