Authors: Senthooran Rajamanoharan*, Arthur Conmy*, Lewis Smith, Tom Lieberum, Vikrant Varma, János Kramár, Rohin Shah, Neel Nanda

A new paper from the Google DeepMind mech interp team: Improving Dictionary Learning with Gated Sparse Autoencoders!

Gated SAEs are a new Sparse Autoencoder architecture that seems to be a significant Pareto-improvement over normal SAEs, verified on models up to Gemma 7B. They are now our team's preferred way to train sparse autoencoders, and we'd love to see them adopted by the community! (Or to be convinced that it would be a bad idea for them to be adopted by the community!)

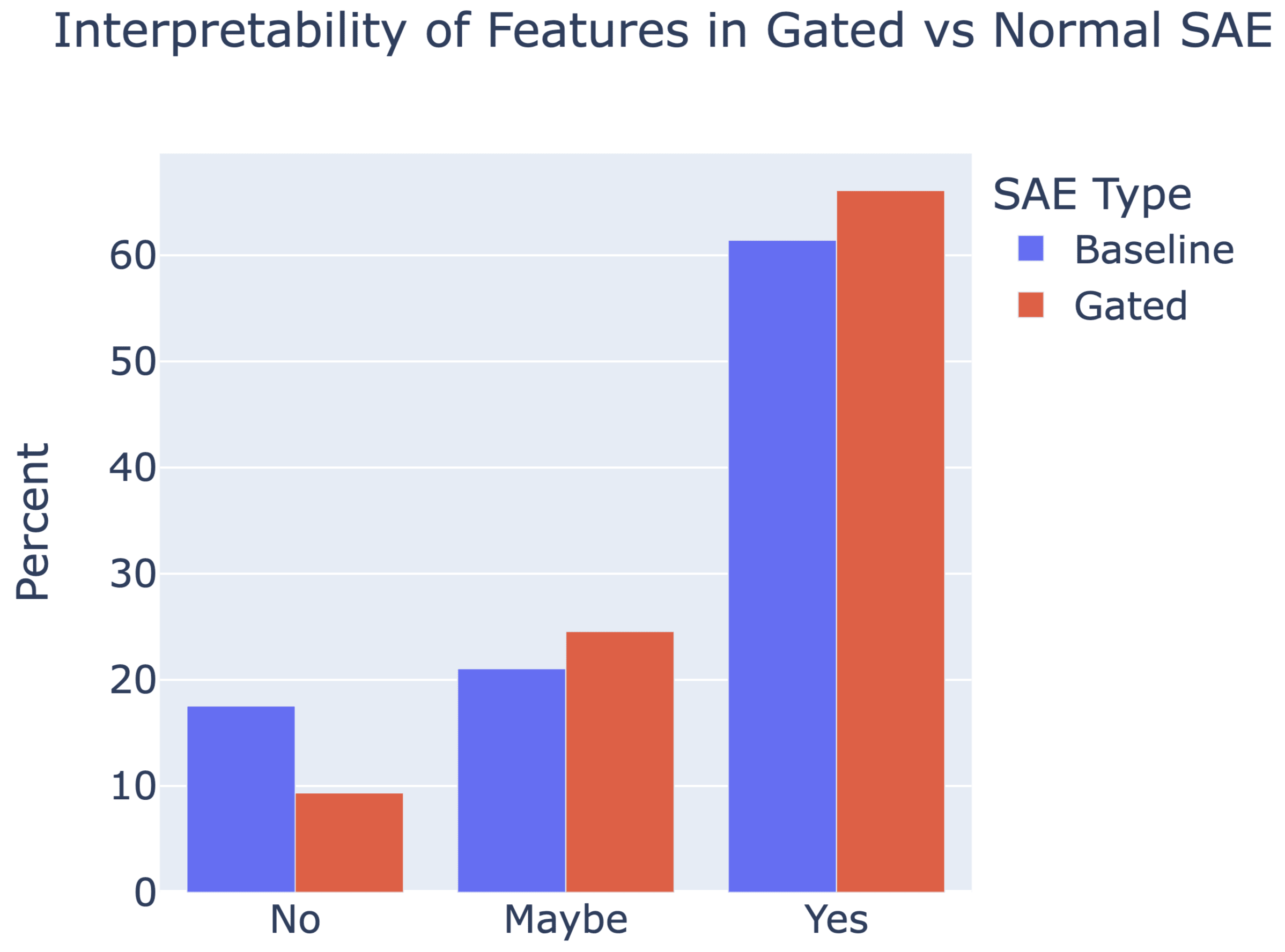

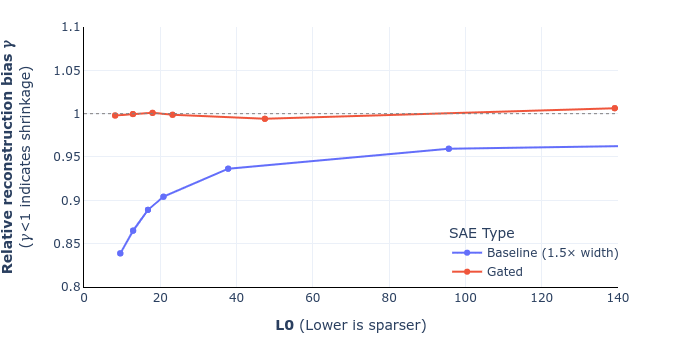

They achieve similar reconstruction with about half as many firing features, and while being either comparably or more interpretable (confidence interval for the increase is 0%-13%).

See Sen's Twitter summary, my Twitter summary, and the paper!

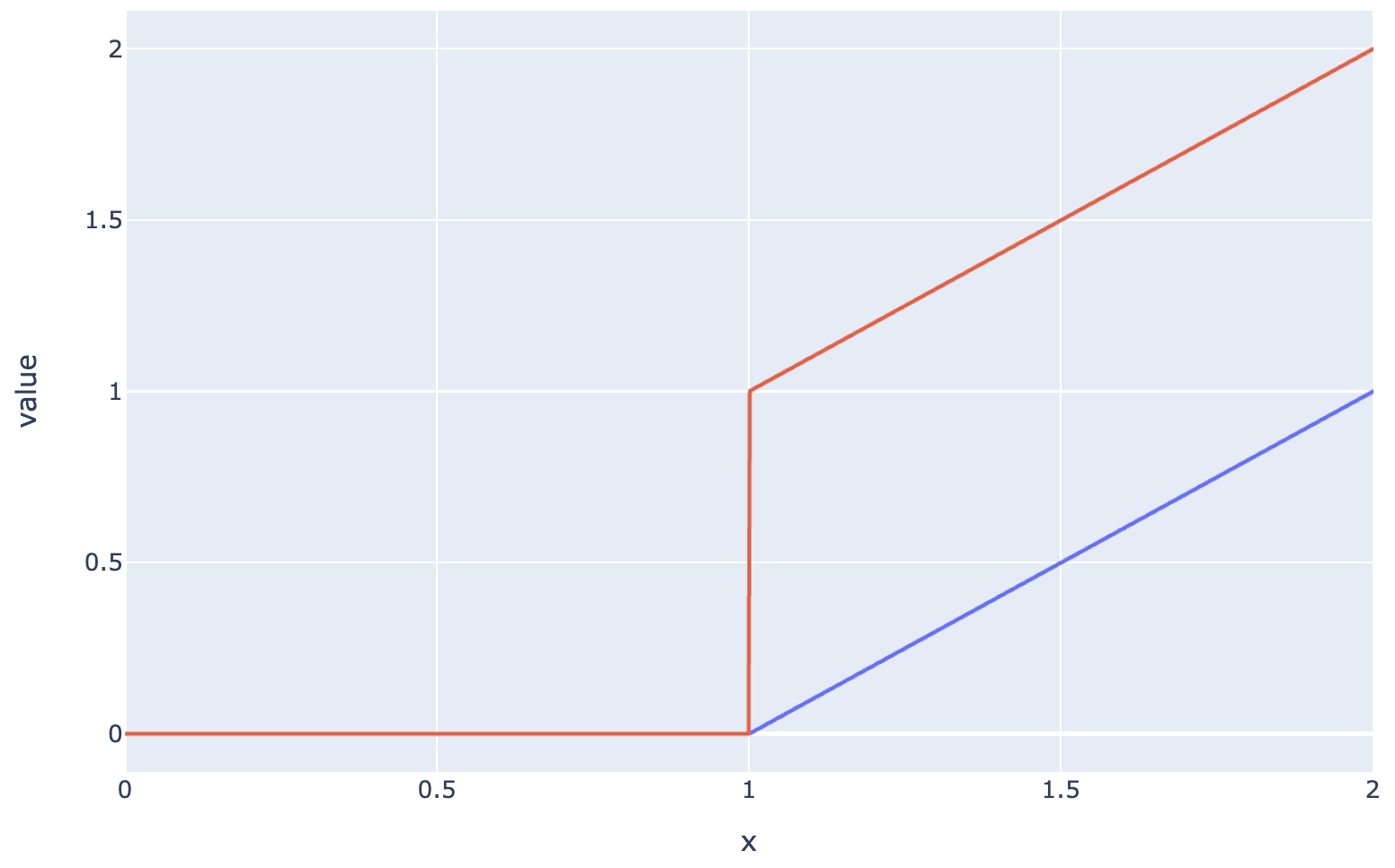

Possibly I'm missing something, but if you don't have Laux, then the only gradients to Wgate and bgate come from Lsparsity (the binarizing Heaviside activation function kills gradients from Lreconstruct), and so πgate would be always non-positive to get perfect zero sparsity loss. (That is, if you only optimize for L1 sparsity, the obvious solution is "none of the features are active".)

(You could use a smooth activation function as the gate, e.g. an element-wise sigmoid, and then you could just stick with Lincorrect from the beginning of Section 3.2.2.)