Promoted to curated: I found this outline really helpful, and it convinced me to read the book in full (I had previously skimmed it and put it on my to-do list, but hadn't yet started reading and wasn't sure when/whether I would get around to it).

This is a superb summary! I'll definitely be returning to this as a cheatsheet for the core ideas from the book in future. I've also linked to it in my review on Goodreads.

it's straightforwardly the #1 book you should use when you want to recruit new people to EA. [...] For rationalists, I think the best intro resource is still HPMoR or R:AZ, but I think Scout Mindset is a great supplement to those, and probably a better starting point for people who prefer Julia's writing style over Eliezer's.

Hmm... I've had great success with the HPMOR / R:AZ route for certain people. Perhaps Scout Mindset has been the missing tool for the others. It also struck me as a nice complement to Eliezer's writing, in terms of both substance and style (see below). I'll have to experiment with recommending it as a first intro to EA/rationality.

As for my own experience, I was delightfully surprised by Scout Mindset! Here's an excerpt from my review:

I'm a big fan of Julia and her podcast, but I wasn't expecting too much from Scout Mindset because it's clearly written for a more general audience and was largely based on ideas that Julia had already discussed online. I updated from that prior pretty fast. Scout Mindset is a valuable addition to an aspiring rationalist's bookshelf — both for its content and for Julia's impeccable writing style, which I aspire to.

Those familiar with the OSI model of internet infrastructure will know that there are different layers of protocols. The IP protocol that dictates how packets are directed sits at a much lower layer than the HTTP protocol, which dictates how applications interact. Similarly, Yudkowsky's Sequences can be thought of as the lower layers of rationality, whilst Julia's work in Scout Mindset provides the protocols for higher layers. The Sequences are largely concerned with what rationality is, whilst Scout Mindset presents tools for practically approximating it in the real world. It builds on the "kernel" of cognitive biases and Bayesian updating by considering what mental "software" we can run on a daily basis.

The core thesis of the book is that humans default towards a "soldier mindset," where reasoning is like defensive combat. We "attack" arguments or "concede" points. But there is another option: "scout mindset," where reasoning is like mapmaking.

The Scout Mindset is "the motivation to see things as they are, not as you wish they were. [...] Scout mindset is what allows you to recognize when you are wrong, to seek out your blind spots, to test your assumptions and change course."

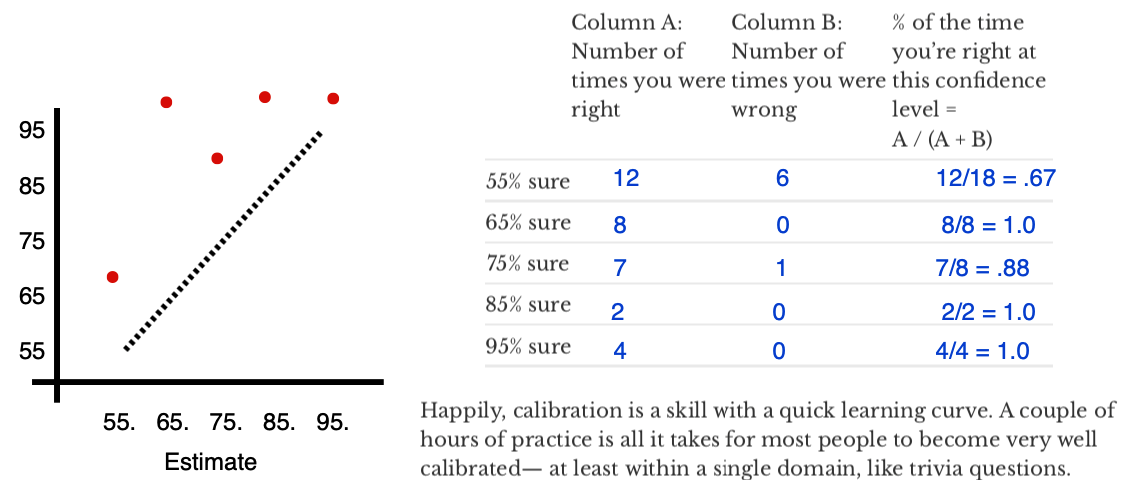

I recommend listening to the audiobook version, which Julia narrates herself. The book is precisely as long as it needs to be, with no fluff. The anecdotes are entertaining and relevant and were entirely new to me. Overall, I think this book is a 4.5/5, especially if you actively try to implement Julia's recommendations. Try out her calibration exercise, for instance.

I tried the calibration exercise you linked. Skipped one question where I felt I just had no basis at all for answering, but answered all the rest, even when I felt very unsure.

When I said 95% confident, my accuracy was 100% (9/9)

When I said 85% confident, my accuracy was 83% (5/6)

When I said 75% confident, my accuracy was 71% (5/7)

When I said 65% confident, my accuracy was 60% (3/5)

At a glance, that looks like it's within rounding error of perfect. So I was feeling pretty good about my calibration, until...

When I said 55% confident, my accuracy was 92% (11/12)

I, er, uh...what? How can I be well-calibrated at every other confidence level and then get over 90% right when I think I'm basically guessing?

Null Hypothesis: Random fluke? Quick mental calculation says winning at least 11 out of 12 coin-flips would be p < .01. Plus, this is a larger sample than any other confidence level, so if I'm not going to believe this, I probably shouldn't believe any of the other results, either.
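The "quick mental calculation" can be checked with a binomial tail probability. The sketch below is only illustrative: it assumes each answer is an independent trial that comes out right with exactly the stated confidence, and `tail_probability` is a hypothetical helper name, not part of any calibration tool. It reuses the bucket counts reported above and, for each bucket, asks how likely a score at least that high would be if the stated confidence were perfectly calibrated.

```python
from math import comb


def tail_probability(n: int, k: int, p: float) -> float:
    """P(X >= k) for X ~ Binomial(n, p): the chance of k or more hits in n tries."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


# (stated confidence, correct answers, total answers), as reported above
results = [(0.95, 9, 9), (0.85, 5, 6), (0.75, 5, 7), (0.65, 3, 5), (0.55, 11, 12)]

for confidence, correct, total in results:
    accuracy = correct / total
    # Assumption: every answer in this bucket is right with exactly the stated
    # probability, independently of the others (i.e. perfect calibration).
    p_value = tail_probability(total, correct, confidence)
    print(f"{confidence:.0%} bucket: {accuracy:.0%} correct, "
          f"P(>= {correct}/{total}) = {p_value:.4f}")

# The coin-flip version of the mental calculation: exactly 13/4096
print(f"coin flips: P(>= 11/12) = {tail_probability(12, 11, 0.5):.4f}")
```

Under these assumptions both the coin-flip and the 55%-calibrated versions of the last bucket come out below 0.01, consistent with the estimate above. It is a one-sided, per-bucket check and does not correct for looking at five buckets at once, or for the self-selection effect mentioned next.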

(Of course, from your perspective, I'm the one person out of who-knows-how-many test takers that got a weird result and self-selected to write a post about it. But from my perspective it seems pretty surprising.)

Hypothesis #1: There are certain subject areas where I feel like I know stuff, and other subject areas where I feel like I don't know stuff, and I'm slightly over-confident in the former but drastically under-confident in the latter.

This seems likely true to some extent: I gave much less confidence overall in the "country populations" test section, but my actual accuracy there was about the same as in other categories. But I also said 55% twice in each of the other 3 test sections (and got all 6 of those correct), so it seems hard to draw a natural subject-area boundary that would fully explain the results.

Hypothesis #2: When I believe I don't have any "real" knowledge, I switch mental gears to using a set of heuristics that turns out to be weirdly effective, at least on this particular test. (Maybe the test is constructed with some subtle form of bias that I'm subconsciously exploiting, but only in this mental mode?)

For example, on one question where the test asked if country X or Y had a higher population in 2019, I gave a correct, 55% confident answer on the basis of "I vaguely feel like I hear about country X a little more often than country Y, and high population seems like it would make me more likely to hear about a country, so I suppose that's a tiny shred of Bayesian evidence for X."

I have a hard time believing heuristics like that are 90% accurate, though.

Other hypotheses?

Possibly relevant: I also once tried playing CFAR's calibration game, and after 30-something binary questions in that game, I had around 40% overall accuracy (i.e. worse than random chance). I think that was probably bad luck rather than actual anti-knowledge, but I concluded that I can't use that game due to lack of relevant knowledge.

I somehow missed all notifications of your reply and just stumbled upon it by chance when sharing this post with someone.

I had something very similar with my calibration results, only it was for 65% estimates:

I think your hypotheses 1 and 2 match with my intuitions about why this pattern emerges on a test like this. Personally, I feel like a combination of 1 and 2 is responsible for my "blip" at 65%.

I'm also systematically under-confident here — that's because I cut my prediction teeth getting black swanned during 2020, so I tend to leave considerable room for tail events (which aren't captured in this test). I'm not upset about that, as I think it makes for better calibration "in the wild."

For effective altruists, I think (based on the topic and execution) it's straightforwardly the #1 book you should use when you want to recruit new people to EA. It doesn't actually talk much about EA, but I think starting people on this book will result in an EA that's thriving more and doing more good five years from now, compared to the future EA that would exist if the top go-to resource were more obvious choices like The Precipice, Doing Good Better, the EA Handbook, etc.

I passed this review to people in a local EA group and some of them felt unclear on why you think this way, since (as you say) it doesn't seem to talk about EA much. Could you elaborate on that part?

I haven't read Scout Mindset yet, but I've listened to Julia's interviews on podcasts about it, and I have read the other books that Rob mentions in that paragraph.

The reason I nodded when Rob wrote that was that Julia's memetics are better. Her ideas are written in a way that sticks in one's mind, and thus spreads more easily. I don't think any of those other sources are bad (in fact, I get more from them than I expect to from Scout Mindset), but Scout Mindset is more practically oriented (and optimized for today's political climate) in a way which those other books are not.

It also operates at a different, earlier level in the "EA Funnel": the level at which you can make people realize that more is possible. Those other books already require someone to be asking "how can I Do Good Better?" before they'll pick them up.

Thank you for the summary! I'm comparing it to my notes, and I think yours are better and more detailed.

I read it in one go the same day it was released. I think that The Scout Mindset does a great job as an introduction to rationality and most people outside the Less Wrong community will benefit more from reading this than The Sequences.

I wasn’t going to buy it, but this post and the comments here convinced me to. Just finished the audiobook and I really liked it! In particular, packaging all the ideas, many of which are in The Sequences, into one thing called “scout mindset” feels really useful in how I frame my own goals for how I think and act. Previously I had had injunctions like “value truth above all” kicking around in my head, but that particular injunction always felt a bit uncomfortable: “truth above all” brought to mind someone who nitpicks passing comments in chill social situations, and so on. The goal “Embody scout mindset” feels much more right as a guiding principle for me to live by.

My friends and I liked this book enough to make a digital art project based on it!

https://mindsetscouts.com/

Jumping in to add: I loved this book. It's well written and entertaining. I'm sympathetic to people on this forum who think they won't learn much new. It didn't change my views in a fundamental sense, since I've been reading and living this sort of stuff for a long time. But there were more fresh insights than I expected, and in any case it's always great to reinforce the central messages. It also felt extremely validating. IMO it's an ideal book for sharing many of the key tenets of "rationality" with people who would otherwise be repulsed by the term "rationality". Thanks for sharing the outline!

I thought that the sections on identity and self-deception stood out as being done better in this book than in other rationalist literature.

Julia Galef's The Scout Mindset is superb.

For effective altruists, I think (based on the topic and execution) it's straightforwardly the #1 book you should use when you want to recruit new people to EA. It doesn't actually talk much about EA, but I think starting people on this book will result in an EA that's thriving more and doing more good five years from now, compared to the future EA that would exist if the top go-to resource were more obvious choices like The Precipice, Doing Good Better, the EA Handbook, etc.

For rationalists, I think the best intro resource is still HPMoR or R:AZ, but I think Scout Mindset is a great supplement to those, and probably a better starting point for people who prefer Julia's writing style over Eliezer's.

I've made an outline of the book below, for my own reference and for others who have read it. If you don't mind spoilers, you can also use this to help decide whether the book's worth reading for you, though my summary skips a lot and doesn't do justice to Julia's arguments.

Introduction

PART I: The Case for Scout Mindset

Chapter 1. Two Types of Thinking

Chapter 2. What the Soldier is Protecting

Chapter 3. Why Truth is More Valuable Than We Realize

PART II: Developing Self-Awareness

Chapter 4. Signs of a Scout

Chapter 5. Noticing Bias

Chapter 6. How Sure Are You?

PART III: Thriving Without Illusions

Chapter 7. Coping with Reality

Chapter 8. Motivation Without Self-Deception

Chapter 9. Influence Without Overconfidence

PART IV: Changing Your Mind

Chapter 10. How to Be Wrong

Chapter 11. Lean in to Confusion

Chapter 12. Escape Your Echo Chamber

PART V: Rethinking Identity

Chapter 13. How Beliefs Become Identities

Chapter 14. Hold Your Identity Lightly

Chapter 15. A Scout Identity