This post spurred some interesting thoughts, which I kicked around with Scott Viteri.

First, those histograms of counts of near-zero eigenvalues per run over 150 runs sure do smell a lot like asymptotic equipartition visualizations:

(The post also mentions AEP briefly; I'm not sure what the right connection is.)

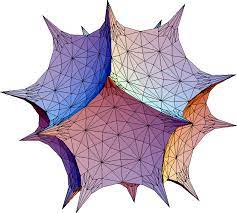

Second, trying to visualize what this implies about the Rashomon set... it suggests the Rashomon set is very spiky. Like, picture a 3D ball, and then there's a bunch of spikes coming off the ball which quickly thin into roughly-2D flat spiky things, and then those also branch into a bunch of sub-spikes which quickly thin into roughly-1D needles. And there's so many of those approximately-lower-dimensional spikes that they fill even more volume than the ball. Sort of like the Mathematica Spikey, but spikier:

Anyway, this visualization reminds me strongly of visuals of 2- or 3-dimensional shapes which have nearly all the volume very close to the surface, like high-dimensional shapes do. (For a not-very-fancy example, see page 6 here.) It makes me wonder if there's some natural way of viewing the entire Rashomon set as a simple very-high-dimensional solid which has kinda been "crumpled up and flattened out" into the relatively-lower-dimensional parameter space, while approximately preserving some things (like nearly all the volume being very close to the surface).

Would be interesting to try to distinguish between 3 types of dimensions. Number of dimensions that leave the set, number of dimensions in the set due to trivial params, and number of dimensions in the set due to equally good but functionally different models.

Especially if it turns out it is attracted to maximum trivial params, but not maximum dimensionality overall.

Yeah that would be interesting, but how would we tell the difference between trivial params (I'm assuming this means function doesn't change anywhere) and equal loss models? Estimate this with a sampling of points out of distribution?

I kind of assumed that all changes in the parameters changed the function, but that some areas of the loss landscape change the function faster than others? This would be my prediction

Hm, does the number of non-trivial dimensions follow a smooth curve? From the histogram figure it clearly grows as net size increases, but I can't see how fast it's decelerating.

Does the same pattern obtain on more complicated data? What if you then add regularization?

I think it was smooth-ish, although we didn't plot it. It was decelerating really slowly, but we also didn't quantify that. We could send you the notebook (bit of a mess)?

I really wanted to extend the experiment to more complicated data, and bigger networks with more layers, but we didn't have time. I plan to do this after the MATS program ends in late september if someone else hasn't done it first.

I'm not sure if the number of near zero eigenvalues is the right thing to look at.

If the training process is walking around the parameter space until it "stumbles on" a basin, what's relevant for which basin is found isn't just the size of the basin floor, it's also how big the basin walls are. Analogy: A very narrow cylindrical hole in a flat floor may be harder to fall into than a very wide, sloped hole. Even though the bottom of the later may be just a single point.

I've typically operated under the assumption that something like "basin volume" may be closer to the thing that matters, the difference to the dimensionality picture being that the typical size of non-zero eigenvalues can also be quite relevant.

Have you tried looking at complete eigenvalue spectra of your most common minima in these graphs, and compared them to the spectra of minima of higher dimension? Do the later by any chance have significantly bigger eigenvalues outside the ones that are close to zero?

Produced As Part Of The SERI ML Alignment Theory Scholars Program 2022 Research Sprint Under John Wentworth

Intro

The "broad basins induce data compression" argument goes like this: If we train a neural network to memorize some data, it has to "store" all the relevant information in its weights. If it "compresses the data", then it should be able to store the data using fewer weights, and leave more weights free to vary without increasing the loss. This means that around the optima we found, there should be a broad flat basin in the loss landscape.

We spent eight days trying to make this argument more specific and clear. We made some progress but probably none of it is new to people who have studied this area.

Executive Summary

Rashomon Set

One common idea that we keep coming back to is that we can think of “using some parameter-degrees of freedom” as the degrees of freedom that don’t change the loss on the training set. The set of hypotheses that has zero loss is called the Rashomon set, and we know that every hypothesis in the Rashomon set has learned every training data label. Hence, the area of this set is a nice operationalization of the degrees of freedom that aren’t relevant to memorizing the training labels.

One way of operationalizing the amount of “compression” the network has done is to measure the dimensionality of the Rashomon set, and subtract that from the total number of parameters. This vaguely gives us “the number of parameters the network is using to store the data”. We try to generalize this notion in the last section.

We define the set of points in parameter space that end up in the same Rashomon set after training, assuming gradient flow, as the "attractor well" of that Rashomon set.

Visualizing the basin

We started with some experiments with the following goals:

The following graph is a visualization of two randomly sampled axes through the loss basin of a 9 parameter network trained on an XOR dataset (which is just 4 points:a,b→XOR(a,b):∀a,b∈{0,1}).

The next graph does the same visualization, except slicing the space through the two eigenvectors that correspond to the lowest eigenvalues.

This graph is significantly flatter, because moving in the low-eigenvalue directions lets us remain in the Rashomon set.

We also put together a program that finds paths between connected minima. It does this by making steps in the 0-eigenspace of the Hessian, allowing it to trace a path between networks in the same Rashomon set by projecting the difference between their parameters into the 0-eigenspace of the Hessian, and then stepping in that direction. This requires computing the eigendecomposition of the Hessian, which takes O(n2) with our implementation, but can be improved up to O(kn), where n is the number of parameters and k is the number of eigenvalues we need. We have been able to find these paths in the XOR experiment by discretizing the space and doing a depth-first search of this Rashomon set.

The image below is another way of visualizing the loss landscape around the minima, by taking the minima and nudging it in a bunch of random directions, measuring the loss at all those points and then projecting the set of parameterizations into 2d space using tSNE. It is useful in that it shows us that a large proportion of the random directions give the same loss.

Broad basin experiment

On the XOR dataset, Thomas set up experiments that measured the number of almost-zero eigenvalues of the Hessian of the loss at the minima. Each graph below shows the distribution of near-zero eigenvalues over 150 training runs with different random initializations (the weights were initialized with a Gaussian).

The number of near zero eigenvalues of the Hessian are a measure for the dimensionality of the Rashomon set, a manifold in the loss landscape with constant loss. This dimensionality is one way of defining the broadness of the basin in the loss landscape.

We were expecting the broad basins to be much more likely, due to their increased dimensionality in the space.We expect higher dimensional basins to tend to have higher dimensional “attractor wells”, which means that the highest dimensional basins should attract most of the points in the initialization distribution. So we would predict most of the basins found to have the maximum possible dimension allowable while still fitting the data points.

Loss attractor wells with dimension smaller than the total number of parameters seem like they would usually have 0 measure. The intuition for this is that the higher dimensional analogue of a line has 0 measure in a 2D space or a square has 0 measure in a 3D space.

However, we observe a much more symmetrical distribution than we predicted, as you can see in the graphs above — the mode is a little lower than the maximum possible dimension, and the number of maximum dimension minima found decreases as we increase the width of the network.

There are several possible explanations for this:

Below are synthetically generated plots of example loss landscapes to illustrate the dimensionality of attractor wells. On the left, we have just plotted f(x,y)=x2. This Rashomon set is given in red, and it is the points for which x=0 (as these are the points for which f is minimized. This is a one dimensional set, but it has a two dimensional attractor basin.

On the right, we have plottedf(x,y)=x2 if x<0, 0 if x∈[0,3), and (x−3)2 if x≥3. This is a two dimensional set, but it still has a 2d attractor set.

PAC-Bayes inspired operationalization

Let’s now try to find a better version of the “dimension of the Rashamon set” measure of information storage. One way we could try to do it is using the ratio of the volume of the Rashomon set compared to the entire hypothesis space. However, “volume” of the Rashamon set itself isn’t the right concept, because usually the Rashamon set has a lower dimension than the parameter space it is embedded in, and hence it has zero volume. In some directions it is “flat”, hence overall it has 0 volume. To resolve this, we’ll have to use a different measure of “volume”.

Types of “compression”

When trying to be more specific about what we are talking about, it’s useful to make the following distinction. There are two types of “compression” that we need to consider:

Trying to fit this into the PAC-Bayes framework:

One natural way of combining these two aspects of compression is to take the KL divergence between the posterior and the prior (defined below), with the posterior being the distribution that captures the “vagueness” of which function we want to specify. This works nicely because the KL divergence is the expected number of bits required to encode a hypothesis from the posterior (using a code optimized for the prior) minus the entropy of the posterior, which we conjecture is a good measure of the number of bits we don’t need to specify.

To define a posterior distribution with neural networks, we can consider a distribution over the hypothesis space that tells us how likely a particular hypothesis is to be chosen by the training procedure, given a particular dataset. This is the pushforward measure of the initialization distribution across the training procedure. We can call this the “posterior”.

We can define a “prior” as some distribution over hypotheses that captures our beliefs about what hypothesis will be chosen, before we have observed any training data.

We conjecture that the KL divergence between the prior and posterior distributions is analogous to subtracting the number of dimensions of the Rashomon set from the total number of parameters. KL(Q||P) = CrossEntropy(Q,P) - H(Q), where if P is uniform, the number of bits required to encode any sample in the posterior CrossEntropy(Q,P) is proportional to the number of parameters. H(Q) is the entropy of the posterior, which would be proportional to the number of dimensions of P if P was uniform.

One piece of evidence that suggests that the KL divergence is the right way to measure this is that this term is present in the PAC-Bayes generalization bound, which has been used to prove non-vacuous (~10x the empirical error) guarantees on the generalization performance of overparameterized neural networks (Dziugaite & Roy, 2017). Here’s an example of the basic form of a PAC-Bayes bound on the expected test loss:

This operationalization (using KL to compare prior and posterior) gives us a more general definition of what we mean by the parameters “using” some degrees of freedom and not others, which can be applied to situations where we have multiple disconnected basins, or connected basins with different dimensionality.

Another thing that this operationalization gives us is the sense in which a larger “posterior” implies a shorter description length. Unfortunately, it is kind of a vacuous argument, because we have to define “shorter description length” using the implicit prior distribution. But it does reframe the problem to one of understanding the implicit prior, which we might do via understanding the biases of the parameter -> function space map.

One thing that is missing from this operationalization is why neural networks usually generalize well, even though they are sampling from the posterior instead of marginalizing over it. We would expect this to be the case if most hypotheses in the posterior generalized in a similar way (e.g. had similar prior probability), however it’s unclear whether this is true.

Another thing that is missing is a better description of the “prior”. It seems clear that there is an implicit bias in the parameter -> function space mapping or in gradient descent, which biases neural networks to tend to output certain types of functions. We will call this bias the implicit “prior” of NNs. Some evidence suggests that the details of the optimization algorithm are irrelevant to the generalization of neural networks (Video, related paper: Goldstein et al, 2020), so we expect that the bias is mostly in the parameter space -> function space mapping.

So we conjecture that we can define the implicit “prior” of neural networks as an almost uniform distribution over parameter space, with some limit on the distance from initialization. We might also use a normal distribution centered at zero. We know that this implies a highly non-uniform distribution over functions, because in the infinite width limit, the distribution is a Gaussian Process with mean zero and kernel function that depends on the architecture.

Together, the above paragraphs gives us the prediction that the posterior has most of the mass in wide basins, with the set of hypotheses in a given wide basin being dominated by smooth simple hypotheses kind of like those sampled from the Gaussian Process approximation due to the parameter -> function mapping.