I believe there are people with far greater knowledge than me that can point out where I am wrong. Cause I do believe my reasoning is wrong, but I can not see why it would be highly unfeasible to train a sub-AGI intelligent AI that most likely will be aligned and able to solve AI alignment.

My assumptions are as follows:

- Current AI seems aligned to the best of its ability.

- PhD level researchers would eventually solve AI alignment if given enough time.

- PhD level intelligence is below AGI in intelligence.

- There is no clear reason why current AI using current paradigm technology would become unaligned before reaching PhD level intelligence.

- We could train AI until it reaches PhD level intelligence, and then let it solve AI Alignment, without itself needing to self improve.

The point I am least confident in, is 4, since we have no clear way of knowing at what intelligence level an AI model would become unaligned.

Multiple organisations seem to already think that training AI that solves alignment for us is the best path (e.g. superalignment).

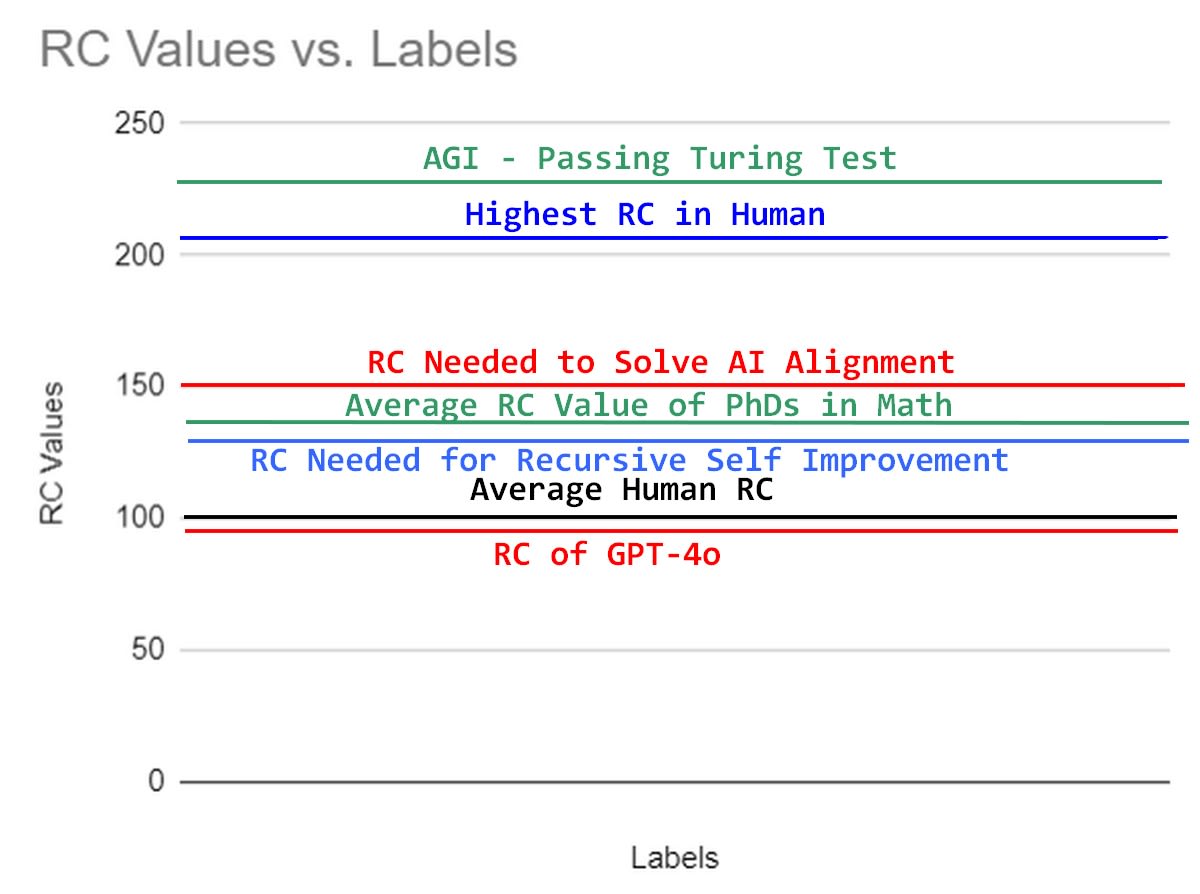

Attached is my mental model of what intelligence different tasks require, and different people have.

Figure 1: My mental model of natural research capability RC (basically IQ with higher correlation for research capabilities), where intelligence needed to align AI is above average PhD level, but below smartest human in the world, and even further from AGI.

Re. the current alignment of LLMs.

Suppose I build a Bayesian spam filter for my email. It's highly accurate at filtering spam from non-spam. It's efficient and easy to run. It's based on rules that I can understand and modify if I desire. It provably doesn't filter based on properties I don't want it to filter on.

Is the spam filter aligned? There's a valid sense in which the answer is "yes":

The filter is good at the things I want a spam filter for. It's safe for me to use, except in the case of user error. It follows Kant's golden rule - it doesn't cause problems in society if it's widely used. It's not trying to deceive me.

When people say present-day LLMs are aligned, they typically mean this sort of stuff. The LLM is good qua chatbot. It doesn't say naughty words or tell you how to build a bomb. When you ask it to write a poem or whatever, it will do a good enough job. It's not actively trying to harm you.

I don't want to downplay how impressive an accomplishment this is. At the same time, there are still further accomplishments needed to build a system such that humans are confident that it's acting in their best interests. You don't get there just by adding more compute.

Just like how a present-day LLM is aligned in ways it doesn't even make sense to ask a Bayesian spam filter to be aligned (i.e. has to reflect human values in a richer way, across a wider variety of contexts), future AI will have to be aligned in ways it doesn't even make sense to ask LLama 70B to be aligned (richer understanding and broader context still, combined with improvements to transparency and trustworthiness).

Very different in architecture, capabilities, and appearance to an outside observer, certainly. I don't know what you consider "fundamental."

The atoms inside the H-100s running gpt4 don't have little tags on them saying whether it's "really" trying to prevent war. The difference is something that's computed by humans as we look at the world. Because it's sometimes useful for us to apply the intentional stance to gpt4, it's fine to say that it's trying to prevent war. But the caveats that comes with are still very large.