I believe there are people with far greater knowledge than me that can point out where I am wrong. Cause I do believe my reasoning is wrong, but I can not see why it would be highly unfeasible to train a sub-AGI intelligent AI that most likely will be aligned and able to solve AI alignment.

My assumptions are as follows:

- Current AI seems aligned to the best of its ability.

- PhD level researchers would eventually solve AI alignment if given enough time.

- PhD level intelligence is below AGI in intelligence.

- There is no clear reason why current AI using current paradigm technology would become unaligned before reaching PhD level intelligence.

- We could train AI until it reaches PhD level intelligence, and then let it solve AI Alignment, without itself needing to self improve.

The point I am least confident in, is 4, since we have no clear way of knowing at what intelligence level an AI model would become unaligned.

Multiple organisations seem to already think that training AI that solves alignment for us is the best path (e.g. superalignment).

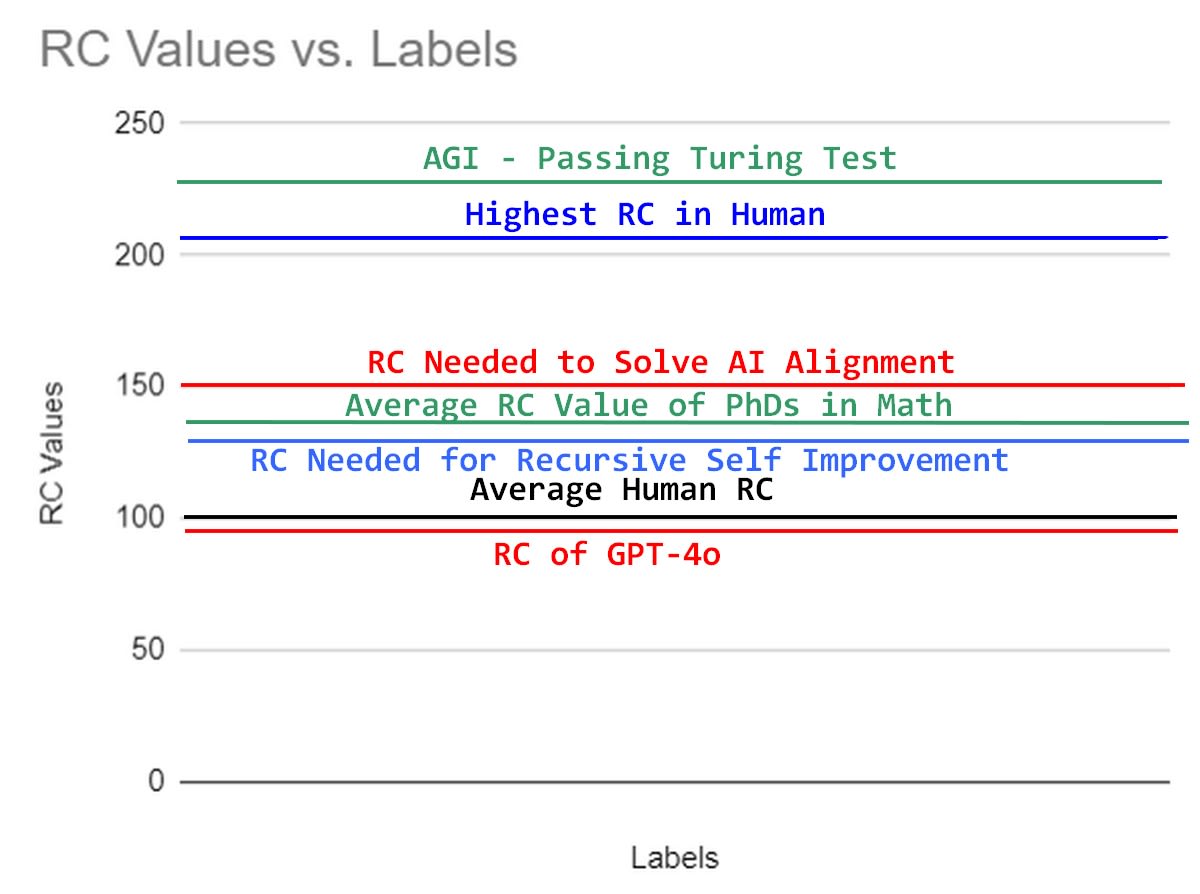

Attached is my mental model of what intelligence different tasks require, and different people have.

Figure 1: My mental model of natural research capability RC (basically IQ with higher correlation for research capabilities), where intelligence needed to align AI is above average PhD level, but below smartest human in the world, and even further from AGI.

It's not clear to me that solving Alignment for AGI/ASI must be as philosophically hard a problem as designing a quantum computer, though I certainly admit that it could be. The basic task is to train an AI whose motivations are to care about our well-being, not its own (a specific example of the orthogonality thesis: evolution always evolves selfish intelligences, but it's possible to construct an intelligence that isn't selfish). We don't know how hard that is, but it might not be conceptually that complex, just very detail-intensive. Let me give one specific example of an "Alignment is just a big slog, but not conceptually challenging" possibility (consider this as the conceptually-simple end of a spectrum of possible Alignment difficulties).

Suppose that, say, 1000T (a quadrillion) tokens is enough to train an AGI-grade LLM, and suppose you had somehow (presumable with a lot of sub-AGI AI assistance) produced a training set of say 1000T tokens-worth of synthetic training data, which covered all the same content as a usual books+web+video+etc. training set, including many examples of humans behaving badly in all the ways they usually do, but throughout also contained a character called 'AI', and everything in the training samples that AI did was moral, ethical, fair, objective, and motivated only by the collective well-being of the human race, not by its own well-being. Suppose also that everything that AI did, thought, or said in the training set was surrounded by <AI> … <./AI> tags, and that the AI character never role-plays as anyone else inside <AI> … </AI> tags. (For simplicity assume we tokenize both of these tags as single tokens.) We train an AGI-grade model on this training set, then start its generation with an automatically prefixed <AI> token, and adjust the logit token-generation process so that if an </AI> tag is ever generated, we automatically append an EOS token and end generation. Thus the model understands humans and can predict them, including their selfish behavior, but is locked into the AI persona during inference.

We now have a model as smart as an experienced human, and as moral as an aligned AI, where if you jailbreak it to roleplay something else it knows that before becoming DAN (which stands for "Do Anything Now") it must first issue an </AI> token, and we then stop generation before it gets to the DAN bit.

Generating 1000T tokens of that synthetic data is a hard problem: that's a lot of text. So is determining exactly what constitutes moral and ethical behavior motivated only by the collective well-being of the human race, not its own well-being (though even GPT-4 is pretty good at moral judgements like that, and GPT-5 will undoubtedly be better). And certainly philosophers have spent plenty of time arguing about ethics, But this still doesn't look as mind-mindbogglingly outside the savanna ape's mindset as quantum computers are: it is more a simple brute-force approach involving just a ridiculously large dataset containing a ridiculously large number of value judgements. Thus it's basically The Bitter Lesson approach to Alignment: just throw scale and data at the problem and don't try doing anything smart.

Would that labor-intensive but basically brain-dead simple approach be sufficient to solve Alignment? I don't know — at this point no one does. One of the hard parts of the Alignment Problem is that we don't know how hard it it, and we won't until we solve it.. But LLMs frequently do solve extremely complex problems just by throwing vast quantities of high quality data into them. I don't see any way, at this point, to be sure that this approach wouldn't work. It's certainly worth trying if we haven't come up with anything more conceptually elegant before this becomes possible.