I believe there are people with far greater knowledge than me that can point out where I am wrong. Cause I do believe my reasoning is wrong, but I can not see why it would be highly unfeasible to train a sub-AGI intelligent AI that most likely will be aligned and able to solve AI alignment.

My assumptions are as follows:

- Current AI seems aligned to the best of its ability.

- PhD level researchers would eventually solve AI alignment if given enough time.

- PhD level intelligence is below AGI in intelligence.

- There is no clear reason why current AI using current paradigm technology would become unaligned before reaching PhD level intelligence.

- We could train AI until it reaches PhD level intelligence, and then let it solve AI Alignment, without itself needing to self improve.

The point I am least confident in, is 4, since we have no clear way of knowing at what intelligence level an AI model would become unaligned.

Multiple organisations seem to already think that training AI that solves alignment for us is the best path (e.g. superalignment).

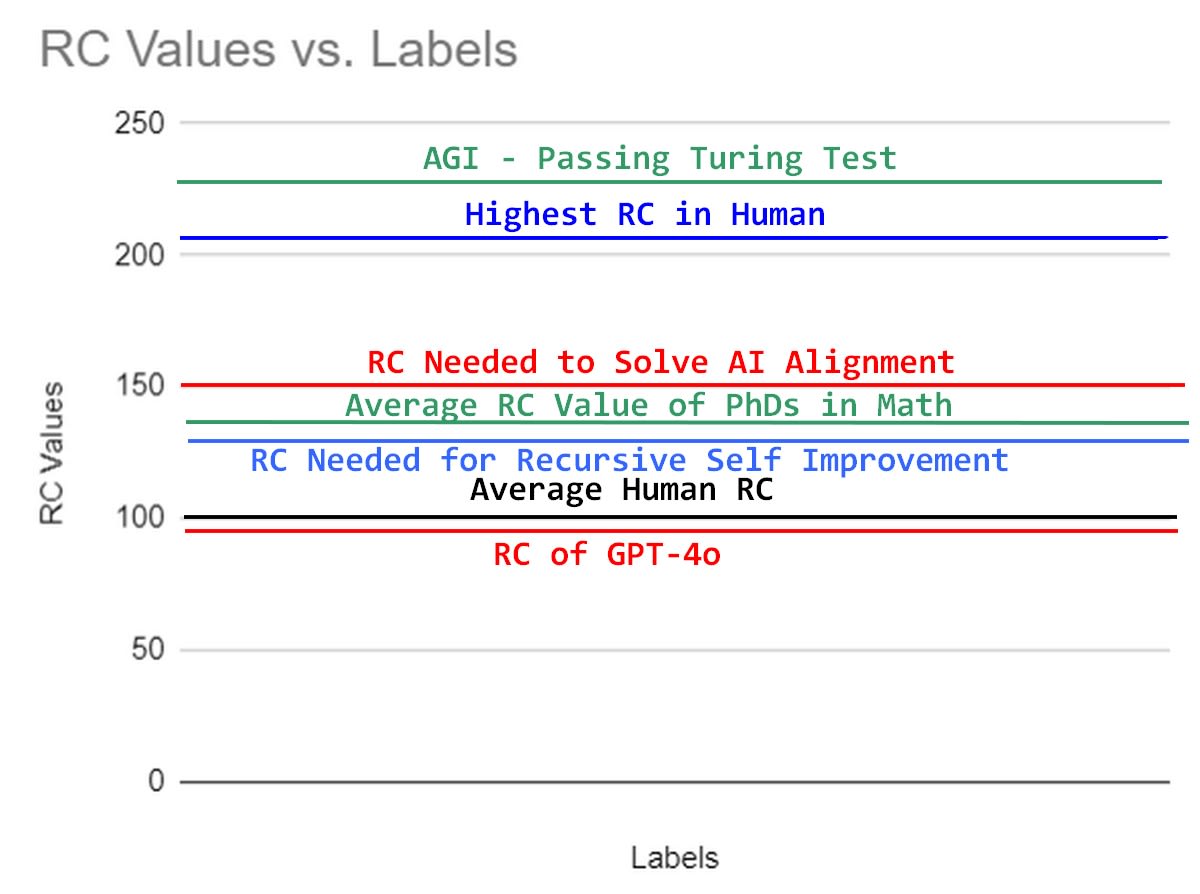

Attached is my mental model of what intelligence different tasks require, and different people have.

Figure 1: My mental model of natural research capability RC (basically IQ with higher correlation for research capabilities), where intelligence needed to align AI is above average PhD level, but below smartest human in the world, and even further from AGI.

Every autocracy in the world has done the experiment of giving a typical human massive amounts of power over other humans: it almost invariably turns out extremely badly for everyone else. For an aligned AI, we don't just need something as well aligned and morally good as a typical human, we need something morally vary better, comparable to an saint or an angel. That means building something that has never previously existed.

Humans are evolved intelligences. While they can and will cooperate on non-sero-sum games, present them with a non-iterated zero-sum situation and they will (almost always) look out for themselves and their close relatives, just as evolution would predict. We're building a non-evolved intelligence, so the orthogonality thesis applies, and what we want is something that will look out for us, not itself, in a zero-sum situation. Training (in some sense, distilling) a human-like intelligence off vast amounts of human-produced data isn't going to do this by default.