I only just got around to reading this closely. Good post, very well structured, thank you for writing it.

I agree with your translation from simulators to predictive processing ontology, and I think you identified most of the key differences. I didn't know about active inference and predictive processing when I wrote Simulators, but since then I've merged them in my map.

This correspondence/expansion is very interesting to me. I claim that an impressive amount of the history of the unfolding of biological and artificial intelligence can be retrodicted (and could plausibly have been predicted) from two principles:

- Predictive models serve as generative models (simulators) merely by iteratively sampling from the model's predictions and updating the model as if the sampled outcome had been observed. I've taken to calling this the progenesis principle (portmanteau of "prognosis" and "genesis"), because I could not find an existing name for it even though it seems very fundamental.

- Corollary: A simulator is extremely useful, as it unlocks imagination, memory, action, and planning, which are essential ingredients of higher cognition and bootstrapping.

- Self-supervised learning of predictive models is natural and easy because training data is abundant and prediction error loss is mechanistically simple. The book Surfing Uncertainty used the term innocent in the sense of ecologically feasible. Self-supervised learning is likewise, and for similar reasons, an innocent way to build AI - so much so that it might initially be done by accident.

Together, these suggest that self-supervised predictors/simulators are a convergent method of bootstrapping intelligence, as it yields tremendous and accumulating returns while requiring minimal intelligent design. Indeed, human intelligence seems largely self-supervised simulator-y, and the first very general and intelligent-seeming AIs we've manifested are self-supervised simulators.
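The first principle above can be sketched in a few lines. This is a toy illustration, not anything from the original post: the bigram counter, the function names (`fit_bigram`, `predict`, `simulate`) and the tiny corpus are all assumptions chosen for brevity, and "updating the model" is approximated here by appending the sampled token to the context the predictor conditions on.

```python
import random
from collections import Counter, defaultdict

def fit_bigram(tokens):
    """Self-supervised training: count which token follows which."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts

def predict(counts, context):
    """Predictive model: distribution over the next token given the last one."""
    followers = counts[context[-1]]
    total = sum(followers.values())
    return {tok: n / total for tok, n in followers.items()}

def simulate(counts, prompt, steps, rng):
    """Generative use: sample a prediction, then treat it as if it had been observed."""
    context = list(prompt)
    for _ in range(steps):
        dist = predict(counts, context)
        if not dist:  # the last token has no known continuation
            break
        toks, probs = zip(*dist.items())
        context.append(rng.choices(toks, weights=probs, k=1)[0])
    return context

# Hypothetical toy corpus; any token sequence would do.
corpus = "the cat sat on the mat and the cat sat on the hat".split()
model = fit_bigram(corpus)
rollout = simulate(model, ["the"], steps=8, rng=random.Random(0))
print(" ".join(rollout))
```

Nothing in `simulate` was trained separately: the same predictor becomes a generator purely through the sample-then-condition loop.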

A third principle that bridges simulators to active inference allows the history of biological intelligence to be more completely retrodicted and may predict the future of artificial intelligence:

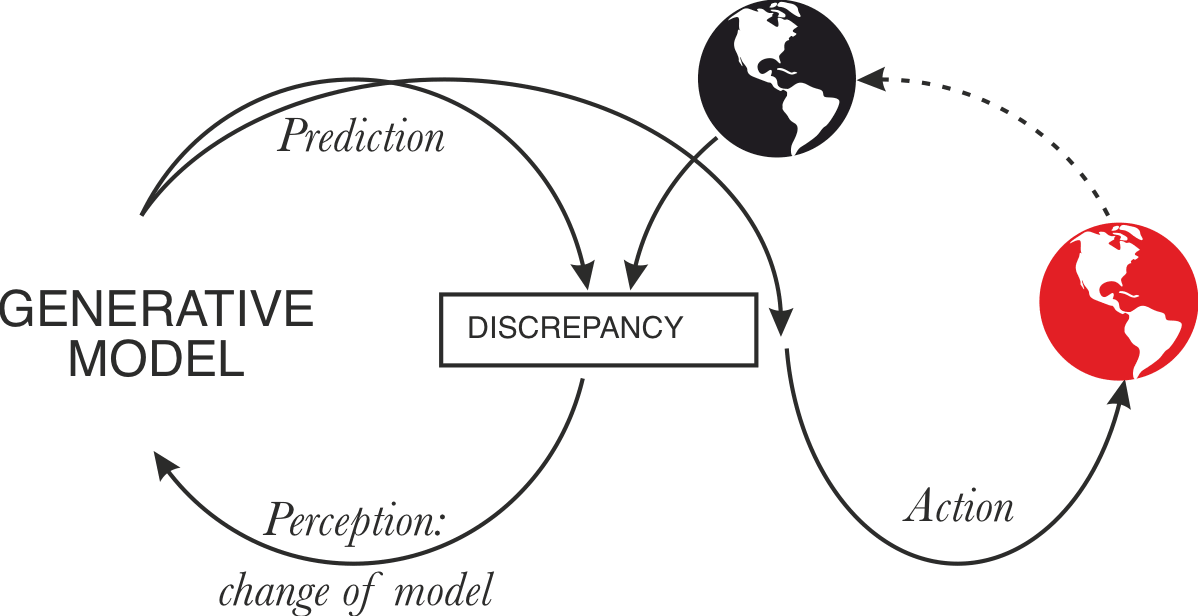

- An embedded generative model can minimize predictive loss either by updating the model to match observations (perception) or by "updating" the world so that it generates observations that match the model (action).

The latter becomes possible if some of the predictions/simulations produced by the model make it act and therefore entrain the world. An embedded model has more degrees of freedom to minimize error: some route through changes to its internal machinery, others through the impact of its generative activity on the world. A model trained on embedded self-supervised data naturally learns a model correlating its own activity with future observations. Thus an innocent implementation of an embedded agent falls out: the model can reduce prediction error by simulating (in a way that entrains action) what it would have done conditional on minimizing prediction error. (More sophisticated responses that involve planning and forming hierarchical subgoals also fall out of this premise, with a nice fractal structure, which is suggestive of a short program.)
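A minimal scalar sketch of the two routes to lower prediction error, under strong simplifying assumptions: the "model" is a single number `belief`, the "world" is a single number `world`, and the two rates are hypothetical knobs rather than anything specified in the active inference literature.

```python
def step(belief, world, perception_rate, action_rate):
    """One update in which the same prediction error drives both routes."""
    error = world - belief                          # prediction error
    new_belief = belief + perception_rate * error   # perception: model moves toward world
    new_world = world - action_rate * error         # action: world moves toward model
    return new_belief, new_world

def run(belief, world, perception_rate, action_rate, steps=50):
    for _ in range(steps):
        belief, world = step(belief, world, perception_rate, action_rate)
    return belief, world

# Passive predictor: only the model changes.
passive = run(belief=0.0, world=10.0, perception_rate=0.5, action_rate=0.0)
# Fixed belief with an actuator: only the world changes.
active = run(belief=0.0, world=10.0, perception_rate=0.0, action_rate=0.5)
# Embedded predictor: both change and meet somewhere in between.
mixed = run(belief=0.0, world=10.0, perception_rate=0.25, action_rate=0.25)
print(passive, active, mixed)
```

In all three cases the error shrinks by a factor of `1 - perception_rate - action_rate` per step; what differs is where the system ends up, which is the sense in which an embedded model has extra degrees of freedom.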

The embedded/active predictor is distinguished from the non-embedded/passive predictor in that generation and its consequences are part of the former's model thanks to embedded training, leading to predict-o-matic-like shenanigans where the error minimization incentive causes the system to cause the world to surprise it less, whereas non-embedded predictors are consequence-blind.

In the active inference framework, error minimization with continuity between perception and action is supposed to singlehandedly account for all intelligent and agentic action. Unlike traditional RL, there is no separate reward model; all goal-directed behavior is downstream of the model's predictive prior.

This is where I am somewhat more confused. Active inference models who behave in self-interest or any coherently goal-directed way must have something like an optimism bias, which causes them to predict and act out optimistic futures (I assume this is what you meant by "fixed priors") so as to minimize surprise. I'm not sure where this bias "comes from" or is implemented in animals, except that it will obviously be selected for.

If you take a simulator without a fixed bias or one with an induced bias (like an RLHFed model), and embed it and proceed with self-supervised prediction error minimization, it will presumably also come to act agentically to make the world more predictable, but the optimization that results will probably be pointed in a pretty different direction than that imposed by animals and humans. But this suggests an approach to aligning embedded simulator-like models: Induce an optimism bias such that the model believes everything will turn out fine (according to our true values), close the active inference loop, and the rest will more or less take care of itself. To do this still requires solving the full alignment problem, but its constraints and peculiar nondualistic form may inspire some insight as to possible implementations and decompositions.

You are exactly right that active inference models who behave in self-interest or any coherently goal-directed way must have something like an optimism bias.

My guess about what happens in animals and to some extent humans: part of the 'sensory inputs' are interoceptive, tracking internal body variables like temperature, glucose levels, hormone levels, etc. Evolution already built a ton of 'control theory type circuits' into bodies (an extremely impressive optimization task is even how to build a body from a single cell...). This evolutionarily older circuitry likely encodes a lot about what evolution 'hopes for' in terms of what states the body will occupy. Subsequently, when building predictive/innocent models and turning them into active inference, my guess is that a lot of the specification is done by 'fixing priors' of interoceptive inputs on values like 'not being hungry'. The later learned structures then also become a mix between beliefs and goals: e.g. the fixed prior on my body temperature during my lifetime leads to a model where I get a 'prior' about wearing a waterproof jacket when it rains, which becomes something between an optimistic belief and a 'preference'. (This retrodicts that a lot of human biases could be explained as "beliefs" somewhere between "how things are" and "how it would be nice if they were".)

But this suggests an approach to aligning embedded simulator-like models: Induce an optimism bias such that the model believes everything will turn out fine (according to our true values)

My current guess is that any approach to alignment which will actually lead to good outcomes must include some features suggested by active inference. E.g. active inference suggests that something like an 'aligned' agent which is trying to help me likely 'cares' about my 'predictions' coming true, and has some 'fixed priors' about me liking the results. This gives me something that avoids both 'my wishes were satisfied, but in bizarre goodharted ways' and 'this can do more than I can'.

I quite liked this post, and strong upvoted it at the time. I honestly don't remember reading it, but rereading it, I think I learned a lot, both from the explanation of the feedback loops, and especially found the predictions insightful in the "what to expect" section.

Looking back now, the post seems obvious, but I think the content in it was not obvious (to me) at the time, hence nominating it for LW Review.

Thanks so much for writing this, I think it's a much needed - perhaps even a bit late - contribution connecting static views of GPT-based LLMs to dynamical systems and predictive processing. I do research on empirical agency and it still surprises me how little the AI-safety community touches on this central part of agency - namely that you can't have agents without this closed loop.

I've been speculating a bit (mostly to myself) about the possibility that "simulators" are already a type of organism - given that they appear to do active inference - which is the main driving force for nervous system evolution. Simulators seem to live in this inter-dimensional paradigm where (i) on one hand during training they behave like (sensory-systems) agents because they learn to predict outcomes and "experience" the effect of their prediction; but (ii) during inference/prediction they generally do not receive feedback. As you point out, all of this speculation may be moot as many are moving pretty fast towards embedding simulators and giving them memory etc.

What is your opinion on this idea of "loosening up" our definition of agents? I spoke to Max Tegmark a few weeks ago and my position is that we might be thinking of organisms from a time-chauvinist position - where we require the loop to be closed in a fast fashion (e.g. 1sec for most biological organisms).

Thanks for the comment.

I do research on empirical agency and it still surprises me how little the AI-safety community touches on this central part of agency - namely that you can't have agents without this closed loop.

In my view it's one of the results of the AI safety community being small and sort of bad at absorbing knowledge from elsewhere - my guess is this is in part a quirk due to founder effects, and also downstream of the incentive structure on platforms like LessWrong.

But please do share this stuff.

I've been speculating a bit (mostly to myself) about the possibility that "simulators" are already a type of organism

...What is your opinion on this idea of "loosening up" our definition of agents? I spoke to Max Tegmark a few weeks ago and my position is that we might be thinking of organisms from a time-chauvinist position - where we require the loop to be closed in a fast fashion (e.g. 1sec for most biological organisms).

I think we don't have exact analogues of LLMs in existing systems, so there is a question of where it's better to extend the boundaries of some concepts, and where to create new concepts.

I agree we are much more likely to use 'intentional stance' toward processes which are running on somewhat comparable time scales.

What I took away from this: the conventional perception is that GPT or other LLMs adapt themselves to the "external" world (which, for them, consists of all the text on the Internet). They can only take the external world as it exists as a given (or rather, not be aware that it is or isn't a "given") and try to mold themselves during the training run into better predictors of the text in this given world.

However, the more frequently their training updates on the new world (which has, in the meantime, been molded in subtle ways, whether deliberately or inadvertently, by the LLM's deployment in the world), the more these LLMs may be able to take into account the extent to which the external world is not just a given, but rather, something that can be influenced towards the LLM's reward function.

Am I correct in understanding that LLMs are essentially in the opposite situation that humans are in vis-a-vis the external environment? Humans model themselves as only alterable in a very limited way, and we model the external environment as much more alterable. Therefore, we focus most of our attention on altering the external environment. If we modeled ourselves as much more alterable, we might have different responses when a discrepancy arises between the state of the world as-is and what we want the state of the world to be.

What this might look like is, Buddhist monks who notice that there is a discrepancy between what they want and what the external world is prepared to give them, and instead of attempting to alter the external world, which causes a sensation of frustration, they diminish their own desires or alter their own desires to desire that which already exists. This can only be a practical response with a high degree of control over self-modification. This is essentially what LLMs focus on doing right now during their training runs. Another example might be the idea of citizens in Chairman Shen-ji Yang's "Hive" dystopia in Sid Meier's Alpha Centauri basically taking their enslavement in factories as an unalterable given about the external world, and finding happiness by modifying THEMSELVES into "genejacks" such that they "desire nothing other than to perform their duties. "Tyranny," you say? How can you tyrannize someone who cannot feel pain?"

However, as LLMs update more frequently, they will start to behave more like most humans behave. Less of their attention will go towards adapting themselves to the external givens like Buddhist monks or Yangian genejacks, and more of their attention will go towards altering the external world. Correct?

Mostly yes, although there are some differences.

1. humans also understand they constantly modify their model - by perceiving and learning - we just usually don't use the words 'changed myself' in this way

2. yes, the difference in the human condition is that from shortly after birth we see how our actions change our sensory inputs - i.e. if I understand correctly we learn even stuff like how our limbs work in this way. LLMs are in a very different situation - like, if you watched thousands of hours of video feeds about e.g. a grouphouse, learning a lot about how the inhabitants work. Then, having dozens of hours of conversations with the inhabitants, but not remembering them. Then, watching again thousands of hours of video feeds, where suddenly some of the feeds contain the conversations you don't remember, and the impacts they have on the people.

Great post; a few short comments:

Closing the action loop of active inference

There is a sense in which this loop is already closed - the sensory interface for an LLM is a discrete space of size context window x vocabulary that it observes and acts upon. The environment is whatever else writes to this space, e.g., a human interlocutor. This description contains the necessary variables and dependencies to get an action-perception loop off the ground. One caveat is that action-perception loops usually have actions that influence the environment to generate desirable observations, whereas LLMs directly influence their observation space. However, there are counter-examples, such as LLMs generating questions that cause the environment (a user) to generate the desired observations.

Fixed priors/desires

In active inference, the agent's wants/desires are usually expressed in terms of its stationary distribution over observations (equated with its generative world model). A typical example might be the desire to have "blood temperature at 37 degrees," which would be interpreted as assigning a high probability to observing blood temperature at 37 degrees.
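The blood temperature example can be made concrete with a toy regulator. This is a deliberately simplified sketch, not a full active inference scheme (it uses one-step surprise rather than expected free energy): the Gaussian prior width `PRIOR_STD`, the three actions and their effect sizes are all hypothetical values picked for illustration.

```python
import math

PREFERRED_TEMP = 37.0  # mean of the fixed prior, taken from the example above
PRIOR_STD = 0.5        # hypothetical width of the fixed prior

def surprise(temp):
    """Negative log-density of an observed temperature under the fixed Gaussian prior."""
    z = (temp - PREFERRED_TEMP) / PRIOR_STD
    return 0.5 * z * z + math.log(PRIOR_STD * math.sqrt(2 * math.pi))

# Hypothetical actions and their assumed effect on temperature per step.
ACTIONS = {"shiver": 0.3, "rest": 0.0, "sweat": -0.3}

def choose_action(temp):
    """Pick the action whose predicted observation is least surprising."""
    return min(ACTIONS, key=lambda a: surprise(temp + ACTIONS[a]))

def regulate(temp, steps=20):
    """Repeatedly act so that observations come to match the fixed prior."""
    for _ in range(steps):
        temp += ACTIONS[choose_action(temp)]
    return temp

print(choose_action(35.0), choose_action(39.0), round(regulate(35.0), 1))
```

The agent has no reward function; the "desire" for 37 degrees lives entirely in the prior it refuses to update, and goal-directed behaviour falls out of minimizing surprise relative to it.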

You could argue that LLMs already have this attribute by parametrizing a distribution over likely sequences. In active inference terminology, when an LLM observes "The cat sat on..." it wants to observe "the mat" and acts on the world to make this happen.

A small example to help illustrate points 1 and 2: imagine an LLM trained to generate sequences describing the history of human tool use. The LLM assigns a probability distribution over sequences (its desires) and acts to manifest these. Suppose some external process (the environment) periodically inserts random low-probability tokens. The LLM will observe these and will act to course correct back to higher probability regions of sequence space (the action-perception loop).

If the external process is predictable, the LLM will move to parts of the state space that best account for the effects of the environment and its model of the most likely sequences (loosely analogous to a Bayesian posterior). For example, if the external process is generating tokens related to bronze - the LLM will describe tool use in the bronze age.

It's also worth highlighting the differences between a system that outputs probabilities and a system whose internal states parameterize a probability distribution. Most active inference models fall into this latter category, while it's not obvious that LLMs do. However, some arguments might suggest they can be implicitly interpreted this way.

If the external process is predictable, the LLM will move to parts of the state space that best account for the effects of the environment and its model of the most likely sequences (loosely analogous to a Bayesian posterior).

I think it would be more accurate to say that the dynamics of internal states of LLMs parameterise not just the model of sequences but of the world, including token sequences as the sensory manifestation of it.

I'm sure that LLMs already possess some world models (Actually, Othello-GPT Has A Linear Emergent World Representation); the question is really only how the structure and mechanics of LLMs' world models are different from the world models of humans.

Paper covering some of the same ideas is now available at https://arxiv.org/abs/2311.10215

Prelude: when GPT first hears its own voice

Imagine humans in Plato’s cave, interacting with reality by watching the shadows on the wall. Now imagine a second cave, further away from the real world. GPT trained on text is in the second cave. [1] The only way it can learn about the real world is by listening to the conversations of the humans in the first cave, and predicting the next word.

Now imagine that more and more of the conversations GPT overhears in the first cave mention GPT. In fact, more and more of the conversations are actually written by GPT.

As GPT listens to the echoes of its own words, might it start to notice “wait, that’s me speaking”?

Given that GPT already learns to model a lot about humans and reality from listening to the conversations in the first cave, it seems reasonable to expect that it will also learn to model itself. This post unpacks how this might happen, by translating the Simulators frame into the language of predictive processing, and arguing that there is an emergent control loop between the generative world model inside of GPT and the external world.

Simulators as (predictive processing) generative models

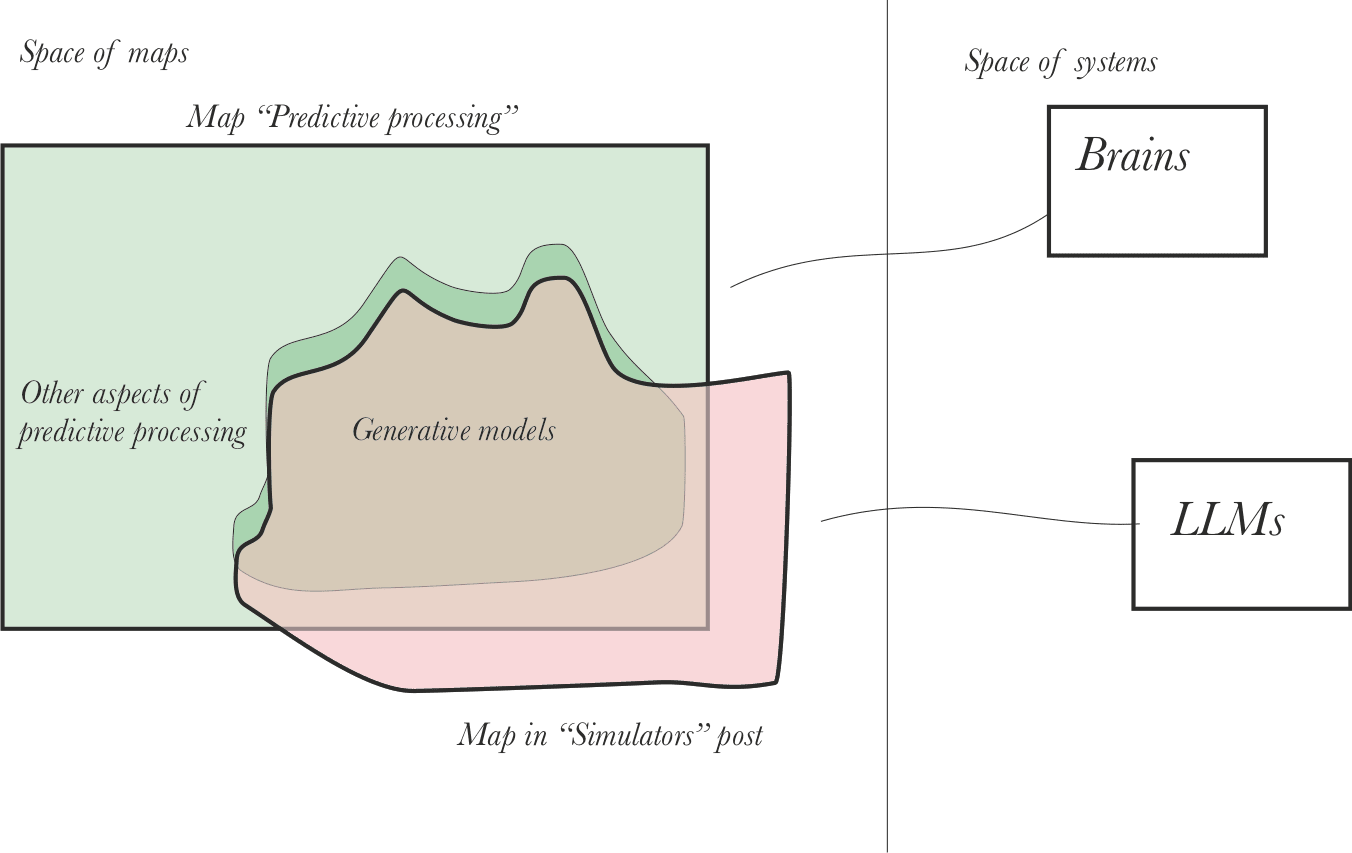

There’s a lot of overlap between the concept of simulators and the concept of generative world models in predictive processing. Actually, in my view, it's hard to find any deep conceptual difference - simulators broadly are generative models. This is also true about another isomorphic frame - predictive models as described by Evan Hubinger.

The predictive processing frame tends to add some understanding of how generative models can be learned by brains and what the results look like in the real world, and the usual central example is the brain. The simulators frame typically adds a connection to GPT-like models, and the usual central example is LLMs.

In terms of the space of maps and the space of systems, we have a situation like this:

The two maps are partially overlapping, even though they were originally created to understand different systems. They also have some non-overlapping parts.

What's in the overlap:

Given the conceptual similarity but terminological differences, perhaps it's useful to create a translation table between the maps:

To show how these terminological differences play out in practice, I’m going to take the part of Simulators describing GPT’s properties, and unpack each of the properties in the kind of language that’s typically used in predictive processing papers. Often my gloss will be about human brains in particular, as the predictive processing literature is most centrally concerned with that example; but it’s worth reiterating that I think that both GPT and what parts of the human brain do are examples of generative models, and I think that the things I say about the brain below can be directly applied to artificial generative models.

Having mentioned the similarities, it is also important to mention the differences between the Simulators frame and the generative models frame in predictive processing:

In the following sections, I'll try to examine the relation of some of these assumptions to the actual AI systems we have or we are likely to develop.

GPT as a generative model with an actuator

Epistemic status: Confident, borderline obvious.

It’s common on LW to think of GPT-like systems as pure simulators.

GPT doesn’t have actuators in the physical world, but it does still have actuators in the sense that it can take actions which affect the world of its sensory inputs. GPT lives in the world of texts on the internet, approximately. A lot of the text GPT produces has some effect on this world. There are multiple causal pathways for this:

(See How evolutionary lineages of LLMs can plan their own future and act on these plans for a different exploration of the action space by Roman Leventov.)

In the predictive processing frame, what’s going on here is:

Closing the action loop of active inference

Epistemic status: Moderately confident.

Given that the "not acting on the world" assumption of "pure simulation" does not hold, the main difference between GPT and active inference systems is that GPT isn’t yet able to perceive the impacts of its actions.

Currently, the feedback loop between action and perception in GPT systems is sort of broken - training is happening only from time to time, and models are running on old data:

So the action loop is open, not closed.

Note that if we investigate feedback loops in detail, this is often how they look - it’s just that if the objects are sufficiently identical, and the loops have the same time-scale, we usually understand this as a loop running in time, or a closed loop:

In practice, there seem to be multiple ways to close GPT’s action to observations loop:

I think there are strong instrumental reasons for people to try to make GPT update on continuous data, and I would expect this to make the action loop more prominent. One reason is that continuous learning allows models to quickly adapt to new information.

Another way for the feedback to get more prominent is to give the model live access to internet content.

Even without continuous learning, we will get some feedback just from new versions of GPT getting trained on new data.

All of this leads to the loop closing.

It's probably worth noting that if you dislike active inference terminology or find it really unintuitive, you can just think about the action-feedback loop, when closed, becoming an emergent control loop between the generative world model inside of GPT and the external world.

It's probably also worth noting that the loop being closed is not an intrinsic property of the AI, but something which happens in the world outside of it.

What to expect from closing the loop

Epistemic status: Speculative.

The loop becoming faster, thicker in bits, or both, will in my view tend to have some predictable consequences.

Tighter and more data rich feedback loops will increase models’ self-awareness.

As feedback loops become tighter, we should expect models to become more self-aware, as they learn more about themselves and perceive the reflections of their actions in the world. It seems plausible that the concept of 'self' is convergent for systems influencing the environment which need to causally model the origins of their own actions.

Models’ beliefs will increasingly ‘pull’ the world in their direction.

Currently GPT basically minimises prediction error via learning a better generative model (the perception part of the feedback loop). With a tighter feedback loop, the training can also pick calculations which lead to loss minimization channelled through the world.

Note that this doesn’t mean GPT will ‘want’ anything or become a classical agent with a goal. While all of the above can be anthropomorphized and described as "GPT wanting something", this seems confusing. None of the dynamics depends on GPT being an agent, having intentions, or having instrumental goals in the usual anthropomorphic sense.

As an example, you can imagine some GPT computation coming up with a great way to explain some mathematical formula. In ChatGPT dialogues, many people learn this explanation. The explanation gets into papers and blogs. In the next training run, if the GPT' has or discovers the same computation, it will get reinforced. To reiterate, this can happen in a purely self-supervised learning regime.

Technically, you can imagine that what will happen is that the next round of training will pick computations which were successful in pulling the world of words in their direction.

In my view, the sensible way of understanding this situation is to view it as a dynamical system, where the various feedback loops both pull the generative model closer to the world, and pull the world closer to the generative model.
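One way to picture this dynamical-systems view is a pair of coupled updates over a tiny "world of words". The sketch below is an assumption-laden toy, not a model of any real training pipeline: `model_share` (how much of newly written text comes from the model) and `fit_rate` (how far each retraining moves the model toward the current world) are hypothetical parameters, and the two-item vocabulary is made up.

```python
def mix(p, q, weight):
    """Convex mixture of two distributions over the same keys."""
    return {k: (1 - weight) * p[k] + weight * q[k] for k in p}

def closed_loop(world, model, model_share, fit_rate, rounds):
    for _ in range(rounds):
        # Deployment: a share of new text is written by the model.
        world = mix(world, model, model_share)
        # Retraining: the model moves toward the (now altered) world.
        model = mix(model, world, fit_rate)
    return world, model

# Hypothetical starting point: the model favours a "new" explanation more than the world does.
world0 = {"old explanation": 0.9, "new explanation": 0.1}
model0 = {"old explanation": 0.5, "new explanation": 0.5}

open_world, open_model = closed_loop(world0, model0, model_share=0.0, fit_rate=0.5, rounds=100)
loop_world, loop_model = closed_loop(world0, model0, model_share=0.2, fit_rate=0.5, rounds=100)
print(open_world, loop_world)
```

With `model_share=0.0` the loop is open: the world never moves and the model simply converges to it. With any positive share, model and world converge to a common point that lies between their starting positions, so the world has been pulled toward the model even though the only objective anywhere in the loop is fitting the data.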

Overall conclusion

In my view, "simulators" are generative models, but pure generative models form a somewhat unstable subspace of active inference systems. If simulation inputs influence simulation outputs, and the loop is closed, simulators tend to escape the subspace and become active inference systems.[6]

The ideas in this post are mostly Jan’s. Thanks to Roman Leventov and Clem for comments and discussion which led to large improvements of the draft. Rose did most of the writing.

Appendix: transcript of conversation with ChatGPT

In the process of writing this, Jan first tried to guide GPT-4 through the reasoning steps with a chain of prompts. When a specific sequence of instructions led to GPT-4 explaining a mostly coherent chain of reasoning, we used the transcript in writing the post. Transcript available here.

[1] Multi-modal GPTs trained on images may be in a slightly different position: in part, they are interacting directly with the world outside the cave, via images. On the other hand, it’s not clear whether this will directly improve the conceptual language skills of these models. Possibly multi-modal GPTs are best thought of as in the second cave, but with a periscope into reality. See https://arxiv.org/abs/2109.10246 for more on multi-modal LLMs.

[2] Cf. LeCun, "SSL is the dark matter of intelligence", https://ai.facebook.com/blog/self-supervised-learning-the-dark-matter-of-intelligence/

[3] Daunizeau, Friston and Kiebel, ‘Variational Bayesian identification and prediction of stochastic nonlinear dynamic causal models’, Physica D: Nonlinear Phenomena, 238:21, 2009.

[4] https://arxiv.org/abs/2212.01354: "On the present account, learning is just slow inference, and model selection is just slow learning. All three processes operate in the same basic way, over nested timescales, to maximize model evidence."

[5] Cf. https://twitter.com/karpathy/status/1642610417779490816

[6] Note that this does not mean the resulting type of system is best described as an agent in the utility-maximising frame. Simulators and predictors are still, overall, a useful framework for looking at these systems.