SWE Automation Is Coming: Consider Selling Your Crypto

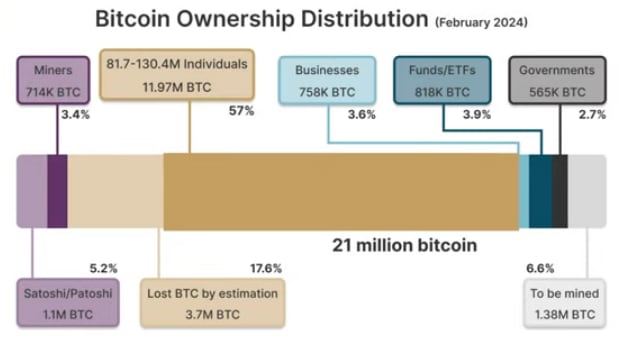

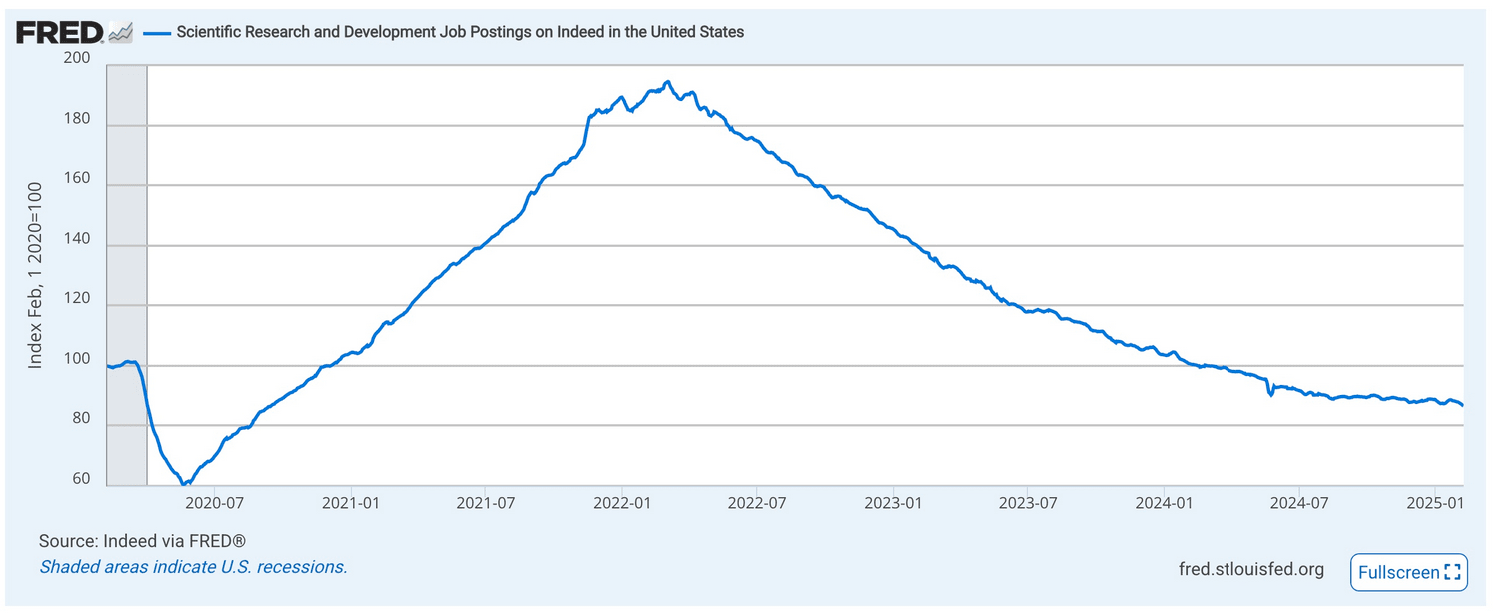

[legal status: not financial advice™]

Most crypto is held by individuals.[1]

Individual crypto holders are disproportionately tech savvy, often programmers. Source: well known, just look around you.

AI is starting to eat the software engineering market. It is already eating entry-level jobs, which doesn't matter that much for crypto markets.[2] But judging...

Having done a bunch of this, yes, great idea. You can have pretty spectacular impact, because the motivation boost and arc of "someone believes in me" is much more powerful than the one you get from funding stress.

My read is that good-taste grants of this type are dramatically, dramatically more impactful than those by larger grantmakers. For example, I proactively found and funded the upskilling grant of a math PhD who found glitch tokens, which was for a while the third most upvoted research on the Alignment Forum. This cost $12k for, I think, one year of upskilling, as frugal geniuses are not that rare if you hang out in the right places.

However!...