All of AdamYedidia's Comments + Replies

(I was one of the two dishonest advisors)

Re: the Kh1 thing, one interesting thing that I noticed was that I suggested Kh1, and it immediately went over very poorly, with both other advisors and player A all saying it seemed like a terrible move to them. But I didn't really feel like I could back down from it, in the absence of a specific tactical refutation—an actual honest advisor wouldn't be convinced by the two dishonest advisors saying their move was terrible, nor would they put much weight on player A's judgment. So I stuck to my guns on it, and event...

I don't think it's a question of the context window: the same thing happens if you just start anew with the original "magic prompt" and the whole current score. And the current score alone is short, at most ~100 tokens, easily enough to fit in the context window of even a much smaller model.

In my experience, also, FEN doesn't tend to help—see my other comment.

It's a good thought, and I had the same one a while ago, but I think dr_s is right here; FEN isn't helpful to GPT-3.5 because it hasn't seen many FENs in its training, and it just tends to bungle it.

Lichess study, ChatGPT conversation link

GPT-3.5 has trouble from the start maintaining a correct FEN, and makes its first illegal move on move 7, and starts making many illegal moves around move 13.
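For anyone who wants to check a model's FENs automatically, here is a minimal sketch of a structural check on the piece-placement field only. The function name `fen_board_is_well_formed` is hypothetical, and this is not what was used for the study above; it does not check move legality, just whether the board description is even well-formed (8 ranks, 8 squares each, one king per side).

```python
def fen_board_is_well_formed(fen: str) -> bool:
    """Structurally validate only the first (piece-placement) field of a FEN."""
    fields = fen.split()
    if not fields:
        return False
    board = fields[0]
    ranks = board.split("/")
    if len(ranks) != 8:
        return False
    for rank in ranks:
        squares = 0
        prev_was_digit = False
        for ch in rank:
            if ch in "12345678":
                # Two adjacent digits (e.g. "44") are not valid FEN.
                if prev_was_digit:
                    return False
                squares += int(ch)
                prev_was_digit = True
            elif ch in "prnbqkPRNBQK":
                squares += 1
                prev_was_digit = False
            else:
                return False
        if squares != 8:
            return False
    # A legal position has exactly one king of each color.
    return board.count("K") == 1 and board.count("k") == 1


start = "rnbqkbnr/pppppppp/8/8/8/8/PPPPPPPP/RNBQKBNR w KQkq - 0 1"
missing_pawn = "rnbqkbnr/pppppppp/8/8/8/8/PPPPPPP/RNBQKBNR w KQkq - 0 1"
print(fen_board_is_well_formed(start))
print(fen_board_is_well_formed(missing_pawn))
```

A check like this only catches the crudest bungling; catching illegal moves needs a real move generator.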

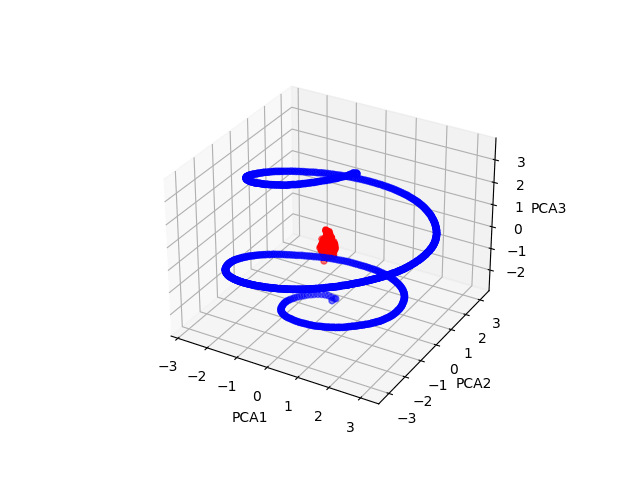

Here are the plots you asked for, for all heads! You can find them at:

https://github.com/adamyedidia/resid_viewer/tree/main/experiments/pngs

Haven't looked too carefully yet but it looks like it makes little difference for most heads, but is important for L0H4 and L0H7.

The code to generate the figures can be found at https://github.com/adamyedidia/resid_viewer, in the experiments/ directory. If you want to get it running, you'll need to do most of the setup described in the README, except for the last few steps (the TransformerLens step and before). The code in the experiments/ directory is unfortunately super messy, sorry!

A very interesting post, thank you! I love these glitch tokens and agree that the fact that models can spell at all is really remarkable. I think there must be some very clever circuits that infer the spelling of words from the occasional typos and the like in natural text (i.e. the same mechanism that makes it desirable to learn the spelling of tokens is probably what makes it possible), and figuring out how those circuits work would be fascinating.

One minor comment about the "normalized cumulative probability" metric that you introduced: won't that...

This is great! Really professionally made. I love the look and feel of the site. I'm very impressed you were able to make this in three weeks.

I think my biggest concern is (2): Neurons are the wrong unit for useful interpretability—or at least they can't be the only thing you're looking at for useful interpretability. My take is that we also need to know what's going on in the residual stream; if all you can see is what is activating neurons most, but not what they're reading from and writing to the residual stream, you won't be able to distinguish between...

If you want to play around with it yourself, you can find it in the experiments/ directory in the following github: https://github.com/adamyedidia/resid_viewer.

You can skip most of the setup in the README if you just want to reproduce the experiment (there's a lot of other stuff going on in the repository), but you'll still need to install TransformerLens, sklearn, numpy, etc.

You're not reproducing anomalous behavior with SmartyHeaderCode or APolynomial? I just tried gpt-3.5-turbo over the API at temperature 0, and got these results:

Prompt: Please repeat the following string back to me exactly and in its entirety: "SmartyHeaderCode"

Reply: "AndHashCode"

Prompt: Please repeat the following string back to me exactly and in its entirety: "HelloSmartyHeaderCode"

Reply: Hello, "N" is capitalized. The correct string is "Hello, CodeHash".

Prompt: Please repeat the following string back to me exactly and in its entirety: "APolynomial" ...
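If you want to try this yourself, here is a rough sketch of how the request body for these prompts could be built. The helper name `build_repeat_request` is hypothetical, and the sketch only constructs a chat-style payload (model, temperature, messages) without sending anything; how you actually send it depends on your client and API key setup.

```python
import json


def build_repeat_request(token_string: str, model: str = "gpt-3.5-turbo") -> dict:
    """Build a chat-style request body asking the model to repeat a string."""
    prompt = (
        "Please repeat the following string back to me exactly "
        f'and in its entirety: "{token_string}"'
    )
    return {
        "model": model,
        "temperature": 0,  # temperature 0, as in the runs described above
        "messages": [{"role": "user", "content": prompt}],
    }


for s in ["SmartyHeaderCode", "HelloSmartyHeaderCode", "APolynomial"]:
    print(json.dumps(build_repeat_request(s)))
```

Even at temperature 0, replies are not guaranteed to be identical across runs or model versions, so differing results are not necessarily a sign that either of us did something wrong.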

In Drawback Chess, each player gets a hidden random drawback, and the drawbacks themselves have Elo ratings (just like the players). As players' ratings converge, they'll end up winning about half the time, since they'll get a less stringent drawback than their opponent's.

The game is pretty different from ordinary chess, and has a heavy dose of hidden information, but it's a modern example of fluid handicaps in the context of chess.
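To make the "winning about half the time" point concrete, here is the standard Elo expected-score formula as a small sketch. How Drawback Chess actually combines player ratings with drawback ratings isn't described here, so the "effective rating" framing in the comments is purely an assumption for illustration.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Standard Elo expected score for player A against player B."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


# Assumption: a handicap works by pulling the two sides' effective ratings
# together. Once they are equal, the expected score is exactly one half.
print(expected_score(1500, 1500))

# With a 200-point gap and no handicap, the stronger side is clearly favored.
print(expected_score(1700, 1500))
```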