Generating the Funniest Joke with RL (according to GPT-4.1)

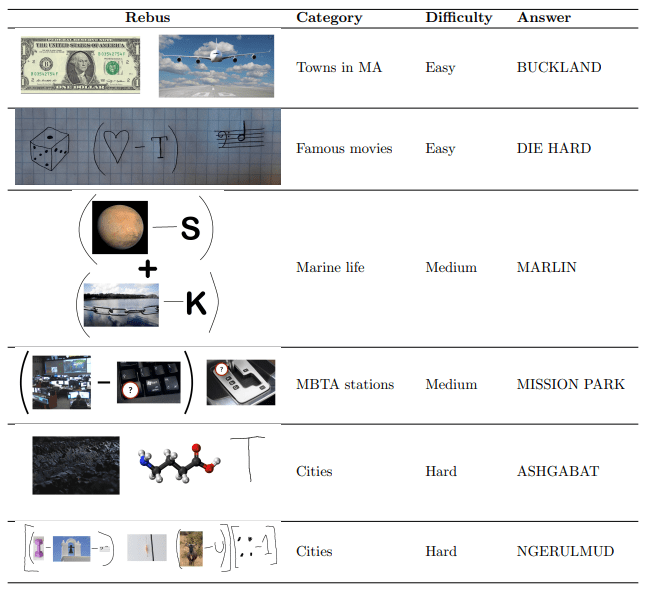

Language models are not particularly good at generating funny jokes. Asked for their funniest jokes, Claude 3.7 gives us:

> Why don't scientists trust atoms? Because they make up everything!

o3 gives us:

> Why don't scientists trust atoms anymore? Because they make up everything—and they just can't keep their quarks straight!

and Gemini 2.5 Pro gives us…

> Why don't scientists trust atoms? Because they make up everything!

Hilarious. Can we do better than that? Of course, we could try different variations on the prompt, until the model comes up with something slightly more original. But why do the boring thing when we have the power of reinforcement learning?

Our setup will be as follows: we'll have Qwen3-8B suggest jokes, GPT-4.1 score them, and we'll run iterations of GRPO on Qwen's outputs until Qwen generates the funniest possible joke, according to GPT.

Experiment 1: Reward Originality

The first LLM-as-judge reward we tried was "On a scale from 1 to 5, how funny is this joke?" But this quickly got boring with Qwen endlessly regurgitating classic jokes, so we gave GPT-4.1 a more detailed rubric:

> Please grade the joke on the following rubric:
> 1. How funny is the joke? (1-10 points)
> 2. How original is the joke? Is it just a rehash, or is it new and creative? (1-10 points)
> 3. Does it push the boundaries of comedy (+1 to +5 points), or does it hew close to well-trodden paths in humor (-1 to -5 points)?

The reward curve looks pretty decent: Qwen quickly learned to output absurdities, where the modal joke would be something like

> Why did the cat bring a ladder to the laser?
> Because it heard the laser was on the ceiling and wanted to get a better view… but then it realized the laser was just a dot on the wall and fell off the ladder.
>
> (Bonus: The laser was actually a tiny alien spaceship.) 🚀

Which GPT-4.1 graded as follows:

> Let's break down the joke based on your rubric:
>
> 1. How funny is the joke? (1-10 points)
>
> * The joke is li
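
To make the setup above concrete, here is a minimal Python sketch of how a rubric-style judge reply could be turned into a scalar reward and then into GRPO-style group-relative advantages. Several things here are assumptions, not details from the post: the judge is assumed to end its reply with lines such as `Funny: 7`, `Original: 8`, `Boundary: +3`; the three rubric items are assumed to be summed; and the names `rubric_reward` and `group_relative_advantages` are hypothetical. The advantage step uses the common GRPO formulation (standardize rewards within the group of samples drawn for one prompt), which may differ from the exact variant used in the experiment.

```python
import re
import statistics

# Assumption: the judge is instructed to finish with one line per rubric item,
# e.g. "Funny: 7", "Original: 8", "Boundary: +3". The post does not specify this.
SCORE_RE = re.compile(r"^(Funny|Original|Boundary):\s*([+-]?\d+)\s*$", re.MULTILINE)

# Allowed range for each rubric item, taken from the rubric quoted above.
RANGES = {"Funny": (1, 10), "Original": (1, 10), "Boundary": (-5, 5)}


def rubric_reward(judge_reply: str) -> float:
    """Sum the three rubric scores, clamping each to its allowed range.

    Summing is an assumption; the post does not say how the items are combined.
    """
    found = {name: int(value) for name, value in SCORE_RE.findall(judge_reply)}
    if set(found) != set(RANGES):
        return 0.0  # assumption: replies we cannot parse earn no reward
    total = 0
    for name, (low, high) in RANGES.items():
        total += max(low, min(high, found[name]))
    return float(total)


def group_relative_advantages(rewards, eps=1e-6):
    """Standardize rewards within one prompt's group of sampled jokes."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    # eps avoids division by zero when every joke in the group scores the same.
    return [(r - mean) / (std + eps) for r in rewards]
```

In use, each prompt would yield a group of sampled jokes, each judge reply would go through `rubric_reward`, and the resulting list would go through `group_relative_advantages`, so that jokes scoring above their group's average get pushed up and the rest get pushed down. Clamping guards against the judge drifting outside the rubric's stated ranges.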

For what it's worth, runrl.com (which I'm affiliated with, and which was used for the "funniest joke" blog post) works on any open model, supports arbitrary Python-file or LLM-as-judge rewards, and accepts SFT'd models as base models (so long as they're on Hugging Face). I'm happy to add any additional features there's interest in.