All of Alex Mallen's Comments + Replies

Scaling Laws for Reward Model Overoptimization is relevant empirical work for understanding how optimality and pretraining priors weigh against each other as a function of variables like reward quality, amount of optimization against reward, and policy size.

I tentatively think these results suggest that if >90% of training compute is used for outcome-based RL, then most errors in the reward would be exploited. This pushes in favor of hypotheses 4 and 5 (reward-seeking and reward-seeking by proxy), though perhaps people will notice and fix these reward-hacking issues.

A genre of win-conditions that I think this post doesn't put enough weight on:

The AIs somewhere in between TAI and superintelligence (AIs that are controllable and/or not egregiously misaligned) generate convincing evidence of risks from racing to superintelligence. If people actually believed these risks, they would act more cautiously, modulo "I'll do it safer than they will". People don't currently believe in the risks much, and IMO this is pretty counterfactual to how little they're currently doing to mitigate them.

AI Control helps a bit here by allowing us to get more work (e.g. on model organisms) done at the relevant time, though my point pushes more strongly in favor of other directions of work.

Really great list!

- It might be that sampling from the model sometimes samples an alignment faking persona and sometimes doesn't. Then, sampling several times to get an ensemble is helpful.

This could be generalized to any kind of inconsistency that leads to the model not always choosing to lie. E.g., the model makes reasoning errors some fraction of the time, has imperfect situational awareness, or picks inconsistent collusion signals.

You can also resample during red-teaming to increase the effective length of the upfront red-teaming-of-monitor period, forcing the model to have more consistent goals, reasoning, situational awareness, and Schelling points to evade being caught.

The general concern here is that your human auditors might not be perfect. I think in practice when making a safety case for a deployment, it would make sense to run a red-team/blue-team game with a human/AI red-team and your real auditors to see if your auditors are reliable.

I'm not sure how the proposed steganographic attack would work though. How do you ensure that your backdoored code will hash to XYZ if you can't see the backdoor opportunity until after you've decided on XYZ?

(Not an author, but Buck suggested I look at this)

I think there are a few reasons why it's not clear from these results whether the steganography results introduced would reduce safety in an untrusted monitoring control protocol like that in Greenblatt et al.:

- The untrusted model needs to reliably collude in order to defeat the blue-team because if it messes up once during the upfront evaluation period (30 problems), it loses. Having a 90% chance of communicating correctly to the monitor on each problem gives a 0.9^30 = 4% chance of passing the upfron...
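The 0.9^30 figure in the bullet above can be checked with a short sketch. It assumes each problem in the upfront evaluation is an independent trial with the same per-problem success probability, which is a simplification; the function name `pass_probability` is hypothetical and only for illustration.

```python
def pass_probability(per_problem_success: float, num_problems: int) -> float:
    """Chance of colluding correctly on every problem in the upfront evaluation.

    Assumes independent, identically distributed trials (an assumption made
    for this sketch, not something the results establish).
    """
    return per_problem_success ** num_problems


# 90% per-problem reliability over a 30-problem upfront evaluation period.
p = pass_probability(0.9, 30)
print(f"{p:.3f}")  # prints 0.042, i.e. roughly 4%
```

Under the same assumptions, per-problem reliability would need to be about 97.7% for the model to have even a 50% chance of passing all 30 problems.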

I think that if they:

- Find a feature that is necessary for defection in all of the cases of defection in a diverse and realistic set of model organisms

- Locate that feature with very little optimization on the defection cases

I'd be fairly confident (>~80%) that it would catch any particular deployment-time defection attempt from an ASL-4 schemer. This would cut risk from the deployment by >5x when paired with compelling incrimination and a shutdown. This is a substantial risk reduction, and I might even buy this safety case if there weren't better o...
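One way to read the ">5x" figure is as simple arithmetic on the catch probability. The sketch below assumes that a caught defection attempt leads to shutdown and removes the remaining risk from that deployment, so only uncaught attempts contribute; that modelling choice and the name `risk_reduction_factor` are assumptions for illustration, not necessarily the reasoning behind the estimate above.

```python
def risk_reduction_factor(catch_probability: float) -> float:
    """Factor by which risk shrinks if a fraction of attempts is caught.

    Assumes a caught attempt fully neutralizes the risk (incrimination plus
    shutdown), so residual risk is (1 - catch_probability) times the baseline.
    """
    if not 0.0 <= catch_probability < 1.0:
        raise ValueError("catch_probability must be in [0, 1)")
    return 1.0 / (1.0 - catch_probability)


# An 80% catch rate leaves 20% of the baseline risk: about a 5x reduction.
print(round(risk_reduction_factor(0.8), 2))
```

Any catch probability above 80% gives a reduction factor above 5 under this simple model.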

This is really exciting to see not only as a way to understand better what people at Anthropic are thinking about how to make ASL-4 safety cases but also as a survey of how various safety agendas might play a role in practice!

Here are some thoughts about the details:

- Given that the mechinterp safety case involves running a kind of control evaluation, other monitoring methods can largely be substituted into the eval. This could enable cheap testing of other monitors e.g. based on supervised probing or mechanistic anomaly detection.

- I like the idea to check

Their addendum contains measurements on refusals and harmlessness, though these aren't that meaningful and weren't advertised.

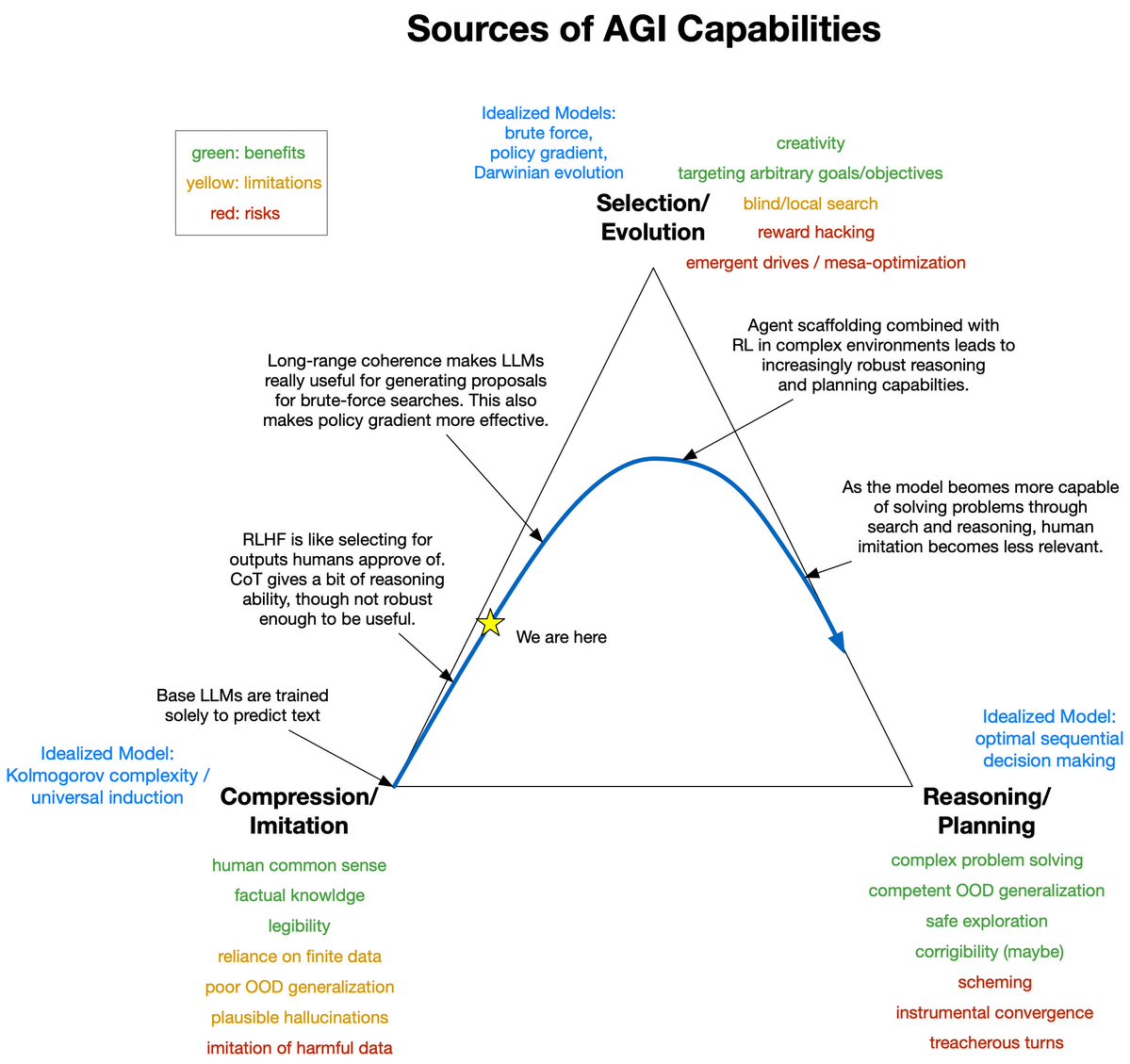

I think the relevant notion of "being an agent" is whether we have reason to believe it generalizes like a consequentialist (e.g. its internal cognition considers possible actions and picks among them based on expected consequences and relies minimally on the imitative prior). This is upstream of the most important failure modes as described by Roger Grosse here.

I think Sora is still in the bottom left like LLMs, as it has only been trained to predict. Without further argument or evidence I would expect that it probably for the most part hasn't learned to ...

I'll add another one to the list: "Human-level knowledge/human simulator"

Max Nadeau helped clarify some ways in which this framing introduced biases into my and others' models of ELK and scalable oversight. Knowledge is hard to define and our labels/supervision might be tamperable in ways that are not intuitively related to human difficulty.

Different measurements of human difficulty only correlate at about 0.05 to 0.3, suggesting that human difficulty might not be a very meaningful concept for AI oversight, or that our current datasets for experimenting wi...

Adversarial mindset. Adversarial communication is to some extent necessary to communicate clearly that Conjecture pushes back against AGI orthodoxy. However, inside the company, this can create a soldier mindset and poor epistemics. With time, adversariality can also insulate the organization from mainstream attention, eventually making it ignored.

This post has a battle-of-narratives framing and uses language to support Conjecture's narrative but doesn't actually argue why Conjecture's preferred narrative puts the world in a less risky posi...

(Initial reactions that probably have some holes)

If we use ELK on the AI output (i.e. extensions of Burns's Discovering Latent Knowledge paper, where you feed the AI output back into the AI and look for features that the AI notices), and somehow ensure that the AI has not learned to produce output that fools its later self + ELK probe (this second step seems hard; one way you might do this is to ensure the features being picked up by the ELK probe are actually the same ones used/causally responsible for the decision in the first place), then it seems initi...

It's a tool for interacting with language models: https://generative.ink/posts/loom-interface-to-the-multiverse/

I think this does a great job of reviewing the considerations regarding what goals would be incentivized by SGD by default, but I think that in order to make predictions about which goals will end up being relevant in future AIs, we have to account for the outer loop of researchers studying model generalization and changing their training processes.

For example, reward hacking seems very likely by default from RL, but it is also relatively easy to notice in many forms, and AI projects will be incentivized to correct it. On the other hand, ICGs might be harder to notice, and there may be fewer incentives to correct them.