All of asr's Comments + Replies

This is because the current position, direction, and speed of an atom (and all other measurements that can be done physically) are only possible with one and only one specific history of everything else in the universe.

This seems almost certainly false. You can measure those things to only finite precision -- there is a limit to the number of bits you can get out of such a measurement. Suppose you measure position and velocity to one part in a billion in each of three dimensions. That's only around 180 bits (six quantities at roughly 30 bits each) -- hardly enough to distinguish all possible universal histories.
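The bit count in the estimate above can be checked with a few lines of arithmetic. This is only a sketch: the function name `measurement_bits` is made up for illustration, and it assumes each of the six quantities (three position components, three velocity components) is resolved independently to one part in a billion.

```python
import math

def measurement_bits(quantities: int, parts: float) -> float:
    """Bits of information from `quantities` independent measurements,
    each resolved to one part in `parts`."""
    return quantities * math.log2(parts)

# Three position components plus three velocity components,
# each to one part in a billion (assumed precision from the comment).
bits = measurement_bits(quantities=6, parts=1e9)
print(f"{bits:.1f} bits")
```

Each measurement contributes about 30 bits, so the total lands a little under 200.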

Good point. A time limit of 3:54 does seem too arbitrary to be hard-coded.

Hrm. Maybe it's exactly one Atlantean time unit? Unsafe to assume that the units we are used to are the same units that the Stone's maker would find natural.

I bet Hermione is just going to love being the center of all the attention and scrutiny this will bring on her.

She came back from the dead. Gonna be a lot of attention and scrutiny regardless.

I have this impression - parenting hardly ever discussed on LW - that most of the community has no children.

Let me give you an alternate explanation. Being a parent is very time-consuming. It also tends to draw one's interest to different topics than are typically discussed here. In consequence, LW readers aren't a random sample of nerds or even of people in the general social orbit of the LW crowd. I would not draw any adverse inferences from the fact that a non-parenting-related internet forum tends to be depleted of parents.

This graph would be more interesting and persuasive with a better caption.

data scientists / statisticians mostly need access to computing power, which is fairly cheap these days.

This is true for each marginal data scientist. But there's a catch, which is that those folks need data. Collecting and promulgating that data, in the application domains we care about, can sometimes be very costly. You might want to consider some of those as part of the cost for the data science.

For example, many countries are spending a huge amount of money on electronic health records, in part to allow better data mining. The health records aren'...

Um, yes for most definitions of "rational". That's why [autism] is considered a disability.

Hrm? A disability is a thing that limits the disabled individual from a socially-recognized set of normal actions. The term 'disability' alone doesn't imply anything about reasoning or cognitive skills. It seems at best un-obvious, and more likely false, that "rationality" encompasses all cognitive functions.

Some people have dyslexia; that is certainly a cognitive disability. It would be strange (not to say offensive) to describe dyslexic i...

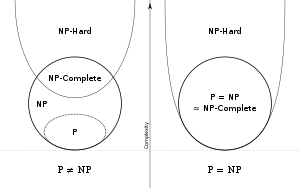

One of the unfortunate limitations of modern complexity theory is that a set of problems that look isomorphic sometimes have very different complexity properties. Another awkwardness is that worst-case complexity isn't a reliable guide to practical difficulty. "This sorta feels like a coloring problem" isn't enough to show it's intractable on the sort of instances we care about.

Separately, it's not actually clear to me whether complexity is good or bad news. If you think that predicting human desires and motivations is infeasible computationally,...

I just observe that a lot of cosmology seems to be riding on the theory that the red shift is caused by an expanding universe.

This seems wrong to me. There are at least two independent lines of evidence for the Big Bang theory besides redshifts -- isotope abundances (particularly for light elements) and the cosmic background radiation.

What if light just loses energy as it travels, so that the frequency shifts lower?

We would have to abandon our belief in energy conservation. And we would then wonder why energy seems to be conserved exactly in every ...

Speaking as a former algorithms-and-complexity TA --

Proving something is in NP is usually trivial, but probably would be worth a point or two. The people taking complexity at a top-tier school have generally mastered the art of partial credit and know to write down anything plausibly relevant that occurs to them.

I think roystgnr's comment was meant to be parsed as:

"Hmm... I can prove that this is in NP, and I can prove it is not in P and is not in NP-Complete. But that's not worth any points at all!" (crumples up and throws away paper)

Corollary:

...they shouldn't have crumpled that piece of paper.

What if light just loses energy as it travels, so that the frequency shifts lower? That seems like a perfectly natural solution. How do we know it isn't true?

As gjm mentions, the general name for this sort of theory is "tired light." And these theories have been studied extensively and they are broken.

We have a very accurate, very well-tested theory that describes the way photons behave, quantum electrodynamics. It predicts that photons in the vacuum have a constant frequency and don't suddenly vanish. Nor do photons have any sort of inter...

"Falling in love" isn't this sudden thing that just happens, it's a process and it's a process that is assisted if the other person is encouraging and feels likewise. Put another way, when the object of your affection is uninterested, that's often a turnoff, and so one then looks elsewhere.

There is a peculiar consequence of this, pointed out by Cosma Shalizi. Suppose we have a deterministic physical system S, and we observe this system carefully over time. We are steadily gaining information about its microstates, and therefore by this definition, its entropy should be decreasing.

You might say, "the system isn't closed, because it is being observed." But consider the system "S plus the observer." Saying that entropy is nondecreasing over time seems to require that the observer is in doubt about its own microstates. What does that mean?

Russell is an entirely respectable and mainstream researcher, at one of the top CS departments. It's striking that he's now basically articulating something pretty close to the MIRI view. Can somebody comment on whether Russell has personally interacted with MIRI?

If MIRI's work played a role in convincing people like Russell, that seems like a major accomplishment and a demonstration that they have arrived as part of the academic research community. If Russell came to that conclusion on his own, MIRI should still get a fair bit of praise for getting there f...

Did the survey. Mischief managed.

Did you read about Google's partnership with NASA and UCSD to build a quantum computer of 1000 qubits?

Technologically exciting, but ... imagine a world without encryption. As if all locks and keys on all houses, cars, banks, nuclear vaults, whatever, disappeared, only incomparably more consequential.

My understanding is that quantum computers are known to be able to break RSA and elliptic-curve-based public-key crypto systems. They are not known to be able to break arbitrary symmetric-key ciphers or hash functions. You can do a lot with symmetric-key sys...

Taking up on the "level above mine" comments -- Scott is a very talented and successful researcher. He also has tenure and can work on what he likes. The fact that he considers this sort of philosophical investigation worth his time and attention makes me upwardly revise my impression of how worthwhile the topic is.

Points 1 and 2 are reasonably clear. Point 3 is unhelpfully vague. If I were moderator, I would have no idea how far that pushes, and as a commenter I wouldn't have a lot of insight as to what to avoid.

I don't mind giving a catch-all authority to a moderator, but if there are specific things you have in mind that are to be avoided, it's probably better to enumerate them.

I would add an explicit "nothing illegal, nothing personally threatening" clause. Those haven't been problems, but it seems better to remind people and to make clear we all agree on that as a standard.

Interesting. Can you say more about how your work compares to existing VMs, such as the JVM, and what sorts of things you want to prove about executions?

Doing an audit to catch all vulnerabilities is monstrously hard. But finding some vulnerabilities is a perfectly straightforward technical problem.

It happens routinely that people develop new and improved vulnerability detectors that can quickly find vulnerabilities in existing codebases. I would be unsurprised if better optimization engines in general lead to better vulnerability detectors.

Having a top-level domain doesn't make an entity a country. Lots of indisputably non-countries have top-level domains. Nobody thinks the Bailiwick of Guernsey is a country, and yet .gg exists.

To do that it's going to need a decent sense of probability and expected utility. Problem is, OpenCog (and SOAR, too, when I saw it) is still based in a fundamentally certainty-based way of looking at AI tasks, rather than one focused on probability and optimization.

I don't see why this follows. It might be that mildly smart random search, plus a theorem prover with a fixed timeout, plus a benchmark, delivers a steady stream of useful optimizations. The probabilistic reasoning and utility calculation might be implicit in the design of the "self-imp...

But it would have a very hard time strengthening its core logic, as Rice's Theorem would interfere: proving that certain improvements are improvements (or, even, that the optimized program performs the same task as the original source code) would be impossible.

This seems like the wrong conclusion to draw. Rice's theorem (and other undecidability results) imply that there exist optimizations that are safe but cannot be proven to be safe. It doesn't follow that most optimizations are hard to prove. One imagines that software could do what humans do -- hu...

You might look into all the work that's been done with functional MRI analysis of the brain -- your post reminds me of that. The general technique of "watch the brain and see which regions have activity correlated with various mental states" is well known, well enough that all sorts of limitations and statistical difficulties have been pointed out (see Wikipedia for citations).

In other words, even if this is completely correct, it doesn't disprove relativity. Rather, it disproves either relativity or most versions of utilitarianism--pick one.

It seems like all it shows is that we ought to keep our utility functions Lorentz-invariant. Or, more generally, when we talk about consequentialist ethics, we should only consider consequences that don't depend on aspects of the observer that we consider irrelevant.

I'm curious if anyone has made substantial effort to reach a 'flow' state in tasks outside of coding, like reading or doing math etc etc., and what they learned. Are there easy tricks? Is it possible? Is flow just a buzzword that doesn't really mean anything?

I find reading is just about the easiest activity to get into that state with. I routinely get so absorbed in a book that I forget to move. And I think that's the experience of most readers. It's a little harder with programming actually, since there are all these pauses while I wait for things to compile or run, and all these times when I have to switch to a web browser to look something up. With reading, you can just keep turning pages.

The canonical example is that of a child who wants to steal a cookie. That child gets its morality mainly from its parents. The child strongly suspects that if it asks, all parents will indeed confirm that stealing cookies is wrong. So it decides not to ask, and happily steals the cookie.

I find this example confusing. I think what it shows is that children (humans?) aren't very moral. The reason the child steals instead of asking isn't anything to do with the child's subjective moral uncertainty -- it's that the penalty for stealing-before-asking is low...

Without talking about utility functions, we can't talk about expected utility maximization, so we can't define what it means to be ideally rational in the instrumental sense

I like this explanation of why utility-maximization matters for Eliezer's overarching argument. I hadn't noticed that before.

But it seems like utility functions are an unnecessarily strong assumption here. If I understand right, expected utility maximization and related theorems imply that if you have a complete preference over outcomes, and have probabilities that tell you how dec...

I appreciate you writing this way -- speaking for myself, I'm perfectly happy with a short opening claim, with the subtleties and evidence emerging in the following comments. A dialogue can be a better way to illuminate a topic than a long comprehensive essay.

High frequency stock trading.

The attack that people are worrying about involves control of a majority of mining power, not control of a majority of mining output. So the seized bitcoins are irrelevant. The way the attack works is that the attacker would generate a forged chain of bitcoin blocks showing nonsense transactions or randomly dropping transactions that already happened. Because they control a majority of mining power, this forged chain would be the longest chain, and therefore a correct bitcoin implementation would try to follow it, with bad effects. This in turn would break the existing bitcoin network.

The government almost certainly has enough compute power to mount this attack if they want.
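To make the role of "majority of mining power" concrete, here is a rough sketch using the gambler's-ruin analysis from the Bitcoin whitepaper: an attacker who finds each next block with probability q, racing an honest network that finds it with probability p = 1 - q, eventually catches up from z blocks behind with probability 1 if q >= p, and (q/p)^z otherwise. The function name `catch_up_probability` is made up for illustration, and the model ignores network latency and difficulty adjustment.

```python
def catch_up_probability(attacker_share: float, blocks_behind: int) -> float:
    """Probability that an attacker with the given share of mining power
    ever catches up from `blocks_behind` blocks behind the honest chain
    (simplified random-walk model from the Bitcoin whitepaper)."""
    if not 0.0 <= attacker_share <= 1.0:
        raise ValueError("attacker_share must be between 0 and 1")
    if blocks_behind < 0:
        raise ValueError("blocks_behind must be non-negative")
    q = attacker_share        # chance the attacker finds the next block
    p = 1.0 - q               # chance the honest network finds it
    if q >= p:
        return 1.0            # a majority attacker always catches up eventually
    return (q / p) ** blocks_behind

for share in (0.10, 0.25, 0.45, 0.51):
    print(share, catch_up_probability(share, blocks_behind=6))
```

Under this model the probability jumps to 1 once the attacker's share reaches one half, which is why the number of coins held does not enter into it.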

I didn't down-vote, but was tempted to. The original post seemed content-free. It felt like an attempt to start a dispute about definitions and not a very interesting one.

It had an additional flaw, which is that it presented its idea in isolation, without any context on what the author was thinking, or what sort of response the author wanted. It didn't feel like it raised a question or answered a question, and so it doesn't really contribute to any discussion.

The only reasons I can think of are your #1 and #2. But I think both are perfectly good reasons to vote...

Think about the continuum between what we have now and the free market (where you can control exactly where your money goes), and it becomes fairly clear that the only points which have a good reason to be used are the two extreme ends. If you advocate a point in the middle, you'll have a hard time justifying the choice of that particular point, as opposed to one further up or down.

I don't follow your argument here. We have some function that maps from "levels of individual control" to happiness outcomes. We want to find the maximum of this fu...

Eliezer thinks the phrase 'worst case analysis' should refer to the 'omega' case.

"Worst case analysis" is a standard term of art in computer science, that shows up as early as second-semester programming, and Eliezer will be better understood if he uses the standard term in the standard way.

A computer scientist would not describe the "omega" case as random -- if the input is correlated with the random number source in a way that is detectable by the algorithm, they're by definition not random.

Yes. Perhaps we might say, this is what middle school or high school science should be.

Likewise direct demonstrations are the sort of thing I wish science museums focused on more clearly. Often they have 75% of it, but the story of "this experiment shows X" gets lost in the "whoa, cool". I'm in favor of neat stuff, but I wish they explained better what insight the viewer should have.

Juries have a lot of "professional supervision." In the Common Law system, the judge restricts who can serve on the jury, determines the relevant law, tells the jury what specific question of fact they are deciding, controls the evidence shown to the jury, does the sentencing, and more. My impression is that the non-Common Law systems that use juries give them even less discretion. So when we have citizen-volunteers, we get good results only by very carefully hemming them in with professionals.

You can't supervise the executive in the same way. B...

I found this post hard to follow. It would be more intelligible if you gave a clearer explanation of what problem you are trying to solve. Why exactly is it bad to have the same people look for problems and fix them? Why is it bad to have a legislature that can revise and amend statutes during the voting process?

I also don't really understand what sort of comment or feedback you are expecting here. Do you want us to discuss whether this lottery-and-many-committees structure is in general a good idea? Do you want us to critique the details of your ...

I basically agree, but I think the point is stronger if framed differently:

Some defects in an argument are decisive, and others are minor. In casual arguments, people who nitpick often fail to make clear, both to themselves and to others, whether their objections concern minor correctable details or seriously undermine the claim in question.

My impression is that mathematicians, philosophers, and scientists are conscious of this distinction and routinely say things like "the paper is a little sloppy in stating the conclusions that were proved, but this can be f...

The idea that you can reasonably protect your anonymity by using a nickname is naive.

I think not so naive as all that. The effectiveness of a security measure depends on the threat. If your worry is "employers searching for my name or email address" then a pseudonym works fine. If your worry is "law enforcement checking whether a particular forum post was written by a particular suspect," then it's not so good. And if your worry is "they are wiretapping me or will search my computer", then the pseudonym is totally unhelpful...

This is incredibly cool and it makes me sad that I've never seen this experiment done in a science museum, physics instructional lab, or anywhere else.

This is actually a really good example of what I wanted.

I think I have a lot of reason to believe v = f lambda -- It follows pretty much from the definition of "wave" and "wavelength". And I think I can check the frequency of my microwave without any direct assumptions about the speed of light, using an oscilloscope or somesuch.
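As a rough illustration of the v = f lambda check, the sketch below assumes the microwave sets up a standing wave, so that neighbouring hot spots sit half a wavelength apart. The 2.45 GHz frequency is the typical value for household ovens, and the 6.1 cm spacing is a hypothetical measurement, not data from this thread; the function name is made up for illustration.

```python
def speed_from_hot_spots(frequency_hz: float, hot_spot_spacing_m: float) -> float:
    """Wave speed from v = f * lambda, where neighbouring standing-wave
    hot spots are assumed to be half a wavelength apart."""
    wavelength_m = 2.0 * hot_spot_spacing_m
    return frequency_hz * wavelength_m

# Assumed inputs: nominal oven frequency and a hypothetical ruler measurement.
v = speed_from_hot_spots(frequency_hz=2.45e9, hot_spot_spacing_m=0.061)
print(f"{v:.3e} m/s")
```

The only physics going in is the definition of wavelength plus an independently measured frequency, which is the point of the comment.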

But yes, you are correct, as long as your main criterion is something like "compelling at an emotional level", you should expect that different people understand it very differently.

This actually brings out something I had never thought about before. When I am reading or reviewing papers professionally, mostly the dispute between reviewers is about how interesting the topic is, not about whether the evidence is convincing. Likewise my impression about the history of physics is that mostly the professionals were in agreement about what would co...

Well, you could use your smartphone's accelerometer to verify the equations for centrifugal force, or its GPS to verify parts of special and general relativity, or the fact that its chip functions to verify parts of quantum mechanics.

These don't feel like they are quite comparable to each other. I do really trust the accelerometer to measure acceleration. If I take my phone on the merry-go-round and it says "1.2 G", I believe it. I trust my GPS to measure position. But I only take on faith that the GPS had to account for time dilation to work ...

Another advantage of replicating the original discovery is that you don't accidentally use unverified equipment or discoveries (ie equipment dependent on laws that were unknown at the time).

I don't consider this an advantage. My goal is to find vivid and direct demonstrations of scientific truths, and so I am happy to use things that are commonplace today, like telephones, computers, cameras, or what-have-you.

That said, I certainly would be interested in hearing about cases where there's something easy to see today that used to be hard -- is there something you have in mind?

Various ways to measure the speed of light. Many require few modern implements. How to measure constancy of the speed of light -- the original experiment, does not require any complicated or mysterious equipment, only careful design.

The early measurements of the speed of light don't require "modern implements." They do require quite sophisticated engineering or measurement. In particular, the astronomical measurements are not easy at all. Playing the "how would I prove X to myself" game brought home to me just how hard science is. Al...

Is there an easily visible consequence of special relativity that you can see without specialized equipment?

A working GPS receiver.

In general, things like a smartphone "verify" a great deal of modern science.

Yah. Though the immediacy of the verification will vary. When I use my cell phone, I really feel it that information is being carried by radio waves that don't penetrate metal. But I never found the GPS example quite compelling; people assure me "oh yes we needed relativity to get it to work right" and of course I believe the...

I would read this if written well.

Knowing X and being able to do something about X are quite different things. A death-row prisoner might be able to make the correct prediction that he will be hanged tomorrow, but that does not "enable goal-accomplishing actions" for him -- in the Bayes' world as well. Is the Cassandra's world defined by being powerless?

Powerlessness seems like a good way to conceptualize the Cassandra alternative. Perhaps power and well-being are largely random and the best-possible predictions only give you a marginal improvement over the baseline. Or else p...

It's a tempting thought. But I think it's hard to make the math work that way.

I have a lovely laptop here that I am going to give you. Suppose you assign some utility U to it. Now instead of giving you the laptop, I give you a lottery ticket or the like. With probability P I give you the laptop, and with probability 1 - P you get nothing. (The lottery drawing will happen immediately, so there's no time-preference aspect here.) What utility do you attach to the lottery ticket? The natural answer is P * U, and if you accept some reasonable assumptions about...
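Spelled out, the "natural answer" is just the expected utility of the ticket. The step below assumes that getting nothing is worth zero utility and that the ticket is valued at its expectation; both are assumptions made for illustration.

```latex
\[
  \mathbb{E}[\text{utility of ticket}]
    = P \cdot U + (1 - P) \cdot 0
    = P \cdot U
\]
```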