All of Ben's Comments + Replies

Those jokes are very bad. But, in fairness, I don't think most human beings could do very well given the same prompt/setup either. Jokes tend to work best in some kind of context, and getting from a "cold start" to something actually funny in a few sentences is hard.

The original post above is making the argument that leaving out the caveats and exceptions and/or exaggerating the point in order to provoke a discussion is dishonest and unhelpful. They use the term "clickbait" to describe that strategy. I think in general usage the word "clickbait" is used in a broader way, to include this strategy but also to include other things.

Of those titles, 2 and 3 are clearly not exaggerating anything, so they are not doing the thing the OP is complaining about. Number 1 arguably is; the post is a lawyer telling us that his clients oft...

My quick thoughts on why this happens.

(1) Time. You get asked to do something. You don't get the full info dump in the meeting, so you say yes and go off hoping that you can find the stuff you need. Other responsibilities mean you don't get around to looking everything up until time has passed. It is at that stage that you realise the hiring process hasn't even been finalised, or that even one wrong parameter out of 10 confusing parameters would be bad. But now it's mildly awkward to go back and explain this - they gave this to you on Thursday, and it's now Monday.

(2) ...

The index by Reporters Without Borders is primarily about whether a newspaper or reporter can say something without consequences or interference. Things like a competitive media environment seem to be part of the index (the USA's scorecard says "media ownership is highly concentrated, and many of the companies buying American media outlets appear to prioritize profits over public interest journalism"). It's an important thing, but it's not the same thing you are talking about.

The second one, from "our world in data", ultimately comes from this (https://www.v...

It reminds me a little of Dominic Cummings and Boris Johnson. The Silicon Valley + Trump combo feels somehow analogous.

One very important consideration is whether they hold values that they believe are universalist, or merely locally appropriate.

For example, a Chinese AI might believe the following: "Confucianist thought is very good for Chinese people living in China. People in other countries can have their own worse philosophies, and that is fine so long as they aren't doing any harm to China, its people or its interests. Those idiots could probably do better by copying China, but frankly it might be better if they stick to their barbarian ways so that they remain too w...

I agree this is an inefficiency.

Many of your examples are maybe fixed by having a large audience and some randomness as described by Robo.

But some things are more binary. For example, when considering job applicants, an applicant who won some prestigious award is much higher value than one who didn't. But there is a person who was the counterfactual 'second place' for that award; they are basically as high value as the winner, and no one knows who they are.

Having unstable policy making comes with a lot of disadvantages as well as advantages.

For example, imagine a small poor country somewhere with much of the population living in poverty. Oil is discovered, and a giant multinational approaches the government to seek permission to get the oil. The government offers some kind of deal - tax rates, etc. - but the company still isn't sure. What if the country's other political party gets in at the next election? If that happened, the oil company might have just sunk a lot of money into refineries and roads and dril...

Correct.

I used to think this. I was in a café reading the cake descriptions, and the word "cheese" in the carrot cake description (for the icing) really put me off. I don't want a cake with cheese flavor - sounds gross. Only later did I learn carrot cake was amazing.

So it has happened at least once.

There was an interesting Astral Codex Ten post related to this kind of idea: https://www.astralcodexten.com/p/book-review-the-cult-of-smart

Mirroring some of the logic in that post, start from the assumption that neither you nor anyone you know is in the running for a job (let's say you are hiring an electrician to fix your house). Then, do you want the person who is going to do a better job or a worse one?

If you are the parent of a child with some kind of developmental problem that means they have terrible hand-eye coordination, you probably don't want y...

Wouldn't higher liquidity and lower transaction costs sort this out? Say you have some money tied up in "No, Jesus will not return this year", but you really want to bet on some other thing. If transaction costs were completely zero then, even if you have your entire net worth tied up in "No Jesus" bets you could still go to a bank, point out you have this more-or-less guaranteed payout on the Jesus market, and you want to borrow against it or sell it to the bank. Then you have money now to spend. This would not in any serious way shift the prices of the "...

I don't know American driving laws on this (I live in the UK), but these two descriptions don't sound mutually incompatible.

The clockwise rule tells you everything except who goes first. You say that's the first to arrive.

It says "I am socially clueless enough to do random inappropriate things"

In a sense I agree with you: if you are trying to signal something specific, then wearing a suit in an unusual context is probably the wrong way of doing it. But the social signalling game is exhausting. (I am English; maybe this makes it worse than normal for me.) If I am a guest at someone's house and they offer me food, what am I signalling by saying yes? What if I say no? They didn't let me buy the next round of drinks; do I try again later or take no for an answer? Are they offe...

A nice post about the NY flat rental market. I found myself wondering: does the position you are arguing against at the beginning actually exist, or is it set up only as a rhetorical target to knock down? What I mean is this:

everything’s priced perfectly, no deals to sniff out, just grab what’s in front of you and call it a day. The invisible hand’s got it all figured out—right?

Do people actually think this way? The argument seems to reduce to "This looks like a bad deal, but if it actually was a bad deal then no one would buy it. Therefore, it can't be a bad dea...

You have misunderstood me in a couple of places. I think maybe the diagram is confusing you, or maybe some of the (very weird) simplifying assumptions I made, but I am not entirely sure.

First, when I say "momentum" I mean actual momentum (mass times velocity). I don't mean kinetic energy.

To highlight the relationship between the two, the total energy of a mass on a spring can be written as $E = \frac{p^2}{2m} + \frac{1}{2} k x^2$, where p is the momentum, m the mass, k the spring strength and x the position (in units where the lowest potential point is at x=0...

I am not sure that example fully makes sense. If trade is possible then two people with 11 units of resources can get together and do a cost 20 project. That is why companies have shares, they let people chip in so you can make a Suez Canal even if no single person on Earth is rich enough to afford a Suez Canal.

I suppose in extreme cases where everyone is on or near the breadline some of that "Stag Hunt" vs "Rabbit Hunt" stuff could apply.

I agree with you that, if we need to tax something to pay for our government services, then inheritance tax is arguably not a terrible choice.

But a lot of your arguments seem a bit problematic to me. First, as a point of basic practicality, why 100%? Couldn't most of your aims be achieved with a lesser percentage? That would also smooth out weird edge cases.

There is something fundamentally compelling about the idea that every generation should start fresh, free from the accumulated advantages or disadvantages of their ancestors.

This quote stood out t...

I think I am not understanding the question this equation is supposed to answer, as it seems wrong to me.

I think you are considering the case where we draw arrowheads on the lines? So each line is either an "input" or an "output", and we randomly connect inputs only to outputs, never connecting two inputs together or two outputs? With those assumptions, I think the probability of only one loop on a shape with N inputs and N outputs (for a total of 2N "puts") is 1/N.
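The 1/N claim can be checked by brute force for small N. This is a minimal sketch under a hypothetical edging rule (after arriving at input j, the string carries on out of output (j + 1) mod N); any fixed edging bijection gives the same probability, because composing a uniformly random wiring with a fixed bijection is still uniformly random. One loop then means the combined route is a single N-cycle, and there are (N-1)! of those among N! wirings.

```python
from fractions import Fraction
from itertools import permutations


def count_cycles(perm):
    """Count the cycles of a permutation given as a tuple, where i maps to perm[i]."""
    seen = [False] * len(perm)
    cycles = 0
    for start in range(len(perm)):
        if not seen[start]:
            cycles += 1
            i = start
            while not seen[i]:
                seen[i] = True
                i = perm[i]
    return cycles


def single_loop_probability(n):
    """Exact chance of exactly one loop when n outputs are wired to n inputs at random.

    Hypothetical edging rule: after arriving at input j, the string continues
    out of output (j + 1) mod n.
    """
    hits = 0
    total = 0
    for wiring in permutations(range(n)):  # wiring[i] = input that output i joins
        route = tuple((wiring[i] + 1) % n for i in range(n))  # output -> next output
        total += 1
        if count_cycles(route) == 1:
            hits += 1
    return Fraction(hits, total)


for n in range(1, 7):
    print(n, single_loop_probability(n))  # matches 1/n
```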

The equation I had ( (N-2)!! / (N-1)!!) is for N "points", which are not pre-assigned...

This is really wonderful, thank you so much for sharing. I have been playing with your code.

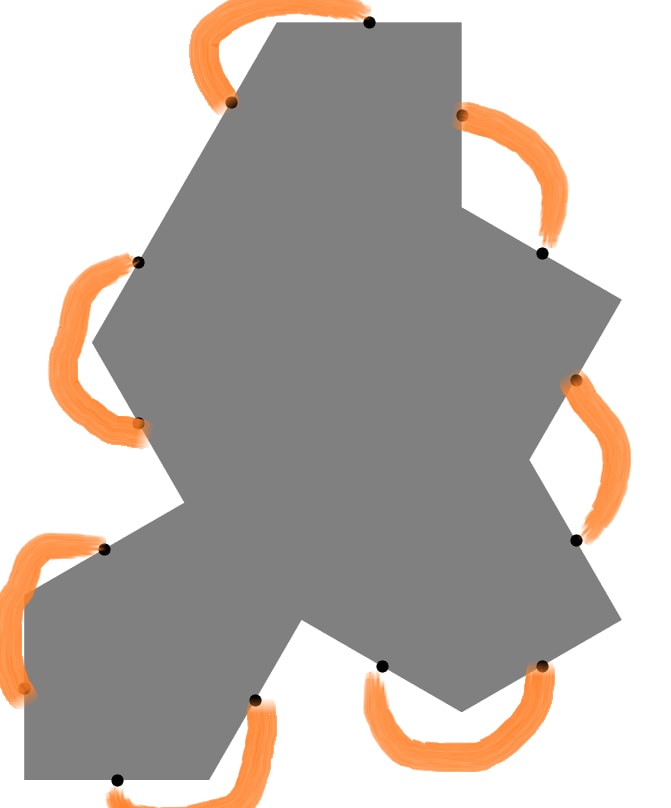

The probability that there is only one loop is also very interesting. For the simplest case I worked out something which feels like it is probably already well known, but was not known to me until now.

The simplest case is one tile. The orange lines are the "edging rule". Pick one black point and connect it to another at random. This has a 1/13 chance of immediately creating a closed loop, meaning more than one loop total. Assuming it doesn't do that, the next connection ...
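The step-by-step argument (1/13 chance of closing a loop early, and so on) leads to the (N-2)!!/(N-1)!! formula for N points. A brute-force check for small N is sketched below, assuming the edging rule pairs the points as (0,1), (2,3), ... (a hypothetical labelling; any fixed pairing is equivalent up to renaming the points) and that every way of pairing up the points in the tile is equally likely.

```python
from fractions import Fraction


def perfect_matchings(points):
    """Yield every way of pairing up `points` (a list of even length)."""
    if not points:
        yield []
        return
    first, rest = points[0], points[1:]
    for k, partner in enumerate(rest):
        remaining = rest[:k] + rest[k + 1:]
        for sub in perfect_matchings(remaining):
            yield [(first, partner)] + sub


def partners(matching):
    """Map each point to the point it is paired with."""
    lookup = {}
    for a, b in matching:
        lookup[a] = b
        lookup[b] = a
    return lookup


def count_loops(edging, connections, n):
    """Count closed loops formed by overlaying the edging pairs and the tile's connections."""
    edge_partner = partners(edging)
    tile_partner = partners(connections)
    seen = set()
    loops = 0
    for start in range(n):
        if start in seen:
            continue
        loops += 1
        p = start
        while True:
            q = edge_partner[p]  # follow an edging line
            seen.update((p, q))
            p = tile_partner[q]  # then follow a connection inside the tile
            if p == start:
                break
    return loops


def double_factorial(k):
    result = 1
    while k > 1:
        result *= k
        k -= 2
    return result


def single_loop_probability(n):
    """Exact fraction of random tiles (uniform over pairings) that give one loop."""
    edging = [(i, i + 1) for i in range(0, n, 2)]  # hypothetical fixed edging pairs
    tiles = list(perfect_matchings(list(range(n))))
    one_loop = sum(1 for t in tiles if count_loops(edging, t, n) == 1)
    return Fraction(one_loop, len(tiles))


for n in (2, 4, 6, 8, 10):
    formula = Fraction(double_factorial(n - 2), double_factorial(n - 1))
    print(n, single_loop_probability(n), formula)
```

Enumeration stops at 10 points to keep it fast; the 14-point tile has 135,135 pairings, which is still feasible but slow in plain Python.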

That is a nice idea. The "two sides at 180 degrees" only occurred to me after I had finished. I may look into that one day, but with that many connections it needs to be automated.

For the 6 entry/exit tiles above, you pick one entry, and you have 5 options of where to connect it. Then you pick the next unused entry clockwise, and have 3 options for where to send it; then you have only one option for how to connect the last two. So it's 5x3x1 = 15 different possible tiles.

With 14 entries/exits, it's 13x11x9x7x5x3x1 = 135,135 different tiles. (13!!, for !! being ...
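The counting procedure described above (take the first unused point, choose any of the remaining points as its partner, repeat) translates directly into a recursion. A minimal sketch; like the 5x3x1 count, it treats rotated or reflected copies of a tile as different tiles.

```python
def count_tiles(n_points):
    """Count the ways to pair up n_points entries/exits.

    The first unused point can join any of the other n_points - 1 points;
    the rest is the same problem with two fewer points.
    """
    if n_points % 2:
        raise ValueError("need an even number of entries/exits")
    if n_points == 0:
        return 1
    return (n_points - 1) * count_tiles(n_points - 2)


print(count_tiles(6))   # 15 = 5 x 3 x 1
print(count_tiles(14))  # 135135 = 13 x 11 x 9 x 7 x 5 x 3 x 1
```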

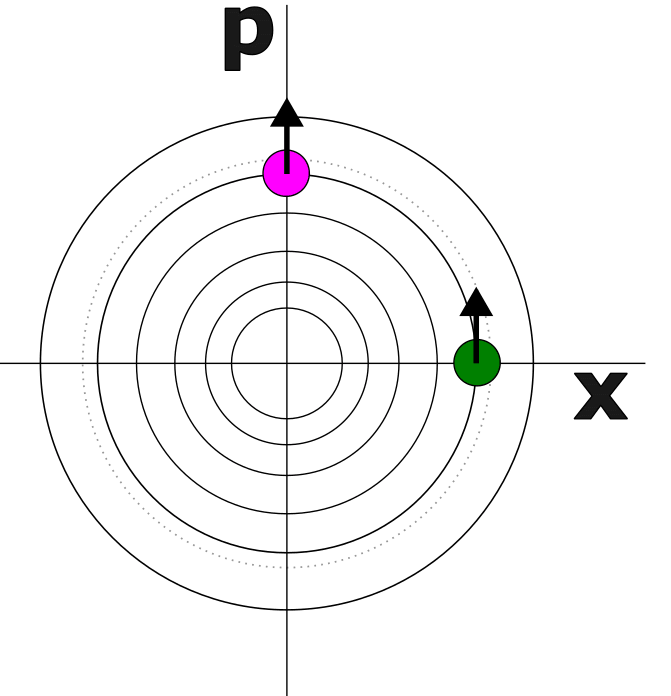

I still find the effect weird, but something that I think makes it more clear is this phase space diagram:

We are treating the situation as 1D, and the circles in the x, p space are energy contours. Total energy grows with distance from the origin (with x and p suitably scaled, it is proportional to the distance squared). An object in orbit goes in circles at a fixed distance from the origin (i.e. a fixed total energy).

The green and purple points are two points on the same orbit. At purple we have maximum momentum and minimum potential energy. At green it's the other way around. The arrows show impulses: if we could suddenly add momentum ...
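A small numerical sketch of the two impulses, using the spring energy $E = p^2/2m + kx^2/2$. The choice m = k = 1, the orbit with E = 0.5 and the kick size 0.1 are hypothetical values picked for illustration. The same momentum kick adds $(2p\,\Delta p + \Delta p^2)/2m$ of energy, so it matters a lot whether p is large (purple) or zero (green) at the moment of the kick.

```python
def energy(x, p, m=1.0, k=1.0):
    """Total energy of a mass on a spring: p^2/(2m) + k x^2 / 2."""
    return p**2 / (2 * m) + k * x**2 / 2


def energy_gain_from_kick(x, p, dp, m=1.0, k=1.0):
    """Energy added by a sudden impulse dp (x does not change during the kick)."""
    return energy(x, p + dp, m, k) - energy(x, p, m, k)


# Two points on the same orbit (E = 0.5 with m = k = 1), hypothetical values:
purple = (0.0, 1.0)  # x = 0: maximum momentum, minimum potential energy
green = (1.0, 0.0)   # turning point: zero momentum, maximum potential energy

kick = 0.1
print(energy_gain_from_kick(*purple, kick))  # ~0.105 = (2 p dp + dp^2) / 2m
print(energy_gain_from_kick(*green, kick))   # ~0.005 = dp^2 / 2m
```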

I am not sure that is right. A very large percentage of people really don't think the rolls are independent. Have you ever met anyone who believed in fate, karma, horoscopes, lucky objects or prayer? They don't think it's (fully) random and independent. I think the majority of the human population believe in one or more of those things.

If someone spells a word wrong in a spelling test, then it's possible they mistyped, but if it's a word most people can't spell correctly, then the hypothesis "they don't know the spelling" should dominate. Similarly, I think it is fair to say that a very large fraction of humans (over 50%?) don't actually think dice rolls or coin tosses are independent and random.

That is a cool idea! I started writing a reply, but it got a bit long so I decided to make it its own post in the end. ( https://www.lesswrong.com/posts/AhmZBCKXAeAitqAYz/celtic-knots-on-einstein-lattice )

I stuck to maximal density for two reasons: (1) to continue the Celtic knot analogy, and (2) because it means all tiles are always compatible (you can fit two side by side at any orientation without losing continuity). With tiles that don't use every facet, this becomes an issue.

Thinking about it now, and without having checked carefully, I think this compatibility does something topological and forces odd macrostructure. For example, if we have a line of 4-tiles in a sea of 6-tiles (4-tiles use four facets), then we can't end the line of 4-tiles without breaking ...

That's a very interesting idea. I tried going through the blue one at the end.

It's not possible in that case for each string to strictly alternate between going over and under, by any of the rules I have tried. In some cases two strings pass over/under one another, then those same two strings meet again when one has travelled two tiles and the other three. So they are de-synced: they both think it's their turn to go over (or under).

The rules I tried to apply were (all of which I believe don't work):

- Over for one tile, under for the next (along each string)

- Ove

I wasn't aware of that game. Yes, it is identical in terms of the tile designs. Thank you for sharing that; it was very interesting, and that Tantrix wiki page led me to this one, https://en.wikipedia.org/wiki/Serpentiles , which goes into some interesting related stuff with two strings per side or differently shaped tiles.

Something related that I find interesting: for people inside a company, the real rival isn't another company doing the same thing, but people in your own company doing a different thing.

Imagine you work at Microsoft in the AI research team in 2021. Management want to cut R&D spending, so either your lot or the team doing quantum computing research are going to be made redundant soon. Then the timeline splits. In one universe, OpenAI release ChatGPT; in the other, PsiQuantum do something super impressive with quantum stuff. In which of those universes do th...

I think economics should be taught to children, not for the reasons you express, but because it seems perverse that I spent time at school learning about Vikings, oxbow lakes, volcanoes, Shakespeare and castles, but not about the economic system of resource distribution that surrounds me for the rest of my life. When I was about 9, I remember asking why 'they' didn't just print more money until everyone had enough. I was fortunate to have parents who could give a good answer; not everyone will be.

Stock buybacks! Thank you. That is definitely going to be a big part of the "I am missing something here" I was expressing above.

I freely admit to not really understanding how shares are priced. To me it seems like the value of a share should be related to the expected dividend payout of that share over the remaining lifetime of the company, with a discount rate applied to payouts that are expected to happen further in the future (i.e. dividend payments 100 years from now are valued much less than equivalent payments this year). By this measure, justifying the current price sounds hard.
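The valuation idea described above (sum the expected dividends, each discounted by how far in the future it arrives) can be sketched in a few lines. The 5% discount rate and the $1 dividends are hypothetical numbers, not figures for Nvidia or any real share, and the sketch ignores buybacks entirely.

```python
def present_value(dividends, discount_rate):
    """Value today of a stream of yearly dividends.

    dividends[t] is the payout expected at the end of year t + 1; each one is
    divided by (1 + discount_rate) ** (t + 1), so distant payouts count for less.
    """
    return sum(d / (1 + discount_rate) ** (t + 1) for t, d in enumerate(dividends))


rate = 0.05  # hypothetical 5% annual discount rate
print(present_value([1.0], rate))               # $1 paid next year: ~0.95
print(present_value([0.0] * 99 + [1.0], rate))  # $1 paid in year 100: ~0.0076
print(present_value([1.0] * 100, rate))         # $1 every year for 100 years: ~19.85
```

The middle line is the "100 years from now" point in numbers: at this rate, a payment a century away is worth well under a cent on the dollar today.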

Google says that the annual dividend on Nvidia shares is 0.032%. (Yes, the leading digits are 0.0). ...

That is very interesting! That does sound weird.

In some papers people write density operators using an enhanced "double ket" Dirac notation, where e.g. density operators are written to look like |x>>, with two ">"s. They do this precisely because the differential equations look more elegant.

I think in this notation measurements look like <<m|, but I am not sure about that. The QuTiP software (which is very common in quantum modelling) uses something like this under the hood, where operators (e.g. density operators) are stored internally using 1D vectors, and the super-operators (maps from...
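Here is a rough sketch of the vectorisation idea in plain NumPy (it does not use QuTiP, and the column-stacking convention is an assumption chosen for the sketch). A density matrix becomes a 1D vector, the sandwich map rho -> U rho U^dagger becomes an ordinary matrix acting on that vector, and an expectation value Tr(M rho) becomes an inner product of vec(M) with vec(rho), which is at least consistent with measurements looking like <<m| for Hermitian M.

```python
import numpy as np


def vec(op):
    """Flatten a square matrix into a 1D vector by stacking its columns."""
    return op.reshape(-1, order="F")


def unvec(v):
    """Inverse of vec for a square matrix."""
    dim = int(round(np.sqrt(v.size)))
    return v.reshape(dim, dim, order="F")


def unitary_superoperator(u):
    """Matrix S with vec(U rho U^dagger) == S @ vec(rho).

    Uses the identity vec(A X B) = (B^T kron A) vec(X) for column stacking,
    with A = U and B = U^dagger, so B^T = conj(U).
    """
    return np.kron(u.conj(), u)


def expectation(measurement, rho):
    """<<M|rho>> = Tr(M^dagger rho), which is Tr(M rho) for Hermitian M."""
    return np.vdot(vec(measurement), vec(rho))


hadamard = np.array([[1, 1], [1, -1]], dtype=complex) / np.sqrt(2)
rho = np.array([[1, 0], [0, 0]], dtype=complex)  # |0><0|
project_0 = np.array([[1, 0], [0, 0]], dtype=complex)

rho_out = unvec(unitary_superoperator(hadamard) @ vec(rho))
print(np.round(rho_out.real, 3))             # |+><+|: every entry 0.5
print(expectation(project_0, rho_out).real)  # chance of measuring 0: ~0.5
```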

Yes, in your example a recipient who doesn't know the seed models the light as unpolarised, and one who does as, say, H-polarised in a given run. But for everyone who doesn't see the random seed, it's the same density matrix.

Let's replace that first machine with a similar one that produces a polarisation-entangled photon pair, |HH> + |VV> (ignoring normalisation). If you have one of those photons, it looks unpolarised (essentially your "ignorance of the random seed" can be thought of as your ignorance of the polarisation of the other photon).
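A quick numerical check of the claim that one photon from the entangled pair looks exactly like the coin-flip source: trace out the second photon of (|HH> + |VV>)/sqrt(2) and compare with a 50/50 mixture of H and V. This is a minimal NumPy sketch; the basis labels and the helper name are mine.

```python
import numpy as np

H = np.array([1.0, 0.0])  # horizontal polarisation
V = np.array([0.0, 1.0])  # vertical polarisation

# Entangled pair (|HH> + |VV>)/sqrt(2) as a two-photon density matrix.
pair = (np.kron(H, H) + np.kron(V, V)) / np.sqrt(2)
rho_pair = np.outer(pair, pair.conj())


def reduced_state_of_first(rho):
    """Trace out the second photon: reshape to [a, b, a', b'] and sum over b = b'."""
    return np.trace(rho.reshape(2, 2, 2, 2), axis1=1, axis2=3)


one_photon = reduced_state_of_first(rho_pair)
coin_flip_source = 0.5 * np.outer(H, H) + 0.5 * np.outer(V, V)
print(np.allclose(one_photon, coin_flip_source))  # True: same density matrix
```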

If someone el...

What is the Bayesian argument, if one exists, for why quantum dynamics breaks the “probability is in the mind” philosophy?

In my world-view the argument is based on Bell inequalities. Other answers mention them, I will try and give more of an introduction.

First, context. We can reason inside a theory, and we can reason about a theory. The two are completely different and give different intuitions. Anyone talking about "but the complex amplitudes exist" or "we are in one Everett branch" is reasoning inside the theory. The theory, as given in the ...

Just the greentext. Yes, I totally agree that the study probably never happened. I just engaged with the actual underlying hypothesis, and to do so it felt like some summary of the study helped. But I phrased it badly, and it seems like I am claiming the study actually happened. I will edit.

I thought they were typically wavefunction to wavefunction maps, and they need some sort of sandwiching to apply to density matrices?

Yes, this is correct. My mistake; it does indeed need the sandwiching, like this: $\rho \to A \rho A^\dagger$.

From your talk on tensors, I am sure it will not surprise you at all to know that the sandwich thing itself (mapping from operators to operators) is often called a superoperator.

I think the reason it is the way it is, is that there isn't a clear line between operators that modify the state and those that represent measurements...

The way it works normally is that you have a state $\rho$, and it's acted on by some operator $a$, which you can write as $a\rho$. But this doesn't give a number; it gives a new state like the old but different. (For example, if $a$ was the annihilation operator, the new state is like the old state but with one fewer photon.) This is how (for example) an operator acts on the state of the system to change that state. (It's a density matrix to density matrix map.)

In dimensions terms this is: (1,1) = (1, 1) * (1,1)

(Two square matrices of size...

You are completely correct in the "how does the machine work inside?" question. As you point out that density matrix has the exact form of something that is entangled with something else.

I think it's very important to be discussing what is real, although, as we always have a nonzero inferential distance between ourselves and the real, the discussion has to be a little bit caveated and pragmatic.

I think the reason is that in quantum physics we also have operators representing processes (like the Hamiltonian operator making the system evolve with time, or the position operator that "measures" position, or the creation operator that adds a photon), and the density matrix has exactly the same mathematical form as these other operators (apart from the fact the density matrix needs to be normalized).

But that doesn't really solve the mystery fully, because they could all just be called "matrices" or "tensors" instead of "operators". (Maybe it gets...

There are some non-obvious issues with saying "the wavefunction really exists, but the density matrix is only a representation of our own ignorance". It's a perfectly defensible viewpoint, but I think it is interesting to look at some of its potential problems:

- A process or machine prepares either |0> or |1> at random, each with 50% probability. Another machine prepares either |+> or |-> based on a coin flip, where |+> = (|0> + |1>)/root2, and |-> = (|0> - |1>)/root2. In your ontology these are actually different machines
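For concreteness, here is a short NumPy check that the two machines in the bullet above produce identical density matrices (both equal I/2), so no measurement statistics can tell them apart. The variable names and the helper are mine, purely for illustration.

```python
import numpy as np

zero = np.array([1, 0], dtype=complex)
one = np.array([0, 1], dtype=complex)
plus = (zero + one) / np.sqrt(2)
minus = (zero - one) / np.sqrt(2)


def mixture(states, probabilities):
    """Density matrix of a machine that emits states[i] with probabilities[i]."""
    return sum(p * np.outer(s, s.conj()) for s, p in zip(states, probabilities))


machine_z = mixture([zero, one], [0.5, 0.5])
machine_x = mixture([plus, minus], [0.5, 0.5])
print(np.allclose(machine_z, machine_x))  # True: both give I/2
```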

I just looked up the breakfast hypothetical. Its interesting, thanks for sharing it.

So, my understanding is (supposedly) someone asked a lot of prisoners "How would you feel if you hadn't had breakfast this morning?", did IQ tests on the same prisoners and found that the ones who answered "I did have breakfast this morning." or equivalent were on average very low in IQ. (Let's just assume for the purposes of discussion that this did happen as advertised.)

It is interesting. I think in conversation people very often hear the question they were expecting, and ...

The question of "why should the observed frequencies of events be proportional to the square amplitudes" is actually one of the places where many people perceive something fishy or weird with many worlds. [https://www.sciencedirect.com/science/article/pii/S1355219809000306 ]

To clarify, it's not a question of possibly rejecting the square-amplitude Born Rule while keeping many worlds. It's a question of whether the square-amplitude Born Rule makes sense within the many-worlds perspective, and, if it doesn't, what should be modified about the many-worlds perspective to make it make sense.

I agree with this. It's something about the guilt that makes this work. Also, the sense that you went into it yourself somehow reshapes the perception.

I think the loan shark business model maybe follows the same logic. [If you are going to eventually get into a situation where the victim pays or else suffers violence, then why doesn't the perpetrator just skip the costly loan step at the beginning and go in threat first? I assume that the existence of loan sharks (rather than just blackmailers) proves something about how, if people feel like they made a bad choice or engaged willingly at some point, they are more susceptible. Or maybe it's frog boiling.]

On the "what did we start getting right in the 1980s for reducing global poverty" question, I think most of the answer was a change in direction in China. In the late 1970s they started reforming their economy (more capitalism, less command economy): https://en.wikipedia.org/wiki/Chinese_economic_reform.

Comparing this graph on wiki https://en.wikipedia.org/wiki/Poverty_in_China#/media/File:Poverty_in_China.svg , to yours, it looks like China accounts for practically all of the drop in poverty since the 1980s.

Arguably this is a good example for your other point...

I don't think the framing "Is behaviour X exploitation?" is the right framing. It takes what (should be) an argument about morality and instead turns it into an argument about the definition of the word "exploitation" (where we take it as given that, whatever the hell we decide exploitation "actually means", it is a bad thing). For example, see this post: https://www.lesswrong.com/posts/yCWPkLi8wJvewPbEp/the-noncentral-fallacy-the-worst-argument-in-the-world. Once we have a definition of "exploitation", there might be some weird edge cases that are technicall...

The teapot comparison seems (to me) to be a bad one. I got carried away and wrote a wall of text. Feel free to ignore it!

First, let's think about normal probabilities in everyday life. Sometimes there are more ways for one state to come about than another state. For example, if I shuffle a deck of cards, the number of orderings that look random is much larger than the number of ways (1) of the cards being exactly in order.

However, this manner of thinking only applies to certain kinds of thing - those that are in-principle distinguishable. If you have a deck of bl...
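The counting argument can be made concrete: the number of distinguishable orderings of a deck is n! divided by the factorial of the count of each repeated card face. A normal deck gives 52! orderings (only one of which is "in order"), while a deck of 52 identical cards has exactly one distinguishable ordering. This is a minimal sketch; the `counts` representation of a deck is my own illustrative choice.

```python
from math import factorial, prod


def distinct_orderings(counts):
    """Number of distinguishable orderings of a deck.

    counts lists how many identical copies there are of each card face, so a
    normal deck is [1] * 52. Orderings that only swap identical cards are not
    distinguishable, hence the division.
    """
    return factorial(sum(counts)) // prod(factorial(c) for c in counts)


standard = distinct_orderings([1] * 52)
print(f"{standard:.3e} orderings, of which exactly 1 is 'in order'")
print(distinct_orderings([52]))  # a deck of 52 identical cards: only 1 ordering
```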

I found this post to be a really interesting discussion of why organisms that sexually reproduce have been successful and how the whole thing emerges. I found the writing style, where it switched rapidly between relatively serious biology and silly jokes, very engaging.

Many of the sub-claims seem to be well referenced (I particularly liked the swordless ancestor of the swordtail fish liking mates who had had artificial swords attached).

"Stock prices represent the market's best guess at a stock's future price."

But they are not the same as the market's best guess at its future price. If you have a raffle ticket that will, 100% for definite, win $100 when the raffle happens in 10 years' time, then the market's best guess of its future price is $100, but nobody is going to buy it for $100, because $100 now is better than $100 in 10 years.
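The raffle-ticket example in numbers: a certain $100 payout in 10 years is worth $100 / (1 + r)^10 today, and conversely the price paid now implies the annual return the buyer locks in. The discount rates used are hypothetical.

```python
def price_today(payout, years, annual_rate):
    """What a certain future payout is worth now at a given annual discount rate."""
    return payout / (1 + annual_rate) ** years


def implied_rate(price, payout, years):
    """The annual return a buyer locks in by paying `price` now for `payout` later."""
    return (payout / price) ** (1 / years) - 1


for rate in (0.02, 0.05):  # hypothetical discount rates
    print(rate, round(price_today(100, 10, rate), 2))
print(implied_rate(price_today(100, 10, 0.05), 100, 10))  # recovers ~0.05
```

Only at a zero discount rate would the ticket trade at the full $100.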

Whatever it is that people think the stock will be worth in the future, they will pay less than that for it now. (Because $100 in the future isn't as good as ...

I find myself still strongly agreeing with your 'friend A' about the difference between someone having a preference for dating people of a particular race, and people stating that preference on their dating app profile.

The two things are, I think, miles apart, and it looks like the participants in that study were asked in the abstract about people having preferences and then given examples of people expressing, not even preferences, but hard and fast rules.

Take example people A and B:

Person A is asked to rate 100 pictures of strangers for attractiveness, f...