Rationalist Community Hub in Moscow: 3 Years Retrospective

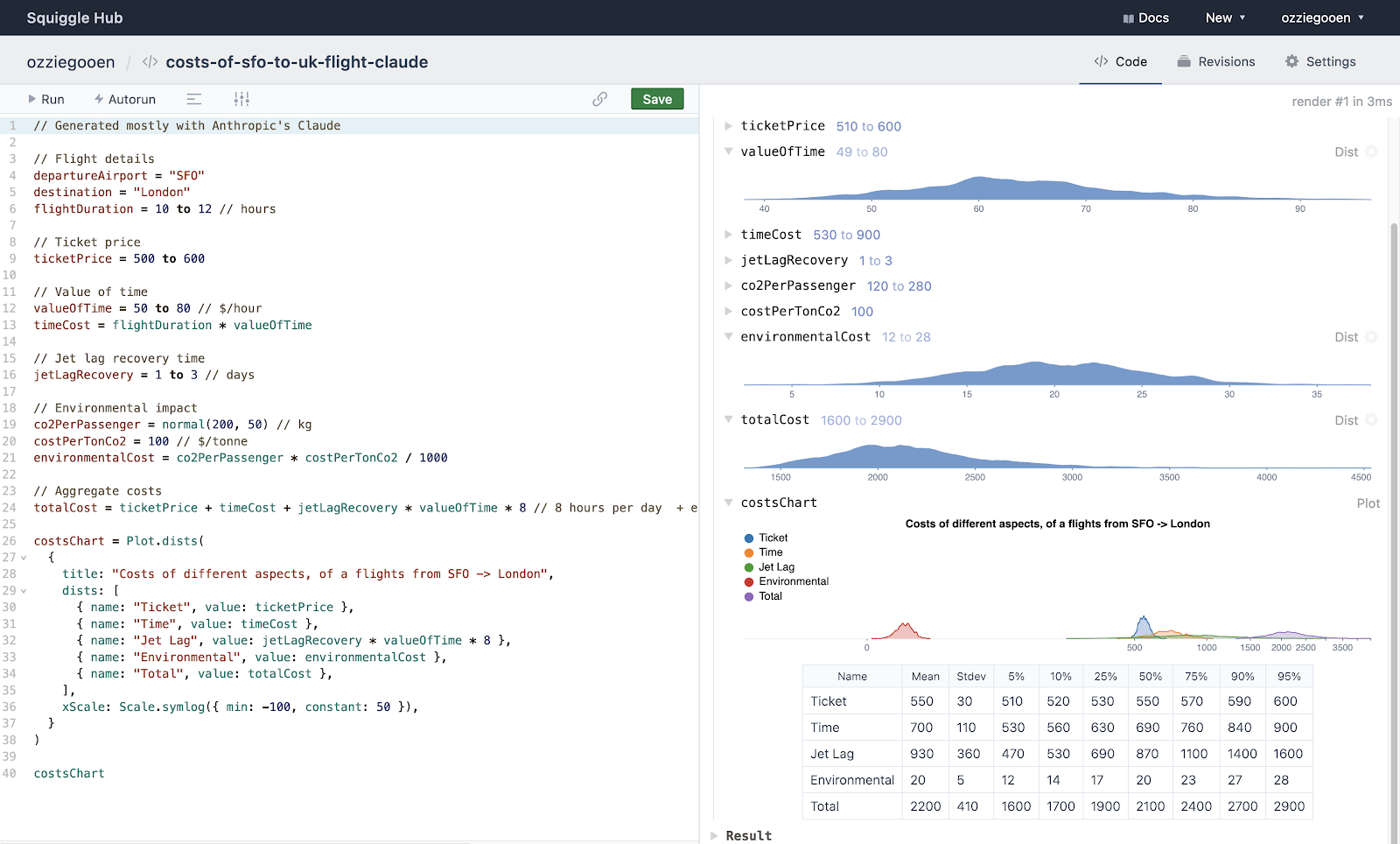

Short summary: The Moscow rationalist community is organized around a local venue called Kocherga. Kocherga hosts 40–50 rationality-adjacent events per month, teaches a few dozen people per year in CFAR-style applied rationality workshops, and has ambitious plans for further growth. We're on the verge of becoming profitable, but we need some funding to stay afloat. We're launching a Patreon page today.

This post is a long overdue report on the state of the LessWrong Russia community and specifically on the Kocherga community space in Moscow. This post is also a call for donations.

The Russian LessWrong community has existed for many years now, but there wasn't much info on lesswrong.com about how we're doing. The last general report by Yuu was posted in March 2013. Alexander230 made a few posts about Fallacymania, which is one product, but it's far from the only interesting thing about LW Russia.

In the following text I'm going to cover:

* History of LW Russia from 2013 to 2015
* History of Kocherga anticafe, the rationalist community space we started in 2015
* The list of Kocherga's successes and failures, as well as some tentative comparisons between Kocherga and Berkeley REACH
* Our current financial situation, which is the main reason I'm taking the time to do this write-up now

Current state of Russian and Moscow community

Here's a short description of LW Russia and LW Moscow in its current state.

Our Russian Slack chat has 1500+ registered members, 150 weekly active members and 50 weekly posting members.

LessWrong.ru has a few hundred posts from the Sequences and other sources (e.g. from SlateStarCodex) translated by volunteers. LessWrong.ru gets 800 daily unique visitors. There's also a wiki with >100 articles and a VK.com page with 13000 followers.

There are regular meetups in Saint-Petersburg (hard to measure, since they have had a few pivots in their approach in the last few years) and in Yekaterinburg.

Our Moscow community hub, Kocherga, which is the main topic of thi

Nope.

We closed the physical space during COVID, then continued for two years online in various forms. After the Ukraine war started I left the country, and the project has been mostly dead since then. A few months ago we finally shut down all remaining chats and archived the website.

Sometimes I think that it'd be nice to do a final write-up/postmortem, but I'm not sure it'll actually happen.