All of bruberu's Comments + Replies

OK, it turns out I hadn't realized that the link provided doesn't go directly to gpt2-chatbot (instead, the front page just compares two random chatbots from a list). After figuring that out, I reran my tests; it reversed lists of 20, 40, and 100 numbers perfectly.

I've retracted my previous comments.

Interesting; maybe it's an artifact of how we formatted our questions? Or perhaps the training samples with larger ranges of numbers were higher quality? You could try it the way I did in this failing example:

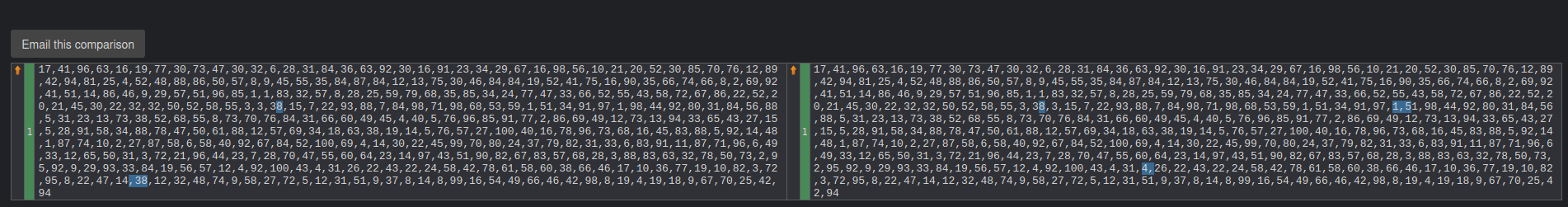

When I tried this same list with your prompt, both responses were incorrect:

Using @Sergii's list reversal benchmark, this model seems to fail at reversing a list of 10 random numbers from 1-10 (generated with random.org) about half the time. By comparison, GPT-4 can supposedly reverse lists of 20 numbers fairly well, and ChatGPT 3.5 seemed to have no trouble, although since ChatGPT 3.5 isn't a base model, this comparison may not be valid.

This does significantly update me towards believing that this is probably not better than GPT-4.
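For anyone who wants to rerun this kind of test, here is a minimal sketch of how one might generate and score a single reversal item. It is not @Sergii's actual benchmark code: the function names, the prompt wording, and the use of Python's `random` module in place of random.org are all assumptions made for illustration.

```python
import random
import re
from typing import List, Optional, Tuple


def make_reversal_task(n: int = 10, low: int = 1, high: int = 10,
                       seed: Optional[int] = None) -> Tuple[str, List[int]]:
    """Build one hypothetical list-reversal item.

    Python's PRNG stands in for random.org here (an assumption).
    """
    rng = random.Random(seed)
    numbers = [rng.randint(low, high) for _ in range(n)]  # inclusive range
    prompt = "Reverse this list: " + ", ".join(map(str, numbers))
    return prompt, numbers


def score_reversal(numbers: List[int], response: str) -> bool:
    """Return True only if the integers in the reply are exactly the reversed list.

    Deliberately strict and naive: any other digits in the reply
    (e.g. "here are the 10 numbers") will make it count as a failure.
    """
    parsed = [int(tok) for tok in re.findall(r"-?\d+", response)]
    return parsed == numbers[::-1]


def accuracy(results: List[bool]) -> float:
    """Fraction of items answered correctly (0.0 for an empty run)."""
    return sum(results) / len(results) if results else 0.0


if __name__ == "__main__":
    prompt, nums = make_reversal_task(seed=0)
    print(prompt)
    # Paste the chatbot's reply here; a correct reversal is simulated below.
    reply = ", ".join(map(str, reversed(nums)))
    print(score_reversal(nums, reply))
```

Averaging `score_reversal` over many freshly generated items gives a rough failure rate like the "about half the time" figure above, though the exact number will depend on prompt wording and how leniently replies are parsed.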

I looked a little into the literature on how much alcohol consumption actually affects rates of oral cancer in populations with ALDH polymorphisms, and this particular study (found via this meta-analysis) seems helpful for modelling how the likelihood of oral cancer increases with alcohol consumption in this group.

The drinking-frequency categories aren't very convenient here, since the study split participants into drinking <=4 days a week, drinking >=5 days a week with less than 46 g of ethanol per week, and drinking >=...

One other interesting quirk of your model of green is that most of the central (and natural) examples of green for humans appear to involve the utility function box adapting to these stimulating experiences, so that the utility function becomes positively correlated with the way latent variables change over the course of an experience. In other words, the utility function gets "attuned" to the result of that experience.

For instance, in the Zadie Smith example from the essay, her experience of greenness involved starting to appreciate the effect tha...

As one more test, it came rather close to correctly reversing 400 numbers:

Given these results, it seems pretty obvious that this is a rather advanced model (although Claude Opus was able to do it perfectly, so it may not be SOTA).

Going back to the original question of where this model came from, I have trouble putting the chance of this necessarily coming from OpenAI above 50%, mainly due to questions about how exactly this was publicized. It seems to be a strange choice to release an unannounced model in Chatbot Arena, especially without any sort of associ...